Eric Ringger - BYU Computer Science


Eric Ringger

Researcher in the NLP group at Microsoft Research in Redmond, Washington

Thursday, March 17, 2005

1170 TMCB, 11:00 AM

Linguistic Feature Engineering for Machine Learning in Natural Language Processing

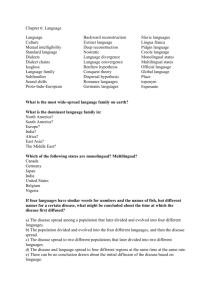

According to various estimates, empirical work in the field of NLP has grown from less than 10% a decade and a half ago to nearly 90% of all published research papers. For some computational linguists, this has been a shock to the system, as ideas from statistical physics and machine learning have replaced theoretical linguistics as the tools of choice. Whereas Chomsky and his cohorts focused on linguistic competence (the abstract knowledge of grammar possessed by a native speaker) to the exclusion of performance (the actual utterances from a native speaker), modern approaches to speech and language processing owe more to information theory in the vein of Shannon and are focused on performance. The old approaches to big problems such as parsing and semantic interpretation of sentences, discourses, and dialog using logic and inference have been largely replaced by a new, more pragmatic approach that tends to attack simpler problems, including part-of-speech tagging, named entity tagging, shallow parsing (or parse chunking), and information extraction, with a focus on handling the common cases with high accuracy, as a good engineer would. Are the computational linguists who used to write rules out of a job?

To address the question, I discuss two research problems in which linguistic and empirical insights have met in hybrid systems with mixed results. The first is the sentence realization problem: given an abstract representation of a sentence, we aim to produce a fluent string. For a transfer-based MT system, this is the third leg of a three-step process (analysis, transfer, generation). In a previous BYU CS colloquium, I presented our approach to producing fluent word order; in this talk, I focus on our empirical approach to an inherently linguistic problem, namely clausal extraposition in German. (This work is part of the Amalgam and MSR-MT systems.)

The second problem is email classification. Our task is to identify email messages containing sentences of interest, in particular, tasks (action items) and promises (commitments). We extract a broad set of features, some superficial and some reflecting deeper linguistic phenomena, as raw material for the classification task. The product is a working solution that helps to address the email overload problem. In this case, we find that all features contribute to better accuracy, although the linguistic features do not help as much as one would hope. (This work is part of the SmartMail / TaskFlags system.)

Biography

Eric Ringger has been a Researcher in the NLP group at Microsoft Research in Redmond, Washington, since 1997. Eric has a B.S. in Mathematics from BYU and M.S. and Ph.D. degrees in Computer Science from the University of Rochester. His research interests include computational linguistics, statistical pattern recognition, machine learning, text classification, language generation, machine translation, and statistical language modeling. Eric served an LDS mission in Munich, Germany ('87-'89).

Donuts will be provided