Introductory Econometrics – Formula Sheet for the Final Exam


You are allowed to use the following formula sheet for the final exam.

1. Multiple linear regression assumptions:

MLR.1: Y = β0 + β1 X1 + . . . + βk Xk + U (model)

MLR.2: The observed data {(Yi , X1i , . . . , Xki ), i = 1, . . . , n} is a random sample from the population.

MLR.3: In the sample, none of the explanatory variables is constant, and there are no perfect linear relationships among the explanatory variables.

MLR.4: (Zero conditional mean) E(U |X1 , . . . , Xk ) = 0

MLR.5: (Homoskedasticity) Var(U | X1 , . . . , Xk ) = Var(U ) = σ²

MLR.6: (Normality) U | X1 , . . . , Xk ∼ N(0, σ²)

2. The OLS estimator and its algebraic properties

The OLS estimator solves

$$\min_{\hat\beta_0, \ldots, \hat\beta_k} \sum_{i=1}^{n} \left( Y_i - \hat\beta_0 - \hat\beta_1 X_{1i} - \hat\beta_2 X_{2i} - \ldots - \hat\beta_k X_{ki} \right)^2$$

Solution: In the general case, no explicit solution was given. If k = 1, i.e. there is only one explanatory variable, then

$$\hat\beta_1 = \frac{\sum_{i=1}^{n} (X_{1i} - \bar X_1)(Y_i - \bar Y)}{\sum_{i=1}^{n} (X_{1i} - \bar X_1)^2} \quad \text{and} \quad \hat\beta_0 = \bar Y - \hat\beta_1 \bar X_1 .$$
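As a quick numerical illustration of the k = 1 formulas, the following sketch computes the slope and intercept in plain Python. The function name `simple_ols` and the five data points are made up for illustration and are not part of the course material.

```python
def simple_ols(x, y):
    """Return (beta0_hat, beta1_hat) for a regression of y on a constant and x."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    # Numerator: sum of cross-deviations of x and y from their sample means.
    s_xy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    # Denominator: sum of squared deviations of x from its sample mean.
    s_xx = sum((xi - x_bar) ** 2 for xi in x)
    beta1_hat = s_xy / s_xx
    beta0_hat = y_bar - beta1_hat * x_bar
    return beta0_hat, beta1_hat


# Hypothetical data, chosen only to keep the arithmetic easy to follow.
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.0, 4.0, 5.0, 4.0, 5.0]
beta0_hat, beta1_hat = simple_ols(x, y)
print(beta0_hat, beta1_hat)  # approximately 2.2 and 0.6
```

Here the sample means are 3 and 4, the numerator is 6 and the denominator is 10, so the slope is 0.6 and the intercept is 4 − 0.6 · 3 = 2.2.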

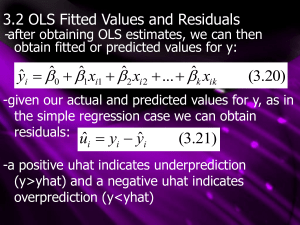

Predicted value for individual i in the sample: Ŷi = β̂0 + β̂1 X1i + . . . + β̂k Xki .

Residual for individual i in the sample: Ûi = Yi − Ŷi .

• $\sum_{i=1}^{n} \hat U_i = 0$

• $\sum_{i=1}^{n} \hat U_i X_{ji} = 0$, j = 1, . . . , k

• estimated regression line goes through (X̄1 , . . . , X̄k , Ȳ )
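The algebraic properties listed above can be checked numerically. The sketch below does so for a simple regression (k = 1) on made-up data; the variable names are illustrative assumptions, and the sums are only zero up to floating-point rounding.

```python
# Hypothetical data for a regression with one explanatory variable.
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.0, 4.0, 5.0, 4.0, 5.0]
n = len(x)
x_bar = sum(x) / n
y_bar = sum(y) / n

# OLS estimates from the k = 1 formulas.
beta1_hat = (sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
             / sum((xi - x_bar) ** 2 for xi in x))
beta0_hat = y_bar - beta1_hat * x_bar

# Predicted values and residuals.
y_hat = [beta0_hat + beta1_hat * xi for xi in x]
u_hat = [yi - yhi for yi, yhi in zip(y, y_hat)]

sum_u = sum(u_hat)                                    # residuals sum to zero
sum_ux = sum(ui * xi for ui, xi in zip(u_hat, x))     # residuals are orthogonal to x
line_at_mean = beta0_hat + beta1_hat * x_bar          # fitted line evaluated at x_bar

print(sum_u, sum_ux, line_at_mean, y_bar)
```

The last two printed numbers coincide, which is the statement that the estimated regression line passes through the point of sample means.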

3. Properties of the log function:

$$100 \, \Delta \log(x) \approx 100 \, \frac{\Delta x}{x} = \% \Delta x$$
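The approximation works well for small changes in x and becomes less accurate for large ones. The sketch below compares the exact percentage change with the log approximation; the helper names and the numbers are illustrative assumptions.

```python
import math


def pct_change_exact(x_old, x_new):
    """Exact percentage change: 100 * (delta x) / x."""
    return 100.0 * (x_new - x_old) / x_old


def pct_change_log(x_old, x_new):
    """Log approximation: 100 * (log(x_new) - log(x_old))."""
    return 100.0 * (math.log(x_new) - math.log(x_old))


# A small change (2%) and a large change (50%), both starting from x = 100.
small = (pct_change_exact(100.0, 102.0), pct_change_log(100.0, 102.0))
large = (pct_change_exact(100.0, 150.0), pct_change_log(100.0, 150.0))
print(small)  # the two numbers are close (about 2.0 and 1.98)
print(large)  # the two numbers differ noticeably (about 50.0 and 40.5)
```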

Predicting Y when the dependent variable is log(Y ):

$$\hat Y_{\text{adjusted}} = e^{\sigma^2 / 2} \, e^{\hat\beta_0 + \hat\beta_1 X_1 + \ldots + \hat\beta_k X_k}$$
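A minimal sketch of the adjusted prediction follows. The function name, the coefficient values and the value of σ² are hypothetical; in applied work σ² is not known, and the sketch assumes that an estimate of it is plugged in.

```python
import math


def predict_level_from_log_model(beta_hat, x_values, sigma2):
    """Predict Y when the regression was run with log(Y) as dependent variable.

    beta_hat = [b0, b1, ..., bk], x_values = [x1, ..., xk].
    sigma2 is the error variance (assumption: an estimate is supplied).
    Returns (naive, adjusted).
    """
    log_y_hat = beta_hat[0] + sum(b * x for b, x in zip(beta_hat[1:], x_values))
    naive = math.exp(log_y_hat)                # exponentiated fitted log value
    adjusted = math.exp(sigma2 / 2.0) * naive  # multiplied by exp(sigma^2 / 2)
    return naive, adjusted


# Hypothetical numbers: log(Y) fitted as 1.0 + 0.5 * X1, evaluated at X1 = 2.
naive, adjusted = predict_level_from_log_model([1.0, 0.5], [2.0], sigma2=0.25)
print(naive, adjusted)
```

Because exp(σ²/2) is larger than 1 whenever σ² > 0, the adjusted prediction always exceeds the naive one.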

4. SST, SSE, SSR, R²

$$SST = \sum_{i=1}^{n} (Y_i - \bar Y)^2$$

$$SSE = \sum_{i=1}^{n} (\hat Y_i - \bar Y)^2$$

$$SSR = \sum_{i=1}^{n} \hat U_i^2$$

$$SST = SSE + SSR$$

$$R^2 = SSE / SST = 1 - SSR / SST$$
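The decomposition can be verified on a small example. The sketch below uses made-up data and a simple regression (k = 1); all variable names are illustrative.

```python
# Hypothetical data for a regression with one explanatory variable.
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.0, 4.0, 5.0, 4.0, 5.0]
n = len(x)
x_bar = sum(x) / n
y_bar = sum(y) / n

# OLS fit (k = 1 formulas).
beta1_hat = (sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
             / sum((xi - x_bar) ** 2 for xi in x))
beta0_hat = y_bar - beta1_hat * x_bar
y_hat = [beta0_hat + beta1_hat * xi for xi in x]

sst = sum((yi - y_bar) ** 2 for yi in y)                # total sum of squares
sse = sum((yhi - y_bar) ** 2 for yhi in y_hat)          # explained sum of squares
ssr = sum((yi - yhi) ** 2 for yi, yhi in zip(y, y_hat)) # residual sum of squares
r2 = sse / sst

print(sst, sse, ssr, r2)  # approximately 6.0, 3.6, 2.4, 0.6
```

Both expressions for R² give the same number here: 3.6/6 = 1 − 2.4/6 = 0.6.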

5. Variance of the OLS estimator, estimated standard error, etc.

For j = 1, . . . , k:

$$\mathrm{Var}(\hat\beta_j) = \frac{\sigma^2}{SST_{X_j} \, (1 - R_j^2)},$$

where $SST_{X_j} = \sum_{i=1}^{n} (X_{ji} - \bar X_j)^2$ and $R_j^2$ is the R-squared from the regression of $X_j$ on all the other X variables. The estimated standard error of $\hat\beta_j$, j = 1, . . . , k, is given by

$$\widehat{se}(\hat\beta_j) = \sqrt{\frac{\hat\sigma^2}{SST_{X_j} \, (1 - R_j^2)}}, \quad \text{where } \hat\sigma^2 = \frac{SSR}{n - k - 1} .$$
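The sketch below turns the standard-error formula into a small function and applies it to a simple regression on made-up data. With a single regressor there are no other X variables, so the example sets R_1² = 0. The function name and the data are illustrative assumptions.

```python
import math


def se_beta_j(ssr, n, k, sst_xj, r2_j):
    """Estimated standard error of beta_j_hat.

    ssr: residual sum of squares, n: sample size, k: number of slope coefficients,
    sst_xj: total variation in X_j, r2_j: R-squared of X_j on the other regressors.
    """
    sigma2_hat = ssr / (n - k - 1)
    return math.sqrt(sigma2_hat / (sst_xj * (1.0 - r2_j)))


# Hypothetical data, simple regression (k = 1).
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.0, 4.0, 5.0, 4.0, 5.0]
n, k = len(x), 1
x_bar = sum(x) / n
y_bar = sum(y) / n
sst_x = sum((xi - x_bar) ** 2 for xi in x)
beta1_hat = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sst_x
beta0_hat = y_bar - beta1_hat * x_bar
ssr = sum((yi - beta0_hat - beta1_hat * xi) ** 2 for xi, yi in zip(x, y))

sigma2_hat = ssr / (n - k - 1)                     # 2.4 / 3, approximately 0.8
se_beta1 = se_beta_j(ssr, n, k, sst_x, r2_j=0.0)   # sqrt(0.8 / 10)
print(sigma2_hat, se_beta1)
```

In a multiple regression, `r2_j` would come from an auxiliary regression of X_j on the remaining regressors; a value close to 1 inflates the standard error.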

6. Simple omitted variables formula:

True model: Y = β0 + β1 X1 + β2 X2 + U

Estimated model: Y = β0 + β1 X1 + V

$$E(\hat\beta_1) = \beta_1 + \beta_2 \, \frac{\mathrm{cov}(X_1, X_2)}{\mathrm{var}(X_1)}$$
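The formula is a statement about the expectation of the estimator. Its sample analogue can be illustrated with a sketch in which the error term is set to zero, so that the slope from the short regression equals β1 + β2 · cov(X1, X2)/var(X1) exactly, with sample moments in place of population moments. All parameter values and data below are made up.

```python
def sample_cov(a, b):
    """Sample covariance (divisor n; the divisor cancels in the ratios below)."""
    n = len(a)
    a_bar = sum(a) / n
    b_bar = sum(b) / n
    return sum((ai - a_bar) * (bi - b_bar) for ai, bi in zip(a, b)) / n


# Hypothetical true model with U = 0 for every observation.
beta0, beta1, beta2 = 1.0, 2.0, 3.0
x1 = [1.0, 2.0, 3.0, 4.0, 5.0]
x2 = [2.0, 1.0, 4.0, 3.0, 6.0]
y = [beta0 + beta1 * a + beta2 * b for a, b in zip(x1, x2)]

# Slope from the short regression of Y on X1 only (X2 omitted).
short_slope = sample_cov(x1, y) / sample_cov(x1, x1)

# Value implied by the omitted variables formula with sample moments.
implied = beta1 + beta2 * sample_cov(x1, x2) / sample_cov(x1, x1)

print(short_slope, implied)  # both approximately 5.0
```

Here cov(X1, X2)/var(X1) equals 1 in the sample, so the short regression attributes the full effect of X2 to X1 and the slope is 2 + 3 = 5 instead of 2.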

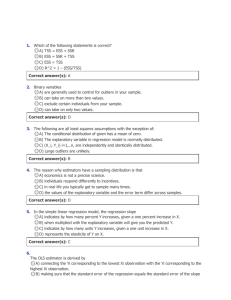

7. Hypothesis testing

a. t-statistic for testing H0 : βj = a

$$\frac{\hat\beta_j - a}{\widehat{se}(\hat\beta_j)} \sim t(n - k - 1) \quad \text{if } H_0 \text{ is true}$$

b. The F-statistic for testing hypotheses involving more than one regression coefficient (“ur” = unrestricted, “r” = restricted, q = # of restrictions in H0 , k = # of slope coefficients in the unrestricted regression)

$$\frac{(SSR_r - SSR_{ur}) / q}{SSR_{ur} / (n - k - 1)} \sim F(q, n - k - 1) \quad \text{if } H_0 \text{ is true}$$
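Both test statistics are simple arithmetic once the regression output is available. The sketch below computes them from hypothetical inputs; the function names and all numbers are assumptions for illustration. Critical values from the t and F distributions are not computed here and would be taken from tables.

```python
def t_statistic(beta_hat_j, a, se_beta_hat_j):
    """t-statistic for H0: beta_j = a."""
    return (beta_hat_j - a) / se_beta_hat_j


def f_statistic(ssr_r, ssr_ur, q, n, k):
    """F-statistic for q restrictions; k = number of slopes in the unrestricted model."""
    return ((ssr_r - ssr_ur) / q) / (ssr_ur / (n - k - 1))


# Hypothetical regression output.
t_value = t_statistic(beta_hat_j=0.6, a=0.0, se_beta_hat_j=0.2)
f_value = f_statistic(ssr_r=120.0, ssr_ur=100.0, q=2, n=53, k=2)
print(t_value)  # approximately 3.0
print(f_value)  # (20 / 2) / (100 / 50) = 5.0
```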

8. Heteroskedasticity

Breusch-Pagan test for heteroskedasticity: Regress the squared residuals on a constant and all explanatory variables; test for joint significance of the explanatory variables.

(F)GLS estimator: Let h(X1 , . . . , Xk ) = Var(U | X1 , . . . , Xk ). Divide the original model by the square root of h and do OLS. If h is unknown, one needs to model and estimate it as well.
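A minimal sketch of the GLS transformation with a known h, for one explanatory variable: every term of Y = β0 + β1 X1 + U is divided by the square root of h, which turns the constant into the regressor 1/√h, and OLS without a constant is run on the transformed variables. The function names, the data and the choice h = X1 are assumptions made purely for illustration.

```python
import math


def ols_two_regressors_no_constant(z0, z1, y):
    """OLS of y on z0 and z1 without a constant, via the 2x2 normal equations."""
    a00 = sum(a * a for a in z0)
    a01 = sum(a * b for a, b in zip(z0, z1))
    a11 = sum(b * b for b in z1)
    c0 = sum(a * yi for a, yi in zip(z0, y))
    c1 = sum(b * yi for b, yi in zip(z1, y))
    det = a00 * a11 - a01 * a01
    return (c0 * a11 - c1 * a01) / det, (a00 * c1 - a01 * c0) / det


def gls_known_h(x, y, h):
    """Return (beta0_hat, beta1_hat) after dividing the model by sqrt(h)."""
    w = [1.0 / math.sqrt(hi) for hi in h]
    z0 = w                                       # transformed constant: 1 / sqrt(h)
    z1 = [wi * xi for wi, xi in zip(w, x)]       # transformed regressor: X / sqrt(h)
    y_star = [wi * yi for wi, yi in zip(w, y)]   # transformed dependent variable
    return ols_two_regressors_no_constant(z0, z1, y_star)


# Hypothetical data; the variance function is assumed to be h(X1) = X1.
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [3.2, 4.7, 7.4, 8.5, 11.6]
beta0_gls, beta1_gls = gls_known_h(x, y, h=x)
print(beta0_gls, beta1_gls)
```

When h has to be estimated first, the same transformation is applied with the fitted values of h in place of the true ones.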

9. Linear probability model

Var(U | X1 , . . . , Xk ) = (β0 + β1 X1 + . . . + βk Xk )(1 − (β0 + β1 X1 + . . . + βk Xk )).
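The variance formula depends on the values of the explanatory variables, so the error term in the linear probability model is heteroskedastic in general. The sketch below evaluates the formula at two hypothetical points; the function name and coefficient values are assumptions for illustration.

```python
def lpm_conditional_variance(beta, x_values):
    """Return (p, p * (1 - p)) with p = b0 + b1 * x1 + ... + bk * xk.

    beta = [b0, b1, ..., bk], x_values = [x1, ..., xk].
    """
    p = beta[0] + sum(b * x for b, x in zip(beta[1:], x_values))
    return p, p * (1.0 - p)


# Hypothetical coefficients: P(Y = 1 | X1) = 0.2 + 0.1 * X1.
p_mid, var_mid = lpm_conditional_variance([0.2, 0.1], [3.0])  # p about 0.5
p_out, var_out = lpm_conditional_variance([0.2, 0.1], [9.0])  # p about 1.1
print(p_mid, var_mid)  # variance about 0.25
print(p_out, var_out)  # variance is negative because p lies outside [0, 1]
```

The second evaluation shows a known limitation: when the linear index leaves the unit interval, the formula no longer yields a valid variance.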
