DEFINITION

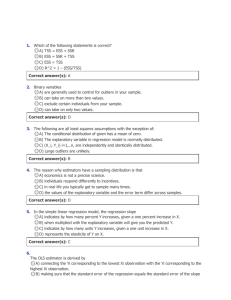

3.2 OLS Fitted Values and Residuals

After obtaining OLS estimates, we can obtain fitted or predicted values for y:

$$\hat{y}_i = \hat{\beta}_0 + \hat{\beta}_1 x_{i1} + \hat{\beta}_2 x_{i2} + \dots + \hat{\beta}_k x_{ik} \tag{3.20}$$

-given our actual and predicted values for y, as in the simple regression case we can obtain residuals:

$$\hat{u}_i = y_i - \hat{y}_i \tag{3.21}$$

-a positive uhat indicates underprediction (y>yhat) and a negative uhat indicates overprediction (y<yhat)

We can extend the single variable case to obtain important properties for fitted values and residuals:

1) The sample average of the residuals is zero, therefore: $\bar{y} = \bar{\hat{y}}$

2) Sample covariance between each independent variable and the OLS residual is zero…

-Therefore the sample covariance between the OLS fitted values and the OLS residual is zero

-Since the fitted values come from our independent variables and OLS estimates

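3) The point $(\bar{x}_1, \bar{x}_2, \dots, \bar{x}_k, \bar{y})$ is always on the OLS regression line:

$$\bar{y} = \hat{\beta}_0 + \hat{\beta}_1 \bar{x}_1 + \hat{\beta}_2 \bar{x}_2 + \dots + \hat{\beta}_k \bar{x}_k$$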

Notes:

These properties come from the FOC’s in (3.13):

-the first FOC says that the sum of residuals is zero and proves (1)

-the rest of the FOC’s imply zero covariance between the independent variables and uhat (2)

-(3) follows from (1)
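The three properties can be checked numerically. The following is a minimal sketch on simulated data; the data-generating numbers, the seed and all variable names are assumptions made only for illustration and are not taken from the notes.

```python
import numpy as np

rng = np.random.default_rng(0)            # arbitrary seed, illustrative data only
n = 200
x1 = rng.normal(size=n)
x2 = 0.5 * x1 + rng.normal(size=n)        # the regressors may be correlated
y = 1.0 + 2.0 * x1 - 1.5 * x2 + rng.normal(size=n)

X = np.column_stack([np.ones(n), x1, x2])           # intercept column plus regressors
beta_hat, *_ = np.linalg.lstsq(X, y, rcond=None)    # OLS estimates
y_hat = X @ beta_hat                                # fitted values, as in (3.20)
u_hat = y - y_hat                                   # residuals, as in (3.21)

# Property (1): residuals average to zero, so mean(y) equals mean(y_hat)
mean_resid = u_hat.mean()

# Property (2): zero sample covariance between each x (and y_hat) and the residuals
cov_x_resid = [np.cov(x, u_hat)[0, 1] for x in (x1, x2)]
cov_fit_resid = np.cov(y_hat, u_hat)[0, 1]

# Property (3): the point of sample means lies on the regression line
point_on_line = beta_hat @ np.array([1.0, x1.mean(), x2.mean()])

print(mean_resid, cov_x_resid, cov_fit_resid, point_on_line - y.mean())
```

All printed quantities should be zero up to floating-point rounding, whatever data are used, because they follow from the first order conditions rather than from the particular sample.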

3.2 “Partialling Out”

In multiple regression analysis, we don’t need formulas to obtain OLS’s estimates of Bj

-However, explicit formulas can give us interesting properties

-In the 2 independent variable case:

$$\hat{\beta}_1 = \frac{\sum_{i=1}^{n} \hat{r}_{i1} y_i}{\sum_{i=1}^{n} \hat{r}_{i1}^2} \tag{3.22}$$

-Where rhat are the residuals from regressing x1 on x2

-ie: the regression:

$$\hat{x}_1 = \hat{\gamma}_0 + \hat{\gamma}_1 x_2$$

-rhat_i1 are the part of x_i1 that are uncorrelated with x_i2

-rhat_i1 is equivalent to x_i1 after x_i2’s effects have been “partialled out”

-thus B1 hat measures x1’s effect on y after x2 has been “partialled out”

-In a regression with k variables, the residuals come from a regression of x1 on ALL other x’s

-in this case B1 hat measures x1’s effect on y after all other x’s have been “partialled out”
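The partialling-out result can be illustrated with a short two-step computation. This is a sketch under assumed simulated data; the helper `ols` and every number below are hypothetical and chosen only for the example.

```python
import numpy as np

def ols(X, y):
    """Return OLS coefficients for a design matrix X that already contains an intercept column."""
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef

rng = np.random.default_rng(1)            # arbitrary seed, illustrative data only
n = 300
x2 = rng.normal(size=n)
x1 = 0.8 * x2 + rng.normal(size=n)        # x1 is correlated with x2
y = 0.5 + 1.2 * x1 + 0.7 * x2 + rng.normal(size=n)

ones = np.ones(n)

# Multiple regression of y on x1 and x2
beta_full = ols(np.column_stack([ones, x1, x2]), y)

# Step 1: regress x1 on x2 (with an intercept) and keep the residuals rhat
Z = np.column_stack([ones, x2])
r_hat = x1 - Z @ ols(Z, x1)

# Step 2: apply formula (3.22) using those residuals
beta1_partial = (r_hat @ y) / (r_hat @ r_hat)

print(beta_full[1], beta1_partial)        # the two numbers should agree
```

The agreement is exact up to rounding: the coefficient on x1 in the multiple regression uses only the variation in x1 that is not explained by x2.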

3.2 Comparing Simple and Multiple Regressions

In 2 special cases, OLS will estimate the same B1 hat for 1 and 2 independent variables

-Write the simple and multiple regressions as:

$$\tilde{y} = \tilde{\beta}_0 + \tilde{\beta}_1 x_1$$

$$\hat{y} = \hat{\beta}_0 + \hat{\beta}_1 x_1 + \hat{\beta}_2 x_2$$

-The relationship between B1 hats becomes:

$$\tilde{\beta}_1 = \hat{\beta}_1 + \hat{\beta}_2 \tilde{\delta}_1 \tag{3.23}$$

-Where delta is the slope coefficient from regressing x2 on x1 (proof in Appendix)

Therefore we have two cases where the B1 hats will be equal:

1) The partial effect of x2 on yhat is zero: $\hat{\beta}_2 = 0$

2) x1 and x2 are uncorrelated in the sample: $\tilde{\delta}_1 = 0$

Although these 2 cases are rare, they do highlight the situations where B1 hats will be similar:

-When B2 hat is small

-There is little correlation between x1 and x2

In the case of K independent variables, B1 hat will be equal to the simple regression case if:

1) OLS coefficients on all other x’s are zero

2) x1 is uncorrelated with all other x’s

-Likewise, small coefficients or little correlation will lead to small differences in B1 hat
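Relationship (3.23) is an algebraic identity, so it can be verified on any sample. The sketch below uses simulated data; the helper `ols`, the seed and the coefficients are assumptions for illustration only. Here `delta_tilde` is taken as the slope from the simple regression of x2 on x1.

```python
import numpy as np

def ols(X, y):
    """Return OLS coefficients for a design matrix X that already contains an intercept column."""
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef

rng = np.random.default_rng(2)            # arbitrary seed, illustrative data only
n = 250
x1 = rng.normal(size=n)
x2 = 0.6 * x1 + rng.normal(size=n)
y = 1.0 + 0.9 * x1 + 0.4 * x2 + rng.normal(size=n)

ones = np.ones(n)
X_simple = np.column_stack([ones, x1])

beta_tilde = ols(X_simple, y)                           # simple regression of y on x1
beta_hat = ols(np.column_stack([ones, x1, x2]), y)      # multiple regression of y on x1, x2
delta_tilde = ols(X_simple, x2)                         # simple regression of x2 on x1

lhs = beta_tilde[1]
rhs = beta_hat[1] + beta_hat[2] * delta_tilde[1]        # right-hand side of (3.23)
print(lhs, rhs)
```

The gap between the simple and multiple regression slopes is the product of B2 hat and the sample slope linking x2 to x1, which is why either factor being zero (or small) makes the two estimates equal (or close).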

3.2 Wedding Example

Assuming decisions in a wedding could be quantified, wedding decisions are regressed on the bride’s opinions to give:

$$\widehat{\text{MDecisions}}_i = 0.7 + 523\,\text{Bride}_i$$

-Adding the groom’s opinions gives:

$$\widehat{\text{MDecisions}}_i = 0.6 + 511\,\text{Bride}_i + 23\,\text{Groom}_i$$

-Since B2 hat is relatively small, B1 hats are similar in both cases

-Although the bride and groom could have similar opinions, it’s the bride’s opinion that often matters in weddings

3.2 Goodness-of-Fit

As in the simple regression, the TOTAL SUM OF SQUARES (SST), the EXPLAINED SUM OF SQUARES (SSE) and the RESIDUAL SUM OF SQUARES (SSR) are defined as:

$$SST = \sum_{i=1}^{n} (y_i - \bar{y})^2 \tag{3.24}$$

$$SSE = \sum_{i=1}^{n} (\hat{y}_i - \bar{y})^2 \tag{3.25}$$

$$SSR = \sum_{i=1}^{n} (y_i - \hat{y}_i)^2 = \sum_{i=1}^{n} \hat{u}_i^2 \tag{3.26}$$

3.2 Sum of Squares

SST still measures the sample variation in y.

SSE still measures the sample variation in yhat (the fitted component).

SSR still measures the sample variation in uhat (the residual component).

Total variation in y is still the sum of total variations in yhat and total variations in uhat:

$$SST = SSE + SSR \tag{3.27}$$
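A short sketch of why this decomposition holds, using only the residual properties from earlier in the section (the residuals sum to zero and have zero sample covariance with the fitted values):

$$
\begin{aligned}
\sum_{i=1}^{n} (y_i - \bar{y})^2
&= \sum_{i=1}^{n} \left[(y_i - \hat{y}_i) + (\hat{y}_i - \bar{y})\right]^2 \\
&= \sum_{i=1}^{n} \hat{u}_i^2 + 2\sum_{i=1}^{n} \hat{u}_i(\hat{y}_i - \bar{y}) + \sum_{i=1}^{n} (\hat{y}_i - \bar{y})^2 \\
&= SSR + 0 + SSE
\end{aligned}
$$

The cross term vanishes because $\sum_{i} \hat{u}_i \hat{y}_i = 0$ and $\sum_{i} \hat{u}_i = 0$.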

3.2 SS’s and R²

If total variation in y is nonzero, we can solve for R²:

$$\frac{SSR}{SST} + \frac{SSE}{SST} = 1$$

$$R^2 = \frac{SSE}{SST} = 1 - \frac{SSR}{SST} \tag{3.28}$$

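R² can also be shown to equal the squared correlation coefficient between the actual y and the fitted yhat: (remember ybar=yhatbar)

$$R^2 = \frac{\left[\sum_{i=1}^{n} (y_i - \bar{y})(\hat{y}_i - \bar{\hat{y}})\right]^2}{\left[\sum_{i=1}^{n} (y_i - \bar{y})^2\right]\left[\sum_{i=1}^{n} (\hat{y}_i - \bar{\hat{y}})^2\right]} \tag{3.29}$$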
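The sums of squares and the equivalent expressions for the R-squared in (3.28) and (3.29) can be computed directly. This is a minimal sketch on simulated data; the seed, sample size and coefficients are assumptions for illustration and have no connection to the examples in these notes.

```python
import numpy as np

rng = np.random.default_rng(3)            # arbitrary seed, illustrative data only
n = 150
x1 = rng.normal(size=n)
x2 = rng.normal(size=n)
y = 0.2 + 0.5 * x1 + 0.3 * x2 + rng.normal(size=n)

X = np.column_stack([np.ones(n), x1, x2])
beta_hat, *_ = np.linalg.lstsq(X, y, rcond=None)
y_hat = X @ beta_hat
u_hat = y - y_hat

sst = np.sum((y - y.mean()) ** 2)         # (3.24)
sse = np.sum((y_hat - y.mean()) ** 2)     # (3.25)
ssr = np.sum(u_hat ** 2)                  # (3.26)

r2_explained = sse / sst                  # first form in (3.28)
r2_residual = 1.0 - ssr / sst             # second form in (3.28)
r2_corr = np.corrcoef(y, y_hat)[0, 1] ** 2    # squared correlation, as in (3.29)

print(sst, sse + ssr)
print(r2_explained, r2_residual, r2_corr)
```

The three R-squared values coincide here because the regression includes an intercept; without one, the decomposition SST = SSE + SSR no longer has to hold.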

3.2 R²

• Notes:

-R² NEVER decreases, and often increases when a variable is added to the regression

-SSR never increases when a variable is added

-adding a useless varying variable will generally increase R²

-R² is a poor way to decide whether to include a variable

-One should ask if a variable has a nonzero effect on y in the population (theory question)

-Somewhat testable in chapter 4
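The claim that R-squared never falls when a regressor is added can be seen by adding a variable that is pure noise. The sketch below is illustrative only: the helper `r_squared`, the seed and the simulated data are assumptions, and the `noise` variable is unrelated to y by construction.

```python
import numpy as np

def r_squared(X, y):
    """R-squared (1 - SSR/SST) from an OLS fit of y on X; X must include an intercept column."""
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ coef
    return 1.0 - (resid @ resid) / np.sum((y - y.mean()) ** 2)

rng = np.random.default_rng(4)            # arbitrary seed, illustrative data only
n = 100
x1 = rng.normal(size=n)
y = 1.0 + 0.5 * x1 + rng.normal(size=n)
noise = rng.normal(size=n)                # a "useless" regressor, independent of y

ones = np.ones(n)
r2_small = r_squared(np.column_stack([ones, x1]), y)
r2_big = r_squared(np.column_stack([ones, x1, noise]), y)

print(r2_small, r2_big)                   # r2_big is at least as large as r2_small
```

OLS could always set the new coefficient to zero and reproduce the smaller model, so the minimized SSR cannot rise. This is why a higher R-squared alone says little about whether the added variable belongs in the model.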

3.2 R² Example

Consider the following equation:

$$\widehat{\text{Gambling Outcome}}_i = 0.2 + 0.05\,\text{Skill}_i + 0.04\,\text{Experience}_i$$

$$N = 176, \quad R^2 = 0.24$$

-Here percentage of gambling winnings or losses is explained by gambling skill and gambling experience

-skill and experience account for 24% of the variation in gambling outcomes

-this may sound low, but a major gambling factor, luck, is immeasurable and has a big impact

-other factors can also have an impact

3.2 R²

• Notes:

-Even if R² is low, it is still possible that OLS estimates are reliable estimators of each variable’s ceteris paribus effect on y

-These variables may not control much of y, but one can analyze how their increase or decrease will affect y

-a low R² simply reflects that variation in y is hard to explain

-that it is difficult to predict individual behaviour – people aren’t as rational as would be convenient

3.2 Regression through the Origin

If common sense or economic theory states that B0 should be zero:

$$\tilde{y} = \tilde{\beta}_1 x_1 + \tilde{\beta}_2 x_2 + \dots + \tilde{\beta}_k x_k \tag{3.30}$$

Here tilde distinguishes from typical OLS

-It is possible in this case that the typical R² (1 - SSR/SST) is negative

-ybar then explains more of the variation in y than the variables do

-(3.29) avoids this, but no common procedure exists

-Note also that these OLS coefficients are biased if B0 is actually nonzero in the population
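A negative R-squared is easy to produce when the intercept is dropped even though the true intercept is far from zero. The following sketch uses simulated data; the intercept of 10, the slope of 0.1 and the seed are assumptions chosen to make the effect obvious.

```python
import numpy as np

rng = np.random.default_rng(5)            # arbitrary seed, illustrative data only
n = 200
x1 = rng.normal(size=n)
y = 10.0 + 0.1 * x1 + rng.normal(size=n)  # the true intercept is far from zero

# Regression through the origin: no column of ones in the design matrix
X_origin = x1.reshape(-1, 1)
beta_tilde, *_ = np.linalg.lstsq(X_origin, y, rcond=None)
y_tilde = X_origin @ beta_tilde

ssr_origin = np.sum((y - y_tilde) ** 2)
sst = np.sum((y - y.mean()) ** 2)

r2_typical = 1.0 - ssr_origin / sst                 # can be negative without an intercept
r2_corr = np.corrcoef(y, y_tilde)[0, 1] ** 2        # squared correlation, always in [0, 1]

print(beta_tilde[0], r2_typical, r2_corr)
```

Here the sample mean of y alone fits better than the line forced through the origin, so SSR exceeds SST and the usual R-squared is negative, while the squared-correlation version stays between zero and one.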

3.3 The Expected Value of the OLS Estimators

-As in the simple regression model, we will look at FOUR assumptions that are needed to prove that multiple regression OLS estimators are unbiased

-these assumptions are more complicated with more independent variables

-remember that these statistical properties have nothing to do with a specific sample, but hold in repeated random sampling

-an individual sample’s regression could still be a poor estimate
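The idea of "repeated random sampling" can be illustrated with a small Monte Carlo experiment. This is only a sketch: the true parameter values, the sample size, the number of replications and the way the errors are drawn (independently of the regressors) are all assumptions of the simulation, not something stated in these notes.

```python
import numpy as np

rng = np.random.default_rng(6)            # arbitrary seed, illustrative data only
beta_true = np.array([1.0, 2.0, -0.5])    # assumed population parameters
n, reps = 100, 2000

estimates = np.empty((reps, beta_true.size))
for r in range(reps):
    # Draw a fresh random sample from the same population model each time
    x1 = rng.normal(size=n)
    x2 = 0.5 * x1 + rng.normal(size=n)
    u = rng.normal(size=n)                # errors drawn independently of x1 and x2
    X = np.column_stack([np.ones(n), x1, x2])
    y = X @ beta_true + u
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    estimates[r] = coef

avg_estimate = estimates.mean(axis=0)     # close to beta_true across samples
spread = estimates.std(axis=0)            # any single sample can still miss
worst_miss = np.abs(estimates - beta_true).max(axis=0)

print(avg_estimate, spread, worst_miss)
```

The average of the estimates across samples sits close to the assumed true values, while `worst_miss` shows that an individual sample's estimate can still be noticeably off.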

Assumption MLR.1

(Linear in Parameters)

The model in the population can be written as:

$$y = \beta_0 + \beta_1 x_1 + \beta_2 x_2 + \dots + \beta_k x_k + u \tag{3.31}$$

Where B0, B1, …, Bk are the unknown parameters (constants) of interest and u is an unobservable random error or disturbance term

(Note: MLR stands for multiple linear regression)

Assumption MLR.1 Notes

(Linear in Parameters)

-(3.31) is also called the POPULATION MODEL or the TRUE MODEL

-as our actual estimated model may differ from (3.31)

-the population model is linear in the parameters (B’s)

-since the variables can be non-linear (ie: squares and logs), this model is very flexible

Assumption MLR.2

(Random Sampling)

We have a random sample of n observations, {(x_i1, x_i2, …, x_ik, y_i): i = 1, 2, …, n}, following the population model in Assumption MLR.1.

Assumption MLR.2 Notes

(Random Sampling)

Combining MLR.1 with MLR.2 gives us:

$$y_i = \beta_0 + \beta_1 x_{i1} + \beta_2 x_{i2} + \dots + \beta_k x_{ik} + u_i \tag{3.32}$$

Where u_i contains the unobserved factors that affect y_i

Where Bk hat is an estimator of Bk.

Ie:

$$\log(\text{utility}_i) = \beta_0 + \beta_1 \log(\text{income}_i) + \beta_2 \text{Scrubs}_i + \beta_3 \text{Scrubs}_i^2 + u_i$$

We’ve already seen that residuals average out to zero and sample correlation between independent variables and residuals is zero

-our next assumption makes OLS well defined

Assumption MLR.3

(No Perfect Collinearity)

In the sample (and therefore in the population), none of the independent variables is constant, and there are no exact linear relationships among the independent variables.

Assumption MLR.3 Notes

(No Perfect Collinearity)

-MLR.3 is more complicated than its simple regression counterpart

-there are now more relationships between more independent variables

-if an independent variable is an exact linear combination of other independent variables, PERFECT COLLINEARITY exists

-some collinearity, or correlation between the independent variables, is expected and allowed, as long as it’s not perfect
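The difference between perfect and merely high collinearity shows up in the rank of the design matrix. The sketch below uses simulated regressors; the variable names, the seed and the particular linear combination are assumptions for illustration.

```python
import numpy as np

rng = np.random.default_rng(7)            # arbitrary seed, illustrative data only
n = 50
x1 = rng.normal(size=n)
x2 = rng.normal(size=n)

x3_perfect = 2.0 * x1 - 3.0 * x2                      # exact linear combination of x1 and x2
x3_noisy = x3_perfect + 0.1 * rng.normal(size=n)      # highly, but not perfectly, collinear

ones = np.ones(n)
X_perfect = np.column_stack([ones, x1, x2, x3_perfect])
X_noisy = np.column_stack([ones, x1, x2, x3_noisy])

# With perfect collinearity the 4 columns span only 3 dimensions, so the OLS
# first order conditions do not have a unique solution (MLR.3 fails)
rank_perfect = np.linalg.matrix_rank(X_perfect)
rank_noisy = np.linalg.matrix_rank(X_noisy)

print(rank_perfect, rank_noisy)
```

A design matrix with full column rank is what makes the OLS estimates well defined; strong but imperfect correlation keeps full rank and so does not violate MLR.3.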