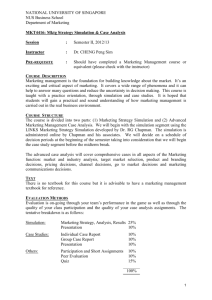



DEVELOPMENT OF A PROCEDURE FOR MANUFACTURING MODELLING AND SIMULATION

Emanuele Nubile¹, Eamonn Ambrose¹ and Andy Mackarel²

¹ National Institute of Technology Management, University College Dublin, Blackrock, Co. Dublin.
² Lucent Technologies, Bell Labs Innovations, Blanchardstown Industrial Park, Co. Dublin.

ABSTRACT

Simulation is a powerful tool that allows designers to imagine new systems and to both quantify and observe their behaviour. Whether the system is a production line, an operating room or an emergency-response system, simulation can be used to study and compare alternative designs or to troubleshoot existing systems. With simulation models, we can explore how an existing system might perform if altered, or how a new system might behave before a prototype is even completed, thus saving on costs and lead times. Modelling and simulation are emerging as key technologies to support manufacturing in the 21st century. However, there are differing views on how best to develop, validate and use simulation models in practice. Most development procedures tend to be linear and prescriptive by nature. This paper attempts to develop a more emergent and iterative procedure that better reflects the reality of a simulation development exercise. It describes a case study of a telecommunications manufacturing operation where a simulation package was used to address an optimisation problem. The study suggests that simulation models can be valuable not just in helping to solve specific operational problems, but also as a trigger for more innovative thinking about alternative problems, models and uses for simulation tools. This study is the first in a series of case studies intended to gain a better understanding of this contingent process, with a view to developing a more flexible development procedure for simulation modelling.

KEYWORDS: Manufacturing, simulation, modelling

1. INTRODUCTION

Process modelling and simulation are commonly used tools in manufacturing process improvement and, to a lesser extent, in the supply chain field. While their application to specific process problems is well understood, their use as a design tool is more problematic. In this paper we explore the actual model development process to gain insight into how simulation models can be extended to aid the complete redesign of a supply chain.

The telecommunications industry was chosen because of its high rate of new product development and its short product life cycles. There is limited value in simulating and optimising a hierarchy of manufacturing processes unique to a particular product that might be out of date before the optimisation is complete. The challenge is to use the simulation tool more organically, to build an understanding of the complete supply chain and to support the design of future generations of products.

We carried out a case study at Lucent Technologies, Blanchardstown, which is the supply management center for a range of telecommunications products. The study involved developing a simulation of part of a manufacturing process, and then exploring with Lucent personnel at various levels of the organization how the model might be used as a supply chain development tool. The focus of this paper is not the simulation model itself, but rather the model development process and how it interfaces with Lucent personnel.

2. LITERATURE REVIEW

Process modeling and simulation are techniques that help companies gain a better understanding of the behaviors of their manufacturing systems and processes, and therefore support decision making. Process modeling gives management a static, structural approach to business improvement: it provides a holistic perspective on how the business operates and a means of documenting the business processes.
Process simulation allows management to study the dynamics of the business and to consider the effects of changes without risk. In treating process modeling and simulation as processes in themselves, the literature generally agrees that a formal procedure is desirable. A procedure of between four and twelve steps is typically recommended, and following a formal procedure is seen as contributing to success. Authors differ, however, on which steps matter most. Banks [1] presented a 12-step procedure in which verification and validation of the model is the most important element. The goal of this step is a model that is accurate when used to predict the performance of the real-world system it represents, or to predict the difference in performance between two scenarios or two model configurations. Shannon [2] presents a similar 12-step procedure, but places added stress on elements such as experimental design and the interpretation of results. Shannon also cites the "40-20-40 Rule": 40 percent of the effort and time in a project should be devoted to understanding the problem, goals and boundaries and to collecting data, 20 percent to formulating the model in an appropriate simulation language, and the remaining 40 percent to verification, validation and implementation. In this study we used a procedure combining elements of both Banks and Shannon.

While much literature exists on procedures for developing simulation models, less attention has been given to the limitations of simulation and to problems with its use. Greasley [3] identifies the cost of building sophisticated models and the risk of over-emphasising modelling and simulation. He also notes that simulation is best suited to dynamic systems that do reach equilibrium, so the stability of the process needs to be considered before simulation is used.
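As a rough illustration, the 40-20-40 rule can be applied directly to a project budget. The following is a minimal Python sketch, not part of the original study: the function name `allocate_effort` and the 25-working-day budget are hypothetical, and the shares are simply the percentages quoted above.

```python
# Shares taken from the 40-20-40 rule cited by Shannon [2].
SHARES = {
    "problem, goals, boundaries and data": 0.40,
    "model formulation": 0.20,
    "verification, validation and implementation": 0.40,
}


def allocate_effort(total_days: float) -> dict[str, float]:
    """Split a project's effort across the three 40-20-40 phases."""
    return {phase: total_days * share for phase, share in SHARES.items()}


# Hypothetical budget of 25 working days.
for phase, days in allocate_effort(25).items():
    print(f"{phase}: {days:.1f} days")  # prints 10.0, 5.0 and 10.0 days
```

The point the rule makes is visible in the output: coding the model is the smallest of the three blocks of work.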
Citing hardware and software limitations, Barber [4] recommends ‘large-scale static business models with selective small-scale dynamic process models for manufacturing enterprises’. In discussing the benefits of business process simulation, Greasley [3] mentions the ability to incorporate variability and interdependence, and the scope for designing new business processes without the cost of establishing a pilot process. He also cites the importance of the visual animated display as a communication tool that facilitates discussion and the development of new ideas.

3. CASE STUDY

3.1 Background

Lucent Technologies designs and delivers the systems, services and software that drive next-generation communications networks. Lucent's customer base includes communications service providers, governments and enterprises worldwide (http://www.lucent.com).

The product chosen for analysis is a low-cost Add-Drop Multiplexor with which an STM-1 (155 Mb/s interface) or STM-4 (622 Mb/s interface) device can cost-effectively link small and medium-sized customers to access networks. It allows Ethernet and Fast Ethernet transport services to be added to existing telecommunications infrastructure. The product is sold in a series of optical specifications, depending on the component mix assembled on a single circuit board. Several application variants can be loaded onto the unit, and the interaction of hardware and software is extensively tested to prove that the unit conforms to its full specification. Parametric results are measured and stored as a full test history for each unit. All test steps are tracked in a predefined order using a forced-routing data collection system. As testing is a major cost for this low-margin product, every effort is made to maximise tester utilisation and to reduce test and rework costs, while still demonstrating that the product is monitored and checked to the highest possible specifications.
The complete process for manufacturing the main circuit board assembly consists of solder paste application, component placement, soldering, in-line inspection and testing operations. If a board fails the in-line, in-circuit or functional tests, it goes to a rework station for detailed off-line diagnosis, debugging and rework. Each component and connection on a circuit board is a potential defect site, and the types and causes of defects are varied. For instance, a board might malfunction because of one or more defective components, placement of the wrong component, a defect in the printed circuit board's internal wiring, or an out-of-control manufacturing process causing, for example, poor soldering or component misalignment.

We decided to focus on the functional test step, as it is seen as the capacity bottleneck of the process. The study focussed on how to optimise this step by adjusting the resources available within it: the functional test operator and test set, the reworker, and the debug technician and debug test set.

3.2 Simulation Model Building Procedure

The following procedure was developed based primarily on the steps proposed by Shannon [2] and Banks [1]:

1. Problem Definition. Clearly defining the goals of the study so that we know its purpose, i.e. why are we studying this problem and what questions do we hope to answer?
2. Project Planning. Making sure that we have sufficient and appropriate personnel, management support, and computer hardware and software resources to do the job.
3. System Definition. Determining the boundaries and restrictions to be used in defining the system (or process), and investigating how the system works.
4. Conceptual Model Formulation. Developing a preliminary model, either graphically (e.g. a block diagram or process flow chart) or in pseudo-code, to define the components, descriptive variables and interactions (logic) that constitute the system.
5. Preliminary Experimental Design. Selecting the factors to be varied and the levels of those factors to be investigated, i.e. what data need to be gathered from the model, in what form, and to what extent. Agreeing the required outputs of the experiment.
6. Input Data Preparation. Identifying and collecting the input data needed by the model. Defining the data sources and standardising the formatting.
7. Model Translation. Formulating the model in an appropriate simulation language and coding the data.
8. Verification. Checking the simulation model as a reflection of the conceptual model. Does the simulation correctly represent the data inputs and outputs?
9. Validation. Providing assurance that the conceptual model is an accurate representation of the real system. Can the model be substituted for the real system for the purposes of experimentation?
10. Final Experimental Design. Designing an experiment that will yield the desired information, and determining how each of the test runs specified in the experimental design is to be executed.
11. Experimentation. Executing the simulation to generate the desired data and to perform sensitivity analysis.
12. Analysis and Interpretation. Drawing inferences from the data generated by the simulation runs.
13. Implementation and Documentation. Reporting the results, putting them to use, recording the findings, and documenting the model and its use.

In this study we excluded the final steps of experimentation, analysis and implementation (Steps 11 to 13), as the purpose of our research was to examine the interaction between model users and the simulation model during the development procedure. Our case study starts by defining and formulating the problem (Step 1).
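Steps 5 and 10 both involve choosing factors and their levels. One common way to enumerate the resulting scenarios is a full-factorial design, sketched below in Python. The factors are the functional test resources named above (test set, reworker, debug technician); the levels of one or two units each are hypothetical, since the paper does not report them, and a full factorial is only one of several possible designs.

```python
from itertools import product

# Hypothetical factor levels: the paper names these resources, not their levels.
FACTORS = {
    "test_sets": [1, 2],
    "reworkers": [1, 2],
    "debug_technicians": [1, 2],
}


def full_factorial(levels: dict) -> list[dict]:
    """Return one scenario (a dict of factor -> level) per level combination."""
    names = list(levels)
    return [dict(zip(names, combo)) for combo in product(*levels.values())]


scenarios = full_factorial(FACTORS)
print(len(scenarios))  # 8 scenarios (2 x 2 x 2)
for scenario in scenarios:
    print(scenario)
```

Each scenario would then become one configuration to run in Step 11; the number of scenarios grows multiplicatively with every factor added, which is one reason to agree the factors early.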
Unlike most simulation problems, the intent in this case study was to avoid defining a specific problem at an early stage. While the initial intent was to explore all aspects of the functional test bottleneck, it was not clear at this early stage whether the necessary operational data would be available, so we proceeded with a loose problem definition: ‘to examine the utilisation of resources within functional test subject to using only readily available data’. The resources available were the Lucent Test Engineering Manager and the process analyst, hence the restriction to readily available data. The timetable was to produce a working simulation within 5 weeks (Step 2).

The nature of this particular project was quite broad: to develop a model of the bottleneck process step without a specific problem in mind. Steps 3, 4 and 5 were therefore emergent, and the main driver was Step 6, the availability of data. However, the iterative way in which the system definition and the conceptual model evolved was, in our view, typical of most model development procedures. The final conceptual model (Step 4) is shown in Figure 1. It could not be formalised until the data had been collected (Step 6), so that we could be sure that readily available data would support it.

Figure 1: Conceptual Model (simulation model logic: process flows, failure paths, priority rules; input: resource capacity, failure rates, production loading data; output: resource utilization, throughput times and costs; other labelled elements: failure modes, repair times, failure profiles, resource costs)

We were now ready to translate the model into an appropriate simulation language (Step 7). Model translation took relatively little time because the input data were accurate and consistent. Once we had a working model, we moved on to verifying and then validating it (Steps 8 and 9).
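The paper does not name the simulation package or publish the model, so the following is only an illustration of what Step 7 might produce for the functional test area: a small discrete-event simulation in plain Python, written against the logic, inputs and outputs of Figure 1. Every modelling choice here is a hypothetical assumption: each station (functional test, debug, rework) is a single FIFO server; service and inter-arrival times are exponential with made-up means; a failed board goes to debug, then rework, then back to functional test; and each test fails independently with a fixed probability.

```python
import heapq
import random
from collections import deque
from dataclasses import dataclass


@dataclass
class Params:
    # Hypothetical inputs: none of these values come from the Lucent study.
    n_boards: int = 200
    interarrival_mean: float = 10.0  # minutes between board arrivals
    test_mean: float = 6.0           # functional test operator + test set
    debug_mean: float = 15.0         # debug technician + debug test set
    rework_mean: float = 12.0        # reworker
    fail_prob: float = 0.2           # chance that one functional test fails
    seed: int = 1


def simulate(p: Params) -> dict:
    """Event-driven sketch of functional test with a debug/rework loop."""
    rng = random.Random(p.seed)
    means = {"test": p.test_mean, "debug": p.debug_mean, "rework": p.rework_mean}
    queue = {s: deque() for s in means}   # FIFO queue per station
    busy = {s: False for s in means}
    busy_time = {s: 0.0 for s in means}
    events = []                           # heap of (time, seq, kind, station, board)
    seq = 0

    def schedule(t, kind, station, board):
        nonlocal seq
        heapq.heappush(events, (t, seq, kind, station, board))
        seq += 1

    def try_start(t, station):
        # Single server per station: start the next waiting board if idle.
        if not busy[station] and queue[station]:
            board = queue[station].popleft()
            busy[station] = True
            duration = rng.expovariate(1.0 / means[station])
            busy_time[station] += duration
            schedule(t + duration, "finish", station, board)

    t = 0.0
    for board in range(p.n_boards):       # pre-schedule external arrivals
        t += rng.expovariate(1.0 / p.interarrival_mean)
        schedule(t, "arrive", "test", board)

    first_arrival, finished = {}, {}
    tests_run = 0
    now = 0.0
    while events:
        now, _, kind, station, board = heapq.heappop(events)
        if kind == "arrive":
            first_arrival.setdefault(board, now)
            queue[station].append(board)
            try_start(now, station)
            continue
        busy[station] = False             # kind == "finish"
        if station == "test":
            tests_run += 1
            if rng.random() < p.fail_prob:  # failure path: debug, then rework
                schedule(now, "arrive", "debug", board)
            else:
                finished[board] = now
        elif station == "debug":
            schedule(now, "arrive", "rework", board)
        else:                             # reworked boards are retested
            schedule(now, "arrive", "test", board)
        try_start(now, station)

    cycle = [finished[b] - first_arrival[b] for b in finished]
    return {
        "utilisation": {s: busy_time[s] / now for s in means},
        "mean_cycle_time": sum(cycle) / len(cycle),
        "boards_finished": len(finished),
        "tests_per_board": tests_run / len(finished),
    }


result = simulate(Params())
print(result["utilisation"], round(result["mean_cycle_time"], 1))
```

Setting `fail_prob=0.0` gives a simple check in the spirit of Step 8: every board should then be tested exactly once and the debug station should stay idle. Validation (Step 9) would instead compare outputs such as utilisation and cycle time against the real line.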
Verification was carried out initially by the analyst, to ensure that the model accurately reflected the data supplied and that it generated the outputs required by the conceptual model. Two separate meetings were then set up, one with the Test Engineering Manager and one with a wider group of users, to assess the impact of the simulation on the users during the validation step.

3.3 Validation Meeting

The first meeting was with the Test Engineering Manager, who had participated in the development of the conceptual model. Allowing for the restricted data available, the model was accepted as broadly valid. Interestingly, although the Test Engineer was fully aware of the model's boundaries and limitations, he immediately saw a number of ways to extend its scope and application, ideas that had not arisen in the earlier system definition phase. Seeing the model triggered thoughts about how it might be made more realistic by extending the permutations, the number of inputs and the complexity of the model itself. It was difficult to restrict this discussion to purely validation issues, as the Test Engineer was immediately thinking in terms of further experimental possibilities. Modifications were made to the model before moving to the next stage (back to an iteration of Steps 3 to 6, followed by Step 7 again).

3.4 Experiment Design Meeting

A varied group of interested users was selected for the second meeting. Their roles were:
• Senior Research in Optoelectronics and Value Chain Analysis
• Test Engineering Manager for Remote Access Data Switching
• Manufacturing Process/Product Engineer for the AM1 Product
• Test Engineer for the AM1 product family
• Lucent Reliability Engineer

This second meeting did not fit neatly into the procedure, as it was partly a validation exercise and partly an experimental design meeting, intended to gather suggestions on how experiments might be designed around such models. Effectively, we had moved on to Step 10.
Following a basic presentation of the simulation model, we discussed how the modelling process could be applied at various levels: production, product design, outsourcing decisions and supply chain strategy. However, the audience held a wide variety of perspectives, and each user's own perspective dominated his or her horizon for imagining applications of the model. Most saw the simulation model as a possible tool for optimising manufacture or for assessing outsourcing options, rather than seeing the potential to simulate their own management processes.

4. DISCUSSION

During the development of this simulation model, we identified three distinct phases:
• Steps 3, 4, 5 and 6, which were not simply sequential but concurrent: information flows between analyst and client crossed the boundaries of system definition, model formulation and experimental design. This was by far the most resource-intensive part of the project. In addition, the steps were not linear but iterative, as the inability to identify or collect data often required continual re-assessment of the basic system design. The input data step acted as a stage gate that could not be passed until all previous steps were successfully aligned.
• Steps 7 and 8, which were well-defined steps involving only the analyst. Careful preparation in the previous phase ensured that this phase did not result in iterations back to earlier steps.
• Steps 9 and 10, where the dynamic model was demonstrated to the system users. Here we found that the existing procedures from the literature did not effectively capture the emergent nature of the process, and hence failed to identify the potential value in these steps.

The impact of the animation varied among users and did not follow any kind of linear procedure. Instead, suggestions were more random, depending on the perspective of the Lucent personnel involved. We modified the simulation procedure map proposed by Banks [1] in an attempt to capture these alternative paths.
These included successive iterations from Steps 4, 5 and 6 back to Step 3; iterations from Steps 9 and 10 back to Step 1; and alternative paths for further problems and models flowing from Step 10.

Figure 2: Proposed Simulation Procedure Map (Steps 1 to 13 as listed in Section 3.2, with Yes/No decision points, a revision loop, and a branch to "Alternative problems, models and uses for the simulation process")

5. CONCLUSIONS

This study suggests that a linear approach to the modelling/simulation process is unrealistic. Problem definition will always be affected by data availability, so iterations will have to occur, and allowances should be made for them when allocating time and resources to a simulation project. More importantly, validation and verification of the dynamic simulation model will lead to unplanned changes to the project scope and even to the core problem definition. A procedure is needed that allows for this more organic, contingent process from the outset, rather than attempting to define and fix the scope early on.

Our findings suggest that the real value of modelling, and particularly of simulation, lies in the way that exposing process experts to the dynamic simulation changes their perception of the problem definition. A restrictive problem definition early on would ‘kill off’ this organic process, undermining the potential value of the whole simulation project. As modelling and simulation work continues on the Lucent supply chain, we will continue these case studies to gain a better understanding of this contingent process, with a view to developing a more flexible development procedure for simulation modelling.
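The modified procedure map of Figure 2 can be represented as a directed graph in which each step points to the steps that may follow it. The Python sketch below is illustrative only: it encodes just the paths listed in the text (the linear sequence of Steps 1 to 13, the loops back to Steps 3 and 1, and the branch from Step 10 to alternative problems) and does not attempt to reproduce every Yes/No branch of the figure. A depth-first search then shows that the iterative map contains cycles, whereas the purely linear procedure does not.

```python
# Step numbers and names follow the 13-step procedure in Section 3.2.
STEPS = {
    1: "Problem Definition", 2: "Project Planning", 3: "System Definition",
    4: "Conceptual Model Formulation", 5: "Preliminary Experimental Design",
    6: "Input Data Preparation", 7: "Model Translation", 8: "Verification",
    9: "Validation", 10: "Final Experimental Design", 11: "Experimentation",
    12: "Analysis and Interpretation", 13: "Implementation and Documentation",
}
ALTERNATIVES = "Alternative problems, models and uses"


def procedure_map(iterative: bool = True) -> dict:
    """Return the procedure as an adjacency map {step: set of next steps}."""
    edges = {step: set() for step in STEPS}
    for step in range(1, 13):
        edges[step].add(step + 1)        # linear backbone 1 -> 2 -> ... -> 13
    if iterative:
        for step in (4, 5, 6):
            edges[step].add(3)           # back to System Definition
        for step in (9, 10):
            edges[step].add(1)           # back to Problem Definition
        edges[10].add(ALTERNATIVES)      # new problems and models branch off
        edges[ALTERNATIVES] = set()
    return edges


def has_cycle(edges: dict) -> bool:
    """Depth-first search: reaching a node still on the current path means a cycle."""
    state = {node: "new" for node in edges}

    def visit(node) -> bool:
        state[node] = "active"
        for nxt in edges[node]:
            if state[nxt] == "active":
                return True
            if state[nxt] == "new" and visit(nxt):
                return True
        state[node] = "done"
        return False

    return any(state[n] == "new" and visit(n) for n in edges)


print(has_cycle(procedure_map(iterative=False)))  # False: purely linear procedure
print(has_cycle(procedure_map()))                 # True: iterations create loops
```

Representing the map this way makes the conclusion above concrete: once the feedback paths are added, the procedure is no longer a sequence but a graph with loops, and project plans need to budget for passes around them.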
ACKNOWLEDGEMENTS

The authors would like to express their gratitude for the support given by Lucent Technologies/Bell Labs and the National Institute of Technology Management, UCD, throughout the research project.

REFERENCES

[1] J. Banks, Introduction to Simulation, in Proceedings of the 1999 Winter Simulation Conference, Phoenix, Arizona, December 5–8, www.informs-cs.org/wscpapers.html.
[2] R. E. Shannon, Introduction to the Art and Science of Simulation, in Proceedings of the 1998 Winter Simulation Conference, Washington, D.C., December 13–16, www.informs-cs.org/wscpapers.html.
[3] A. Greasley, Using Business-Process Simulation within a Business Process Reengineering Approach, Business Process Management Journal, Vol. 9 (2003), No. 4, 408–420.
[4] K. D. Barber, Business-process modeling and simulation for manufacturing management, Business Process Management Journal, Vol. 9 (2003), No. 4, 527–542.