Horizon Conference Paper


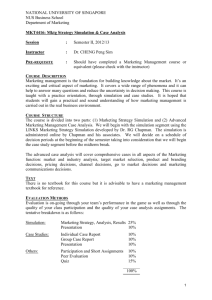

Horizon: A System-of-Systems Simulation Framework

Cory M. O’Connor¹ and Eric A. Mehiel²
California Polytechnic State University, San Luis Obispo, CA, 93407

The analysis of system-level requirements is often difficult due to the convoluted nature of subsystem interactions and the interaction of the system with environmental constraints. Validating system-level use cases impacts subsystem design, and the converse is also true: subsystem design affects system-level performance. The Horizon Simulation Framework has been developed to help the systems engineer better understand the role of subsystems, environment and Concept of Operations in system design iteration and in system-level requirements validation and verification. The Horizon Simulation Framework provides an extensible and well-defined modeling capability, together with a parameterized search algorithm, to generate operational schedules of systems-of-systems. While many system simulation tools rely on Multi-Disciplinary Optimization techniques to design an optimal system, the Horizon Framework focuses on a different part of the systems engineering problem: does the current design meet system-level requirements that are based on a use case and cannot be directly verified by analysis? Programmed in C++, the Horizon Framework can answer this question and provide the systems engineer with all relevant system and subsystem state data throughout the simulation. Subsystem modeling and the ability to retrieve subsystem state data are not generally components of geometric-access-based simulation tools. To simulate the state of the system over the time frame of the simulation, the Horizon Framework uses an exhaustive search method, which employs pruning techniques to accelerate the algorithm. The algorithm relies on the system indicating whether or not it can perform the requested task at the requested time.
Therefore, the scheduling algorithm does not have, or need, insight into the particulars of the system, which broadens the range of systems the framework can model. The Horizon Framework is currently in the final stages of testing and development. This paper covers the conceptual architecture of the software, the scheduling methodology employed by the framework, and the modeling assumptions and philosophy inherent in the current framework design. This paper also presents an analysis of the sensitivity of simulation time to the various scheduling algorithm parameters and to model complexity. Finally, the preliminary results of applying the Horizon Framework to the conceptual design of a time-sensitive Earth-imaging system are presented.

¹ Student, California Polytechnic State University, San Luis Obispo, CA, 93407, Student Member.
² Assistant Professor, California Polytechnic State University, San Luis Obispo, CA, 93407, Member.

American Institute of Aeronautics and Astronautics

I. Introduction

The precise application of Systems Engineering principles to the Space System Design and Development process is critical to the success of a program. The goals of systems engineering are simple: reduced cost, reduced schedule, increased reliability and greater satisfaction of system requirements. Many tools and methodologies exist that implement systems engineering principles. From configuration and documentation management tools and requirements tracing and decomposition databases to process management and program organization methodologies, there are as many tools as there are interpretations of the core systems engineering concepts.

The full lifecycle of any program involves many stages, as outlined in most books on systems engineering. More specifically, several texts outline and discuss the principles of systems engineering applied to the aerospace industry, and several books on spacecraft design rely heavily on systems engineering principles.
For example, see Larson and Wertz’s classic text[i], Charles Brown[ii], or Vincent Pisacane[iii]. During the initial design phase (Phase A), the design team must turn their attention to requirements development, flow and decomposition. Hence, most of the work and analysis during Phase A tends to focus on the system Concept of Operations (CONOPS) and system-level use cases. During these initial design phases, system simulation is the most worthwhile method for system-level requirements development and verification. The need for a modular system simulation tool for use in the initial design phase was the motivation for the development of the Horizon Simulation Framework.

The Cal Poly Space Technologies and Applied Research laboratory (CPSTAR) is a multidisciplinary group of engineering students and faculty at the California Polytechnic State University, San Luis Obispo, dedicated to the application of systems engineering principles to space technologies and space systems. The CPSTAR team, with support from Cutting Edge Communications, LLC, developed the initial Horizon Simulation Framework during the summer of 2006.

A. The System Simulation Problem

There are several ways to approach the problem of system-level requirements verification via system simulation. One method involves the Multi-Disciplinary Design or Optimization (MDD/MDO) approach. MDD and MDO typically involve varying system design parameters to reach a satisfactory (or optimal) design point. However, the MDO approach does not traditionally address system scheduling, or system Utility Analysis. In other words, traditional MDO methods tend to focus on optimizing a system design by varying system and subsystem parameters. During Phase A design, MDO methods are appropriate for trade study analysis, for determining system-level attributes and for developing CONOPS requirements. However, once a design concept has been determined, MDO techniques are less valuable.
With a design concept identified, the question is no longer “What is the best design?” but “Will the detailed design work and satisfy the system requirements?” Therefore, the CPSTAR team built the Horizon Simulation Framework with system requirements verification in mind.

There are tools available, both COTS and custom applications, that are capable of integrating various sources of design data. The software package ModelCenter, from Phoenix Integration, is an example of a COTS product that can integrate various data sources, including custom tools. ModelCenter provides a method for networking the various data sources together and a GUI for system layout and design. One drawback of ModelCenter for simulating a system over time is the overhead associated with integrating the various data sources through standard networking protocols. There is also a variety of products available for system dynamic simulation and visualization; STK[iv] from AGI, FreeFlyer[v] and SOAP[vi] are a few currently available examples. Each of these tools has its advantages and disadvantages, and all are excellent for visualizing the behavior of systems and determining geometric access to targets. The CPSTAR team developed the Horizon Simulation Framework to fill the niche between generalized integration tools like ModelCenter and high-power geometric access and visualization tools like STK. Specifically, the framework was developed to do the following:

• Provide a general framework for subsystem modeling.
• Provide a set of utilities for matrix algebra, numeric integration, and geometric and subsystem modeling.
• Define an interface between the system model and a task-scheduling algorithm.
• Given a set of targets, assets and subsystem models, determine a feasible task schedule based on all external and internal constraints and subsystem dependencies.
With a tool like the Horizon Simulation Framework, an analyst can develop a system model, provide a proposed schedule of desired tasks, and simulate the behavior of the system over some time interval. The Horizon Simulation Framework provides the analyst with all state data for each subsystem during the entire simulation time interval. With this information, the analyst can begin to determine whether a proposed system design meets various types of system-level requirements. For example, for a remote sensing system, one system-level requirement might be “The system shall acquire 30 images above 20 degrees North Latitude per revolution.” The solution to the geometric access problem is relatively straightforward for this type of requirement. However, does the current design of all the subsystems allow the satellite to meet the requirement? By simulating the behavior of the whole system acting within its environment, the analyst can begin to gain confidence in the proposed design or make informed design changes.

B. Utility Analysis

The process described in the previous section is known as Utility Analysis. Utility Analysis is the process of verifying system-level requirements that may not yield to typical verification methods. Many requirements, both at the system level and further down the requirements chain, can be verified directly through analysis or test. However, system-level requirements often take the form of a use requirement; in other words, many system-level requirements refer to how the system will typically perform. Common requirements questions include “How many images per day will the system take?” or “What is the maximum time allowed from user request to data delivery?” These determinations define how the user will interact with the system and how the system is used. Hence, these requirements define the utility of the system.

1. Requirements and CONOPS Verification Difficulties

Many industry programs employ a Canonical Design Case (CDC) or a Design Reference Mission (DRM) as a baseline case for requirements verification; this paper uses the term DRM throughout. The Systems Engineering Group should develop the DRM and then flow it down to other segments for segment and subsystem requirements verification. Adequate DRM development should include requirements and performance characteristics from both the segment and system levels. Hence, DRM development is a systems engineering problem. The exact methodology of DRM development varies across the industry; however, many system integrators are moving to system simulation methods to develop the data that defines the DRM. This is where the Horizon Simulation Framework fits into the design iteration process. As shown in Figure 1, the Horizon Simulation Framework combines a rigorously defined modeling procedure with an exhaustive scheduling algorithm to produce data for DRM generation and Utility Analysis.

Figure 1. The Relationship between the Horizon Simulation Framework and Modeling, Scheduling and Utility Analysis (Req. Verifications)

The Horizon Simulation Framework is ideally positioned within the construct of Figure 1 to implement both physical models of subsystems and Concept of Operations (CONOPS) requirements. Because the defined scenario exists within a simulated physical environment, the framework can also model and account for other external constraints as desired. Therefore, verification through simulation accomplishes the difficult task of verifying system-level requirements that are not verifiable through analysis or testing. Also, all CONOPS constraints imposed on the use of the system can be verified through the DRM data generated by the Horizon Simulation Framework.
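As an illustration of Utility Analysis, the example requirement “The system shall acquire 30 images above 20 degrees North Latitude per revolution” could be checked against a simulated final schedule roughly as follows. This is a minimal C++ sketch, not part of Horizon: the `ImageEvent` record, the functions `imagesPerRevolution` and `meetsImagingRequirement`, non-negative image times, and the constant-orbital-period assumption are all hypothetical simplifications.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical record of one image in a final schedule: when it was taken
// (seconds from simulation start, assumed non-negative) and the latitude of
// its target (degrees, positive = North).
struct ImageEvent {
    double time_s;
    double latitude_deg;
};

// Count images strictly above minLatitude_deg in each complete revolution,
// assuming a constant orbital period (a simplification for illustration).
std::vector<int> imagesPerRevolution(const std::vector<ImageEvent>& images,
                                     double period_s, double duration_s,
                                     double minLatitude_deg) {
    const auto revs = static_cast<std::size_t>(std::floor(duration_s / period_s));
    std::vector<int> counts(revs, 0);
    for (const ImageEvent& img : images) {
        if (img.latitude_deg <= minLatitude_deg) continue;
        const auto rev = static_cast<std::size_t>(img.time_s / period_s);
        if (rev < revs) ++counts[rev];  // images in a partial last revolution are ignored
    }
    return counts;
}

// Example requirement: 30 images above 20 degrees North per revolution.
// Every complete simulated revolution must comply.
bool meetsImagingRequirement(const std::vector<ImageEvent>& images,
                             double period_s, double duration_s,
                             int requiredPerRev = 30,
                             double minLatitude_deg = 20.0) {
    const std::vector<int> counts =
        imagesPerRevolution(images, period_s, duration_s, minLatitude_deg);
    if (counts.empty()) return false;  // no complete revolution was simulated
    for (int n : counts)
        if (n < requiredPerRev) return false;
    return true;
}
```

In practice, the image times and target latitudes would come from the final schedules and state data the framework outputs; the sketch only shows the bookkeeping that turns simulation output into a pass/fail verdict for a use-based requirement.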
Finally, the DRM data generated by the Horizon Simulation Framework can be flowed down to other segments and subsystems as a baseline task set for verification of subsystem components and assemblies. If the data generated by Horizon reveals any discrepancies with respect to the requirements, then either the models used by Horizon or the requirements need to be revisited. The process of flowing the DRM down to the segments and subsystems is part of Phase A pre-design and Phase B design, and is known as design iteration. Figure 2 shows the design iteration procedure and where the Horizon Simulation Framework fits into that process.

Figure 2. The Role of the Horizon Simulation Framework in the Design Iteration and Requirements Flow Process (blocks: A-Spec; Segment Specs and Interface Documents; Requirements Flow; Utility Analysis; Vetted Design; Final Specs for Sell-off; Models and Equations; Horizon Simulation Framework)

After an A-Spec is derived from a Request for Proposals (RFP), the process of requirements development for all segments and subsystems begins. As a design matures, the design team moves toward the Preliminary and Critical Design Reviews (PDR and CDR). Prior to PDR, the Systems Engineering Group continually evaluates the design concept through system-level requirements verification and CONOPS development; in other words, it continually evaluates the completeness of the current design. The Horizon Simulation Framework can serve as the feedback mechanism for requirements verification and flow by generating the DRM as input to the requirements documents, the Utility Analysis Group and even the Models and Equations Group. Figure 2 does not show the inputs to the Horizon Simulation Framework; these include the system-level and all derived requirements, as well as all models and equations developed as part of the design process. As such, the Horizon Simulation Framework becomes one tool in the bag for system design.

2. Bottlenecks and Leverage Points

One particularly valuable service the Horizon Simulation Framework can provide is to help find design bottlenecks or leverage points hidden within the system design. Here, a bottleneck is any system design feature or parameter that hinders the overall utility of the system. A simple example of a bottleneck is the communications system data rate: a slow data rate may preclude data gathering because the data buffer that holds the mission data may remain full. If the Horizon Simulation Framework scenario includes a model of the data buffer and the communications data rate, then the state of the buffer is an output of the framework, and the data-rate bottleneck is easy to identify. Leverage points are system design elements or parameters that, if changed only slightly, yield a disproportionately large benefit to system utility. The costs associated with exploiting a leverage point may be worth the capability added to the system, but a leverage point can only be exploited if it is first identified. Analysts can tease out the leverage points of a system through trade studies performed with the Horizon Framework.

II. The Horizon Simulation Framework Architecture

This section describes the overall design of the Horizon Simulation Framework. Before the detailed description of the particulars of the framework, this section discusses the main goal of the Horizon development effort and the three guiding principles derived from it. Next, the important terms used to describe the Horizon Simulation Framework are defined for use throughout the discussion of the software architecture. While some of the terms are obvious, strict definitions help avoid confusion.
Finally, the section ends with a more detailed description of the fundamental mechanics of the framework.

A. The Horizon Philosophy

The goal of the Horizon Simulation Framework is to be useful and reusable. From this simple design statement, three development principles were derived: modularity of system modeling and scheduling, flexibility with respect to the fidelity of the simulated system, and utility of the supporting libraries in the framework. In object-oriented programming, the interfaces between software segments primarily determine whether these three principles are achievable. As a result, a significant amount of time was spent defining the interfaces between the various modules of the framework. The two major modules of the Horizon Simulation Framework are the main scheduling algorithm and the system model. Within the system module, simulating the subsystems is also a highly complex problem. Therefore, the two chief interfaces designed early in the development process are the interface between the main scheduling algorithm and the system, and the interface between subsystems. Figure 3 illustrates how fundamental these interfaces are within the framework. These interfaces are explored below as they pertain to the framework principles.

Figure 3. The Major Modules (Boxes) and Interfaces (Arrows) Implemented in the Horizon Simulation Framework (inputs: Simulation Parameters, System Parameters; modules: Main Scheduling Algorithm, System Model containing Subsystems; interfaces: Scheduler/System Interface, Interface between Subsystems; output: Final Schedule, State Data)

1. Modularity

Simulation modularity is an important tenet because it simplifies extensibility, prevents fuzzy and confusing interfaces, and fundamentally increases the value of the simulation for users who want to understand a problem more deeply.
Modularity within Horizon was the deciding factor in two important design decisions. First, it was decided early on that the main scheduling algorithm should be independent of the system model, to avoid several problems that can arise from system model/scheduling algorithm interaction. Second, the subsystem models are modular, to avoid leakage of one subsystem model into another and to allow subsystem model reuse.

The Horizon Simulation Framework is a compromise between computational performance and ease of use. Fast simulation code generally requires the benefits of optimized compiled code. Hence, most high-fidelity custom simulations are not run in interpreted languages such as Matlab or Visual Basic. At an even higher level are simulation tools like Simulink, which is very easy and intuitive to use but slow compared to compiled code. Compiled code can be fast, but it is generally more difficult to test and modify, so the learning curve for using compiled languages like C++ or FORTRAN for simulation is steep. In addition, since compiled languages are general-purpose languages, they do not necessarily provide modeling rules or frameworks. The Horizon Simulation Framework straddles both worlds, ease of use and performance, by providing a modular framework for system modeling that must then be compiled before it can run. In other words, the Horizon user must know the C++ programming language to write subsystem models, but does not need to handle the interface between the subsystem models. The subsystem model interface is designed to be intuitive and to have very little overhead or supporting code behind it. Therefore, the task of subsystem modeling reduces to defining where the subsystem lies in the hierarchy, reproducing the governing equations of the subsystem in code, and specifying how it interacts with other subsystems.
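A minimal sketch of what such a subsystem interface might look like in C++ is shown below. The class names, the `dependsOn()` and `canPerform()` methods, and the map-based state are hypothetical illustrations of the three modeling tasks just listed, not Horizon's actual API; the power model is a simple battery energy balance chosen only for illustration.

```cpp
#include <map>
#include <string>
#include <vector>

// Hypothetical, simplified state: the latest value of each named variable.
using State = std::map<std::string, double>;

// Hypothetical subsystem interface (illustrative, not Horizon's actual API).
// dependsOn() places the subsystem in the hierarchy; canPerform() encodes its
// governing equations, writes results to the shared state, and returns false
// if the subsystem cannot support the event.
class Subsystem {
public:
    virtual ~Subsystem() = default;
    virtual std::string name() const = 0;
    virtual std::vector<std::string> dependsOn() const { return {}; }
    virtual bool canPerform(State& state, double start_s, double end_s) = 0;
};

// Example model: battery energy balance over one event,
// E_end = min(capacity, E_start + (P_solar - P_load) * (end - start)).
class PowerSubsystem : public Subsystem {
public:
    PowerSubsystem(double capacity_J, double load_W)
        : capacity_J_(capacity_J), load_W_(load_W) {}

    std::string name() const override { return "Power"; }

    // Solar input arrives through a dependency on attitude (ADCS) data.
    std::vector<std::string> dependsOn() const override { return {"ADCS"}; }

    bool canPerform(State& state, double start_s, double end_s) override {
        const double solar_W = valueOr(state, "solar_power_W", 0.0);
        double energy_J = valueOr(state, "battery_J", capacity_J_);
        energy_J += (solar_W - load_W_) * (end_s - start_s);
        if (energy_J > capacity_J_) energy_J = capacity_J_;  // battery is full
        state["battery_J"] = energy_J;
        return energy_J >= 0.0;  // fail if the battery would be depleted
    }

private:
    static double valueOr(const State& s, const std::string& key, double fallback) {
        auto it = s.find(key);
        return it == s.end() ? fallback : it->second;
    }
    double capacity_J_;
    double load_W_;
};
```

Because the model sees only the shared state and its own parameters, the same class could be reused in another scenario unchanged; how the solar input is derived from attitude data is left to the dependency between the two subsystems.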
Thus, the framework combines the ease of use of a “plug-and-play” simulation tool with the power of direct simulation in compiled code.

To assist the Horizon user, two levels of modularity were built into the framework. First, the main scheduling algorithm and the system model can interact only through a rigorously defined interface. Since the main scheduling algorithm is independent of the system model, the scheduling algorithm can be modified, or replaced entirely, without requiring modification of the system model. Therefore, several scheduling methods could be offered to the user in the future. Currently, the main scheduling algorithm is an exhaustive search algorithm with cropping (described in section II.B.5), but an evolutionary algorithm could easily be put in place in the future. Essentially, the scheduling algorithm repeatedly asks the system, “Can you do this?” If the system model returns true, the schedule is modified; if not, the schedule remains unchanged. This modularity has two important outcomes: the system model need not be modified when the scheduling algorithm is modified, and the interface between the scheduling algorithm and the system model is static. Hence, system modeling can be considered independently of the scheduling algorithm.

However, the modular interface between the main scheduling algorithm and the system model does impose a few strict conditions on the modeling process. Namely, no circular dependencies between subsystems are allowed, and dependencies between subsystems must be evaluated in the proper order. Put simply, the past state of the system cannot depend on the future state of the system. Currently, the user must manually implement the correct sequence in which the subsystems are verified against any desired constraints.
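The ordering condition can, in principle, be checked mechanically. The sketch below is an assumption, not the framework's current behavior: a hypothetical `executionOrder` function applies a standard topological sort (Kahn's algorithm) to the declared subsystem dependencies, returning an order in which every subsystem runs after the subsystems it depends on, and rejecting circular dependencies.

```cpp
#include <map>
#include <queue>
#include <stdexcept>
#include <string>
#include <vector>

// deps[s] lists the subsystems whose data s needs, so they must run first.
// Returns an execution order in which every subsystem follows the ones it
// depends on; throws if a circular dependency makes such an order impossible.
std::vector<std::string> executionOrder(
        const std::map<std::string, std::vector<std::string>>& deps) {
    std::map<std::string, int> unmet;                        // unmet prerequisites
    std::map<std::string, std::vector<std::string>> users;  // reverse edges
    for (const auto& [sub, needs] : deps) {
        unmet[sub] += 0;  // make sure every subsystem has an entry
        for (const std::string& n : needs) {
            unmet[n] += 0;
            ++unmet[sub];
            users[n].push_back(sub);
        }
    }
    std::queue<std::string> ready;  // subsystems with no unmet prerequisites
    for (const auto& [sub, count] : unmet)
        if (count == 0) ready.push(sub);

    std::vector<std::string> order;
    while (!ready.empty()) {
        const std::string s = ready.front();
        ready.pop();
        order.push_back(s);
        for (const std::string& u : users[s])
            if (--unmet[u] == 0) ready.push(u);  // all of u's inputs are now available
    }
    // Any subsystem left out is part of a cycle: past would depend on future.
    if (order.size() != unmet.size())
        throw std::runtime_error("circular dependency between subsystems");
    return order;
}
```

For example, with Power depending on ADCS and a data subsystem depending on both, the function returns ADCS, Power, Data; declaring two subsystems that depend on each other throws instead of producing an order.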
There are significant opportunities to automate the ordering of subsystem checks, and our group at Cal Poly is currently exploring several solutions, including automatic code generation.

A second, less significant condition imposed on the system model is that all subsystems must have access to the state data generated during the simulation, as appropriate. The way the framework generates state data handles this constraint quite elegantly. The state data, described in more detail in section II.B.4, can be thought of as a large bulletin board: as state data is generated, each subsystem pins a sheet of paper to the bulletin board for all other subsystems to see. The framework then allows subsystems to share state data via a defined interface.

In terms of designing a fast simulation, the user should adhere to a “fail fast” philosophy, so a certain amount of know-how is expected from the analyst. Because the main algorithm is an exhaustive search, the feasibility of adding a new event to a given schedule is checked many times during a typical simulation. As such, the scenario model should be designed to fail fast. In other words, the user should place constraints that are expected to fail more often than they pass early in the constraint-checking sequence described in section II.B.2.

2. Flexibility

Since each subsystem model is written in C++, nearly any subsystem model can be implemented. For example, a simple model consisting of the number of bits on a buffer can represent a data system. Alternatively, the Horizon user could implement a complex data model including priority queuing, data access time, and any other parameter important to the simulation. Because the subsystem models are written in C++ and then compiled into an executable, any level of subsystem model fidelity is possible. Hence, the Horizon Simulation Framework is very flexible.
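The simple data model mentioned above can be sketched as follows. `BufferModel` and `imagesAccepted` are hypothetical names; the model tracks only the number of bits on the buffer, rejects an imaging task that would overflow it, and drains the buffer at a fixed downlink rate. Even this low-fidelity model is enough to expose the data-rate bottleneck discussed in section I.B.2.

```cpp
#include <algorithm>

// Hypothetical low-fidelity data subsystem: its only state is the number of
// bits on the onboard buffer.
class BufferModel {
public:
    BufferModel(double capacity_bits, double downlink_bps)
        : capacity_bits_(capacity_bits), downlink_bps_(downlink_bps) {}

    // Imaging task: feasible only if the new data fits on the buffer.
    bool canStoreImage(double image_bits) {
        if (bits_ + image_bits > capacity_bits_) return false;  // buffer full
        bits_ += image_bits;
        return true;
    }

    // Downlink pass of the given length; the buffer cannot drop below empty.
    void downlink(double seconds) {
        bits_ = std::max(0.0, bits_ - downlink_bps_ * seconds);
    }

    double bits() const { return bits_; }

private:
    double capacity_bits_;
    double downlink_bps_;
    double bits_ = 0.0;
};

// Alternate imaging opportunities with downlink passes and count how many
// images the buffer accepts; a slow downlink shows up as rejected images.
int imagesAccepted(BufferModel buffer, int opportunities, double image_bits,
                   double pass_s) {
    int accepted = 0;
    for (int i = 0; i < opportunities; ++i) {
        if (buffer.canStoreImage(image_bits)) ++accepted;
        buffer.downlink(pass_s);
    }
    return accepted;
}
```

For instance, with a 100-bit buffer, 40-bit images and one-second passes, a 40 bit/s downlink accepts all 10 images, while a 10 bit/s downlink accepts only 4 because the buffer stays nearly full. A higher-fidelity model could replace this class later without changing how it is queried.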
The Horizon Simulation Framework is intended for use throughout the program lifecycle, so allowing for different levels of fidelity is crucial. For example, during the pre-design and initial design phases of a program, it can support low-fidelity trade studies, which provide data for conceptual design and CONOPS analysis. Further along in the program lifecycle, the previously implemented modules can be updated and modified to capture the detailed analysis performed at the segment and subsystem level.

The flexibility designed into the Horizon Simulation Framework also extends its simulation capability. Since the framework is modular (the system is modeled without regard to the scheduling algorithm), and therefore flexible, it is not limited to any specific application. The initial development thrust focused on the simulation of space systems; however, the framework is capable of simulating any dynamic system. For example, if dynamic targets such as ground troops or aircraft were modeled, and the system were modeled as an aircraft carrier group, the result of the simulation would be a battlefield scenario. The flexibility to model nearly any system was a key design goal achieved during the Horizon Simulation Framework development effort.

3. Utility

The final underlying principle of the Horizon Simulation Framework is utility. To this end, many useful algorithms have been created that indirectly help the user model any possible system. Libraries of integration methods, coordinate frame transformations, matrix and quaternion classes, and profile storage containers were created to shield the user from the overhead of the C++ programming language. Where possible, interfaces with framework elements were designed for simplicity, reliability and transparency. Additionally, the modular, flexible framework allows previously designed subsystem models to be easily reused for rapid development of simulation scenarios.
Likewise, any environmental models (models of environmental constraints and dynamics, such as gravity models and coordinate transformations) can also be reused. With the development of a GUI (a current area of work), the user could literally drag and drop subsystem models into a scenario, make minor modifications, and have a simulation running quickly. A library of subsystem and environmental models, combined with a set of mathematical functions and input and output utilities, makes the Horizon Simulation Framework extremely useful to the user.

B. The Horizon Architecture/Glossary

The simulation architecture of the Horizon framework is described in this section. First, definitions of the eight key simulation elements are given. Then, building on these key elements, the main interfaces and routines in the framework are explained. Lastly, the utility tools, which are not part of the main architecture but are essential in supporting the framework, are described.

1. Basic Simulation Elements

Task – The “objective” of each simulation time step. It consists of a target (location) and performance characteristics, such as the number of times the task may be performed during the simulation and the type of action required to perform it.
State – The state vector storage mechanism of the simulation. The state contains all the information about the system state over time, along with a link to its chronological predecessor.
Event – The basic scheduling element, which consists of the task to be performed, the state to which data is saved while performing the task, and the times at which the event begins and ends.
Schedule – An initial state and the list of subsequent events. The primary output of the framework is a list of final schedules that are possible given the system.
Constraint – A restriction placed on values within the state, together with the list of subsystems that must execute before the Boolean evaluation of whether the constraint is satisfied. The constraint is also the main functional system simulation block: to check whether a task can be completed, the scheduler checks that each constraint is satisfied, indirectly exercising the subsystems.
Subsystem – The basic simulation element of the framework. A subsystem is a simulation element that creates state data and affects, directly or indirectly, the ability to perform tasks. This simple definition is necessary when considering the framework elements a subsystem is supposed to interface with, and the requirements for accurate simulation.
Dependency – The limited interface allowed between subsystems. To preserve modularity, subsystems may interact with each other only through given interfaces. Dependencies specify what data is passed and how it is organized: they collect similar data types from the providing subsystems, convert them to a data type the receiving subsystem is interested in, and then provide access to that data.
System – A collection of subsystems, constraints and dependencies that define the thing or things to be simulated and the environment in which they operate.

2. Constraint Checking Cascade

The primary algorithm invoked when determining whether a system can perform a task is the constraint-checking cascade. This subsection first describes the internal functionality of a constraint, then the context in which each constraint is checked, and finally the advantages of the constraint-checking cascade.

To confirm that the system and the schedule satisfy a constraint, the subsystems that provide data for the constraint are called first. As each subsystem executes, it adds its contribution to the state data. After all of these subsystems execute successfully, the constraint qualifier (the actual check on the validity of the state after the data has been added) is evaluated.
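The internal constraint functionality, and the cascade of constraints built from it in the rest of this subsection, can be sketched in C++ as follows. The `Constraint` struct, the `SubsystemFn` callback type, `canPerformTask` and the map-based state are hypothetical simplifications; in particular, subsystems are modeled as plain callbacks that write to the state and report success.

```cpp
#include <functional>
#include <map>
#include <string>
#include <vector>

// Hypothetical, simplified state: latest value of each named variable.
using State = std::map<std::string, double>;

// A subsystem step adds its contribution to the state; false = cannot perform.
using SubsystemFn = std::function<bool(State&)>;

// Hypothetical constraint: the subsystems that must execute before the check,
// and the qualifier, a Boolean test on the state they produced.
struct Constraint {
    std::vector<SubsystemFn> subsystems;
    std::function<bool(const State&)> qualifier;

    bool check(State& state) const {
        for (const SubsystemFn& run : subsystems)
            if (!run(state)) return false;  // a subsystem failed: constraint fails
        return qualifier(state);            // all ran: evaluate the qualifier
    }
};

// Cascade: check constraints in order (fail-prone ones first), then run the
// subsystems whose data is not constrained. True means the task can be added.
bool canPerformTask(const std::vector<Constraint>& constraints,
                    const std::vector<SubsystemFn>& remaining, State& state) {
    for (const Constraint& c : constraints)
        if (!c.check(state)) return false;  // stop at the first failure
    for (const SubsystemFn& run : remaining)
        if (!run(state)) return false;
    return true;
}
```

Placing constraints that fail often at the front of the `constraints` vector is what makes this cascade fail first: a rejected task never reaches the later constraints or the remaining subsystems.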
Figure 4 shows a graphical representation of the internal constraint functionality.

Figure 4. Internal Constraint Functionality (a new task passes through Subsystem 1, Subsystem 2, ..., Subsystem N, each of which reads from and writes to the state data as it performs the task; a failure at any subsystem fails the constraint; the qualifier then accesses the state data to make its Boolean evaluation, and the constraint passes or fails)

If the qualifier is satisfied, the next constraint repeats this process, until all constraints on the system have been checked. In this way, state data is generated indirectly, involving only the subsystems necessary to check each constraint. Only after checking all the constraints does the scheduler run the subsystems whose data is not constrained. Figure 5 illustrates the constraint-checking cascade further.

Figure 5. Constraint Checking Cascade Diagram (a new task is checked against Constraint 1, Constraint 2, ..., Constraint N and then the remaining subsystems; as each constraint passes, the state vector is built up until it includes all of the data generated during the event; if all constraints pass, the task and state are added to the possible schedule as an event)

This procedure has two distinct advantages over simulating the subsystems in a fixed precedence order. First, constraints whose qualifiers often fail can be placed at the beginning of the cascade to create a “fail-first” system. This ensures that if the system is going to fail a possible task, it does so as early in the computation as possible and does not waste simulation time. This is especially useful if the hierarchy of subsystem execution has many branches that are independent of each other. Second, the cascade caters to the systems engineering user, in that it drives the simulation primarily through CONOPS-derived constraints.

3. Subsystems and Dependencies

If subsystems are the basic functional blocks that make up the system, then dependencies are the arrows that connect them. This analogy is useful because it reinforces the idea of subsystems as independently executing blocks of code. Previous simulation frameworks have fallen prey to what is known as “subsystem creep,” where information about, and functions from, each subsystem slowly migrate into the other subsystems. This is an evolutionary dead end for simulation frameworks, as it makes diagnosing problems difficult and reusing the subsystem libraries impossible. It also goes against the tenets of object-oriented programming, in that a given subsystem class should contain only information within that subsystem's domain and access only data types that the subsystem knows how to handle.

Dependencies, then, are the interpreters between subsystems. They have access to each of the subsystems and understand how to translate data generated by one subsystem into data needed by another. For example, one dependency might convert spacecraft orientation information generated by the attitude dynamics and control system (ADCS) into an incidence angle between the solar panel face and the sun vector for the power subsystem. The power subsystem has no business knowing what a quaternion is or how the spacecraft is oriented; it is only interested in how much power the solar panels are receiving. Likewise, the ADCS has no business knowing how much power the solar panels generate as a result of its orientation maneuvers. In this way, each subsystem processes only data that it knows how to handle and that is logically contained within its domain.

4. The System State

As the subsystems determine that they are able to perform a task, the variables that describe the operation of the system must be saved somewhere. In the Horizon framework, this repository of data is called the state.
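A minimal sketch of such a repository, following the bulletin-board storage and previous-state pointer described in this section, is shown below. The `State` class and its `post`, `valueAt` and `postings` methods are hypothetical, not Horizon's actual interface; the usage example replays the power values read from Figure 6.

```cpp
#include <cstddef>
#include <iterator>
#include <map>
#include <memory>
#include <optional>
#include <string>
#include <utility>

// Hypothetical bulletin-board state (illustrative, not Horizon's class).
// Each variable maps to a time-ordered profile of posted values, and each
// state keeps a pointer to the state of the previous event.
class State {
public:
    explicit State(std::shared_ptr<const State> previous = nullptr)
        : previous_(std::move(previous)) {}

    // Most recent value posted at or before `time`, falling back to earlier
    // states through the previous-state pointers; empty if never posted.
    std::optional<double> valueAt(const std::string& var, double time) const {
        auto profile = data_.find(var);
        if (profile != data_.end()) {
            auto it = profile->second.upper_bound(time);  // first entry after `time`
            if (it != profile->second.begin()) return std::prev(it)->second;
        }
        if (previous_) return previous_->valueAt(var, time);
        return std::nullopt;
    }

    // Post only changes: an unchanged value is not recorded again.
    void post(const std::string& var, double time, double value) {
        const std::optional<double> current = valueAt(var, time);
        if (current && *current == value) return;
        data_[var][time] = value;
    }

    // Number of entries this state (not its predecessors) holds for `var`.
    std::size_t postings(const std::string& var) const {
        auto profile = data_.find(var);
        return profile == data_.end() ? 0 : profile->second.size();
    }

private:
    std::shared_ptr<const State> previous_;
    std::map<std::string, std::map<double, double>> data_;
};
```

Posting 0 W at 0 s in an initial state, then 300 W, 270 W and 500 W at 0.5, 0.85 and 1.5 s in the event's state, a query at 1.2 s returns 270 W, a query at 0.2 s falls back to the initial state and returns 0 W, and re-posting 270 W at 1.0 s adds nothing because the value has not changed.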
Each state is unique to its event, so all the data generated over the course of the event is stored in its corresponding state. Each state also has a pointer to the previous state. This pointer tracks previous changes and simplifies the identification of initial conditions for simulation algorithms.

Storage inside the state emulates the mechanics of a bulletin board. When new data is generated, only changes from previously recorded values are posted, along with the time at which they occur. As a result, when reading from the state, the most recently saved value of a variable is also its current value. Many different objects in the framework have access to the state, including subsystems, constraints, dependencies, data output classes and schedule evaluation functions. Figure 6 illustrates an example of how the state is used to keep track of data.

[Figure 6. Graphical Illustration of the State. Between the event start and the event end, the power subsystem posts its power level to the state only when it changes, and other subsystems post to the same state. Posted values in the example:
  Time (s)   Power (W)
  0          0
  0.5        300
  0.85       270
  1.5        500]

5. The Main Scheduling Algorithm

This section first introduces the main scheduling algorithm briefly, along with the control mechanisms that make it an exhaustive search method. It then explains schedule cropping and evaluation, which make exhaustive searches possible in the presence of very large numbers of possible actions.

The main scheduling algorithm contains the interface between the main scheduling module of the framework and the main system simulation module. It guides the exhaustive search in discrete time steps and keeps track of the results. Functionally, it is essentially a call to the main system simulation routine inside a series of nested loops. Checks ensure that the schedules created meet certain criteria from the simulation parameters. In the outermost of these loops, the scheduler steps through each simulation time step, always moving forward in time.
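The bulletin-board storage of the system state described in Section 4 can be sketched in C++17 as follows. This is a minimal sketch under stated assumptions, not Horizon's class. The names State, post, latestValue and valueAt are hypothetical.

How the sketch works:

- Each variable keeps a time-ordered map of postings.
- A value is posted only when it differs from the most recent one. Postings are assumed to arrive in chronological order.
- Lookups fall back to the previous event's state through a shared pointer, mirroring the pointer to the previous state described above.

The checks reproduce the power-subsystem postings of Figure 6.

```cpp
#include <cassert>
#include <iterator>
#include <map>
#include <memory>
#include <optional>
#include <string>
#include <utility>

// Hypothetical sketch of one event's state as a "bulletin board": each
// variable maps posting time -> value, and the state links to the previous
// event's state so that earlier values (initial conditions) remain reachable.
class State {
public:
    explicit State(std::shared_ptr<const State> previous = nullptr)
        : previous_(std::move(previous)) {}

    // Post a value only if it differs from the most recent value
    // (postings are assumed to arrive in chronological order).
    void post(const std::string& name, double time, double value) {
        assert(!name.empty());
        const std::optional<double> latest = latestValue(name);
        if (latest && *latest == value) return;
        board_[name][time] = value;
    }

    // Most recent posted value, searching back through previous states.
    std::optional<double> latestValue(const std::string& name) const {
        auto it = board_.find(name);
        if (it != board_.end() && !it->second.empty())
            return std::prev(it->second.end())->second;
        if (previous_) return previous_->latestValue(name);
        return std::nullopt;
    }

    // Value in effect at time t: the last posting at or before t.
    std::optional<double> valueAt(const std::string& name, double t) const {
        auto it = board_.find(name);
        if (it != board_.end()) {
            auto p = it->second.upper_bound(t);
            if (p != it->second.begin()) return std::prev(p)->second;
        }
        if (previous_) return previous_->valueAt(name, t);
        return std::nullopt;
    }

private:
    std::map<std::string, std::map<double, double>> board_;
    std::shared_ptr<const State> previous_;
};
```

Because only changes are posted, the last entry for a variable is always its current value. Unchanged values cost no storage, which matters when many subsystems share one state per event.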
Stepping strictly forward in time ensures that, unlike in other simulation frameworks, past simulation events never depend on future simulation events. It also avoids recursion, in which subsystems “reconsider” their previous actions. At each time step, the scheduler then checks whether it needs to crop the master list of schedules. Sections II.B.6 and II.B.7 give more detail. Briefly, cropping keeps the number of schedules manageable.

In the innermost loop, the scheduler takes each schedule in the master list from the previous time step and tries to add each task to its end. It does this by creating a new event containing that task and an empty state. Before this is allowed, the action must pass three checks that determine whether it is valid:

1. The schedule must be finished with its last event. New events always start at the current simulation time when they are added, so no event may start before the previous one ends.
2. The scheduler checks the schedule to determine whether the task is allowed to be performed again. Some imaging tasks only need to be performed once, while some state-of-health tasks need to be repeated several times over a certain interval.
3. Lastly, and most critically, the system must confirm that it can perform the task and add its performance data to the state.

If all three checks are satisfied, the whole schedule is copied into a new schedule, and the new event is appended to the end of the new schedule. If any of these checks fails, the event is discarded and no new schedule is created.

6. Schedule Cropping

As explained for the main algorithm, the scheduler attempts to create new schedules by adding each task, in the form of an event, to the end of each schedule in the master list from the previous simulation time step.
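The nested loops and the three validity checks described above can be sketched in C++ as follows. This is an illustration under stated assumptions, not Horizon's implementation. The following are all simplifications introduced here:

- the Task, Event and Schedule types;
- the CanPerform callback, which stands in for the whole constraint cascade;
- the per-task repeat limit;
- keeping parent schedules alongside their extensions;
- ranking schedules during cropping by the sum of event values, one of the two evaluators described in Section II.B.7.

State data is omitted.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <functional>
#include <utility>
#include <vector>

// Hypothetical, simplified types (state data omitted).
struct Task  { int id; int maxTimes; double value; };
struct Event { int taskId; double start; double end; double value; };
using Schedule = std::vector<Event>;

// Stand-in for the system model: can it perform `task` starting at time t
// after schedule s? If so, it reports the event end time through `end`.
using CanPerform =
    std::function<bool(const Schedule& s, const Task& task, double t, double& end)>;

// Evaluator: sum of the values of the events (targets) in the schedule.
double scheduleValue(const Schedule& s) {
    double v = 0.0;
    for (const Event& e : s) v += e.value;
    return v;
}

void sortByValue(std::vector<Schedule>& schedules) {
    std::sort(schedules.begin(), schedules.end(),
              [](const Schedule& a, const Schedule& b) {
                  return scheduleValue(a) > scheduleValue(b);
              });
}

std::vector<Schedule> runScheduler(const std::vector<Task>& tasks, const CanPerform& system,
                                   double t0, double tEnd, double dt,
                                   std::size_t maxSchedules, std::size_t keepSchedules) {
    assert(dt > 0.0 && keepSchedules > 0 && keepSchedules <= maxSchedules);
    std::vector<Schedule> schedules{Schedule{}};   // start from one empty schedule
    for (int k = 0; t0 + k * dt < tEnd; ++k) {     // outer loop: forward in time only
        const double t = t0 + k * dt;
        if (schedules.size() > maxSchedules) {     // crop to the best schedules
            sortByValue(schedules);
            schedules.resize(keepSchedules);
        }
        std::vector<Schedule> added;
        for (const Schedule& s : schedules) {
            for (const Task& task : tasks) {
                // Check 1: the previous event must have ended.
                if (!s.empty() && s.back().end > t) continue;
                // Check 2: the task may not exceed its allowed repetitions.
                const auto done = std::count_if(s.begin(), s.end(),
                    [&](const Event& e) { return e.taskId == task.id; });
                if (done >= task.maxTimes) continue;
                // Check 3: the system must be able to perform the task.
                double end = t;
                if (!system(s, task, t, end)) continue;
                Schedule extended = s;             // copy, then append the new event
                extended.push_back(Event{task.id, t, end, task.value});
                added.push_back(std::move(extended));
            }
        }
        schedules.insert(schedules.end(), added.begin(), added.end());
    }
    sortByValue(schedules);                        // best schedule first
    return schedules;
}
```

Crop the list before extending it, as the text describes. This keeps the inner loops bounded by roughly `keepSchedules × tasks` new schedules per step, so the list cannot grow without limit.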
The number of possible schedules, however, grows too quickly during a simulation to keep every one. Therefore, to keep the framework run time reasonable, the schedules are valued for fitness and then cropped to a default size whenever their number exceeds a maximum value. The maximum number and the default size are inputs in the simulation parameters. In this way, the otherwise exhaustive search behaves as a “greedy algorithm.” This approach is not guaranteed to return the globally optimal schedule, but it is a close approximation in many instances.

7. Schedule Evaluation

Schedule evaluation provides the fitness criterion used to generate a metric by which schedules may be judged. Since the schedule evaluator has access to the state, the possibilities for different metrics are nearly endless. The framework includes two simple evaluators:

- one that values schedules by the number of events scheduled;
- one that values schedules by the sum of the values of the targets taken during the schedule.

These evaluations determine which schedules are kept during cropping. They also determine which schedule is ultimately returned as the most feasible simulation of the system over the time period.

8. Utility Tools

Below is a list of the most notable utility libraries included in the Horizon framework. Although not strictly part of the main functional modules, these libraries are used very frequently in modeling subsystems, constraints and dependencies. Many of the tools were designed to function very similarly to their counterparts in Matlab, which simplifies porting existing code.

Utility Math Libraries
  i. Matrices
  ii. Quaternions
  iii. Common transformations and rotations
  iv. Conversions between existing C++ STL types and new classes
Numerical Methods Tools
  i. A Runge-Kutta45 integrator
  ii. A trapezoidal integrator
  iii. Singular value decomposition functions
  iv. Eigenvalue and eigenvector functions
  v. Cubic-spline and linear interpolators
Geometry Models
  i. Coordinate system rotations
  ii. Orbital access calculations
  iii. Orbital eclipse calculator

III. Testing and Performance Evaluation

Horizon was tested for two purposes: to verify that the framework source code was performing as intended, and to determine how the simulation parameters affect the framework run time. The section proceeds as follows:

- Unit testing of the classes critical to the main algorithm is described first.
- Building on those unit tests, system tests explore how the entire system runs once the unit testing phase is complete.
- Benchmark tests establish a standard unit of measurement for simulation run time.
- Lastly, parametric code tests gauge the sensitivity of the software to the simulation parameters.

The results are described, and a generalized model of computation time with respect to the input parameters is derived.

A. Unit Testing

Unit testing is a procedure used to verify that each individual unit of code is independently valid. A “unit” is the smallest piece of functionally operating code; in object-oriented C++ programming, this is usually a class. To validate that the framework was operating as intended, unit testing was conducted on each of the components of the core program functionality. The tests used CppUnit, a C++ port of the open-source JUnit unit-testing framework. Each of the low-level Horizon classes was isolated and tested with a wide variety of correct and incorrect inputs to verify that the code units perform as expected. In addition, functional groups of units were collected into modules and tested to verify the higher-level interactions. In each unit test, the goal was to create a series of conditional expressions that checked whether the unit returned the expected values.
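The unit-testing approach described above checks analytically solvable inputs against known values. The following C++ sketch applies it to a composite trapezoidal integrator. It deliberately avoids CppUnit so that it is self-contained. The function names trapz, checkClose and testTrapzSine are hypothetical and do not reflect the interface of Horizon's trapezoidal integrator utility.

```cpp
#include <cassert>
#include <cmath>
#include <functional>

// Hypothetical composite trapezoidal rule over [a, b] with n equal panels.
double trapz(const std::function<double(double)>& f, double a, double b, int n) {
    assert(n > 0);
    const double h = (b - a) / n;
    double sum = 0.5 * (f(a) + f(b));
    for (int i = 1; i < n; ++i) sum += f(a + i * h);
    return h * sum;
}

// The conditional expression used by each check: is the result within tolerance?
bool checkClose(double actual, double expected, double tol) {
    return std::fabs(actual - expected) <= tol;
}

// An analytically solvable case: the integral of sin(x) over [0, pi] is 2.
// With n = 1000 the trapezoidal error is about h^2 / 6 (roughly 1.6e-6).
bool testTrapzSine() {
    const double pi = std::acos(-1.0);
    return checkClose(trapz([](double x) { return std::sin(x); }, 0.0, pi, 1000), 2.0, 1e-5);
}
```

A linear integrand must be integrated exactly, and halving the panel width should cut the error on a smooth integrand by about a factor of four. Both make good additional checks, because each has a known answer independent of the code under test.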
The unit tests exercised each overloaded class method and constructor in as many ways as possible, carefully checking the expected values in each case. When possible, external sources (Matlab, STK) were used as references to ensure quality control. Complicated or nonlinear modules were given inputs that could be solved analytically, so that the unit’s results could be compared to known values. Forty different unit tests were created, with several hundred individual checks, and all returned the expected values.

B. System Testing

Because Horizon is a simulation framework and its core functionality has no knowledge of the models it evaluates, unit testing does not necessarily exercise all of its functionality. Example subsystems were therefore created, linked together by dependencies, and included in example CONOPS constraints. These units are not part of the standard Horizon framework. They were run in varying combinations to ensure that the models simulated by the framework could be implemented reliably. The subsystems and constraints were simplified from existing operational systems. There was no single “correct” output, since the output could vary significantly depending on the simulation parameters. Successful execution therefore consisted of results that mirrored high-level theoretical expectations for the simulated system.

C. Benchmark Performance Testing

It is useful to understand how the basic simulation parameters affect the run time of a simulation independent of the model used. A set of typical use-case parameters had to be defined to benchmark the framework's scheduling and simulation algorithms under varying simulation parameters. Because the framework is independent of the system model, the benchmark system consisted of a single subsystem with a constant 3 percent chance of being able to perform any requested task.
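The benchmark's model-independent "system" and the timing statistics can be sketched in C++ as below. BernoulliSubsystem, benchmark and normalized are hypothetical names. The facts taken from the text are:

- the constant 3 percent success probability;
- repeated runs summarized by a mean and standard deviation;
- normalization of run times by the benchmark mean, used in Section III.D.

The paper does not state whether its standard deviation is the sample or the population form; this sketch assumes the sample form.

```cpp
#include <cassert>
#include <chrono>
#include <cmath>
#include <functional>
#include <random>
#include <vector>

// Hypothetical benchmark subsystem: accepts any task with fixed probability p,
// independent of the task, the time and the state.
class BernoulliSubsystem {
public:
    BernoulliSubsystem(double p, unsigned seed) : rng_(seed), canDo_(p) {}
    bool canPerform() { return canDo_(rng_); }
private:
    std::mt19937 rng_;
    std::bernoulli_distribution canDo_;
};

struct RunStats { double mean; double stddev; };

// Time `runs` repetitions of `simulate`; report the mean and the sample
// standard deviation of the wall-clock run time in seconds.
RunStats benchmark(const std::function<void()>& simulate, int runs) {
    assert(runs > 1);
    std::vector<double> seconds;
    for (int i = 0; i < runs; ++i) {
        const auto start = std::chrono::steady_clock::now();
        simulate();
        const std::chrono::duration<double> elapsed =
            std::chrono::steady_clock::now() - start;
        seconds.push_back(elapsed.count());
    }
    double mean = 0.0;
    for (double s : seconds) mean += s;
    mean /= runs;
    double var = 0.0;
    for (double s : seconds) var += (s - mean) * (s - mean);
    var /= (runs - 1);
    return {mean, std::sqrt(var)};
}

// Normalized run time: a fraction or multiple of the benchmark mean.
double normalized(double runTime, double benchmarkMean) {
    assert(benchmarkMean > 0.0);
    return runTime / benchmarkMean;
}
```

Because the stand-in subsystem's cost is negligible and independent of the task, the measured time reflects the scheduler itself. A real model's cost then enters only through its mean execution time.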
Using a single subsystem with a fixed success probability isolated the framework simulation parameters, so that the resulting run time could be scaled reliably to the relative complexity of a later model. For benchmarking purposes, a typical use of the framework was defined as follows:

- a crop ratio of 0.02;
- 500 maximum schedules;
- 30 seconds between simulation time steps;
- a target deck size of 5000.

With these simulation parameters, the benchmark case was run 100 times. The mean simulation run time was 17.5891 seconds, with a standard deviation of 0.4423 seconds.

D. Simulation Parametric Variation

With a benchmark time available as a reference, the simulation parameters were varied to determine their effect on run time. This is informative because, before starting a simulation, the user needs to know the order of magnitude of its run time. Some of the parameters may be highly variable in typical use cases of the framework, so run times could vary significantly. Various parameters governing scheduling, tasking and cropping were varied, and mean run times were calculated. New simulation run times were divided by the benchmark mean run time to obtain normalized run times, expressed as fractions or multiples of the benchmark case. Lastly, a simple computational-order model is developed that relates changes in the simulation parameters to changes in the estimated run time. Any parameter not explicitly stated as varied in a test keeps its benchmark value.

1. Simulation Step Size

Perhaps the simplest parameter to vary is the simulation step size, that is, the number of seconds between attempts to task the system in any given schedule. Increasing the number of evaluation steps does not necessarily increase the fidelity of the simulation. It does, however, reduce the delay between when the system can perform the next event and when the next event is actually scheduled.

[Figure 7. Simulation Run Time vs. Time Steps with Power Curve Fit: log-log plot of normalized simulation run time versus number of simulation time steps, showing the data (y vs. x) and the power fit.]

The number of time steps in the simulation was varied from 91 to 2701 by reducing the simulation time step from 60 to 2 seconds. A first guess would suggest that the run time should increase linearly with the number of time steps. However, whenever the number of schedules exceeds the maximum-schedules simulation parameter, the entire list of schedules must be evaluated before it can be cropped. Each schedule holds a list of events whose length is loosely correlated with the number of time steps, so the problem becomes polynomial in order. A power curve fit provides the polynomial-order expression shown in Eq. (1).

$T = A\,n^{b}$     (1)

In this equation, $T$ is the normalized simulation run time and $n$ is the number of time steps. Figure 7 shows the raw data and the power fit plotted on a log-log graph. The parameters $A$ and $b$ were found to be 0.003122 and 1.937, respectively, with an R-squared value of 0.9985.

2. Crop Ratio vs. Task Deck Size

It is desirable to know how the task deck size and the crop ratio relate to the simulation run time. The crop ratio is the ratio of the minimum to the maximum number of schedules. The maximum number of schedules is held constant, so the crop ratio is varied by varying the minimum number of schedules. The crop ratio sets the number of schedules kept once the list of schedules exceeds the maximum value. In other words, it determines how far down the schedule-value rankings the scheduler keeps schedules when there are too many to continue adding events to all of them. The task deck size is simply the number of possible tasks that can be added to the end of each schedule.

[Figure 8. Simulation Run Time as a Function of Crop Ratio and Task Deck Size: surface plot of normalized simulation time versus task deck size (0 to 15000) and cropping ratio (0 to 0.2).]

The crop ratio was varied from 0.002 to 0.2 and the task deck size (number of targets) from 4 to 14000. Figure 8 shows a surface plot of the results. As the plot shows, the cropping ratio has no significant effect on the run time. The run time does, however, vary approximately linearly with task deck size at any cropping ratio. There is an early sharp peak when the task deck size is close to 100. This was discovered early in testing, so the task deck size was sampled more finely near 100 to determine whether the peak was a numerical outlier. The data show that approaching 100 tasks from either direction causes the simulation time to increase. This is likely due to a switch in the task deck container type once the deck grows beyond 100 tasks.

3. Crop Ratio vs. Maximum Schedules

In addition to the task deck size, it is valuable to understand how the maximum and minimum numbers of schedules interact in determining simulation time. These parameters directly affect how “greedy” the scheduler is with simulation time. This in turn slightly affects the optimality of the final list of schedules generated.

[Figure 9. Simulation Run Time as a Function of Maximum Schedule Size and Schedule Crop Ratio: surface plot of normalized simulation time versus crop ratio (0 to 0.2) and maximum number of schedules (0 to 2000).]

The maximum number of schedules was varied from 10 to 2000, while the crop ratio was varied from 0.001 to 0.2. Data points that corresponded to a non-integer number of minimum schedules were rounded to the nearest integer, and cases with zero minimum schedules were not run. Figure 9 shows the resulting simulation time as a function of the maximum number of schedules and the crop ratio. As can be seen, crop ratio has a nonlinear effect on run time.
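The paper does not say how the power curve of Eq. (1) was fitted. One common approach, assumed here, is ordinary least squares on the logarithms, ln T = ln A + b ln n, and the C++ sketch below implements it. The names PowerFit, fitPowerLaw and predict are hypothetical. The R-squared the sketch reports is for the log-log regression, which may differ from how the paper computed its value of 0.9985. The checks use synthetic data, not the paper's measurements.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct PowerFit { double A; double b; double r2; };

// Fit T = A * n^b by ordinary least squares on ln T = ln A + b ln n
// (needs at least two distinct n values). r2 is the coefficient of
// determination of the log-log regression.
PowerFit fitPowerLaw(const std::vector<double>& n, const std::vector<double>& T) {
    assert(n.size() == T.size() && n.size() >= 2);
    const double m = static_cast<double>(n.size());
    double sx = 0.0, sy = 0.0, sxx = 0.0, sxy = 0.0;
    for (std::size_t i = 0; i < n.size(); ++i) {
        assert(n[i] > 0.0 && T[i] > 0.0);
        const double x = std::log(n[i]), y = std::log(T[i]);
        sx += x; sy += y; sxx += x * x; sxy += x * y;
    }
    const double b = (m * sxy - sx * sy) / (m * sxx - sx * sx);
    const double lnA = (sy - b * sx) / m;
    const double yMean = sy / m;
    double ssRes = 0.0, ssTot = 0.0;
    for (std::size_t i = 0; i < n.size(); ++i) {
        const double y = std::log(T[i]);
        const double r = y - (lnA + b * std::log(n[i]));
        ssRes += r * r;
        ssTot += (y - yMean) * (y - yMean);
    }
    return {std::exp(lnA), b, ssTot > 0.0 ? 1.0 - ssRes / ssTot : 1.0};
}

// Predicted normalized run time for n time steps.
double predict(const PowerFit& f, double n) { return f.A * std::pow(n, f.b); }
```

With an exponent near 2, as the paper's fitted value of 1.937 is, doubling the number of time steps roughly quadruples the run time. This is the quadratic dependence on $n$ carried into the run-time order estimate of Section III.D.4.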
The relationship between crop ratio and run time appears roughly sinusoidal, with varying magnitude and frequency: sometimes increasing the crop ratio increases the run time, and at other times it decreases it. Increasing the maximum number of schedules, by contrast, increases the simulation time linearly.

4. Simulation Time Estimation

So far, the simulation time has been plotted as a function of the more important simulation parameters individually. Ideally, a five-dimensional surface would describe the relationship among all these variables and yield a function that predicts execution time reliably. Unfortunately, gathering enough data to construct such a prediction is not practical. It is nonetheless useful to know the overall order of the problem given all the varying input parameters. Combining the trends from the previous figures gives the order shown in Eq. (2).

$O\left(D\,S_{max}\,n^{2}\,t_{sys}\right)$     (2)

In this equation, $D$ is the target deck size, $S_{max}$ is the maximum number of schedules, $n$ is the number of time steps and $t_{sys}$ is the mean system model execution time. Crop ratio was determined to have no reliable, quantifiable effect on the final simulation time.

IV. Aeolus: A Development Test Case

The capstone software requirements test called for a low-fidelity system simulation of a reasonably well-understood aerospace application. This section describes an end-to-end use case that comprehensively tests the input-output capabilities of the framework with which the analyst interacts directly. It includes descriptions of the subsystem models and the constraints imposed on the system, as well as visualizations of the orbits, targets and results. The results are then discussed in terms of whether they constitute valid and useful simulation results.

A. Mission Concept

An extreme-weather imaging satellite was chosen as the system for several reasons.
It allowed the use of large, geographically dispersed target decks, so that the scheduler had to choose among a subset of possible targets at each time step. It also made the output of possible schedules, with their large amounts of state data, easy to evaluate and analyze. Lastly, targets could be valued for importance, time-criticality and minimum image quality to exercise the value-function evaluations. The simulation was named Aeolus after the Greek god of wind. Targets were clustered in high-risk areas, including Southeast Asia and the Gulf of Mexico. Additional targets were scattered randomly around the globe as incidental weather anomalies. To further stress the system, it was also assumed that the sensor could generate useful images even in eclipse.

It was envisioned that users would submit weather phenomena to a ground station for approval. Operators would judge those phenomena and create a full list of approved targets, assigning each a value as they saw fit. Next, a full Horizon simulation would be run with the given target deck and the initial conditions of the satellite for a certain time period. The results would be used for mission analysis and command generation to decide which targets the actual satellite would capture.

B. Aeolus System Models

Simulating the Aeolus scenario required modeling subsystems and constraints, and creating dependency functions that specify the interactions between subsystems. The simulation was envisioned as a low-fidelity model suitable for conceptual evaluation. Many subsystems are therefore not direct simulations but approximations of performance. The following subsystems were modeled, with their main functions:

Access – Generates access windows for different types of tasks. The access subsystem created in the framework for this test is not a physically identifiable spacecraft subsystem.
However, as previously defined, a subsystem in the Horizon framework is anything that generates state data.
Attitude Dynamics and Control System – Orients the spacecraft for imaging.
Electro-Optical Sensor – Captures and compresses images when it has access to an imaging target, and sends the data to the Solid-State Data Recorder.
Solid-State Data Recorder – Stores imagery data until it is sent down to a ground station.
Communications System – Transmits imagery data when it has access to a ground station.
Power – Collects power usage information from the other subsystems, and calculates solar panel power generation and battery depth of discharge.

The subsystem dependencies were expressed as a series of functions specifying what each subsystem expected of the other subsystems. Three CONOPS-derived constraints were placed on the system. The first required that no information be sent to ground stations during imaging. Communications with ground stations and imaging were modeled as mutually exclusive tasks, so that the scheduler satisfies this CONOPS constraint implicitly. The other two are specified below:
The data recorder cannot store more than 70% of its capacity, so that space remains available if it becomes necessary to upload new flight software to the system.
The depth of discharge of the batteries cannot exceed 25%, to ensure that cyclic loading and unloading does not degrade the batteries' storage capability.

C. Simulation Parameters

The Aeolus satellite was simulated for three revolutions starting on August 1st, 2008. The simulation used a 30-second time step, 10 minimum schedules, 500 maximum schedules and 300 targets. Figure 10 shows the projected orbit of the Aeolus satellite for the simulated day, as well as the locations of the randomly generated weather targets and ground stations.

[Figure 10. STK Visualization of Aeolus Orbit, Targets and Ground Stations for Simulation]

D. Aeolus Simulation Results

The goal of the Aeolus test case was to confirm that, given a basic system to simulate, the results are reasonable and roughly optimal within the domain of the framework. Of particular importance to an imaging system are communications rates, data buffer state and power states. It is also crucial to examine the value-function results for the final schedule to determine whether they are reasonable. In this section, these variables are graphed over the simulation timeline to determine whether the model and framework are performing as expected.

[Figure 11. Power Subsystem State over Aeolus Simulation. Upper panel: battery depth of discharge (DOD), with event start markers; lower panel: generated solar panel power (W); both versus simulation time (s).]

Figure 11 shows the depth of discharge of the batteries and the power generated by the solar panels throughout the simulation. The depth of discharge never exceeds 25%, consistent with the CONOPS requirement that the batteries not experience significant cyclic loading. Compare the battery depth of discharge with the solar panel input power. When the satellite passes through penumbra and then umbra, power for imaging and downlinking data is drawn solely from the batteries. This causes the depth of discharge to increase at a rate proportional to the number of images taken. Toward the end of the simulation, imaging becomes less frequent. As expected, the maximum depth of discharge reached during eclipses also decreases.

[Figure 12. Data Storage and Downlink States over Aeolus Simulation. Upper panel: buffer usage; lower panel: downlink data rate (Mb/s); both versus simulation time (s).]

Figure 12 shows the percent usage of the data buffer and the communications data rate to the ground stations throughout the simulation. The buffer is constrained to remain below 70%, which produces the plateaus seen in the upper plot. These plateaus correspond to parts of the orbit where there is no access to a ground station, so the satellite cannot take more images. This may not be the best way to operate the system; a larger onboard data buffer would make sense. It does, however, demonstrate the functional operation of the framework constraints. In addition, each downlink bar coincides with a proportional decrease in buffer usage, as expected.

[Figure 13. Scheduler Event Values for the Aeolus Simulation: value of each scheduled event versus simulation time (s).]

Figure 13 shows the value of each event scheduled in the simulation and the time at which the event occurs. The average target value through the simulation is 5.2681, with a standard deviation of 2.8255. There is a definite trend for higher-valued targets to be captured towards the beginning of the simulation and lower-valued targets towards the end. Periods without access to new targets are sparsely scheduled. Periods of downlinking or multiple-target access, by contrast, are densely clustered with events. This plot indicates that the scheduler's time-domain search and cropping algorithms are working as intended. Together, these figures show that Horizon is performing as intended. Data like this is invaluable for the analyst in determining feasibility and validating system-level requirements in highly complex scenarios.

V. Conclusions

The Horizon Simulation Framework was developed to help the systems engineer better understand the role of subsystems, environment and Concept of Operations in system design iteration and system-level requirements validation. The framework provides a modular and flexible modeling capability, along with a wide array of modeling utilities, to generate operational schedules of systems-of-systems. Tests of the validity of the system returned useful results as well as ideas for future additions. With further additions, the Horizon Simulation Framework could become another powerful tool for the systems engineer, useful from preliminary design all the way through final requirements verification. The strengths and weaknesses of the framework observed by its designers are discussed below, along with current and planned future work.

A. Framework Strengths and Weaknesses

Ultimately, the modeling mantra still holds: “The better the model, the better the output.” Because the framework supports models at all levels of fidelity, any model that is run will generate data, which may or may not be useful. It is incumbent on the modeler to create an accurate system model that generates data of value to the analyst. Intelligent model creation can greatly simplify a problem and make the output significantly more useful.

Implementing multiple vehicles is more tedious and less intuitive than initially intended. A new version, currently (4/07) in the preliminary stages of testing, rectifies these drawbacks and adds a much more flexible multi-asset scheduling algorithm. This significantly increases the flexibility of the framework, but also increases simulation run time. The new version also provides significantly more powerful utilities. In its current incarnation, the user interface is the part of the framework that needs the most work.
The programming interface relies heavily on implementing new models in C++ on top of existing framework functionality. This can be troublesome if any important details of the system specification are omitted.

As demonstrated in the Aeolus test case, Horizon is capable of producing useful data given relatively simple subsystem models. It does not rely on existing knowledge of the type of vehicle simulated, so it is flexible enough to work with any system. It is programmed in C++ with an emphasis on platform independence, so it can run on any platform and is open to modification. Lastly, each subsystem is modular, so libraries of subsystems can be built that do not rely on other subsystems or on specific simulations. In this way, rapid system simulation and analysis can be performed using existing libraries without a loss of fidelity.

B. Current Development Work

Horizon is currently the topic of several master's theses and senior projects for students at Cal Poly San Luis Obispo. CPSTAR is in the process of making several major upgrades to the framework that greatly improve the user experience of conducting simulations. An architecture feasibility study of the proposed Cal Poly Longview Space Telescope is being conducted using the Horizon framework. In addition, another development test case, using a constellation of satellites to capture weather images, is planned for the new version of the framework.

C. The Future of Horizon

Many upgrades are being considered that would improve the utility of the Horizon Framework. Some of the major improvements planned for implementation in the near future include:
  A robust user interface in C# with which to enter simulation parameters, initial conditions and output types.
  Automatic code-template generation for subsystems, constraints and dependencies, based on a system diagram built in the GUI.
  Different scheduling algorithms built on optimizations that are possible when the system is constrained.
  Output visualization through either custom-built graphics tools or an automatic scenario generator for standard industry visualization tools (STK, Matlab).
  Parallelization of the main algorithm. This will rely on the ability to divide the schedule list into a number of independent simulation problems, because the schedules are essentially different instances of possible timelines. In the terminology of parallel computing, this is referred to as an “embarrassingly parallel” problem.
  Creation of a large asset/subsystem module library.

Acknowledgments

Special thanks to Dave Burnett of Cutting Edge Communications, LLC for high-level requirements identification for the concept simulation framework, as well as for the funding that spawned the framework. Additional thanks to Dr. John Clements of the Computer Science Department at Cal Poly for his programming expertise, guidance and suggestions.

References

i Larson, W. J. (ed.), and Wertz, J. R. (ed.), Space Mission Analysis and Design, 3rd ed., Microcosm, Inc., Torrance, CA, 1999.
ii Brown, Charles D., Elements of Spacecraft Design, AIAA Education Series, AIAA, New York, 2002.
iii Pisacane, Vincent L., Fundamentals of Space Systems, 2nd ed., Oxford University Press, New York, 2005.
iv STK, Satellite Tool Kit, Software Package, Version 6, Analytical Graphics, Inc., Exton, PA, 2005.
v FreeFlyer, Software Package, Version 6.0, a.i. solutions, Inc., Lanham, MD 20706.
vi Stodden, D. Y., and Galasso, G. D., “Space System Visualization and Analysis Using the Satellite Orbit Analysis Program (SOAP),” Proceedings of the 1995 IEEE Aerospace Applications Conference, Vol. 1, Issue 0, Part 1, 4–11 Feb. 1995, pp. 369–387.