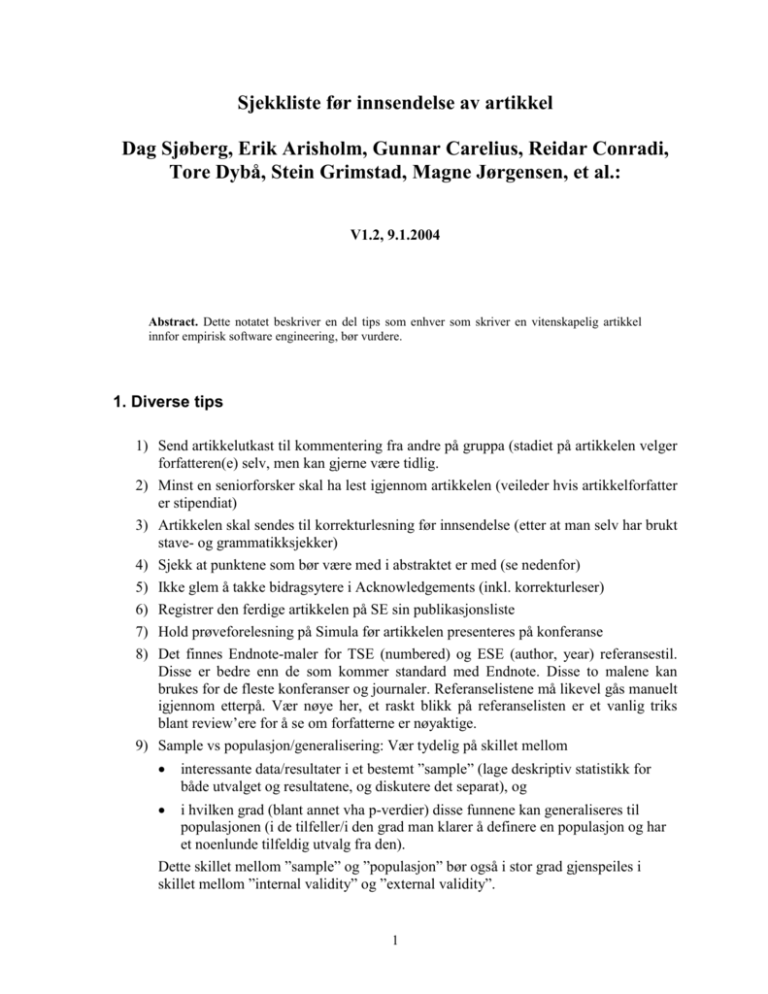

Checklist before submitting a paper


Dag Sjøberg, Erik Arisholm, Gunnar Carelius, Reidar Conradi, Tore Dybå, Stein Grimstad, Magne Jørgensen, et al.: V1.2, 9.1.2004

Abstract. This note lists tips that anyone writing a scientific paper in empirical software engineering should consider.

1. Miscellaneous tips

1) Send drafts of the paper to others in the group for comments (the author(s) decide at what stage, but early is fine).
2) At least one senior researcher must have read through the paper (the supervisor if the author is a PhD student).
3) The paper must be sent for proofreading before submission (after you have run spelling and grammar checkers yourself).
4) Check that the abstract contains the points it should (see below).
5) Do not forget to thank contributors in the Acknowledgements (including the proofreader).
6) Register the finished paper in SE's publication list.
7) Give a trial presentation at Simula before the paper is presented at a conference.
8) There are Endnote templates for the TSE (numbered) and ESE (author, year) reference styles. They are better than the ones that ship with Endnote, and the two templates can be used for most conferences and journals. The reference lists must still be checked manually afterwards. Be careful here: a quick glance at the reference list is a common trick among reviewers to see whether the authors are accurate.
9) Sample vs. population/generalization: Be clear about the distinction between interesting data/results in a particular sample (produce descriptive statistics for both the sample and the results, and discuss them separately) and the extent to which these findings can be generalized to the population (using p-values, among other things), in those cases and to the extent that you can define a population and have a reasonably random sample from it. This distinction between sample and population should also largely be reflected in the distinction between internal validity and external validity.

13. Consider carefully, and discuss with others, where the paper belongs.
As a general rule, conference papers should be seen only as a step towards a journal article. Do not be modest (but not unrealistic either) about where the paper is sent. So far we have probably been too modest. PhD students must agree with their supervisor on whether, and if so where, a paper is submitted.
14. Check that the argumentation is coherent. Pay particular attention to the connection between the conclusion and the main results of the study. In particular, the conclusion must answer the questions posed in the introduction.
15. Carry out a final literature search to ensure that all important (including recent) papers on the topic are cited.
16. Reviewers for journals and conferences often use details of the presentation as indicators of the quality of the whole, which is often harder to assess. This means that matters that are in principle less important can become very important, e.g.:
- If a variable is divided into classes, a justification for the division MUST be given.
- If a particular statistical test is chosen, a justification for it and a short description of whether the assumptions for using the test are met MUST be given.
- If the relevance of the study and the results is not entirely obvious to everyone (and this means absolutely everyone who could conceivably review the paper), a fair amount of space MUST be spent on stating the relevance. On this point it is hardly possible to be too explicit for the reader, although one should of course not fill page after page with the obvious.
17. Let the paper rest for at least 2-3 days (preferably a week) between the second-to-last and the final read-through. This increases the chance that you discover weaknesses and omissions. Several read-throughs within a short time, e.g. the night before a conference deadline, are risky.
18. Do not be afraid to send the paper to others outside our group for review, but make sure it is a win-win situation, either through payment or through some other form of return favour. Without such a return favour you cannot expect comments of much value.
If a return favour is difficult, e.g. when asking a guru in the field, pick out one question you have and ask only that.
19. Contacting people abroad: Be polite. If you do not know the person, almost always start with "Dear <full name>" and end with "Best regards, <name>". For example, never begin with "Hi" when writing to a professor you do not know; it will be perceived as disrespectful. If the person you are communicating with is more senior than you, always leave it to them to lower the level of formality, for example by dropping "Dear" or switching to first names only. It is worse to be too informal than too formal. Remember to use "please"; one "please" too many is better than one too few. Leaving out "please" can at times come across as downright rude.
20. Dress code for presentations: dress like the audience, but slightly more formally/smartly.

2. Structure of the abstract

Objective:
Rationale:
Design of study:
Results:
Conclusion:

Most of what you write under these headings can go into the abstract (see the examples below), so the writing effort is not wasted either. (Ref. Barbara Kitchenham.)

Two examples of using this structure in an abstract:

Example 1:
Objective: Improvement of uncertainty assessments of software development cost estimates.
Rationale: The uncertainty of cost estimates is important input to the project planning process, e.g., to allocate a sufficiently large budget to deal with unexpected events. Studies suggest that uncertainty assessments are strongly biased towards over-confidence, i.e., that software cost estimates are believed to be more accurate than they really are.
Design of study: Analysis of empirical evidence on strategies for cost estimation uncertainty assessment. We evaluate the improvement potential of several extensions to and replacements of unaided, human judgment-based cost estimation uncertainty assessment.
Results: Preliminary evidence-based guidelines for cost uncertainty assessment in software projects: (1) Do not rely on unaided, intuition-based processes, (2) Apply strategies based on an "outside view" of the project, (3) Do not replace expert judgment with formal models, (4) Apply structured and explicit judgment-based processes that improve with more effort, (5) Combine uncertainty assessments from different sources through group work, not through mechanical combination, (6) Use motivational mechanisms with care and only if it is likely that more effort leads to improved assessments, (7) Frame the assessment problem to fit the structure of the uncertainty-relevant information and the assessment process.

Example 2:
Objective: Our objective is to increase the realism of minimum-maximum effort intervals in software development projects.
Rationale: Minimum-maximum effort intervals may be important input in the project planning process, enabling properly sized contingency buffers and realistic project plans. Unfortunately, several studies suggest that unaided, expert judgment-based minimum-maximum effort intervals are systematically too narrow, i.e., there is a bias towards over-confidence in software estimation work. We test the hypothesis that information about previous estimation error of similar projects increases the realism in the minimum-maximum effort intervals.
Design of study: Nineteen realistically composed estimation teams of three or four software professionals estimated the most likely effort and provided minimum-maximum effort intervals of the same software project. Ten of the teams, the Group A teams, received no minimum-maximum effort interval assessment instructions. The remaining nine teams, the Group B teams, were instructed to start the minimum-maximum effort interval assessment with a recall of the distribution of the estimation error of similar projects.
Results: All Group B teams provided minimum effort values corresponding to their recalled error distribution of similar projects. However, only three out of nine teams provided maximum effort values corresponding to the error distribution. This result, together with a comparison of the average minimum-maximum effort values of the Group A and B teams, suggests that information about previous estimation error on similar projects mainly had an impact when assessing the minimum effort values.
Conclusion: It is not always sufficient to be aware of the distribution of estimation errors on similar projects to remove too optimistic assessments of maximum effort. To ensure maximum effort values reflecting the previous estimation error distribution it may be necessary to instruct the estimation teams how to apply the historical information and only allow minor adjustments.

3. Layout

1) There is a Word LNCS template for formatting papers.
2) The document looks better with tables and figures placed at either the top or the bottom of the page. Use anchors in Word. Put figures and tables in a text box so that the caption (above tables and below figures) follows the object.
3) To make it easier to edit the layout and to convert between different layouts (one- and two-column, etc.), it is important to be consistent in the use of styles for headings, body text (both indented and non-indented), numbered and bulleted lists, and captions. Use a hard page break (Ctrl-Enter) instead of many line breaks, and a "soft" hyphen (Ctrl+"-") instead of a "hard" one ("-") when words must be split. Also use indentation and tabs instead of resting your thumb on the spacebar.

4. For papers that describe experiments

4.1 Checklist for randomized two-group experiments

This list is recommended for use in medicine; more information is available at www.consort-statement.org. The site has the article that presents the checklist and an article that explains each item and gives examples.
In addition, there are separate links to the checklist itself and to a flow diagram that can be used to show how the participants in the experiment "flow" through the phases from selection, via treatment, to follow-up and analysis.

Checklist when Reporting Parallel-Group Randomized Trials [1]

| Paper section and topic | Item no. | Description | Reported on page no. |
| --- | --- | --- | --- |
| Title and abstract | 1 | How participants were allocated to interventions (e.g. "random allocation," "randomized," or "randomly assigned"). | |
| Introduction | 2 | Scientific background and explanation of rationale. | |
| Methods: Participants | 3 | Eligibility criteria for participation and the setting and locations where the data were collected. | |
| Methods: Interventions | 4 | Precise details of the interventions intended for each group and how and when they were actually administered. | |
| Methods: Objectives | 5 | Specific objectives and hypotheses. | |
| Methods: Outcomes | 6 | Clearly defined primary and secondary outcome measures and, when applicable, any methods used to enhance the quality of measurements (e.g. multiple observations, training of assessors). | |
| Methods: Sample size | 7 | How sample size was determined and, when applicable, explanation of any interim analyses and stopping rules. | |
| Methods: Randomization, sequence generation | 8 | Method used to generate the random allocation sequence, including details of any restrictions (e.g. blocking, stratification). | |
| Methods: Randomization, allocation concealment | 9 | Method used to implement the random allocation sequence. | |
| Methods: Randomization, implementation | 10 | Who generated the allocation sequence, who enrolled participants, and who assigned participants to their groups. | |
| Methods: Blinding (masking) | 11 | Whether or not participants, those administering the interventions, and those assessing the outcomes were blinded to group assignment. | |
| Methods: Statistical methods | 12 | Statistical methods used to compare groups for primary outcome(s); methods for additional analyses, such as subgroup analyses and adjusted analyses. | |
| Results: Participant flow | 13 | Flow of participants through each stage (a diagram is strongly recommended). Specifically, for each group report the number of participants randomly assigned, receiving intended treatment, completing the study protocol, and analyzed for the primary outcome. Describe protocol deviations from study as planned, together with reasons. | |
| Results: Recruitment | 14 | Dates defining the periods of recruitment and follow-up. | |
| Results: Baseline data | 15 | Baseline demographic and other characteristics of each group. | |
| Results: Numbers analyzed | 16 | Number of participants in each group included in each analysis and whether the analysis was by "intention to treat." State the results in absolute numbers when feasible (e.g. 10 of 20, not 50%). | |
| Results: Outcomes and estimation | 17 | For each primary and secondary outcome, a summary of results for each group and the estimated effect size and its precision (e.g. 95% confidence interval). | |
| Results: Ancillary analyses | 18 | Address multiplicity by reporting any other analyses performed, including subgroup analyses and adjusted analyses, indicating those prespecified and those exploratory. | |
| Results: Adverse events | 19 | All important adverse events or side effects in each intervention group. | |
| Discussion: Interpretation | 20 | Interpretation of results, taking into account study hypotheses, sources of potential bias or imprecision, and the dangers associated with multiplicity of analyses and outcomes. | |
| Discussion: Generalizability | 21 | Generalizability (construct and external validity) of the trial findings. | |
| Discussion: Overall evidence | 22 | General interpretation of the results in the context of current evidence. | |

[1] The CONSORT Statement (http://www.consort-statement.org)

5. Related work

- How not to get a paper accepted (IRIS, sometime in the 1990s)
- misc. literature on method
- misc. literature on language (how to write in science, The Elements of Style)

Appendix A: Janice Singer: APA Style Guidelines for reporting experiments (summary by Reidar Conradi)

From Janice Singer, National Research Council, Canada: "Using the American Psychological Association (APA) Style Guidelines to Report Experimental Results", 5 p. See http://wwwsel.iit.nrc.ca/~singer/.

This report outlines how experience papers on experimentation should be written. The items are:

1. Abstract
- Summary of the paper: hypothesis, method, studied population, and result overview.
- An abstract has two purposes: 1) to allow a reader to decide if the article is interesting, 2) to allow researchers to locate and retrieve articles (by keyword searches).
- The abstract must be useful as a free-standing document.
- The results should be reported without evaluative comments!
- APA Style Guide: maximum 960 characters (i.e. less than half a page).

2. Introduction
- Describe the specific problem under study.
- Give a summary of what happened in the study and why, e.g. discussing questions regarding the point of the study, the relationship between the experimental hypothesis and design, and the theoretical implications of the study.
- Give a literature survey to motivate the given study, and treat controversial issues fairly! Statements of facts are generally more acceptable than lengthy excursions into opinions! Start out broadly, and then become specific as the current research is introduced.
- End with a list of the variables manipulated and a formal statement of the hypotheses tested. The rationale of each hypothesis should be clearly defined. This can include expected results.

3. Method
- Describe how the study was conducted, i.e. a "cookbook" for the research. This is needed to assess the validity of the study, i.e. whether the study can justify the conclusions drawn. It also allows other researchers to perform replications and meta-analysis.
- See also subsections 3.1-3.3 below.
3.1 Subjects/participants
- Describe the human subjects in the experiment:
  * Who participated, using demographic variables such as age, education, experience etc.?
  * How many participants were there?
  * How were they selected, e.g. from a course, being paid etc.?
- NB: Experimental design, such as allocation into groups, is not discussed here (see Procedure).
- A "subject" may also be a piece of software or a software process. It is important for replication that the necessary information is specified, e.g. what company participated, was the company given free software etc.? This may influence the motivation of the subjects, and thus the results of the study.

3.2 Apparatus/Materials
- Describe the "apparatus" used in the experiment, in enough detail to allow replication or meta-analysis (e.g. the execution speed of the computer).
- All software artifacts must be described (or referred to), possibly in appendices.
- NB: Use of the apparatus/materials is reported in Procedure below.

3.3 Procedure
- Describe the steps in planning and executing the research.
- Experimental designs (grouping x treatments x artifacts) must be explained, as well as information on randomization, counterbalancing, and other control features of the design.
- Participant instructions (assignment descriptions) must be outlined.
- NB: The APA Style Guide does not outline an explicit design section, but one may be included, and it must have the information needed for meta-analysis: dependent variables, all levels of independent variables, and whether they are within- or between-subjects.

4. Results
- Summarize the collected data and how the data was managed.
- Explain all quantitative and qualitative analysis, and match it with the corresponding hypotheses.
- Report inferential statistics, with the value of the test, the probability level, the degrees of freedom, and the direction of the effect. Descriptive statistics (e.g. mean, median, and deviation) may be included.
- In the APA Style Guide, basic assumptions, such as rejecting the null hypothesis, are not reviewed. However, questions about the appropriateness of a particular test (justification) must be discussed.
- NB: no interpretation of results here (that belongs in Discussion below), just reporting.

5. Discussion (interpretation)
- Evaluate results and their implications, especially with respect to the original hypothesis.
- Try to answer questions, e.g. how the original problem has been resolved and what conclusions can be drawn.
- Also briefly discuss the difficulties encountered when conducting the study, or unexpected results.
- If several experiments are presented, summarize both each experiment and the aggregate generalizations that can be drawn.

6. References
- This describes the APA reference style (RC: ignored here).

7. Appendices
- Misc. tables and figures, but the APA Style Guidelines state that it is too early to give any guidelines here.
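
The reporting items under "4. Results" in Appendix A (value of the test, degrees of freedom, direction of the effect, plus descriptive statistics per group) can be illustrated with a small sketch. It is only an illustration under stated assumptions: the choice of Welch's unequal-variance t-test, the function names `welch_t`, `describe` and `report`, and the effort data are all hypothetical and not taken from the checklist. It uses only the Python standard library, so it reports the test value and degrees of freedom but not the probability level.

```python
import math
import statistics


def welch_t(a, b):
    """Return (t, df) for Welch's unequal-variance t-test on samples a and b."""
    # Squared standard error of each mean (sample variance uses n - 1).
    se2_a = statistics.variance(a) / len(a)
    se2_b = statistics.variance(b) / len(b)
    t = (statistics.mean(a) - statistics.mean(b)) / math.sqrt(se2_a + se2_b)
    # Welch-Satterthwaite approximation of the degrees of freedom.
    df = (se2_a + se2_b) ** 2 / (
        se2_a ** 2 / (len(a) - 1) + se2_b ** 2 / (len(b) - 1)
    )
    return t, df


def describe(label, xs):
    """One line of descriptive statistics for a single group."""
    return (
        f"{label}: n={len(xs)}, mean={statistics.mean(xs):.2f}, "
        f"median={statistics.median(xs):.2f}, sd={statistics.stdev(xs):.2f}"
    )


def report(label_a, a, label_b, b):
    """Descriptive lines for both groups plus test value, df and direction."""
    t, df = welch_t(a, b)
    if t > 0:
        direction = f"{label_a} higher than {label_b}"
    elif t < 0:
        direction = f"{label_a} lower than {label_b}"
    else:
        direction = "no difference in means"
    return [
        describe(label_a, a),
        describe(label_b, b),
        f"Welch t({df:.1f}) = {t:.2f}, {direction}",
    ]


# Hypothetical effort data in hours, made up for illustration only.
group_a = [1, 2, 3, 4, 5]
group_b = [2, 3, 4, 5, 6]
for line in report("Group A", group_a, "Group B", group_b):
    print(line)
```

The probability level would come from the t distribution with the computed degrees of freedom, which a statistics package can supply. In line with item 16 of the tips, a paper would also need to justify why this particular test was chosen and whether its assumptions hold for the data.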