Project

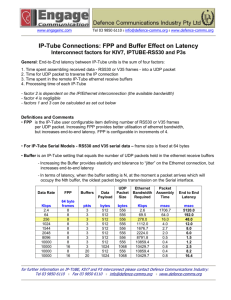

advertisement

The Computer Communication Lab (236340)

Spring 2003

TCP/IP enabled DSP platform

Final Report

Submitted by:

Zusman Dmitry

Stolin Yulia

Kupferberg Benny

Steiner Andre

Supervisors:

Dr. Dani Raz

Rami Cohen

319160867 szusman@t2.technion.ac.il

313883191 sjulia13@t2.technion.ac.il

033829391 sbennyk@t2.technion.ac.il

015281843 avsteiner@newmail.net

1.Introduction

Digital Audio Broadcasting (DAB) offers CD-quality radio reception

and eliminates distortion in radio transmissions. Digital radio

makes use of one-way broadcasting to all receivers.

Since the main source for such streaming is the Internet, the digital

radio server should be able to forward of IP packets (mostly UDP)

to the digital radio network.

Since the transmission is one way and there is no feedback from

the receivers of the data, a unique design is needed at the server

to assign transmission priorities to the content.

BlackFin DSP overview

The BlackFin DSP is the latest general-purpose digital signal

processor from Analog Devices one of the leaders in the analog

and digital signal processing chip industry. The BlackFin processor

run at 300 Mhz and includes a unique execution core that enables

a mixture of RISC and DSP features in a single instruction flow.

This DSP is therefore ideal for application that packs together lowlevel signal processing and the high level application that makes

use of such signal processing.

Project Description

The objective of the project is to build a platform for an IP-DSP

application using the BlackFin processor, that will serve later

laboratories to build specific applications.

IP-DSP is a device acting as a UDP/IP end point that is optimized

for DSP application.

Being a dedicated application device, the IP-DSP has minimal

memory for code and data, which imposes limitations of the

implementation of the IP stack.

The goal of the project is to build an infrastructure platform for IPDSP applications using the BlackFin processor.

2.General Layout

Information flow on input

Let try to examine the general structure of IP stack.

Recall that operating system contains device driver for software that

communicates with hardware I/O devices and handle interrupts. The code

is hidden in an abstraction “device”.

The following figure illustrates the flow of packets from the network

interface hardware through the device driver in the operating systems to

an input queue associated with the device:

queue for

packets sent

to IP

Device for

net

Hardware

for net

operating system

hardware

From Network Interface to IP

In order to pass packets from the ip layer to the data link layer and vice

versa and to achieve hardware independence a general data structure is

defined called netif. This data structure includes the local IP address

associated with the interface, IP broadcast address, MTU of the network

and two queues: one for incoming packets and one for outgoing packets.

When a new packet arrives the device driver must invoke procedure ip_in

in order to enqueue the packet to the queue of incoming datagrams and

wake the ip receive process.

The following figure illustrates communication between the network

device driver and the process that implements IP, which uses queue for

incoming datagrams. When a datagram arrives, the network input process

enqueues it and sends a message to the IP process.

IP

Process

queue for packets

sent to IP

From IP to UDP

Because UDP is rather simple, UDP software module does not execute as

a separate process. It consists of conventional procedure that the IP

process executes to handle an incoming UDP datagram. These procedures

examine the destination UDP protocol port number, and use it to select an

OS queue (port) for incoming datagram. The IP process deposits the UDP

datagram on appropriate port, where an application program can extract

it.

The following figure illustrates the flow of datagrams through higher

layers of software. The IP process sends incoming UDP datagram directly

in separate ports where they can be accessed by application programs.

ports for UDP

datagrams

UDP layer

IP

Process

From UPD to Socket

As shown in previous figure, UDP demultiplexes incoming user

datagrams based on protocol port number and places them in queue.

When an application program, for example socket interface, needs to

receive a UDP datagram it must access the UDP queue and dequeue the

packet.

Global overview of incoming datagrams:

The following figure illustrates the input process structure showing the

path of data between the network hardware and an application program.

Socket receive

application programs

queues for UDP

datagrams

UDP in

IP

receive

Process

queue for packets

sent to IP

Device for

net

operating system

hardware

Hardware

for net

Information flow on output

From Socket to UDP:

When the function send or sendto is called with a buffer to be sent these

functions directly call the udp_send function (passing a pointer to the

buffer to be sent).

From UDP to IP:

Because UDP does not guarantee reliable delivery, the path for outgoing

UDP traffic is quite simple. The sending machine does not keep a copy of

the datagram nor does it need to time retransmissions. Once the datagram

has been created, it can be transmitted and the sender can discard its

copy.

Any process that sends a UDP datagram must execute the UDP

procedures needed to format it, as well as the procedures needed to

encapsulate it and pass the resulting IP datagram to the IP layer.

The following figure illustrates the path of outgoing UDP datagrams to IP

layer.

UDP out

IP

Layer

From IP to Network output:

Once UDP produces a datagram, it passes the datagram to IP for delivery.

IP passes it to the network output process.

The following figure illustrates the path of outgoing from IP layer to

queue of network interface.

IP

Layer

queue for outgoing

packets

From Network output to hardware:

Queue allow processes to enqueue packet for output, and continue

execution without waiting for the packet to be sent. Meanwhile the

hardware can continue transmitting packets simultaneously. The process,

performing output, enqueues its packet and calls a device driver routine to

start the hardware. When the output operation completes, the hardware

interrupts the CPU.

Thus from the point of view of the IP process, transmission of packets

occurs automatically in the background. As long as packets remain in a

queue, the hardware continues to transmit them.

Of course, the output queue has finite capacity and can become full if the

system generates packets faster than the network hardware can transmit

them. We assume that such cases are rare, but if they do occur, processes

that generate packets must make a choice:

discard the packet

block until the hardware finishes transmitting a packet and makes

more space available.

In our case we chose to implement the first strategy option. Also we will

try to make the implementation as flexible as possible, so it will be quite

easy to change the queue module that will implement the second strategy.

The following figure illustrates network output and the queue that

buffered output packets.

queue for

outgoing

packets

Device for

net

operating system

hardware

Hardware

for net

Global overview of outgoing datagrams:

The following figure illustrates the output process structure showing the

path of data between the an application program and network hardware.

Socket output

application programs

UDP output

IP layer

queue for packets

outgoing from IP

Device for

net

…

operating system

hardware

Hardware

for net

3.Modules

3.1. Common data structures

struct packet_out {

char *buf;

int buflen;

char *udph;

int udphlen;

char *iph;

int iphlen;

}

the processing of outgoing datagrams in the UDP and IP layers is done

under the context of a user thread. Each layer adds its own header a lets

the packet_out point to the header, and updates the size.

only pointers are used since the size of the buffer (the data) is variable

and also the size of the IP header might be variable in case options are

supported. Although the UPD header size is constant, using a pointer for

UDP might prove useful for using the same struct for future

enhancements to TCP.

struct packet_in {

IPaddr sIP;

unsigned short sport;

char *data;

unsigned int datalen;

unsigned int offset;

}

Oher data structures used:

A buffer that stores incoming packets received from the driver.

The netif struct which is an abstraction of a network interface. It includes

the local IP address, the maximum transfer unit (MTU) and an array to

store outgoing packets. Each entry in the array includes a buffer to store a

single packet and the size of the packet. The IP address and the MTU can

be configured by the programmer.

3.2. IP

3.2.1.

General description

IP header:

Version

H. Len

Identification

TTL

Options

Type of service

Total length

Flags

Fragment offset

protocol

header checksum

Source address

Destination address

Padding

Data

The IP layer implementation includes the interface function

ip_send which is called by the upper layers (UDP in our case) and

ip_receive which is a separate thread running in an infinite loop

that processes incoming datagrams. The ip_send function runs

under the context of a user thread.

Using distinct threads rather than running under an existing context

increases the level of concurrency. This is extremely important

since processing of incoming datagrams in the IP layer requires

significant time. Thus, creating a separate process allows the data

link layer to continue the processing of other incoming datagrams

and the user’s application to continue without waiting for the ip

layer to end its processing.

Some systems choose to implement IP as a single process handling

both incoming and outgoing datagrams. This design is especially

useful in routers, where the software cannot be easily partitioned

into input and output parts because a router may generate output

while processing an incoming datagram. Another advantage of this

design is achieving fairness between processing incoming and

outgoing datagrams by processing an incoming datagram and

outgoing datagram alternately.

We chose to use a different thread only for processing incoming

datagrams, mainly because our implementation is intended to run

on a host and not on a router. Thus, the processing of an incoming

datagram varies greatly from the processing of outgoing datagrams.

Fairness between incoming and outgoing datagrams is left to the

fair round robin scheduling of the VDK kernel. Processing an

outgoing datagram is done under the context of a user process since

the processing of outgoing datagrams in a host is rather simple.

Once processing outgoing datagrams in the IP layer is done, the

headers of the UDP and IP layer and the data are copied to the

buffer of outgoing messages. At this point the IP layer returns

control to the user function without waiting for the actual sending

of the datagram. The consumer thread is awakened and calls the

driver function to send the datagram.

Our implementation of the IP layer assumes there is only one

interface.

IP options are not supported (in case a datagram with options

arrives, the datagram is processed like a regular datagram,

disregarding the options).

3.2.2.

Data structures

IP Structure:

struct

ip

{

u_char ip_verlen;

u_char ip_tos;

u_short ip_len;

short ip_id;

short ip_fragoff;

u_char ip_ttl;

u_char ip_proto;

short ip_cksum;

IPaddr ip_src;

IPaddr ip_dst;

u_char ip_data[1];

};

/* IP version & header length (in longs) */

/* type of service

*/

/* total packet length (in octets)

*/

/* datagram id

*/

/* fragment offset (in 8-octet's)

*/

/* time to live, in gateway hops

*/

/* IP protocol

*/

/* header checksum

*/

/* IP address of source

*/

/* IP address of destination

*/

/* variable length data

*/

global variables (not including reassembly sub module) :

ipackid – id given to every packet used in ip_id field in ip struct.

multicast_table - a table of multicast addresses to which the

host is subscribed.

IP-mutex – a global semaphore to protect global variables of the

IP layer used by different threads calling the IP functions.

The buffer space available and items available semaphores, the

consumer entry and producer entry variables – used to

synchronize between the user thread that uses the IP functions

and the consumer threads (described later).

3.2.3.

Main Functions

1. int ipsend(IPaddr dest_addr, packet_out *packet, int datalen,

unsigned char proto, unsigned char ptos,

unsigned char ttl)

ipsend - constructs the IP header and sends the packet

parameters: the destination IP address, the packet to be sent,

the size of the data (including the UDP header size), the

protocol field, the tos field and the time to live.

the version field is set to IP version 4, the IP header len is set

to 5 (i.e. a header of 20 bytes).

the packet iph field is set to point to the IP header and the

iphlen field in the packet is set to 20 bytes (IP_MINHLEN).

note that the checksum is not computed since fragmentation

might be needed

last, the function calls ipputp for fragmentation and actual

sending.

note that a global mutex protects all global variables used by

IP since it can run in the context of many user threads.

2. Ipputp function - send a packet to the output queue and

handle fragmentation

parameters: pack - a packet to be sent.

The function checks the length of the entire datagram

(according to the total length field in the IP header) against the

local MTU, which is written in the interface. If the total length

is smaller than the MTU the function simply converts the IP to

net byte order, computes the checksum and calls synch_write

to write the data to the buffer of outgoing messages.

In case fragmentation is needed - fragmentation is not done

using the IP fragmentation mechanism but rather each

fragment is a different packet with a different packet id. The

buffer pointed at by packet out is divided into smaller packets.

The size of each smaller packet (except for the last packet) is

MTU-ip_heade_size - 4th layer header size, rounded down to

a multiple of 8. Each packet is given a different id, and the

total length is changed. Only the last packet is handled

separately since its size might be smaller. Once the header is

fixed the function calls synch_write in order to copy the data

and the headers to the outgoing buffer.

Note that sending a datagram larger than MTU as different

datagrams rather than using the fragmentation mechanism has

2 main advantages:

1. The receiver doesn’t need to use reassembly which is a

costly operation that might require system resources like

memory allocation, timers etc.

2. If the usual fragmentation mechanism is used and one

fragment is lost, the whole packet must be resent rather

then resending a single fragment. In our approach, only a

single packet needs to be resent.

3. synch_write function. used by the IP layer to copy 4th layer

header, IP header and data to the buffer of outgoing packets. This

buffer is actually an array, where each entry can hold data up to a

predefined size. It uses 2 semaphores (buffer_space_available and

items_available) to implement a well known solution to the

producer - consumer problem. First the function checks whether

the buffer has at least one free entry, by using the buffer space

available semaphore. Therefore, the function first waits on the

buffer_space available semaphore.

After an item is produced the producer entry, which indicates the

entry number to which the next item produced will be copied, is

cyclically incremented. The counter of the items_available

semaphore is incremented using signal since a new item is now

ready to be consumed.

Note that the items_available semaphore is initialized to 0 and the

buffer_space_available semaphore is initialized to the size of the

array.

The consumer thread

A separate thread is used to handle sending an outgoing datagram

over the interface. Using a different thread to carry out this task has

2 main purposes:

1. Allowing the user thread to regain control without waiting for

the packet to be sent over the interface.

2. Making the IP layer driver independent.

The thread consumes an outgoing datagram from the buffer of

outgoing messages and calls the driver in order to send the

datagram. Two semaphores are used to synchronize between this

thread and the IP layer, which runs under the context of a user

thread. The function first waits on the items available semaphore

until there is an item to consume. Then the function calls the

driver with the packet to be sent, using the consumer_entry

variable which points to the entry in the buffer where the next

packet to be sent is stored. The entry includes both the data and the

size of the data in bytes. After that the thread cyclically increments

the consumer_entry variable and signals the buffer space available

semaphore, since the entry is now free to be used by the producer.

The IP-receive thread

Handles incoming datagrams.

The process includes an infinite loop in which a datagram is

extracted from the incoming datagram queue (this part

implementation is driver dependent. In our case it includes an

infinite loop in which the get_message function is called until a

new packet is found). If no packet has arrived, the thread yields in

order to allow other threads to utilize the CPU (this can be

improved in case the driver’s get_messasge function blocks the

calling thread if no packet has arrived) .once a new datagram

arrives, the datagram is processed.

The function converts the relevant fields of the ip header from net

to host byte order.

Checks whether the IP version is indeed version 4 and that the

destination address is not in class E. If one of the above isn’t true,

the datagram is discarded.

Verifies that the checksum is correct (else discards the datagram).

Now the process should check whether the host should accept the

packet, according to the destination address field in the IP header

(since the project is designed for a host and not for a router, if the

datagram is not destined for the host it is discarded and not

forwarded).

There are 4 cases in which the datagram is accepted in layer –3:

1. The destination IP address is a local address of the host.

2. The destination IP address is a limited broadcast (all 1’s) or

directed broadcast address (a local network number and host

number is all 1’s). Directed broadcast is checked using a

helper function - check_directed_broadcast. This function

finds the class of the local IP address and according to the

class checks whether the bytes representing the host are all

1s.

3. The destination IP address is this computer address (0.0.0.0).

4. The destination IP address is a multicast address of a group

to which the host is subscribed. In case the address is a class

D address, a helper function named lookup_multicast checks

if the address is registered in the global table of multicast

addresses.

If none of the following holds– discard the message. Else the

datagram is indeed destined to the local machine.

Now we check whether the next protocol field indicates UDP.

Else the message is discarded.

Now call ipreass function to handle reassembling of datagrams, if

needed.

If reassembling is not needed or the new packet is the last

fragment of a packet (the packet is reassembled), call udp_in with

the packet as argument. Before calling udp_in the offset field in

the packet is set according to the IP header size, to indicate to the

4th layer where its header begins.

3.2.4.

Reassembly sub module:

Reassembly requires the IP on the receiving machine to

accumulate incoming fragments until a complete datagram can be

reassembled. Because IP does not guarantee order of delivery, the

protocol requires IP to accept fragments that arrive out of order or

even intermixed with fragments from other datagrams.

Data structures used:

A single array called ipfqt, where each entry corresponds to a

single datagram. Each entry contains: a flag that indicates

free/valid, the source IP address and the identification field, a

time to live counter and a pointer to the linked list of fragments.

Fragments belong to the same datagram if they have identical

values in both their source address and IP identification fields.

A global buffer is used in order to reassemble a packet, once all

fragments have been collected.

Since IP is an unreliable delivery mechanism datagrams can be

lost on the internet. Thus, if a fragment is lost IP will never

recover the datagram.

To keep lost fragments from consuming memory that can be used

for other fragmented packets, and to keep IP from becoming

confused by reuse of the identification field, a special thread

(ipftimer) is used. This procedure updates the time-to-live field of

a fragments queue and deletes fragments whose time-to-live field

has expired.

The fragments are added to the list in sorted order, according to

their fragment offset field in the IP header making it easier to

check whether a complete datagram has arrived.

Note that since the global variables are used for reassembly are

used by 2 different threads, synchronization between them must

be used. This is done using a semaphore initialized to 1.

The reassembly sub module contains one interface function:

struct packet_in * ipreass (struct packet *pack)

pack – a pointer to a packet.

returns packet if reassembly is complete, 0 otherwise.

The function first checks according to the fragment offset field

and the MF field that reassembly is needed. Reassembly is

needed if the fragment offset isn’t zero or if the fragment offset is

zero and the MF bit is set. Else the function returns the packet.

Then checks if there is already an existing entry in the table for

the datagram. If a match was found the fragment is added to the

list and the function checks whether all fragments have arrived. If

no match was found the first unused entry in the array is used, the

source and identification fields are copied and the fragment is

added to the new queue.

Other functions used for reassembly:

ipfadd - add a fragment to an IP fragment queue.

parmeters: a pointer to an entry in the ipfq table and the packet to

be inserted to the queue. if the entry isn't valid or if the queue is

full returns false, else restarts the TTL timer and returns true.

note that the check whether the entry is valid is necessary since

the timer might release all fragments in the queue in case ttl is

over.

ipfjoin - join fragments, if all collected

parameters: - the number of the relevant entry in the ipfqt array.

The check whether all fragments have arrived is done as follows:

using a variable indicating the offset (initialized to zero) iterate

over the entire queue and check whether the offset of the current

fragment exceeds the offset variable. If it does then there is a

missing fragment. Else – compute the expected offset of the next

fragment, by adding the current fragment length to the offset

variable and continue to the next iteration. If all fragments have

been collected, the MF bit of the last fragment must be zero.

ipfcons - construct a single packet from an IP fragment queue.

This function is called by the ipfjoin function in case all

fragments have been collected.

parameters: the number of the relevant entry in the ipfqt table.

Each fragment in the queue of fragments includes IP header and

data. The function extracts the first fragment and copies it to the

reassembly buffer, including the IP header. The rest of the

fragments are extracted from the queue and copied without the IP

header. The function sums the number of bytes copied to the

reassembly buffer using the off variable. After all fragments have

been extracted and copied, the IP header of the reassembled

packet is fixed: the fragment offset field is now 0, and the total

length field in the IP header is changed to reflect the new size of

the reassembled datagram, according to the total size computed in

the iterations.

The check whether all fragments have arrived is done as follows:

using a variable indicating the offset (initialized to zero) iterate

over the entire queue and check whether the offset of the current

fragment exceeds the offset variable. If it does then there is a

missing fragment. Else – compute the expected offset of the next

fragment, by adding the current fragment length to the offset

variable and continue to the next iteration.

If all fragments have been collected a large buffer is allocated.

The IP header is copied to the buffer.

The function iterates over the queue of fragments adding each

fragment to the buffer and re-computing the total size of the

datagram.

The reassembly sub module uses three functions that implement a

queue - a linked list where each fragment is added to the queue

according to its fragment offset field. The queues are statically

allocated (to avoid the run time overhead involved in dynamic

memory allocation). Each item in the queue includes a buffer to

store the data and a pointer to the next element.

Each queue includes a variable that counts the number of

fragments in the queue, a pointer to the first element, and an array

of queue items.

The functions are:

Init_q – receives a pointer to a queue and sets the number of

fragments in it to 0 and the pointer to the next field to –1. the

head of the queue is set to 0 since the first element will be copied

to the first entry.

Insert – receives a pointer to a queue and a pointer to an incoming

packet. If the queue is full (the number of elements variable

equals the array size returns false. Else, Inserts the data pointed at

by the packet to the array in the queue (the data is stored in the

entry indicated by the number of fragments variable but the exact

place of the fragment in the linked list is determined according to

the fragment offset field in the IP header). The number of

fragments variable is incremented and in case this is the first

fragment in the packet the head variable will point to the new

fragment.

Del – In case the queue is empty, returns NULL else deletes the

first fragment in the queue (remove it from the linked list) and

return the fragment. (the function decrements the number of

fragments variable).

Initialization

All global variables used by IP (including those used for

reassembly) are initialized in the ip_init function.

This function is called once from a single boot thread.

This boot thread also creates the ip_receive thread, the consumer

thread and the timer thread.

3.3. UDP

3.3.1.

General description.

UDP header:

source port number

UDP message length

destination port number

UDP checksum

Data

appl

2

appl

1

Datagrams

with

Dst = 200

Datagrams

with

Dst = 210

UDP

input

UDP (User Datagram Protocol) layer provides connectionless

communication among application programs. It allows a program

on one machine to send datagrams to program on another

machine and to receive replies.

3.3.2. General flow

3.3.2.1. Send

When sending datagram socket layer calls udpsend().

udpsend() fills UDP header, calculates checksum and calls

sequentially to ipsend.

3.3.2.2. Receive

IP layer on receiving packet calls udp_in(). UDP checks

checksum, finds application that waits on port packet arrived

to, puts the packet to the place application can get it and

awakes the application.

3.3.3.

UDP interface

int udpsend(IPaddr, u_short, u_short, struct packet_out *, unsigned,

Bool)

Send one UDP datagram to given IP address

int upalloc(void)

Allocate UDP internal use port for demultiplexing queue

int udp_in(struct netif *pni, struct packet_in* pp)

Handle an inbound UDP datagram

3.3.4.

Data structures

struct

udp {

unsigned short u_src;

unsigned short u_dst;

unsigned short u_len;

unsigned short u_cksum;

};

/* message format of DARPA UDP */

/* source UDP port number */

/* destination UDP port number

*/

/* length of UDP data

*/

/* UDP checksum (0 => none)

*/

struct

upq

{

bool

up_valid;

short

up_port;

VDK::SemaphoreID sem;

/* UDP demultiplexing info */

/* is this entry in use */

/* local UDP port number

*/

/* semaphore to synchronize processing

incoming packets */

/* incoming packet */

packet_in* pin;

};

3.3.5.

Global Variables

struct

upq

upqs[];

3.3.6.

/* array of ports */

Functions

int udpsend(IPaddr, u_short, u_short, void *buffer, unsigned, Bool)

The function will create packet_out, fills fields of data and UDP

header and will give it to ipsend.

int upalloc(void)

Initialize upq structure and return its index. The process that

wants incoming datagram should wait on semaphore in upq

structure specified by this function.

int udp_in(struct packet_in pp)

This function is called when UDP datagram arrived. The function

will determine to what port this packet should be delivered and

what process should be awakened. It puts the datagram to struct

and awakes process that listened on that port.

3.3.7.

UDP Checksum

A 16-bit checksum used to verify the integrity of the UDP

packet. Unlike IP checksum, this checksum covers the

integrity of data as well. The UDP checksum is optional. If

checksum is not used all fields should be set to zeros.

For calculation UDP checksum pseudo header is used and it

consists of source and destination IP addresses, protocol type

and data length(UDP header length + data length).

3.4. Socket interface

3.4.1.

General description.

Socket layer is the door applications can use to get access to

transfer layer protocols.

Socket is pair of IP address and port number.

Port number allows receiving host to determine to which local

process the message should be delivered.

3.4.2. General Flow

3.4.2.1. Send

Socket layer calls sequentially to udpsend and gives buffer

given by user, destination IP address, destination port and

source port. For client programs Socket layer binds free port to

socket.

3.4.2.2. Receive

Socket allocates struct fills port and start waiting for packet on

semaphore. When UDP gets the packet it awakes application

process. Application process copies data to user buffer (the

only time data is copied).

3.4.3.

Socket Interface

int socket(int domain, int type, int protocol);

Creates a new socket and returns the socket descriptor. The only

type supported SOCK_DGRAM. Arguments domain and

protocol are ignored.

int bind(int sd, const struct sockaddr *name, int namelen);

Bind the socket identified by sd to port in the given struct name.

If binding is successful the function returns 0 else -1.

int connect(int sd, struct sockaddr * serv_addr, int namelen)

Can be done by a client (not necessary). Since we use sockets

over UDP calling connect function on the socket simply records

the specified address and port number as being the desired

communications partner. After calling connect the application can

use send functions instead of using sendto.

int sendto (int sd, void * buf, size_t nbytes, int flags, const struct

sockaddr * to, int tolen)

Sends nbytes of data stored in buf to the address and port

specified at the “to” parameter using the sd socket descriptor.

Returned value is the number of bytes sent or –1 on error.

int recvfrom(int sd, void *buf, size_t nbytes, int flags, struct sockaddr

*from, int *fromlen)

Receives messages using the socket sd, stores the message in buf

(which is of size nbytes) and stores information about source in

from structure. Returned value is the number of bytes received or

–1 on error. If buff is not large enough any extra data is lost. The

calling process is blocked until a datagram arrives.

int send(int sd, const void *msg, size_t nbytes, int flags)

Same as sendto but without specifying second end point (can be

used only after calling connect).

int recv(int sd, void *buf, size_t nbyets, int flags)

same as recvfrom but without requesting information about

source.

int close (int sockfd);

Closes the socket descriptor indicated by sockfd. Returns 0 upon

success or –1 on error.

3.4.4.

Data structures

struct in_addr {

IPaddr s_addr;

};

struct sockaddr {

unsigned short sa_family;

char

sa_data[14];

};

// in_addr struct

// IP address

// socket address

struct sockaddr_in{

// sockaddr_in struct

short

sin_family;

// family

unsigned short

sin_port; // destination port

struct in_addr sin_addr; // destination IP address

char

sin_zero[8];

};

// Internal structure

struct socket {

socket */

struct sockaddr_in addr;

unsigned short int my_port;

int sock_valid;

/* represent a

/* address of peer host */

/* my port number */

/* indicate free or valid

socket: 0 is free */

};

3.4.5.

Global Variables

struct socket sockets[]; /* array of sockets */

3.4.6.

Functions

int socket (int type);

When a user calls socket a free entry in the sock is searched and

set to valid. the socket descriptor is returned.

int bind (int sd, int port);

According to sd (socket descriptor) find the relevant entry in the

sock array and set the my_port field according to the given port.

int connect(int sd, struct sockaddr * serv_addr);

According to sd (socket descriptor) find the relevant entry in the

sock array and set the addr field according to the given sockaddr

parameter.

sendto (int sd, void * buf, int nbytes, const struct sockaddr * to)

According to sd (socket descriptor) find the relevant entry in the

sock array and check if a valid port was allocated. if not – find a

free port and allocate it. call udp_send.

recvfrom(int sd, void *buf, int nbytes, struct sockaddr *from);

According to sd (socket descriptor) find the relevant entry in the

sock array and check if a valid port was allocated. the function

fill from structure with source parameters. call upalloc, fill the

port and pid in the appropriate entry in the upqs array and wait on

a semaphore for a datagram to arrive.

close (int sockfd);

Find the relevant entry in the sock array and change the

sock_valid field to free. Deallocate the queue of incoming

datagrams. Change the relevant entry in the upqs array to free.

Using the Uart driver interface

The Uart class is an implementation of the Blackfin processor’s

Output com driver.

It’s main purpouse is to send and receive data from the physical layer.

It’s functionality is based upon accessing the hardware‘s internal buffer

and transferring data between that buffer and a software allocated buffer.

The function members of the Uart class are responsible of transferring the

data from the software allocated buffer to the hardware’s buffer and vice

versa, defining the DMA ownership and the hardware’s responsibility to

send it’s buffer’s data through the physical connection, and

implementation of a packet form which consists of a header and a

message body ( which will be described in more details later).

Examle of using Uart interface : Clear-test

The Clear-test application is used in order to communicate with a remote

pc. The remote pc must have layer 2 connectivity with the blackfin

processor since Clear-test performs the communication using Uart which

as said , accesses the physical layer directly.

The Uart is designed to send and receive the message’s body and a

message code as well. The message code appears in the header and can be

one out of six options including: test audio, read tuner, set tuner and other

Radio related queries.

The code indicates the message’s issue and the message body

Indicates parameters. Clear test uses the sending and receiving function

members of the uart and treats each message according to it’s code.

Our implementation uses the uart function calls in the same way clear-test

uses them but we ignore the message code. It is irrelevant to our project.

Using Uart to send through physical connection:

Uart class has the main sending function member Send_msg.

It receives the buffer to be sent and it’s size.

It basically generates a header to the message and then concatenates the

message body to that header and places them in a sending buffer.

Once the sending buffer is ready the write descriptor ( which is initialized

to the right com in the construction of Uart) is updated with the size of

the message and the pointer to the beginning of the buffer.

Afterwards the write_descriptor’s config_word field is set to

UART_TX_DMA_CFG_WORD + DMA_OWNERSHIP which means

passing responsibility to the hardware to send the message via the

physical layer.

Then the processor is blocked with “ asm("ssync")

“.

This command acts like a semaphore which stops the processor from

executing further commands and lets the com hardware send from the

buffer. Once the com hardware finished it’s task, it gets blocked with the

same “

asm("ssync")

“ command and the processor continues

the command execution from where it stopped.

Using Uart to receive through physical connection:

Uart class has the main receiving function member get_msg.

It receives the software allocated input buffer to which the received

message is written.

It basically accesses the read descriptor and checks that there is data in

the descriptor’s buffer.

Once data arrives through the physical layer, it is located in the

descriptor’s buffer and when get_message is called, it checks the

descriptor’s buffer for the new data.

get_message reads the first 8 bytes as the message’s header.

Then the rest of the message (the message body) is written into the

software allocated input buffer. Only then the message is discarded from

the reading descriptor’s buffer using the function skip_to_next_msg .

How does it affect our design?

Since the driver gets blocked every time it sends a message, we had

to separate the user thread (which sends a message through the

UDP/IP interface) from the driver call which physically sends the

message as described.

This separation is very important because we don’t want a sending

application to wait until the message is sent and be blocked until

then. We want to have a mechanism that an application can send it

it’s messages and continue it’s tasks right after.

We can also have a scenario in which many application threads

send different messages in the same short time interval. Since the

sending thread gets blocked, if we do not separate the driver call

from the sending thread, all the application threads will get

blocked for a long time. The risk for deadlock gets high as well.

In our project we implemented a buffer for each interface which

stores all the data packets to be sent via that interface. An

independent consumer thread accesses that buffer and takes each

time another packet and sends it using the Uart driver send_msg

function.

Each application thread eventually puts the generatd packet into

that buffer and continues its tasks and the consumer thread is the

one which takes care of the sending and gets blocked using the

driver call.

Once messages arrive, they are stored in the read descriptor’s

buffer and only when get_message is called, they are read into

software’s buffers.

In our implementation we have a thread called Ip_Receive which is

responsible to call the get_message function and read the packet

and then send it further up to the Ip computation.

If the driver had been made to use Interrupts generated every time

a message arrives, we could keep the Ip_receive thread asleep until

a message arrives and then generate interrupt that wakes it up.

Once Ip_receive woke up, it calls get_message, when it knows for

sure that a message exists in the read descriptor’s buffer.

The driver does not generate interrupts and the only access to the

received data is through calling get_message, whenever the user

decides.

Since we want that every received message will be instantly read

and passed to Ip computation, Ip_receive is doing busy-wait

calling get_message. Therefore it consumes precious processor

time.

If interrupts were generated we could wake up Ip_receive only

when a message arrives and save the processor time that is wasted

on the busy-wait.

Possible enhancements:

1. It is possible to remove timer thread in reassembly module and to

delete timeout fragments if we need the place they consume.

2. Change socket layer the way it can receive packets even if socket

isn’t blocked by recvfrom() function.