nfs-finalpaper

advertisement

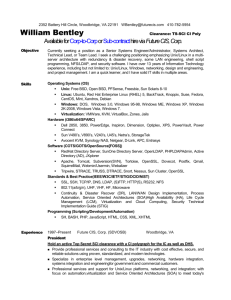

Examining NFS Performance

Janak J Parekh (jjp32@cs.columbia.edu)
Alex Shender (sauce@cs.columbia.edu)
May 1, 2000

Copies of this paper may be obtained at http://www.cs.columbia.edu/~sauce/nfs.

Overview

The reach of Sun NFS [i] has extended tremendously since its early days on SunOS and Solaris; NFS now exists on virtually every Unix implementation, including Linux. However, NFS performance on Linux has been considered subpar since its early releases and is still considered subpar in the latest production kernel (2.2.14) [ii]. Additionally, Linux (up to 2.2.14) officially supports only NFS v2, which was developed years ago and has been supplanted on other UNIX platforms, especially Solaris, by NFS v3, which features performance improvements as well as TCP support. (NFS v4 is still in the design and early implementation stages.)

Linux suffers from the following performance problems.

Client-side: Many users have complained that NFS read performance under Linux is acceptable, but that NFS write performance is several times slower than on comparable Solaris or BSD systems, due to the synchronous writes required by the NFSv2 support that’s built into Linux [iii].

Server-side: The problem in earlier revisions was the lack of a kernel-mode NFS server daemon; while the user-level NFS daemon was functional, it was slow (due to extra context switches) and unstable. The problem has been rectified with the addition of knfsd, but knfsd is still considered “experimental”.

Recently, patches against the 2.2 kernel have been released to address both issues. A kernel module, known as knfsd, allows the Linux NFS server to run in kernel space. Within the last few months, patches against knfsd for v3 support have been released. They are still in an early beta state, but mark the beginning of an attempt to finally merge v3 server-side support into Linux. NFS v3 client-side support is now available for Linux 2.2 and has become quite stable.
We obtained the latest releases of these patches, integrated them into Linux on both the x86 and SPARC platforms, and ran performance tests. This paper covers the details of the implementation, the testing methodology, and the performance results as compared to NFS v2 and to NFS on Solaris.

Previous/related work

Surprisingly, little work has been done in examining the performance of Linux NFS. We searched the web extensively, and apart from discussions in newsgroups and printouts of the Bonnie benchmark on NFS disks, little else exists. No papers have been written on the subject, at least according to the ACM, IEEE, and USENIX digital libraries.

Additionally, little if any documentation has been written on Linux NFS v3 performance. This is not surprising, considering that the Linux NFS v3 client has only recently become near-production code and has not yet been rolled into the kernel, and that the Linux NFS v3 server is still in an early beta form. As of April, the client-side patches are near completion, except for tuning; NFS v3 over UDP and TCP is supported, and the patches apply cleanly against Linux 2.2.14. Server-side patches are available to update knfsd to support NFS v3, but only UDP is supported at this time. Recent postings have suggested that these sets of patches will be rolled into the Linux kernel for 2.2.15 or 2.2.16, and very likely will not be finalized until the 2.4 series is available (i.e. they will probably be considered experimental for the foreseeable future).

We were able to find a few links summarizing NFS v2 [iv] and preliminary v3 client-side [v] performance. Note that the v3 numbers stem from older patches against older 2.2 kernels, and performance has likely changed, hopefully for the better. Additionally, neither of these references examines variables on both the client side and the server side to any great extent, or compares the results to baseline performance measurements such as ttcp or local filesystems.
A Network Appliances presentation discusses NFS v3 TCP performance in the context of the upcoming NFS v4 standard, but does not deal with Linux [vi].

Design, implementation and testing methodology

Computer setup

We decided to use four machines: two x86 machines and two sparc machines.

x86 computers:
o 433MHz Intel Celeron
o Intel 810 core chipset/IDE controller
o 128MB PC100 SDRAM
o 10GB Quantum Fireball IDE hard drive
o Intel 82557 Ethernet chipset (used in EtherExpress PRO/100B adapters)

sparc computers:
o 333MHz UltraSparc IIi SparcStation
o 128MB PC100 SDRAM
o 10GB Seagate ST39140A IDE hard drive
o Sun HME 100Mbps Ethernet chipset

An old but serviceable Cisco 100Mbps hub was borrowed to create a completely private subnet.

Operating system setup and configuration

Each machine was set to dual-boot between Linux and Solaris. Clean installations of each were performed to ensure that outside factors (e.g. special hacks, patches, or background processes) did not influence the results. This way every common combination of operating system and processor could be tested without worrying about hardware differences. The Linux LILO and SILO boot loaders (the latter is for sparcs) were flexible enough to allow dual-booting into Solaris; after adjusting the appropriate conf files, the two operating systems coexisted.

We then set about updating the Linux kernel to 2.2.14 along with the appropriate NFS v3 patches. The first challenge was finding the patches in the first place. The Linux NFS project, like many other open-source Linux kernel projects, is decentralized and disorganized; only by extensive searching via Google™ were we able to find the appropriate, latest sites for Linux NFS information. Recently (within the last two months), a clearinghouse for NFS was established at SourceForge [vii]. This site links to the NFS v3 client patches [viii] as well as the server-side patches [ix], which must be applied after the client patches on NFS servers.
Nevertheless, documentation is still scarce. Moreover, the code appears to have been tested only on the x86 platform. While attempting to compile the patched kernel on the sparc platform, we encountered a symbol conflict (page_offset); apparently, sparc Linux defines this global variable and makes the PAGE_OFFSET constant point to it, while the x86 platform defines PAGE_OFFSET only in absolute terms. This strongly suggests that compilation on the sparc Linux platform was never attempted. We were forced to track down the conflicts and resolve them by hand; fortunately, the code compiled cleanly thereafter, and the kernel booted.

The next challenge was to enable the kernel nfs daemon and to explicitly request that the client use the NFS v3 protocol. The latter request is fairly simple; mount takes a -o parameter to which we supply options such as vers=3,proto=UDP, corresponding to the NFS version and protocol, respectively. However, the client repeatedly insisted that the server didn’t support NFS v3. A test against a Solaris server ruled out the client patches as the source of the problem. A visit back to the SourceForge NFS clearinghouse led us to updated nfs daemon tools [x], which failed to solve the problem.

After hours of debugging, we discovered that RedHat 6.1 includes an earlier version of knfsd, which does not support NFSv3 but advertises by default that it does, only to hang when a client tries to use the v3 protocol. RedHat had therefore modified the nfs rc script to explicitly tell knfsd not to advertise the v3 capability; unfortunately, this also prevented the new knfsd, which does support v3, from advertising it. Once we discovered this, a simple modification to the rc script solved the problem. The problem was not documented anywhere. The fact that the latest knfsd does not yet support v3 TCP mode was not well documented either; as a result, our Linux server-side tests focus on UDP.
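To make the mount request described above concrete, here is a minimal Python sketch that assembles the argument list for such a mount. The helper names, the server name, and the paths are hypothetical; only the vers=...,proto=... option form comes from the text, and the exact option spellings accepted depend on the mount implementation in use.

```python
def nfs_mount_options(version, proto):
    """Build the -o option string in the vers=...,proto=... form quoted in the text."""
    return "vers={},proto={}".format(version, proto)


def nfs_mount_command(server, export, mountpoint, version, proto):
    """Return the argv list for mounting server:export on mountpoint.

    The list is only built here, never executed; running it would need root.
    """
    return [
        "mount",
        "-o", nfs_mount_options(version, proto),
        "{}:{}".format(server, export),
        mountpoint,
    ]


# Hypothetical host and paths, for illustration only.
cmd = nfs_mount_command("nfsserver", "/export/test", "/mnt/nfs", 3, "UDP")
print(" ".join(cmd))
```

Keeping the option string in its own helper makes it easy to loop over the version and protocol pairs under test without retyping the command.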
Fortunately, later kernels are likely to integrate these modules and simplify these procedures, so that future Linux distributions do not suffer the configuration issues we encountered in RedHat 6.1.

Testing scheme

Once basic functionality was established, it was important to design a testing scheme that would allow for an apples-to-apples comparison. For example, unequal raw network throughput is undesirable, and any that exists must be accounted for. The same applies to the filesystem (i.e. local disk performance).

To address the first problem, we used ttcp measurements as a baseline. Initially, ttcp performance was unequal among the four machines, sometimes by a staggering factor of 5 to 10. We traced the largest portion of this discrepancy to poor cabling, including incorrectly pre-crimped Sun cables (pins 3 and 6 were not one pair; instead, pins 3 and 4, and 5 and 6 were two separate pairs, which is specifically warned against by 100Mbps Ethernet card manufacturers) and poor-quality home-made cables (it would be interesting to see how much faster the CS department subnet would be with all new Ethernet cables). After replacing the cables approximately three times, we managed to secure four cables of consistent, high quality from the same manufacturer. This resolved most of the performance differences in our ttcp tests. We discuss the remaining inconsistencies in our results section.

cp was used for baseline NFS tests (as opposed to ttcp, which does not touch NFS). We used cp to perform simple reads from the remote mount to the local disk and timed the results using GNU time. The GNU time source code was modified to provide more precision.

Bonnie [xi] is one of the canonical filesystem performance tests. It is currently used by Linux NFS developers to identify bottlenecks. It can be used to test both locally mounted and remotely mounted filesystems. We compiled and installed Bonnie on all four environments.
Bonnie was then run on each local filesystem to determine the baseline performance of each computer, since we expected differences, especially between the two architectures.

Experimental Setup

Server testing. This included single-client load, i.e. stress testing to determine maximum throughput.
o Control/Base:
    Solaris sparc nfs server
    Solaris x86 nfs server
o Experimental:
    Linux sparc nfs server (using knfsd and the v3 patches)
    Linux x86 nfs server (using knfsd and the v3 patches)

Client testing. Since v3 TCP server-side support is not yet implemented on Linux, our tests have been pared down from the original set.
o Control/Base:
    Solaris sparc nfs v2 client
    Solaris sparc nfs v3 UDP client
    Solaris sparc nfs v3 TCP client (against Solaris SPARC, x86)
    Solaris x86 nfs v2 client
    Solaris x86 nfs v3 UDP client
    Solaris x86 nfs v3 TCP client (against Solaris SPARC, x86)
o Experimental:
    Linux sparc nfs v2 client
    Linux sparc nfs v3 UDP client
    Linux sparc nfs v3 TCP client (against Solaris SPARC, x86)
    Linux x86 nfs v2 client
    Linux x86 nfs v3 UDP client
    Linux x86 nfs v3 TCP client (against Solaris SPARC, x86)

The clients connected to the aforementioned servers, so approximately 40 combinations were tested. See the next section for an overview of the results, including ttcp, cp, and bonnie tests for the above.

Results and low-level analysis

We include here the subset of results that we feel is relevant (all of the raw data is available separately in an Excel workbook).

ttcp performance

After the defective cables were replaced, we received fairly consistent overall ttcp performance (the results are averaged over 3 trials for each test). UDP-wise, the results were extremely consistent; this is not surprising, considering the lossy nature of UDP (ttcp makes no attempt to recover from lost packets). We used the UDP test to ensure that both interfaces were able to transmit essentially the same raw throughput.
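The figure of approximately 40 combinations in the experimental setup can be cross-checked with a short Python sketch. It assumes, which the text does not state explicitly, that each of the four client configurations was run against each of the four server configurations for NFS v2 and NFS v3 over UDP, and against the two Solaris servers only for NFS v3 over TCP. The labels and function name are ours.

```python
from itertools import product

ARCHS = ["sparc", "x86"]
OSES = ["Linux", "Solaris"]
METHODS = ["udp2", "udp3", "tcp3"]


def build_matrix():
    """List (client, server, method) tuples under the stated assumptions."""
    clients = list(product(ARCHS, OSES))
    servers = list(product(ARCHS, OSES))
    combos = []
    for client, server, method in product(clients, servers, METHODS):
        # Linux knfsd has no NFS v3 TCP support, so tcp3 needs a Solaris server.
        if method == "tcp3" and server[1] != "Solaris":
            continue
        combos.append((client, server, method))
    return combos


matrix = build_matrix()
print(len(matrix))  # 4*4*2 UDP runs + 4*2 TCP runs = 40
```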
[Figure: ttcp UDP throughput (axis labeled kbps, 0 to 14000) by source machine and OS (from: sparcc, sparcs, x86c, x86s; fromos: linux, sol), one bar per destination machine and OS (to, toos).]

All of the combinations of architecture and OS produced results between about 11,500kBps and 11,900kBps. (Note that the missing bars correspond to “localhost” calculations, which are irrelevant for the purpose of this test. Also note that although Excel’s capitalization of the axis label implies kilobit/second throughput, we are actually referring to kilobyte/second throughput here.)

We had much more variance in our TCP results, as shown in the following graph.

[Figure: ttcp TCP throughput (axis labeled kbps, 0 to 12000) by source machine and OS (from, fromos), one bar per destination machine and OS (to, toos).]

Here, most of the results range from 8,000kBps to 11,000kBps. There is about a 30% range from the “worst” box to the “best” box in peak transfer rates. The performance difference between the boxes, detailed in the results section, can be attributed to (a) the Sun HME interface being better optimized than the Intel 82557 interface; (b) better bus utilization and throughput on one architecture than on the other; (c) the smaller variety of Ethernet cards on the sparc platform, resulting in more consistent performance there; or (d) Solaris-to-Linux handshaking (window size) issues. These results are considered in evaluating performance differences between the platform/OS combinations in the next sections.

The above notwithstanding, the x86 Linux server box had particular trouble acting as a “target” for this test. When acting as a destination from the sparc client in ttcp, the Linux server box performs about half as fast as the Linux client box.
The Linux server box is also slow as a “ttcp client” in 2 other configurations. This is extremely surprising, because the server and client boxes are identical, with an identical software configuration (we dd’ed the Linux partition between the two machines). Swapping network cables between the two machines made no difference in performance. Lastly, the Linux server acts perfectly fine as a ttcp “source”. Because we could not acquire a third identical machine, we were not able to determine whether this was due to faulty hardware. The consolation here is that the server box will never serve as a client, so this anomaly should not be a significant factor.

We were able to draw a number of other conclusions from the TCP results. (Graphs are shown where appropriate.)

[Figure: ttcp TCP throughput (axis labeled kbps) by source machine (from: sparcc, sparcs, x86c, x86s), one bar per source OS (fromos: linux, sol).]

As shown in this graph, Linux performed better as a “source/transmitter” platform.

[Figure: ttcp TCP throughput (axis labeled kbps) by source machine (from: sparcc, sparcs, x86c, x86s).]

As a “transmitter”, the x86 machines were somewhat faster, but not substantially so.

[Figure: ttcp TCP throughput (axis labeled kbps) by destination machine (to: sparcc, sparcs, x86c, x86s), one bar per destination OS (toos: linux, sol).]

As shown in this graph, Linux performed better than Solaris as a “receiver” on the sparcs; on the x86 units the results were the opposite. The sparc performance is readily understandable considering Solaris’ slower-start effects on TCP; however, on x86 the only conclusion we can draw is that the i82557 driver might be better tuned for this on Solaris than on Linux.

Overall, sparc Linux was the fastest client, while x86 Linux was the slowest client (note, however, that this includes the low performance of the x86 server as client, which had the aforementioned performance anomaly).
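For context, the ttcp figures above can be compared with the theoretical ceiling of a 100Mbps link. The following Python sketch does the arithmetic, assuming 1 kB = 1000 bytes and ignoring Ethernet, IP, and UDP/TCP framing overhead; the function names are ours.

```python
LINK_MBPS = 100  # nominal rate of the hub and adapters


def line_rate_kBps(link_mbps, bytes_per_kB=1000):
    """Raw link capacity in kilobytes per second (no protocol overhead)."""
    return link_mbps * 1_000_000 / 8 / bytes_per_kB


def utilization(measured_kBps, link_mbps=LINK_MBPS):
    """Fraction of raw link capacity achieved by a measured throughput."""
    return measured_kBps / line_rate_kBps(link_mbps)


ceiling = line_rate_kBps(LINK_MBPS)
print("ceiling: {:.0f} kBps".format(ceiling))
for measured in (11500, 11900, 8000, 11000):
    pct = 100 * utilization(measured)
    print("{} kBps -> {:.0f}% of raw capacity".format(measured, pct))
```

Under these assumptions the ceiling is 12,500 kBps, so the UDP results of 11,500 to 11,900 kBps correspond to roughly 92 to 95 percent of raw capacity, while the TCP results of 8,000 to 11,000 kBps span roughly 64 to 88 percent.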
cp (NFS read) performance

For most of the results, we discuss the performance for transferring the 10MB file; except for the TCP slow-start effects, the 1byte and 32768KB files did not provide any meaningful results, since the times were too small to draw significant conclusions from. Also, note that these results are given in seconds, i.e. lower bars mean better performance.

[Figure: cp time in seconds for the 10,240,000-byte file, by server CPU, server OS, and method (udp2, udp3, tcp3), one bar per client CPU and OS.]

As the above results show, performance was fairly uniform across the board. There are several notable exceptions. First, an x86 Solaris client performs very poorly against a sparc Linux NFSv3 UDP mount. The result was so out of line that we can only assume that the culprit is the NFSv3 code being untested under Linux; there must be a race condition or some similar situation in which the client performs very poorly.

[Figure: cp time in seconds for the 10,240,000-byte file, all methods, by server CPU and OS, one bar per client CPU and OS.]

As the red bars show, Solaris on sparc is still the best client and server; although its server performance doesn’t differ tremendously from that of the x86 Linux or the x86 Solaris server, its client numbers are uniformly the best. The sparc Linux server appears to perform very poorly, but this is skewed by the x86 Solaris client’s NFS v3 UDP performance with a sparc Linux server; otherwise it is also in line with the others.

The Linux clients produce interesting results. The x86 Linux client is not a great performer, but is consistent and, surprisingly, performs best with an x86 Linux server. sparc Linux performs substantially better than x86 Linux; we believe this is due to the superiority of the HME interface as evidenced in the ttcp benchmarks.
The sparc Linux client is also consistent, except that it performs poorly against the x86 server.

[Figure: cp time in seconds for the 10,240,000-byte file, by server OS and method (udp2, udp3, tcp3), one bar per client CPU and OS.]

Under Solaris, the expected gain of NFSv3 UDP over NFSv2 UDP is achieved. NFS v3 TCP support is also very good, but slightly slower than NFS v3 UDP on the local subnet. Unfortunately, the NFSv3 support doesn’t yet appear to improve matters much on Linux for these reads.

[Figure: cp time in seconds for the 10,240,000-byte file, by method (tcp3, udp2, udp3), one bar per client OS (Linux, Solaris).]

As this graph indicates, for large files NFSv3 via TCP works very well on Solaris. On Linux, NFSv3 via TCP is not well optimized, and as a result is slower than UDP.

The “mini-conclusion” here is that Solaris is still a better NFS client and server platform. The sparcs are more efficient, presumably since there are fewer drivers to support.

bonnie performance

It turns out that the size of the test file makes a substantial difference in bonnie’s results. We had initially used 10MB files for bonnie, but received results in which remote performance would often exceed local performance, even where the remote machine performed slower on its local disk. This clearly indicated that caching was occurring on the 128MB machines. We were forced to scrap those results entirely and to use 300MB temporary files, which took considerably longer, especially considering some of the bottlenecks that cp hinted at above. However, the 300MB files give much more accurate and consistent results.

Bonnie performs a number of tests.
o ASeqOChr: Sequential output by character essentially tests putc() performance;
o ASeqOBlk: Sequential output by block tests efficient writes;
o ASeqORW: Sequential output read-write tests replacing certain blocks;
o ASeqIChr: Sequential input by character essentially tests getc();
o ASeqIBlk: Sequential input by block tests efficient reads;
o ARandSeek: Bonnie performs a random seek on the filesystem.

We have averaged these numbers in the following graphs, i.e. “ASeqOChr” is sequential output by character averaged across tests (when appropriate).

Local performance

[Figure: local bonnie throughput (KBps) by CPU and OS (axis labeled SrvCPU, SrvOS: sparc and x86 under linux and solaris), one series per bonnie test (ASeqOChr, ASeqOBlk, ASeqORW, ASeqIChr, ASeqIBlk, ARandSeek).]

The local-disk performance, unfortunately, is subpar on the x86 machines, as shown above. It appears that the IDE chipset used on these x86 machines is not optimally supported, while the unified driver model on the sparc avoids this problem entirely. As a result, the results regarding Linux NFS server performance must be interpreted with this in mind. Fortunately, we are still able to draw some conclusions, as the following graphs demonstrate.

[Figure: bonnie throughput (KBps) for every combination of server CPU, server OS, client OS, client CPU, and method (udp2, udp3, tcp3), one series per bonnie test (ASeqIBlk, ASeqIChr, ASeqORW, ASeqOBlk, ASeqOChr).]

The above graph is too complex to demonstrate much; the results are broken down into sections below. However, it is immediately apparent that Linux can hold its own both in serving NFS and in acting as an NFS client.
(In the above graph, the x-axis is partitioned, from bottom to top, by server CPU, server OS, client OS, client CPU, and method (i.e., protocol).)

NFS client performance

[Figure: bonnie throughput (KBps) by client OS, client CPU, and method (tcp3, udp2, udp3), one series per bonnie test (ASeqIBlk, ASeqIChr, ASeqORW, ASeqOBlk, ASeqOChr).]

As the above shows, Solaris is still a better across-the-board client than Linux (averaged across all servers). However, the x86 Linux client is steadily improving. In particular, the NFS v3 UDP client performs within about 75-80% of the peak Solaris transport, which is NFS v3 TCP. Interestingly enough, Linux UDP performance is well within striking distance of Solaris; it’s within 90%. This is a sign that the TCP performance still has to be optimized (it’s lower than UDP on Linux); when this is done, Linux may be a solid NFSv3 TCP-based client. In particular, the sequential rewrite test seems to improve tremendously when TCP is used on Solaris; perhaps this is a bottleneck that needs to be addressed in the NFSv3 TCP code on Linux.

Note that the above test averages across servers, so filesystem slowness is averaged into the results. Let’s examine the performance across server platforms.

[Figure: Method udp2. bonnie throughput (KBps) by client CPU, client OS, server CPU, and server OS, one series per bonnie test.]

Note that the bottommost segment of the x-axis refers to the client architecture and OS, which is then further subdivided by the server architecture and OS. Some interesting conclusions can be drawn here. First of all, x86 Linux is a decent NFS v2 client, but it strongly prefers a Linux server.
The same can be said of the sparc platform, but sparc Linux performs slightly worse as a client.

[Figure: Method udp3. bonnie throughput (KBps), x-axis labeled CliCPU, CliOS, SrvCPU, SrvOS, one series per bonnie test.]

Note here that the bottommost segment of the x-axis refers to the server architecture and OS, which is then further subdivided by the client architecture and OS. Again, Solaris starts pulling ahead when using NFSv3 UDP. However, x86 Linux still performs decently with Linux servers. (Note that the poor showing by the x86 Linux server is possibly due to the poor local filesystem performance.) TCP is more interesting.

[Figure: Method tcp3. bonnie throughput (KBps), x-axis labeled CliCPU, CliOS, SrvCPU, SrvOS, Solaris servers only, one series per bonnie test.]

These numbers again demonstrate the potential of TCP v3 performance. Of course, these results are skewed by the fact that only Solaris can serve NFS v3 over TCP, but note in particular that write performance is improved on Linux with the TCP v3 client. Of course, optimization still needs to be done for Linux here.

NFS server performance

[Figure: bonnie throughput (KBps) by server CPU, server OS, and method (udp2, udp3, tcp3), one series per bonnie test.]

Here, some of the most interesting results come to light. First and foremost is the fact that Solaris is not the fastest across-the-board NFS server when all of the clients are averaged in. In particular, the sparc Linux UDP v2 server is.
Note that this test isn’t completely accurate due to the filesystem overhead on the Intel boxes. However, on both architectures Linux is on par with Solaris, performance-wise.

A disturbing point in the above graphs is that NFSv3 does not benefit the Linux server code. This clearly means that the server needs much more optimization. On Solaris, the v3 code is a tremendous speed boost.

Let’s examine these numbers further, client-wise.

[Figure: Method udp2. bonnie throughput (KBps) by server CPU, server OS, client CPU, and client OS, one series per bonnie test.]

Interestingly, the sparc Linux box, under UDPv2, beats out all of the other boxes, and the x86 Linux box beats out the x86 Solaris box. Now, for NFS v3 performance.

[Figure: Method udp3. bonnie throughput (KBps) by server CPU, server OS, client CPU, and client OS, one series per bonnie test.]

Here, the Solaris box finally posts the highest overall throughput. However, the sparc Linux client works horribly against the sparc Solaris server, which skews its numbers further down. Interestingly, Linux as a client (as mentioned previously) much prefers Linux as a server. In fact, the sparc Linux server/x86 Linux client combination performs almost on par with the sparc Solaris server/sparc Solaris client (which is widely considered to be the optimal combination).
[Figure: Method tcp3. bonnie throughput (KBps) by server CPU, server OS, client CPU, and client OS, one series per bonnie test.]

Here, the power of TCP for server throughput is shown. It will be interesting to see how Linux stacks up against Solaris when the TCP server code is available. SGI, among others, is contributing to the knfsd server code, so arguably there’s a movement toward better performance on this end. It can be concluded, from the Solaris results, that TCP is indeed a superior protocol for large file transfers.

Conclusion

Despite the release of NFSv3 patches for Linux, performance is still an issue. Clientwise, while the write-performance problem has been addressed to some extent in certain cases, more tuning of the code is clearly necessary, especially on the sparc Linux platform. We expect this to happen after the codebase is merged into the kernel.

Serverwise, Linux is starting to show solid performance. Once reliability issues are resolved, there is no reason why Linux can’t be a feasible production NFS server.

It’s important to note that Solaris particularly benefits from v3 and TCP. According to [vi], Solaris itself needs to be better tuned for TCP support, and as a result we expect Solaris to improve at the same time. While Linux may become more competitive in the near future, it still has a long way to go before it is an optimal NFS solution.

In any case, research of this nature is clearly necessary for Linux to gain acceptance as a viable replacement for many of the production systems that currently run commercial Unices. Hopefully, with the advent of the 2.2.15 and 2.2.16 kernels and the increased focus on improving NFS performance, Linux will soon have respectable NFS performance and reliability.
References

[i] Sandberg, R., Goldberg, D., Kleiman, S., Walsh, D., and Lyon, B., "Design and Implementation of the Sun Network Filesystem," Proceedings of the Summer 1985 USENIX Conference, Portland OR, June 1985, pp. 119-130.
[ii] “Ask Slashdot: NFS on Free OSes Substandard?”, http://slashdot.org/article.pl?sid=99/04/30/0132237&mode=thread
[iii] Post on “Ask Slashdot: NFS on Free OSes Substandard?”, http://slashdot.org/article.pl?sid=99/04/30/0132237&mode=thread
[iv] “Linux NFS (v2) performance tests”, http://www.scd.ucar.edu/hps/TECH/LINUX/linux.html
[v] Ichihara, T., “NFS Performance measurement for Linux 2.2 client”, http://ccjsun.riken.go.jp/ccj/doc/NFSbench99Apr.html (If the document is unavailable, use http://www.google.com/search?q=cache:ccjsun.riken.go.jp/ccj/doc/NFSbench99Apr.html+NFSv3+performance&lc=www.)
[vi] Network Appliances NFSv4 IETF Nov ’99 presentation, http://www.cs.columbia.edu/~sauce/nfs/nfs-udp-vs-tcp.pdf
[vii] “Linux NFS FAQ”, http://nfs.sourceforge.net
[viii] “Linux NFSv3 client patches”, http://www.fys.uio.no/~trondmy/src/
[ix] “Linux knfsd v3 server patches”, http://download.sourceforge.net/nfs/kernel-nfsdhiggen_merge-1.4.tar.gz (used to be http://www.csua.berkeley.edu/~gam3/knfsd/ but this address is no longer maintained)
[x] “Latest NFS-utils package”, http://download.sourceforge.net/nfs/nfs-utils-0.1.7.tar.gz
[xi] “Official Bonnie homepage”, http://www.textuality.com/bonnie/