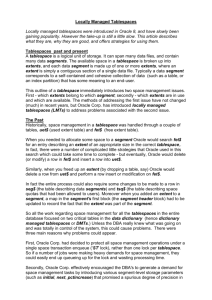

Ravi Bhateja - Trelco Limited Company


Ravi Bhateja

3-D, DDA Flats, Sarai Jullena, New Delhi 110025, India

Mobile: +91 92131 39314

Email: ravibhateja@hotmail.com, ravibhateja@yahoo.com

Introduction:
I am an Oracle Certified Professional DBA for Oracle 9i, with more than 3 years of experience as an Oracle DBA and 5 years of total IT experience. This includes 1 year of work experience in Perth, Australia, where I worked with Teach Foundation from August 2004 to August 2005. I currently work with Bharti Telesoft on a project for Airtel Mobile, where I am responsible for maintaining the uptime of the database, tuning its performance, shell scripting and performing other DBA activities.

Career Objective
I have expertise in performance tuning, in configuring Oracle databases for data warehousing and OLTP systems on Solaris 9 and Windows Server, and in shell scripting. I am looking for a DBA position where I can practice performance tuning, data warehousing and 24x7 uptime, and extend my skills in other areas of Oracle database administration on Solaris and other UNIX systems.

Certifications
1) Oracle 9i Certified Professional DBA
2) Sun Certified Programmer for Java 2

Education

| Qualification | Institute/University | Subjects | Completed |
|---|---|---|---|
| B.Sc. | Bhavans College, Mumbai University | Physics (major) and Computer Science (minor) | June 1999 |
| 3 Year Advanced Diploma in Software Engineering | Aptech Computer Education | Software Engineering | Jan 2001 |

Work Experience:
My total experience is 5 years, which includes Oracle database administration on Solaris, Linux and Windows Server, and programming using PHP, J2EE, JSP, WML and XML. I have extensive exposure to the following aspects of Oracle database administration:

(i) Performance tuning of Oracle 9i databases. I have strong expertise in performance tuning Oracle 9i databases on Solaris and Windows Server.
I have in-depth knowledge of tuning the shared pool, database buffer cache, large pool, Java pool, sort area, the shared server environment (for best scalability), UGA, redo log buffer, redo log files, archived log files, I/O, checkpointing, automatic rollback (undo) management, the application, SQL, the OS, locking issues and latches. I am the sole author of the study material for the OCP 1Z0-033 Performance Tuning exam for Whizlabs Software; the article for that study material is at http://www.whizlabs.com/articles/1Z0-033-article.html

(ii) Configuring hybrid databases that run data warehousing and OLTP systems in one database, by using multiple block sizes and then creating tablespaces with the corresponding non-standard block sizes for the OLTP and data warehousing systems.

(iii) Installing and configuring Solaris 9, and Oracle on Solaris, with settings optimized for performance; troubleshooting Solaris and Oracle in production.

(iv) Creating full and incremental backup plans using RMAN in ARCHIVELOG mode and implementing them on Oracle 9i databases. Creating plans for recovering a database after a disaster (in all possible situations) with the least possible downtime. Performing point-in-time (incomplete) recovery using RMAN.

(v) Managing Oracle databases for normal day-to-day activities, including databases that require 24x7 uptime, and efficiently handling unexpected failures at those times.

(vi) Application and SQL tuning, including tuning and optimization of SQL queries using TKPROF, EXPLAIN PLAN, AUTOTRACE and Statspack, using optimizer settings, and using plan stability to save execution plans in stored outlines. Optimizing queries so that they have the least impact on I/O, the buffer cache, the library cache and the CPU.

(vii) Working on Linux, with extensive experience in writing shell scripts and scheduling them to run automatically using crontab.
(viii) In-depth knowledge of data modeling using the ER model, normalization, and using the SDLC for application development.

Employment History

Current Company
- Company: Bharti Telesoft Ltd.
- Website: www.bhartitelesoft.com
- Start Date: January 2006
- End Date: Current
- Role: Oracle Database Administrator
- Responsibilities: (1) Performance tuning of Oracle databases on Linux. (2) Managing RMAN and user-managed backups for multiple databases. (3) Troubleshooting the Oracle production system. (4) Extensively coding shell scripts to automate tasks through crontab.

Previous Company
- Company: Teach Foundation Limited, Perth, Australia
- Website: www.teachfoundation.org.au
- Start Date: August 2004
- End Date: August 2005
- Role: DBA and Software Development
- Responsibilities: Administering and developing the database that runs on a Linux server; coding web applications using PHP, SQL and JavaScript.
- Projects: Please refer to Project 2 (www.teachfoundation.org.au) in the project details below.

Second Previous Company
- Company: Whizlabs Software Ltd., Delhi, India (ISO 9001:2000 certified)
- Website: www.whizlabs.com
- Start Date: December 2003
- End Date: June 2004
- Role: Content Author (Oracle Database Administrator)
- Responsibilities: I authored the study material for the OCP 1Z0-033 Performance Tuning exam for this organization.
- Projects: Please refer to Project 3 (authoring the contents of the preparation kit for the OCP 1Z0-033 Performance Tuning exam) below.

Third Previous Company
- Company: Escolife IT Services Pvt. Ltd. (an Escorts Group company), Delhi, India
- Website: www.escolife.com
- Start Date: April 2002
- End Date: October 2003
- Role: Oracle DBA/Architect
- Award: I received an award from Escolife IT Services (then known as Esconet Services Ltd.) for my dedication to work and excellent performance. I was the only engineer honored with this award within such a short period (4 months) of joining the company.
- Responsibilities: (1) Performance tuning of Oracle databases. (2) Working as a DBA with the development team, designing the database for data warehousing and OLTP applications.
- Projects: Please refer to Project 4 (www.escolife.com) and Project 5 (www.RakshaTPA.com) below.

Fourth Previous Company
- Company: Mindmill Software Ltd. (an ISO 9001 company), Delhi, India
- Website: www.mindmillsoftware.com
- Start Date: Dec 2000
- End Date: March 2002
- Role: Software Engineer and Basic DBA
- Responsibilities: Basic Oracle database administration and software development using Java, XML, WAP and WML.
- Projects: M-Banking, a WAP application developed for Bank of Punjab, through which users can access their bank accounts from a WAP-enabled cell phone. NOTE: I have removed the project details for this project.

Projects:

1) Airtel CRBT mobile database
This is a telecom project. CRBT (caller ring-back tone) plays an audio clip when the subscriber is called: when a subscriber who has the CRBT facility is called, the caller hears the audio clip set by the subscriber instead of the normal ring-back tone. The project uses an Oracle 9i database running on a Linux cluster server.
Duration: Jan 2006 till date.
Job activities performed till date:
- Writing shell scripts that automate user-managed hot backups and RMAN backups, which run during the night through crontab. After a successful backup, the backup is sent to a remote machine using FTP and old backups are deleted automatically.
- Automating maintenance activities during off-peak hours, including analyzing tables and indexes, exporting important tables, rebuilding indexes and generating MIS reports. Since this database handles more than 400,000 transactions per day, and roughly as many old records are deleted each day, we need to keep track of which indexes need to be rebuilt and which tables need to be moved.
These activities are scheduled through scripts that crontab runs at midnight.
- Determining the tables and indexes that need to be redesigned or partitioned, so that partition pruning prevents full table scans.
- Tuning the physical structure, which includes data files, redo log files etc., and the logical structure, which includes tables, indexes, views etc. Indexing should be balanced wisely: keep indexes small by using the COMPRESS option, and do NOT index every column that is used in a WHERE clause.
- Tuning the init.ora parameters, SGA, I/O etc.
- Migrating data from one database to another, and from one platform to another, using exports.
- Performing business calculations on data with shell scripts and storing the results in a flat file, which Oracle then reads as an external table so that the data is transferred into Oracle tables automatically.
- Troubleshooting production problems and writing scripts that automatically gather statistics at peak hours.
- Configuring and troubleshooting Oracle network issues, securing the listener with a password, and writing shell scripts that stop the password-protected listener while shutting down the database.

2) Teach Foundation website: www.teachfoundation.org.au
Duration: September 2004 - December 2004.
Responsibilities: Database administrator and developer for the database of this web application, which runs on a Linux server, and coding web applications using PHP, SQL and JavaScript. Administering and developing the internal database for this organization. The DBA activities here were limited because the database is small and its structure is quite simple.

3) Authoring the contents of the preparation kit for the OCP 1Z0-033 Performance Tuning exam
Duration: Jan 2004 - June 2004
I authored the contents of the preparation kit for the Oracle certification exam 1Z0-033 Performance Tuning, which is provided by www.whizlabs.com.
The article that supports this study material can be found at http://www.whizlabs.com/articles/1Z0-033-article.html

4) ESCOLIFE: www.escolife.com
Duration: Feb 2003 - Oct 2003
Responsibilities: Performance tuning DBA for a hybrid database (OLTP and data warehouse).
- Gathering baseline statistics for the database and instance, and then tuning them for best performance.
- Tuning the following components of the database and the instance: shared pool, database buffer cache, redo log buffer, Java pool and large pool.
- SQL tuning using TKPROF, AUTOTRACE, EXPLAIN PLAN, Statspack and top-SQL analysis.
- Tuning the sort area, shared server mode, checkpoints, and the activities of the database writer and its slaves; tuning the application to prevent full table scans, for best performance of the DB cache; resolving CPU and I/O contention; configuring the data files, temp files, redo log files and archived log files to minimize contention; rollback tuning for undo retention; analyzing the database, system and schemas and then rebuilding tables and indexes accordingly; fixing migrated and chained rows.

5) Raksha TPA: www.rakshatpa.com
Duration: May 2002 - Jan 2003.
Responsibilities: Working as a DBA with the development team, designing the hybrid database for OLTP and data warehousing from scratch through complete deployment.

Designing:

(i) Database logical design: creating the ER model, data dictionary and normalization.

(ii) Oracle physical design: creating the physical files, i.e. data files and redo log files, following the OFA (Optimal Flexible Architecture) guidelines.

(iii) Oracle logical design: creating tables, integrity constraints, indexes, materialized views, IOTs and clustered tables, all with the best possible values for parameters such as PCTFREE, PCTUSED, INITRANS and MAXTRANS; creating tablespaces with non-standard block sizes and storing the tables in them accordingly; coding complex SQL queries that join multiple tables efficiently; setting the optimizer mode and implementing plan stability using stored outlines.
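The idea of storing tables in tablespaces with non-standard block sizes can be illustrated with a small sketch that picks a block size in which a table's longest row fits without chaining. Assumptions (not from the original): a row chains when it is larger than a block's usable space, the block header and row directory overhead is approximated by a hypothetical fixed 100 bytes, and the candidates are the Oracle 9i block sizes of 2 KB to 32 KB (32 KB is platform-dependent).

```python
# Block sizes Oracle 9i allows for tablespaces; 32 KB is platform-dependent.
BLOCK_SIZES = (2048, 4096, 8192, 16384, 32768)

# Hypothetical fixed allowance for the block header and row directory.
# Real overhead varies with INITRANS and the number of rows per block.
HEADER_OVERHEAD_BYTES = 100


def smallest_block_without_chaining(max_row_len, overhead=HEADER_OVERHEAD_BYTES):
    """Return the smallest candidate block size whose usable space holds a row
    of max_row_len bytes, or None if the row would chain in every size."""
    for size in BLOCK_SIZES:
        if max_row_len <= size - overhead:
            return size
    return None


# Made-up row lengths, for illustration only.
for row_len in (1500, 3000, 8100, 40000):
    print(row_len, smallest_block_without_chaining(row_len))
```

The sketch deliberately ignores PCTFREE and row growth from later updates; those would push a table toward a larger block than this minimum.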
Development and implementation of database systems: configuring the library cache, dictionary cache, database buffer cache, redo log buffer, large pool, Java pool, checkpoints, I/O and background processes; creating the default and temporary tablespaces for users; creating roles and granting them to users.

Maintenance (ARCHIVELOG mode): configuring the archiving of the redo log files to multiple locations.

Backup and recovery planning: creating backup and recovery plans and implementing them in ARCHIVELOG mode.

ANNEXURE: Project Details

1) ESCOLIFE: www.escolife.com
Responsibilities: Performance tuning DBA for a hybrid database (OLTP and data warehouse).

Before starting the actual tuning process, I made a general tuning plan that could be used on any database, not just this project. I divided the plan into three categories:

(i) Initial checks that improve the architecture of the database.

(ii) Periodic checks, divided into monthly and six-monthly checks.

(iii) Daily checks: the checks the operational DBA should follow so that nothing goes wrong, including troubleshooting.

Following is a brief outline of the general-purpose methodology I used for tuning the performance of the databases and instance.

Check the alert log file as an initial step to trace any issue with database performance.

Determine the library cache hit ratio using the V$LIBRARYCACHE view or a Statspack report. This check is important when SQL takes long to execute; if there seem to be problems with library cache objects, set the parameters that improve library cache performance.

Check the data dictionary cache hit ratio using the V$ROWCACHE view or a Statspack report.

Check each database buffer cache using the V$BUFFER_POOL_STATISTICS view.
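The three hit-ratio checks above can be computed from rows already fetched from the views. This is a sketch under stated assumptions: fetching the rows (for example through a database driver) is left out, only the columns used are modeled, and no pass/fail thresholds are applied because none are given here. The formulas are the conventional ones: SUM(PINHITS)/SUM(PINS) and SUM(RELOADS)/SUM(PINS) for V$LIBRARYCACHE, SUM(GETMISSES)/SUM(GETS) for V$ROWCACHE, and 1 - PHYSICAL_READS/(DB_BLOCK_GETS + CONSISTENT_GETS) per pool for V$BUFFER_POOL_STATISTICS.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class LibraryCacheRow:  # subset of V$LIBRARYCACHE columns
    namespace: str
    pins: int
    pinhits: int
    reloads: int


@dataclass
class RowCacheRow:  # subset of V$ROWCACHE columns
    parameter: str
    gets: int
    getmisses: int


@dataclass
class BufferPoolRow:  # subset of V$BUFFER_POOL_STATISTICS columns
    name: str
    db_block_gets: int
    consistent_gets: int
    physical_reads: int


def library_cache_ratios(rows: List[LibraryCacheRow]) -> Tuple[float, float]:
    """(pin hit ratio, reload ratio) summed over all namespaces."""
    pins = sum(r.pins for r in rows)
    if pins == 0:
        return 0.0, 0.0
    return sum(r.pinhits for r in rows) / pins, sum(r.reloads for r in rows) / pins


def dictionary_cache_miss_ratio(rows: List[RowCacheRow]) -> float:
    """SUM(GETMISSES) / SUM(GETS) over all dictionary cache entries."""
    gets = sum(r.gets for r in rows)
    return sum(r.getmisses for r in rows) / gets if gets else 0.0


def buffer_pool_hit_ratios(rows: List[BufferPoolRow]) -> Dict[str, Optional[float]]:
    """Hit ratio per buffer pool; None for a pool with no logical reads yet."""
    ratios: Dict[str, Optional[float]] = {}
    for r in rows:
        logical_reads = r.db_block_gets + r.consistent_gets
        ratios[r.name] = 1 - r.physical_reads / logical_reads if logical_reads else None
    return ratios


# Made-up sample figures, for illustration only.
lib = [LibraryCacheRow("SQL AREA", 1000, 950, 5),
       LibraryCacheRow("TABLE/PROCEDURE", 1000, 990, 1)]
dc = [RowCacheRow("dc_objects", 1000, 50), RowCacheRow("dc_users", 1000, 10)]
pools = [BufferPoolRow("DEFAULT", 400, 600, 100), BufferPoolRow("KEEP", 0, 0, 0)]
print(library_cache_ratios(lib))
print(dictionary_cache_miss_ratio(dc))
print(buffer_pool_hit_ratios(pools))
```

Because the view counters are cumulative since instance startup, comparing two snapshots (as Statspack does) usually says more than a single reading.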
Obtain performance advice for the buffer caches from the V$DB_CACHE_ADVICE view by setting the DB_CACHE_ADVICE parameter. Analyze each object so that it is cached in the right buffer pool, i.e. KEEP, DEFAULT or RECYCLE. This step takes a long time to complete, but most memory issues are solved once it is completed successfully.

Determine the indexes that need to be rebuilt by checking their BLEVEL and the space occupied by deleted rows; similarly, consider moving a table that has too many migrated rows. To eliminate row chaining, create a tablespace with larger blocks and move the tables that have chained rows into it.

Regularly check tables and indexes for their size and response time, and consider striping and partitioning for tables or indexes whose size is causing performance to decline.

Allocate extents to tables manually during off-peak hours to avoid dynamic extent allocation during busy periods.

Export a table or index after any unrecoverable (NOLOGGING) operation has been performed on it.

Size the large pool for the shared server environment using the 'session uga memory max' statistic.

Identify bottlenecks in the archiving system, i.e. checkpoint frequency, log file size, number of log groups, ARCn processes, DBWn processes and the speed of the archive destinations. Check the alert log file for "Checkpoint not complete" messages; in such cases the checkpoint may need tuning: use multiple DBWn processes instead of database writer slaves if the operating system supports asynchronous I/O, tune the checkpoint frequency, or increase the number of log groups.

Check the V$FILESTAT view to identify the files that cause excessive I/O and the files whose I/O takes longer than others.

Check SQL statistics using TKPROF or Statspack reports, and identify the SQL statements that need tuning because they cause excessive physical reads, or because they are re-parsed when this could be avoided by making them generic.
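The index-rebuild check described above can be sketched as a filter over per-index statistics. Assumptions: BLEVEL comes from DBA_INDEXES, LF_ROWS_LEN and DEL_LF_ROWS_LEN come from INDEX_STATS after ANALYZE INDEX ... VALIDATE STRUCTURE, and the thresholds (BLEVEL above 3, more than 20% of leaf-row space held by deleted entries) are hypothetical rules of thumb rather than values taken from this methodology. The index names in the sample are also hypothetical.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical thresholds: no specific values are stated in the methodology.
MAX_BLEVEL = 3
MAX_DELETED_PCT = 20.0


@dataclass
class IndexInfo:
    name: str
    blevel: int           # DBA_INDEXES.BLEVEL
    lf_rows_len: int      # INDEX_STATS.LF_ROWS_LEN, bytes of leaf rows
    del_lf_rows_len: int  # INDEX_STATS.DEL_LF_ROWS_LEN, bytes of deleted leaf rows


def deleted_pct(ix: IndexInfo) -> float:
    """Share of leaf-row space that still belongs to deleted entries, in percent."""
    return 100.0 * ix.del_lf_rows_len / ix.lf_rows_len if ix.lf_rows_len else 0.0


def rebuild_candidates(indexes: List[IndexInfo],
                       max_blevel: int = MAX_BLEVEL,
                       max_deleted_pct: float = MAX_DELETED_PCT) -> List[str]:
    """Names of indexes that are too deep or carry too much deleted space."""
    return [ix.name for ix in indexes
            if ix.blevel > max_blevel or deleted_pct(ix) > max_deleted_pct]


# Made-up statistics for three hypothetical indexes.
sample = [
    IndexInfo("IX_CALLS_DATE", 2, 1_000_000, 100_000),  # 10% deleted: keep
    IndexInfo("IX_SUBSCRIBER", 4, 1_000_000, 0),        # BLEVEL 4: rebuild
    IndexInfo("IX_CLIP_ID", 1, 1_000_000, 300_000),     # 30% deleted: rebuild
]
print(rebuild_candidates(sample))
```

Note that ANALYZE ... VALIDATE STRUCTURE locks the index while it runs, which is one reason such checks are usually scheduled off-peak.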
Use EXPLAIN PLAN or AUTOTRACE on those SQL statements to identify the modifications required.

Configure the CBO. Since the CBO evaluates I/O cost, CPU cost and sort space, gathering system statistics is a must for the best results from the CBO. Consider creating histograms when analyzing tables whose data is skewed.

Determine the optimal size of the undo tablespace by checking the V$UNDOSTAT view.

Check the number and volume of full table scans occurring in the database and set DB_FILE_MULTIBLOCK_READ_COUNT accordingly.

2) Raksha TPA
Responsibilities: Working as a DBA with the development team, designing the architecture of the hybrid database for OLTP and data warehousing from scratch through complete deployment. Following is a brief description of the guidelines I outlined for this project.

Database Design

Physical Design: The following guidelines were outlined for designing the physical structure.
- Store datafiles on multiple disks; in particular, store a table's datafile and the datafile of its index on separate disks, so that there is no I/O contention when the table and its index are accessed together.
- Separate each application's data by tablespace.
- Implement tablespace striping, so that the tablespace of a large table uses multiple datafiles on multiple independent disks. RAID and OS-level striping may be considered, but the best way to stripe table data is manually: store the datafiles on disks attached to separate controllers and then manually allocate extents in those datafiles.
- Devote the SYSTEM tablespace to the data dictionary and PL/SQL objects only; no other data should be stored in the SYSTEM datafile.
- Assign a default data tablespace to each user so that no user stores objects in the SYSTEM tablespace.
- Create a default temporary tablespace for the database, so that every user uses it, rather than the SYSTEM tablespace, as their temporary tablespace. Store the tempfile of the temporary tablespace on a separate disk with no other files on it, because the temporary tablespace is the most volatile and therefore the most fragmented.
- Use automatic undo management and store the undo tablespace datafile on an independent disk.
- Multiplex the redo log files and store each member of a group on a different disk attached to a different controller. Do not use RAID devices for the log files: the overhead of managing RAID makes them slow, whereas redo logs should be stored on very high-speed devices. Do not store log files with any other files, especially not with the datafiles, for two reasons: the redo log files are written sequentially whereas the datafiles are accessed randomly, and during a checkpoint both the redo log files and the datafiles are updated at the same time, which may cause severe contention if they share a controller.
- Multiplex the control files in at least 2 locations; control files may go on the disks that contain datafiles. If the control file is used as the RMAN repository, consider 3 to 5 multiplexed copies.
- Configure multiple archiving destinations, preferably with one on a remote server that can run a standby database. Do not store archived logs with the datafiles or redo log files, because of contention at checkpoints.
- To simplify database management, use OMF by setting DB_CREATE_FILE_DEST for datafiles and DB_CREATE_ONLINE_LOG_DEST_n for multiplexed online redo log files and control files.
- Set BACKGROUND_DUMP_DEST, USER_DUMP_DEST and CORE_DUMP_DEST for the trace files and the alert log file.
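The undo tablespace sizing mentioned in these guidelines (and the V$UNDOSTAT check in the ESCOLIFE methodology) is commonly estimated as UNDO_RETENTION × peak undo blocks per second × DB_BLOCK_SIZE, with the undo rate taken from the UNDOBLKS, BEGIN_TIME and END_TIME columns of V$UNDOSTAT. The sketch below applies that estimate to rows already fetched from the view; it adds no safety margin, and the sample figures are made up.

```python
import math
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List


@dataclass
class UndoStatRow:  # subset of V$UNDOSTAT columns
    begin_time: datetime
    end_time: datetime
    undoblks: int  # undo blocks consumed during the interval


def peak_undo_blocks_per_second(rows: List[UndoStatRow]) -> float:
    """Highest UNDOBLKS / interval length (in seconds) over all intervals."""
    rates = [
        r.undoblks / (r.end_time - r.begin_time).total_seconds()
        for r in rows
        if r.end_time > r.begin_time
    ]
    return max(rates, default=0.0)


def undo_tablespace_bytes(rows: List[UndoStatRow], undo_retention_s: int,
                          db_block_size: int) -> int:
    """UNDO_RETENTION * peak undo blocks per second * DB_BLOCK_SIZE, rounded up."""
    return math.ceil(undo_retention_s * peak_undo_blocks_per_second(rows) * db_block_size)


# Two made-up 10-minute intervals consuming 1,200 and 600 undo blocks.
t0 = datetime(2003, 5, 1, 9, 0)
stats = [
    UndoStatRow(t0, t0 + timedelta(minutes=10), 1200),
    UndoStatRow(t0 + timedelta(minutes=10), t0 + timedelta(minutes=20), 600),
]
size = undo_tablespace_bytes(stats, undo_retention_s=900, db_block_size=8192)
print(size, "bytes, about", round(size / 1024 ** 2, 1), "MB")
```

Using the peak rate rather than the average keeps long-running queries covered during the busiest interval, at the cost of a larger tablespace.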
Logical Design: The logical structure included configuring the tablespaces with appropriate parameters, creating segments, extent management and block-level configuration. Some logical structures, such as block-level parameters, require a thorough understanding of the nature of the data that will be stored in the blocks and an estimate of how that data will evolve. Following are the guidelines for designing the logical structure of a database.
- Design the default, undo and temporary tablespaces with appropriate storage settings and options.
- Create the tablespaces for OLTP and data warehousing with different block sizes, using the multiple block size feature of Oracle 9i: the data warehousing tablespaces should have large blocks and the OLTP tablespaces smaller blocks.
- Configure each table with appropriate values for PCTFREE, PCTUSED, INITRANS, MAXTRANS and BUFFER_POOL. Consider caching lookup tables (CACHE), and give them a higher INITRANS value.

Considerations for Data Warehousing: Data warehousing objects constitute the ETL objects. The easiest way to design ETL objects and processes is with an ETL tool such as Oracle Warehouse Builder (OWB); although I have not used an ETL tool, I have built ETL objects manually. The following are good candidates for ETL.

(i) Extraction can be carried out using:
- Flat files created with SQL*Plus statements.
- An export of the objects using the Oracle Export utility.
- CREATE TABLE AS SELECT.
- Transportable tablespaces.

I have worked extensively with CREATE TABLE AS SELECT and with exporting database objects.

(ii) Transportation can be done as follows:
- SQL*Loader can load data from the flat files into the destination database. Flat files are very useful when the source and target databases run on different platforms.
- The export dump file can be transported to the destination database with a simple import, and some data manipulation can be implemented during the import.
- CREATE TABLE AS SELECT transports the table directly in one step, and some data manipulation can be done with SQL functions along the way.
- Transportable tablespaces are moved by using the Import utility to transport the tablespace metadata and copying the physical datafiles to the target database. In Oracle 9i this method cannot be used when the source and target databases run on different platforms, because it involves copying the physical OS files.

(iii) Loading and transformation can be done either in multiple stages or in a pipeline. Loading can be achieved using SQL*Loader, external tables or the Import utility. Transformation can be done using SQL statements, PL/SQL procedures or functions, or table functions.

General considerations for data warehouse systems:
- Use a large block size and set DB_FILE_MULTIBLOCK_READ_COUNT to a high value; this speeds up full table scans because the database reads more blocks in a single I/O, so fewer I/Os are needed to scan the whole table.
- Indexes may not help much, because a data warehouse generally performs full table scans, which do not benefit from indexes.
- Bitmap indexes were considered in some situations on low-cardinality columns.
- In table design, set PCTFREE very low or to zero, because rows in these systems are not updated.
- Use materialized views to capture data from the OLTP systems and store it in a preformatted form in the data warehouse; this improves performance dramatically.
- Partition large tables and indexes.
- Stripe tables over multiple disks.
- Parallel queries can dramatically reduce the response time for very large tables.
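The full-table-scan argument above can be made concrete with simple arithmetic: scanning N blocks takes about ceil(N / MBRC) multiblock reads, where MBRC is the effective DB_FILE_MULTIBLOCK_READ_COUNT. The sketch also caps MBRC so that one read does not exceed an assumed maximum I/O size for the platform (the 1 MB value is hypothetical). It ignores extent boundaries and blocks already in the buffer cache, both of which change the real count.

```python
import math

MAX_IO_BYTES = 1024 * 1024  # hypothetical platform maximum I/O size


def effective_mbrc(requested_mbrc: int, db_block_size: int,
                   max_io_bytes: int = MAX_IO_BYTES) -> int:
    """Blocks per multiblock read once a single read is capped at max_io_bytes."""
    return max(1, min(requested_mbrc, max_io_bytes // db_block_size))


def full_scan_reads(table_blocks: int, mbrc: int) -> int:
    """Multiblock reads needed to scan table_blocks blocks, mbrc blocks at a time."""
    return math.ceil(table_blocks / mbrc)


# A made-up 100,000-block table in an 8 KB-block tablespace.
for requested in (8, 32, 128):
    mbrc = effective_mbrc(requested, 8192)
    print(f"MBRC {mbrc:>3}: {full_scan_reads(100_000, mbrc):>6} reads")
```

Fewer, larger reads is the benefit for data warehouse scans; the same high setting also makes the CBO cost full scans as cheaper, which is why it suits warehouse tablespaces better than OLTP.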
Considerations for OLTP:
- Plan indexes very carefully in OLTP systems, because excessive indexing unnecessarily slows down DML statements; a balance must be kept between query response time and DML performance.
- Avoid bitmap indexes; use B-tree indexes, and use reverse key indexes on columns that hold sequential values.
- Consider index-organized tables for lookup tables searched by primary key, because only one structure has to be accessed, compared with two accesses (the B-tree index, then the table) for a conventional index and table.
- Consider hash clusters for tables that are always accessed together using equijoins but seldom accessed on their own; these tables should also not be updated frequently.
- Use generic code in OLTP; the use of bind variables is encouraged.
- Implement constraints on the columns that require integrity. Deferrable constraints may be useful for transaction-level integrity.

Development and implementation of database systems
The development of the database consisted of creating the physical and logical structures according to the design created in the design phase, and providing the SQL developers with a list of dos and don'ts.

Configuring the Instance: Size the SGA by configuring the shared pool, database buffer cache, log buffer, Java pool and large pool. Configure each of the background processes. Use multiple DBWn processes for high-I/O systems. Use database writer slaves to simulate asynchronous I/O on systems that support only synchronous I/O. DBWR slaves will not help if there are free buffer waits in the system; in that case more DBWn processes are needed to manage the LRU and dirty lists, which only the DBWn processes (not the slaves) do.
Configure the checkpoint frequency using the FAST_START_MTTR_TARGET parameter: checkpoints should happen neither so often that they degrade runtime performance nor so rarely that instance recovery takes too long. Also size the log files to influence checkpoint activity. For scalability, implement a shared server environment if the number of users is very high, transactions are short and small, and the maximum number of processes is reached often; for further scalability, implement connection pooling and Connection Manager.

Maintenance
ARCHIVELOG mode: Run the database in ARCHIVELOG mode, preferably with automatic archiving enabled. Set at least 2 archive log destinations, one of them a remote destination that ships the logs to a standby database acting as a backup database server. Set the number of archiver processes at least equal to the number of archive destinations. Do not place archive log destinations on the same disk as the log files, both because of contention and to avoid a single point of failure.

Backup and Recovery Planning: Plan the backups so that a full backup is taken once or twice a week, depending on the criticality of the database, with an incremental backup every day. The incremental backup is useful because it includes unrecoverable (NOLOGGING) changes made to the database, which the archived logs do not, and Oracle prefers incremental backups over archived logs when recovering a database. The recovery plan should list every possible kind of disaster, with a clear documented procedure to follow for each. Nothing should be left to experimentation during a disaster: every disaster should be simulated, and the recovery plan should document the activities for each one in detail.
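The backup plan above can be sketched for the case of one full backup a week. Assumptions (not from the original): an RMAN incremental level 0 backup serves as the weekly full backup, because an RMAN full backup cannot be the parent of later incremental backups; the level 0 runs on Sunday night (a hypothetical choice) and differential level 1 backups run on the other nights; and a restore uses the latest level 0 plus every level 1 taken after it, after which archived logs roll the database forward past the last backup.

```python
from datetime import date, timedelta
from typing import List, Tuple

LEVEL0_WEEKDAY = 6  # Sunday, per date.weekday() (Monday == 0); hypothetical choice


def backup_level(day: date) -> int:
    """0 on the weekly level 0 (full) backup night, otherwise 1 (differential)."""
    return 0 if day.weekday() == LEVEL0_WEEKDAY else 1


def restore_chain(last_backup_day: date) -> List[Tuple[date, int]]:
    """Backups applied on restore, oldest first: the latest level 0 up to
    last_backup_day, followed by every differential level 1 taken after it."""
    chain = []
    day = last_backup_day
    while True:
        chain.append((day, backup_level(day)))
        if backup_level(day) == 0:
            break
        day -= timedelta(days=1)
    chain.reverse()
    return chain


# Failure on Thursday 5 January 2006, after Wednesday night's backup completed.
for day, level in restore_chain(date(2006, 1, 4)):
    print(day.isoformat(), "level", level)
```

With cumulative level 1 backups instead of differential ones, only the level 0 and the most recent level 1 would be needed, at the cost of larger nightly backups.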
