

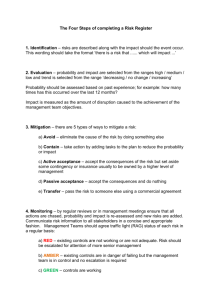

USING THE TECHNOLOGY ACCEPTANCE MODEL IN PREDICTING ACCEPTANCE OF IMPOSED TECHNOLOGY: A FIELD STUDY

Maureen Francis Mascha*
Department of Accounting
Marquette University
Milwaukee, WI 53201
Maureen.mascha@mu.edu
(414) 288-0668

Monica Adya
Department of Management
Marquette University
Milwaukee, WI 53201
Monica.adya@mu.edu
(414) 288-7526

*Corresponding author

Abstract

This paper extends an area of information systems into an AIS context. We describe a well-accepted model in information systems, the Technology Acceptance Model (TAM), and examine whether or not the TAM is appropriate in determining acceptance of new technology where use is not optional (i.e., mandatory). The TAM posits that intention to use new technology is shaped by its perceived usefulness and the perceived ease of use of the technology. We describe the use of this instrument in predicting acceptance of an imposed technology, a web-enabled student registration system, as well as the effects of its use on acceptance, employing a web-based survey. Results collected from 1,521 respondents indicate that, as expected, perceived usefulness predicts acceptance, but contrary to the model, perceived ease of use does not predict acceptance. New to the literature are the findings that trial time (time spent using the new technology) and class year (i.e., freshman, sophomore, etc.) significantly affect subjects’ attitudes towards acceptance. Specifically, as trial time increases, perceived ease of use declines, and as class year increases (e.g., freshman to senior), perceptions of usefulness and ease of use also decline. These findings suggest that attitude towards new technology is a function of use as well as familiarity with the prior system. Together, these imply that acceptance of new technology is more complex than originally proposed by the TAM and highlight the need for additional research on the longer-term effects of new technology on users’ attitudes and acceptance.

I. INTRODUCTION

As advances in information systems (IS) have changed the way people conduct professional and personal lives, the study of user acceptance and willingness to use these systems has gained interest. Studies in IS have consistently indicated that positive user attitude towards an information system is critical to its success. User acceptance seems to be influenced by a system’s perceived ease of use and usefulness (Davis 1989; Szajna, 1994; Venkatesh and Davis 2000; Venkatesh, et al 2002); perceived voluntariness of usage (Agarwal and Prasad, 1997); and beliefs about the technology (Moore and Benbasat, 1991), among others. User acceptance of technologies can have a strong impact on an organization’s success at achieving the standards of performance and return on investments through new technological investments (Al Gahtani and King, 1999; Lucas and Spitler, 1999). Many factors, such as characteristics and usefulness of the technology and attitudes of other users, have been found to shape such attitudes.

One tool that attempts to model the role of user attitude towards new technology is the Technology Acceptance Model (TAM). Originally proposed by Davis (1989), this simple yet powerful model suggests that the perceived usefulness (PU) and perceived ease of use (PEOU) of a new technology are fundamental determinants of its acceptance. PU is explained as a user’s assessment of his/her “subjective probability that using a specific application system will increase his or her job performance within an organizational context” (Davis 1989). PEOU is described as the “degree to which the user expects the target system to be free of effort” (Davis 1989). Figure 1 illustrates the TAM and its constructs.

<FIGURE 1 about here>

Influenced by Ajzen and Fishbein’s (1980) Theory of Reasoned Action (TRA), TAM constructs have demonstrated theoretical and psychometric support based on an extremely large body of literature in IS.
Significant research effort has been devoted to establishing the validity and reliability of the constructs originally proposed by Davis (1989). Adams, et al (1992) was one of the earliest studies to replicate the original work and demonstrate its consistency in two different settings using multiple samples. Other replication and extension efforts include those by Szajna (1994), Chau (1996), Chau and Hu (2002), and Dasgupta, et al (2002). The model has been tested in multiple domains such as Internet marketing and online consumer behavior (Koufaris, 2002), intranet use (Horton, et al 2001), medicine (Hu, et al 1999), distance education (Lee, et al 2003), and outsourcing decisions (Benamati and Rajkumar, 2002), among many others. Most of these studies have effectively demonstrated the ability of this model to explain much of user acceptance of technologies, making TAM one of the most influential models in information systems.

While the TAM is certainly robust, questions remain as to whether or not it is an able predictor of user acceptance when use of new technology is mandatory, especially if the predecessor technology is withdrawn and users are left with no alternative. At issue here is not whether new technology will be used, but rather whether new technology will be accepted, recognizing that the former may occur absent the latter. This is an important concern, since acceptance of new technology is often a necessary precondition if the full benefits of the new technology, including return on investment, are to be realized. Since new technology is often mandated in accounting settings (e.g., enterprise systems, general ledger systems, etc.), this is an important issue for system designers as well as management charged with implementing these systems.

A separate but equally important question concerns the ability of TAM in predicting PU and PEOU over a period of time.
If familiarity breeds acceptance, then more use of new technology should increase the effects of PU and PEOU on acceptance. However, if increased use leads to discovery of problems or glitches, increased use could lead to a diminished effect of PU and PEOU on acceptance. Since the one prior study that investigated the effects of prior exposure on PU and PEOU toward acceptance measured prior exposure as a dichotomous, not continuous, variable (Taylor and Todd 1995), we specifically address the issue here by measuring prior exposure in terms of number of times subjects used the new system. This is particularly salient since acceptance is a function of PU and PEOU, which can change over time, changing attitudes from “acceptable” to “unacceptable”.

We examine these questions in a field study consisting of two on-site surveys. These surveys investigate the effect of PU and PEOU on acceptance of a web-based registration system whose use was mandated (i.e., the prior system was withdrawn). We measure acceptance as the degree to which subjects perceive the new technology to be more useful than and/or easier to use than its predecessor. If the TAM is able to predict acceptance when technology is imposed, particularly where no alternative exists, and/or can provide guidance on the effect of use over time on acceptance, then the model’s relevance to applied research increases significantly.

The remainder of this paper is organized as follows. Section II discusses the literature and develops research questions; Section III describes the research methodology; Section IV presents the results; and finally, Section V summarizes the findings, suggests future areas for research, and addresses the limitations.

II. THE TECHNOLOGY ACCEPTANCE MODEL

In its simplest form, the TAM (Davis 1989) proposes that user acceptance of new technology is a function of two factors: perceived usefulness (PU) and perceived ease of use (PEOU).
The TAM proposes that acceptance of new technology, defined very broadly, is a function of how useful the subject finds the technology to be as well as how easy it is to use. Referring to the model’s depiction in Figure 1, the reader should notice two points: first, PU directly affects behavioral intent, defined as expected or anticipated usage, and second, PEOU is a function of PU. That is, PEOU in and of itself does not affect usage intent. Simply stated, if new technology is not first perceived to be useful, then the fact that it is easy to use does not sway acceptance.

Prior studies that have examined the TAM in a multitude of settings, using many different subjects and technologies, suggest that the TAM provides an easy and relatively quick method for determining acceptance of new technology in many different settings. Indeed, one study reports the use of the TAM in predicting user acceptance of new technology based on exposure only to the system prototype. (See Lee, et al (2003) and Ma and Liu (2004) for meta-analyses of studies using the TAM.) The flexibility from requiring minimal exposure to the technology in question is so important that Davis (1989) claims it to be the largest benefit of using the TAM.

Three important issues regarding TAM need to be stressed. The first is that acceptance is proxied by respondents’ anticipated use; the greater the anticipated use, the greater the acceptance. This can be problematic given the wide gap between expected and actual behavior. Only one study to date has explored the difference between expected and actual usage of new technology. That research found that respondents significantly overstated their expected usage when compared with their actual use (Szajna 1996).
This study is all the more telling as actual use was not self-reported by the participants, but rather measured directly by the researcher, suggesting that expected use may not be an effective proxy for actual use in all situations.

The second issue concerns the effect of usage over time (referred to as prior exposure) on user acceptance. To date, only two papers have examined the effect of prior exposure on acceptance (Venkatesh and Davis 2000; Taylor and Todd 1995). Interestingly, Taylor and Todd find that prior exposure leads to increased reliance on PU in predicting acceptance. Their study measured prior exposure as either present (at least one prior encounter) or absent (no prior exposure). The authors fail to report the range of prior exposures, so it is difficult to infer if their pattern of results holds when exposure increases. Venkatesh and Davis (2000) examined the role of prior exposure on user acceptance over a three-month period. This study notes that the effect of PU and PEOU on users’ attitudes did change over that period. Subjects’ attitudes were measured at three points: pre-implementation, and at one-month and three-month intervals subsequent to implementation. While Venkatesh and Davis investigate the effect of prior exposure on acceptance, their results were based on three data points using a model adapted from, although different from, TAM. As a result, the question regarding the ability of TAM in predicting acceptance over a period of time remains unanswered.

Finally, the third issue addresses the question of whether mandatory usage affects acceptance of new technology. Here again, Venkatesh and Davis (2000) report no difference in acceptance between voluntary and mandatory settings. It is difficult to use their study in drawing conclusions, however, since they employed a different model and base their findings on responses from 43 subjects.
In summary, while prior research provides support for the TAM in a variety of settings, issues related to prior exposure and conditions surrounding use (i.e., mandatory versus voluntary) remain unanswered.

Research Questions

Based on the preceding discussion, it seems reasonable to inquire whether or not the TAM accurately predicts the PU and PEOU of a new technology over its predecessor technology when use is mandatory. A related inquiry concerns the effect that usage, or prior exposure, has on attitude. Finally, a tertiary issue concerns the effect, if any, that gender plays in predicting acceptance. While most studies are silent as to the effect of gender, one study (Gefen and Straub 1997) did specifically investigate its role. This study reports that gender significantly affects perception of but not use of technology, suggesting that gender should at least be included in any models measuring acceptance of technology. Since there is no body of literature to guide directional hypotheses, the following research questions are proposed.

RQ1: Does the TAM predict users’ perceptions of whether or not the new technology is more useful and/or easier than the former system?
RQ2: Will attitude toward the new system change with use?
RQ3: Does gender affect the PU and PEOU of a new technology over its predecessor?

III. RESEARCH METHODOLOGY

The CheckMarq™ registration system was launched in March of 2004, in time for Fall 2004 registration at a large, private midwestern university. The new system replaced a predominantly manual, telephone-response system. CheckMarq™ consists of various software tools, including on-line registration availability, and is accessed via a web portal. The on-line registration feature was the object of our study since all returning students, graduate as well as undergraduate, were required to use this system for Fall 2004 registration.
The registration portal provided students access to course catalogs, course offerings, registration information, and schedule maintenance. Measuring student attitudes towards using CheckMarq™ would yield no new information given its mandatory use, but TAM constructs could easily be adapted for measuring student perceptions of the new system as compared to the previous one. Such an assessment is critical for assessing the third criterion for successful project management: delivering a quality product that meets the needs of the user.

Survey Design and Administration

We used a multi-method approach to examine acceptance of the web registration system. The TAM instrument as provided in Davis, et al (1989) was modified for CheckMarq™ and served as the main source of empirical data. This instrument was administered via the web to all 11,000+ students at the university. Additionally, a second, open-ended questionnaire soliciting strengths and weaknesses of CheckMarq™ was administered to a select group of undergraduate business students enrolled in a core course taught by one of the authors.

The instrument containing TAM constructs consisted of 31 items, measured on a five-point scale anchored by “strongly disagree” and “strongly agree”. This survey was made available to all students between mid-April and early May. This period immediately followed Fall 2004 registration. The intent was to capture student perceptions as close as possible to actual use. The original instrument provided in Davis (1989) was modified for our purposes. We retained all the items from the original study but added six more questions related to population demographics and assessment of student perceptions of the old system versus the CheckMarq™ system. Adding items required by the domain has frequently been done in the past with no significant effect on PU or PEOU. (See Ma and Liu 2004 for a detailed review.)
Appendix 1 provides the instrument that was used and how our questions map to the TAM instrument. There were several reasons for using this web-based administration:

• The web was the most cost-effective method of reaching the student population.
• The survey could be actively promoted via Student Commons, one of the most visited sites on the student portal, and via e-mail communications.
• Links could be provided in all electronic promotional material to provide easy access to the instrument.
• The software used virtually guaranteed that only surveys with all questions completed would be accepted. If a student left a question blank, the software would immediately inform the student of this error.
• The survey could be accessed from anywhere the student had web access, making completion accessible and easy.

Promotion of the instrument was critical in obtaining a reasonable sample size. To administer the instrument we solicited the assistance of the Office of Public Affairs. This office had been involved in campus-wide communications regarding CheckMarq™ from the early years of the project, and its role in surveying student acceptance fell naturally into place. An announcement for the CheckMarq™ survey was posted at the Student Commons site and sent out via e-mail in mid-April. A reminder was sent out via e-mail two weeks later. The survey was withdrawn from the website after the first week of May, for a total on-line period of six weeks. Acceptance was measured here with two variables: perception of whether or not CheckMarq™ was more useful than and easier to use than its predecessor.

Students electing to respond to the web survey were asked to enter their unique student ID. Subjects were promised confidentiality; no effort was to be made connecting their ID to their responses. The ID was collected for two reasons: first, to detect any duplicate responses, and second, to identify raffle winners.
As an inducement to participate, students who completed the survey were automatically entered in a raffle. The prize was one of two $100 gift certificates to a local store. This had been communicated to them via e-mail and when they logged onto the survey site. Winners were randomly selected by Student Services and notified of the prize after the survey was withdrawn.

The second survey was designed to elicit specific feedback regarding users’ perceived strengths and weaknesses of CheckMarq™. The subject pool consisted of students enrolled in a core business course in the College of Business. Students were asked to list the top three strengths or weaknesses of using CheckMarq™. This request came in the time period immediately following registration. Because of the confidentiality associated with the online survey, no effort was made to identify any “dual respondents”; therefore, it is entirely possible that respondents to the first survey could also have responded to the second survey.

IV. RESULTS

A total of 1,521 subjects responded to survey one. This represents an overall response rate of 13.6%. Table 1 provides a breakdown of total enrolments versus respondents by gender and status for the University. In general, respondents found CheckMarq™ to be both useful and easy to use. A total of 39 subjects responded to survey two. The results from this survey are incorporated into the discussion of results.

<TABLE 1 about here>

Preliminary Analyses

Correlation analyses performed on the variables indicate no significant correlation for gender, work experience, or technological experience with either of the two dependent variables: more useful than and easier to use than the previous system. However, trial time, measured as the extent to which respondents had used CheckMarq™, and status (the year in school measured on a 1-5 scale, with 1 being graduate student, 2 being senior, etc.)
were both significantly correlated with the dependent variables, “more useful” and “easier to use”. Trial time could have a value from “1” (not used prior to registration) to “4”, representing prior use of forty-five minutes or more. Prior to use for registration, students were allowed, in fact encouraged, to become acquainted with CheckMarq™, and a non-production version of the system was made available. The Information Technology Department, responsible for implementing CheckMarq™, informed us that the trial version was exactly the same as the production version, the only difference being that the production version created an actual registration record. Both trial time and status were retained as potential covariates in the analyses that follow.

Factor analyses were performed to determine whether the instrument measuring PU and PEOU had factorial validity (Davis 1989). Results generated using the maximum likelihood method with oblique rotation suggest that the variables loaded on two factors in the direction predicted by the TAM and reported by Davis (1989), with the exception of the variables cumbersome and closure (closure was designed to measure how well CheckMarq™ informed users of where they were in the registration process). Because of the lack of discriminant ability of these variables, cumbersome and closure were excluded from further analyses. However, even with these variables, goodness of fit was .9275, suggesting that two factors adequately described the underlying data pattern. Table 2 displays the variables and the factors on which each loaded.

ANOVA and Regression Analysis

Based on the output from the factor analyses, ANOVAs and regression analyses were performed to determine the exact effect of each factor (PU and PEOU) on the dependent variables, more useful than and easier than. (The variables captured subjects’ attitudes comparing CheckMarq™ to the prior system.)
Since an important tenet of the TAM concerns the roles that PU and PEOU play in affecting acceptance, separate regressions were performed examining the effect of each factor on each of the dependent variables.

The first set of analyses focused on the “more useful than” dependent variable. The first model regressed “more useful than” on perceived usefulness (PU) alone. (Model: more useful than = PU + error.) (Table 3, panels A, B, and C, detail these statistics.) Results indicate an R squared of .69628 and significance of .0001 for the PU factor. Next, the second factor, PEOU, was added. The purpose of adding PEOU was to determine the effect of PEOU while controlling for PU (PU.PEOU in statistical terms, Davis 1989). This addition marginally increased the R squared to .696313; however, only the PU factor was significant (p < .0001); the PEOU factor was not significant (p = .6854). Finally, since trial time and status both correlated significantly with the dependent variable, they were added to the model without the PEOU factor (i.e., model: more useful than = PU + status + trial time + error), yielding an R squared of .699586. Interestingly, after adding the covariates, only PU and status were significant (p < .0001 and p = .0002, respectively); trial time was not significant (p = .1742).

<Insert Table 3 here>

The second set of analyses focused on the “easier than” dependent variable. Table 4, panels A, B, and C, display the statistics. When the dependent variable was regressed on the PEOU factor (easier than = PEOU + error), the model indicated an R squared of .170202 and p < .001 for PEOU; adding PU to the model while holding PEOU constant increased R squared to .682932. Results indicate that both factors were significant (p = .0085 and p < .0001, respectively). Finally, including the two covariates, trial time and status, increased R squared to .688697, but PEOU became non-significant (p = .1995).
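The fit statistics quoted above can be checked by hand from the sums of squares reported in Table 3 (Panels A and B) and Table 4 (Panel A). The following is a minimal sketch of that arithmetic, not the authors' SAS procedure; the function names are illustrative, and the partial F formula is the standard nested-model test, assumed here to correspond to the Type III F value reported for PEOU.

```python
# Values are copied from Table 3 (Panels A, B) and Table 4 (Panel A).
# Function names are illustrative and do not come from the paper.
N_RESPONDENTS = 1521


def r_squared(ss_model, ss_total):
    """Share of total variation explained by the model."""
    return ss_model / ss_total


def f_statistic(ss_model, df_model, ss_error, df_error):
    """Overall F value: model mean square over error mean square."""
    return (ss_model / df_model) / (ss_error / df_error)


def partial_f(r2_reduced, r2_full, n_obs, k_full, k_added=1):
    """Nested-model F test for the predictors added to the reduced model."""
    df_error = n_obs - k_full - 1
    return ((r2_full - r2_reduced) / k_added) / ((1.0 - r2_full) / df_error)


# "More useful than" = PU + error (Table 3, Panel A)
useful_r2 = r_squared(1638.227881, 2352.829717)
useful_f = f_statistic(1638.227881, 1, 714.601836, 1519)

# "Easier than" = PEOU + error (Table 4, Panel A)
easier_r2 = r_squared(436.724830, 2565.917160)
easier_f = f_statistic(436.724830, 1, 2129.192329, 1519)

# Effect of adding PEOU to the PU-only model (Panel A versus Panel B R squared)
peou_partial_f = partial_f(0.696280, 0.696313, N_RESPONDENTS, k_full=2)

print(f"more useful: R2={useful_r2:.6f}, F={useful_f:.2f}")
print(f"easier than: R2={easier_r2:.6f}, F={easier_f:.2f}")
print(f"partial F for PEOU given PU: {peou_partial_f:.3f}")
```

Because the R squared values are rounded to six decimals, the partial F recovered this way agrees with the reported F value for PEOU (0.16) only approximately; the point is that an increase in R squared of .000033 is far too small to register as a significant effect with 1,518 error degrees of freedom.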
Even more interesting is the fact that the coefficient for trial time was negative, suggesting that as use increased, PEOU decreased.

<Insert Table 4 here>

Research Questions

Research Question One inquires as to the effect of each factor (PU and PEOU) on acceptance. Analyses indicate that PU is significant in explaining the dependent variable more useful than, while PEOU is not significant in explaining either dependent variable. Indeed, results seem to highlight the dominance of the PU factor in predicting acceptance. Research Question Two inquires as to the effect of use over time on acceptance. This study finds that trial time, or prior exposure, has no effect on the “more useful than” dependent variable, but does have a significant negative effect in predicting the “easier than” dependent variable. Finally, Research Question Three inquires about the effect of gender. Results indicate that gender played no role in affecting either dependent variable.

V. DISCUSSION

In summary, this study finds that of the two factors proposed by the TAM, only PU predicts acceptance of new technology when use is mandatory. Our findings confirm that of the two factors, PU and PEOU, PU plays the dominant role in predicting acceptance. However, unlike previous studies examining TAM, PEOU is not significant in affecting acceptance after controlling for the covariates of usage and class year. Specifically, we note that class year affects attitude as to whether the new system is more useful and easier than its predecessor and that trial time affects attitude as to whether the new technology is easier than the former system.

This latter finding deserves further attention. In essence, we find that as class year increases (i.e., from freshman to senior), PU and PEOU decline, suggesting that familiarity with the prior system affects acceptance. This statement is made based on the positive coefficient for the variable “status”.
Freshman status was coded as “5,” and the code decreased as class year increased (i.e., sophomores were coded as “4,” juniors as “3,” etc.). It seems clear that as class status increased, acceptance decreased, since use of the prior system would have increased as class year increased (e.g., seniors would most likely have used the prior system more frequently than juniors, juniors more than sophomores, etc.). Thus, familiarity with the prior system, or at least use thereof, negatively contributed towards acceptance of the new system.

Analysis of responses to the second survey indicates possible explanations for the pattern of findings from the first survey. Overall, subjects agreed that CheckMarq™ was easier, faster, and more useful in terms of information offered. This pattern seems to support the perception that CheckMarq™ was more useful than its predecessor. However, subjects also felt that error messages were not descriptive enough, particularly those concerning closed classes, and that at times CheckMarq™ was tedious in the number of screen visits required to obtain certain information. These latter comments could explain why PEOU was not significant in predicting attitude, as respondents perceived key information to be difficult to obtain.

Taken together, these findings imply several things. Foremost, we note that the pattern of results is different for mandated versus voluntary use. Our findings, while providing partial support for the TAM, suggest that acceptance of new technology is more complex than originally proposed by TAM. Specifically, length of usage of the new system negatively affects subjects’ attitudes towards PU and PEOU. This is important since it implies even brief encounters with new technology can have a significant effect on acceptance.
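To make the direction of the status effect concrete, the sketch below plugs the parameter estimates from Table 3, Panel C into the fitted equation for “more useful than”. The status codes follow the coding described above, and the trial-time codes are assumed to follow the order of the response options in Appendix 1, item 26. The PU score of 30 is purely hypothetical (the scale of the PU factor score is not stated here), and the function name is illustrative.

```python
# Ordinal codes as described in the paper: status runs from 1 (graduate)
# to 5 (freshman); trial time runs from 1 (not at all) to 4 (more than
# 45 minutes). Codes 2 and 3 for trial time are assumed from item order.
STATUS_CODES = {
    "graduate": 1, "senior": 2, "junior": 3, "sophomore": 4, "freshman": 5,
}
TRIAL_TIME_CODES = {
    "not at all": 1,
    "less than 30 minutes": 2,
    "30-45 minutes": 3,
    "more than 45 minutes": 4,
}

# Parameter estimates reported in Table 3, Panel C.
INTERCEPT = -0.7053212785
B_PU = 0.1280587485
B_STATUS = 0.0768627957
B_TRIAL_TIME = -0.0302765371


def predicted_more_useful(pu_score, status, trial_time):
    """Fitted value of 'more useful than' = PU + status + trial time."""
    return (INTERCEPT
            + B_PU * pu_score
            + B_STATUS * STATUS_CODES[status]
            + B_TRIAL_TIME * TRIAL_TIME_CODES[trial_time])


HYPOTHETICAL_PU = 30.0  # assumption for illustration only, not from the data

freshman = predicted_more_useful(HYPOTHETICAL_PU, "freshman", "not at all")
senior = predicted_more_useful(HYPOTHETICAL_PU, "senior", "not at all")
status_gap = freshman - senior  # three status steps times the coefficient

print(f"freshman: {freshman:.3f}, senior: {senior:.3f}, gap: {status_gap:.3f}")
```

Holding PU and trial time fixed, the positive status coefficient places a freshman about 0.23 scale points above a senior on the “more useful than” item, which is the pattern the discussion attributes to seniors' greater familiarity with the prior system.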
Secondly, we note that familiarity with the former system affects acceptance, in this case negatively, implying that measuring acceptance of new technology by focusing solely on the new and ignoring the former can lead to inconclusive results. While prior studies focused on attitude towards new technology, this study expanded the definition of attitude by comparing acceptance of the new technology to its predecessor. Since most new technology installations occur as the result of switching from one technology to another, this is an important issue that suggests that factors outside of those proposed by TAM need to be identified and included when measuring acceptance.

Limitations

As with any study, there are limitations. First, this study compares a new system to one that was highly manual and very limited in use. Such a comparison may affect generalizability of these findings to other studies where the technology being replaced is more automated. Second, this study limits its focus to a technology where use is mandatory. Care needs to be taken in comparing this study’s findings with other studies where use was not mandatory, since we did not include voluntary use in this study. Finally, we define acceptance as the difference in attitude between the former and the current system. This definition differs from how acceptance is measured in other studies and may also affect generalizability.

REFERENCES

Agarwal, R. and J. Prasad (1997). The role of innovation characteristics and perceived voluntariness in the acceptance of information technologies. Decision Sciences, 28(3), 557-582.

Al-Gahtani, S.S. and M. King (1999). Attitudes, satisfaction, and usage: Factors contributing to each in the acceptance of information technology. Behaviour and Information Technology, 18(4), 277-297.

Benamati, J. and T.M. Rajkumar (2002). The application development outsourcing decision: An application of the Technology Acceptance Model. Journal of Computer Information Systems, 42(2), 35-43.

Chau, P.Y.K. (1996). An empirical assessment of a modified Technology Acceptance Model. Journal of Management Information Systems, 13(2), 185-204.

Chau, P.Y.K. and P.J. Hu (2002). Examining a model of information technology acceptance by individual professionals: An exploratory study. Journal of Management Information Systems, 18(4), 191-229.

Dasgupta, S., M. Granger and N. McGarry (2002). User acceptance of e-collaboration technology: An extension of the Technology Acceptance Model. Group Decision and Negotiation, 11(2), 87-100.

Davis, F.D. (1989). Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Quarterly, 13(3), 319-340.

Davis, F.D., R.P. Bagozzi and P.R. Warshaw (1989). User acceptance of computer technology: A comparison of two theoretical models. Management Science, 35, 982-1003.

Horton, R.P., T. Buck, P.E. Waterson and C.W. Clegg (2001). Explaining intranet use with the technology acceptance model. Journal of Information Technology, 16(4), 237-249.

Hu, P.J., P.Y.K. Chau, O.R. Liu Sheng and K.Y. Tam (1999). Examining the Technology Acceptance Model using physician acceptance of telemedicine technology. Journal of Management Information Systems, 16(2), 91-112.

Koufaris, M. (2002). Applying the technology acceptance model and flow theory to online consumer behavior. Information Systems Research, 13(2), 205-223.

Lee, J.-S., H. Cho, G. Gay, B. Davidson and A. Ingraffea (2003). Technology acceptance and social networking in distance learning. Educational Technology & Society, 6(2), 50-61.

Lee, Y., K.A. Kozar and K.R.T. Larsen (2003). The Technology Acceptance Model: Past, present, and future. Communications of the AIS, 12, Article 50, 1-24.

Lucas, H.C. and V. Spitler (1999). Technology use and performance: A field study of broker workstations. Decision Sciences, 30(2), 291-311.

Ma, Q. and L. Liu (2004). The technology acceptance model: A meta-analysis of empirical findings. Journal of Organizational and End User Computing, 16(1), 59-70.

Moore, G.C. and I. Benbasat (1991). Development of an instrument to measure the perceived characteristics of adopting an information technology innovation. Information Systems Research, 2, 192-222.

Szajna, B. (1994). Software evaluation and choice: Predictive evaluation of the Technology Acceptance Instrument. MIS Quarterly, 18(3), 319-324.

Szajna, B. (1996). Empirical evaluation of the revised technology acceptance model. Management Science, 42(1), 85-92.

Taylor, S. and P. Todd (1995). Assessing IT usage: The role of prior experience. MIS Quarterly, 19(4), 561-570.

Venkatesh, V., M.G. Morris, G.B. Davis and F.D. Davis (2003). User acceptance of information technology: Toward a unified view. MIS Quarterly, 27(3), 425-478.

Venkatesh, V. and F.D. Davis (2000). A theoretical extension of the technology acceptance model: Four longitudinal field studies. Management Science, 46(2), 186-204.
Table 1: Student Registration Survey Responses by Status and Gender

| Survey Responses by Status and Gender | Total Enrolment | Survey Respondents | Response Rate (%) |
|---|---|---|---|
| Freshmen – Women | 1129 | 88 | 7.79 |
| Freshmen – Men | 911 | 192 | 21.08 |
| Sophomore – Women | 1160 | 60 | 5.17 |
| Sophomore – Men | 968 | 100 | 8.62 |
| Junior – Women | 927 | 98 | 10.57 |
| Junior – Men | 700 | 224 | 32.00 |
| Senior – Women | 998 | 120 | 12.02 |
| Senior – Men | 832 | 269 | 32.33 |
| Graduate/Professional – Women | 1761 | 110 | 6.25 |
| Graduate/Professional – Men | 1819 | 260 | 14.29 |
| TOTAL | 11205 | 1521 | 13.57 |

Table 2: Factor Loadings by Variable* (Standardized Regression Coefficients)

| Variable | Factor1 | Factor2 |
|---|---|---|
| quality | 0.88739 | 0.01144 |
| control | 0.84414 | -0.02509 |
| accomplish | 0.73724 | 0.08794 |
| support | 0.66314 | 0.08224 |
| productive | 0.67827 | 0.03920 |
| effective | 0.87463 | 0.03695 |
| ease | 0.83781 | 0.08760 |
| overalluse | 0.83691 | 0.09473 |
| cumbersome | -0.39735 | -0.39693 |
| learn | -0.05974 | 0.75911 |
| frustrate | -0.34399 | -0.53988 |
| overallease | 0.33990 | 0.53955 |
| rigid | -0.39591 | -0.42529 |
| recall | -0.04491 | 0.70007 |
| effort | 0.05268 | -0.78607 |
| clear | 0.20497 | 0.68972 |
| skill | 0.08949 | -0.79267 |
| find | 0.21628 | 0.52136 |
| status | -0.14392 | 0.04409 |
| error | 0.08869 | 0.12050 |
| closure | 0.22330 | 0.22660 |
| alternative | 0.45096 | 0.14666 |
| overallease2 | 0.46052 | 0.50820 |

* The variables are presented in the order in which they appear on the survey.

FIGURE 1: The Technology Acceptance Model – adapted from Davis (1989)

[Diagram components: External Variables; Perceived Usefulness; Perceived Ease of Use; Attitude toward use; Behavioral intention; Actual Use]

APPENDIX 1

Modified TAM instrument

Use the prescribed scale of 1 - 5 with 1 being "strongly disagree" and 5 being "strongly agree" to indicate your experiences.

Items adapted from Davis (1989)

1. Using Checkmarq improved the quality of the registration process.
2. Using Checkmarq gave me greater control over the registration process.
3. Checkmarq enabled me to accomplish the registration task more quickly.
4. Checkmarq supports critical aspects of my registration process.
5. Using Checkmarq allowed me to accomplish more work than would otherwise be possible.
6. Using Checkmarq increased the effectiveness of the registration process.
7. Checkmarq made it easier for me to register for classes.
8. Overall, I find the Checkmarq system useful for class registration.
9. I find Checkmarq cumbersome to use.
10. Learning to use the Checkmarq system was easy for me.
11. Interacting with the Checkmarq system was often frustrating.
12. I found it easy to get Checkmarq to do what I want to do.
13. Checkmarq is rigid and inflexible to interact with.
14. It is easy for me to remember how to perform tasks using Checkmarq.
15. Interacting with Checkmarq required a lot of mental effort.
16. My interaction with Checkmarq was clear and understandable.
17. I find it takes a lot of effort to become skillful at using Checkmarq.
18. It was easy for me to find information on the Checkmarq site.
19. I felt I always knew what stage of the registration process I was in.
20. The Checkmarq system indicated to me when an error occurred.
21. The Checkmarq system indicated to me when the registration process was complete.
22. The Checkmarq system made it easy for me to select between alternative courses.
23. Overall, I found Checkmarq easy to use.
24. Overall, I found CheckMarq easier to use than the prior registration system.*
25. Overall, I found CheckMarq more useful than the prior registration system.*

* used as the dependent variable

Demographic and Comparative items

26. Prior to this use of Checkmarq, for how long did you explore the trial version?
Not at all; Less than 30 minutes; 30-45 minutes; More than 45 minutes

27. What motivated you to try the system before the actual registration process?
Prizes offered for trial; Friends; Instructors; Desire to learn about system; Other

28. What is your academic status?
Graduate student; Senior; Junior; Sophomore; Freshman

29. How many years of work experience do you have?
None at all; Less than 1 year; 1-2 years; 2-3 years; More than 3 years

30. How comfortable do you feel you are with computer technology?
Very uncomfortable; Uncomfortable; Neutral; Comfortable; Very Comfortable

31. What is your gender?
Female; Male

TABLE 3

Panel A ANOVA of: More Useful = PU + error

| Source | DF | Sum of Squares | Mean Square | F Value | Pr > F |
|---|---|---|---|---|---|
| Model | 1 | 1638.227881 | 1638.227881 | 3482.31 | <.0001 |
| Error | 1519 | 714.601836 | 0.470442 | | |
| Corrected Total | 1520 | 2352.829717 | | | |

| R-Square | Coeff Var | Root MSE | usefultvr Mean |
|---|---|---|---|
| 0.696280 | 18.95413 | 0.685888 | 3.618672 |

| Source | DF | Type III SS | Mean Square | F Value | Pr > F |
|---|---|---|---|---|---|
| PU | 1 | 1638.227881 | 1638.227881 | 3482.31 | <.0001 |

| Parameter | Estimate | Standard Error | t Value | Pr > \|t\| |
|---|---|---|---|---|
| Intercept | -0.6844713627 | 0.07501167 | -9.12 | <.0001 |
| factor1 | 0.1341040229 | 0.00227252 | 59.01 | <.0001 |

Panel B ANOVA of: More Useful = PU + PEOU + error

| R-Square | Coeff Var | Root MSE | usefultvr Mean |
|---|---|---|---|
| 0.696313 | 18.95935 | 0.686077 | 3.618672 |

| Source | DF | Type III SS | Mean Square | F Value | Pr > F |
|---|---|---|---|---|---|
| PU | 1 | 1284.316550 | 1284.316550 | 2728.52 | <.0001 |
| PEOU | 1 | 0.077256 | 0.077256 | 0.16 | 0.6854 |

| Parameter | Estimate | Standard Error | t Value | Pr > \|t\| |
|---|---|---|---|---|
| Intercept | -0.7656822272 | 0.21403876 | -3.58 | 0.0004 |
| PU | 0.1336285792 | 0.00255821 | 52.24 | <.0001 |
| PEOU | 0.0027282169 | 0.00673418 | 0.41 | 0.6854 |

Panel C ANOVA of: More Useful = PU + status + trial time + error

| Source | DF | Type III SS | Mean Square | F Value | Pr > F |
|---|---|---|---|---|---|
| PU | 1 | 1040.351025 | 1040.351025 | 2232.82 | <.0001 |
| Status | 1 | 6.685186 | 6.685186 | 14.35 | 0.0002 |
| Trial time | 1 | 0.861113 | 0.861113 | 1.85 | 0.1742 |

| Parameter | Estimate | Standard Error | t Value | Pr > \|t\| |
|---|---|---|---|---|
| Intercept | -0.7053212785 | 0.09515210 | -7.41 | <.0001 |
| PU | 0.1280587485 | 0.00271008 | 47.25 | <.0001 |
| Status | 0.0768627957 | 0.02029188 | 3.79 | 0.0002 |
| Trial time | -0.0302765371 | 0.02227095 | -1.36 | 0.1742 |

TABLE 4

Panel A ANOVA of: Easier Than = PEOU + error

| Source | DF | Sum of Squares | Mean Square | F Value | Pr > F |
|---|---|---|---|---|---|
| Model | 1 | 436.724830 | 436.724830 | 311.57 | <.0001 |
| Error | 1519 | 2129.192329 | 1.401707 | | |
| Corrected Total | 1520 | 2565.917160 | | | |

| R-Square | Coeff Var | Root MSE | easiertvr Mean |
|---|---|---|---|
| 0.170202 | 33.97035 | 1.183937 | 3.485207 |

| Source | DF | Type I SS | Mean Square | F Value | Pr > F |
|---|---|---|---|---|---|
| PEOU | 1 | 436.7248305 | 436.7248305 | 311.57 | <.0001 |

| Source | DF | Type III SS | Mean Square | F Value | Pr > F |
|---|---|---|---|---|---|
| PEOU | 1 | 436.7248305 | 436.7248305 | 311.57 | <.0001 |

| Parameter | Estimate | Standard Error | t Value | Pr > \|t\| |
|---|---|---|---|---|
| Intercept | -2.959553472 | 0.36637630 | -8.08 | <.0001 |
| PEOU | 0.182266615 | 0.01032599 | 17.65 | <.0001 |

Panel B ANOVA of: Easier Than = PEOU + PU + error

| Source | DF | Sum of Squares | Mean Square | F Value | Pr > F |
|---|---|---|---|---|---|
| Model | 2 | 1752.345678 | 876.172839 | 1634.80 | <.0001 |
| Error | 1518 | 813.571482 | 0.535950 | | |
| Corrected Total | 1520 | 2565.917160 | | | |

| R-Square | Coeff Var | Root MSE | easiertvr Mean |
|---|---|---|---|
| 0.682932 | 21.00552 | 0.732086 | 3.485207 |

| Source | DF | Type III SS | Mean Square | F Value | Pr > F |
|---|---|---|---|---|---|
| PEOU | 1 | 3.724994 | 3.724994 | 6.95 | 0.0085 |
| PU | 1 | 1315.620848 | 1315.620848 | 2454.75 | <.0001 |

| Parameter | Estimate | Standard Error | t Value | Pr > \|t\| |
|---|---|---|---|---|
| Intercept | -1.524467524 | 0.22839243 | -6.67 | <.0001 |
| PEOU | 0.018944128 | 0.00718578 | 2.64 | 0.0085 |
| PU | 0.135247325 | 0.00272977 | 49.55 | <.0001 |

Panel C ANOVA of: Easier Than = PEOU + PU + status + trial time + error

| Source | DF | Sum of Squares | Mean Square | F Value | Pr > F |
|---|---|---|---|---|---|
| Model | 4 | 1767.138400 | 441.784600 | 838.46 | <.0001 |
| Error | 1516 | 798.778760 | 0.526899 | | |
| Corrected Total | 1520 | 2565.917160 | | | |

| R-Square | Coeff Var | Root MSE | easiertvr Mean |
|---|---|---|---|
| 0.688697 | 20.82740 | 0.725878 | 3.485207 |

| Source | DF | Type III SS | Mean Square | F Value | Pr > F |
|---|---|---|---|---|---|
| PEOU | 1 | 0.8679961 | 0.8679961 | 1.65 | 0.1995 |
| PU | 1 | 962.7269743 | 962.7269743 | 1827.16 | <.0001 |
| Status | 1 | 11.4868596 | 11.4868596 | 21.80 | <.0001 |
| Trial time | 1 | 2.6099400 | 2.6099400 | 4.95 | 0.0262 |

| Parameter | Estimate | Standard Error | t Value | Pr > \|t\| |
|---|---|---|---|---|
| Intercept | -1.247009152 | 0.23387126 | -5.33 | <.0001 |
| PEOU | 0.009562581 | 0.00745041 | 1.28 | 0.1995 |
| PU | 0.128414966 | 0.00300419 | 42.75 | <.0001 |
| Status | 0.105275322 | 0.02254704 | 4.67 | <.0001 |
| Trial time | -0.052788942 | 0.02371873 | -2.23 | 0.0262 |
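
The fit statistics reported in Table 4, Panel A follow from its sums of squares by standard regression ANOVA arithmetic. As a minimal sketch, the Python below recomputes R-Square, the error mean square, F, Root MSE, and Coeff Var from the Panel A values. The function name `anova_summary` and its argument names are illustrative assumptions and are not part of the original statistical output.

```python
import math


def anova_summary(ss_model, ss_error, df_model, df_error, dv_mean):
    """Recompute regression ANOVA fit statistics from sums of squares.

    Hypothetical helper for illustration; inputs are read off a printed ANOVA table.
    """
    ss_total = ss_model + ss_error          # corrected total sum of squares
    ms_model = ss_model / df_model          # model mean square
    ms_error = ss_error / df_error          # error mean square
    root_mse = math.sqrt(ms_error)
    return {
        "r_square": ss_model / ss_total,
        "ms_error": ms_error,
        "f_value": ms_model / ms_error,
        "root_mse": root_mse,
        "coeff_var": 100.0 * root_mse / dv_mean,  # Root MSE as a percentage of the DV mean
    }


# Values copied from Table 4, Panel A (Easier Than = PEOU + error).
panel_a = anova_summary(
    ss_model=436.724830,
    ss_error=2129.192329,
    df_model=1,
    df_error=1519,
    dv_mean=3.485207,
)
for name, value in panel_a.items():
    print(f"{name}: {value:.6f}")

# With a single predictor, the squared t statistic (estimate / standard error)
# equals the model F value up to rounding.
t_peou = 0.182266615 / 0.01032599
print(f"t_peou: {t_peou:.2f}, t squared: {t_peou ** 2:.2f}")
```

Run as written, the recomputed values should agree with the reported R-Square (0.170202), F Value (311.57), Root MSE (1.183937), and Coeff Var (33.97035) to rounding. The same check can be applied to any panel that reports a full Model/Error breakdown.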