LDLA User Manual


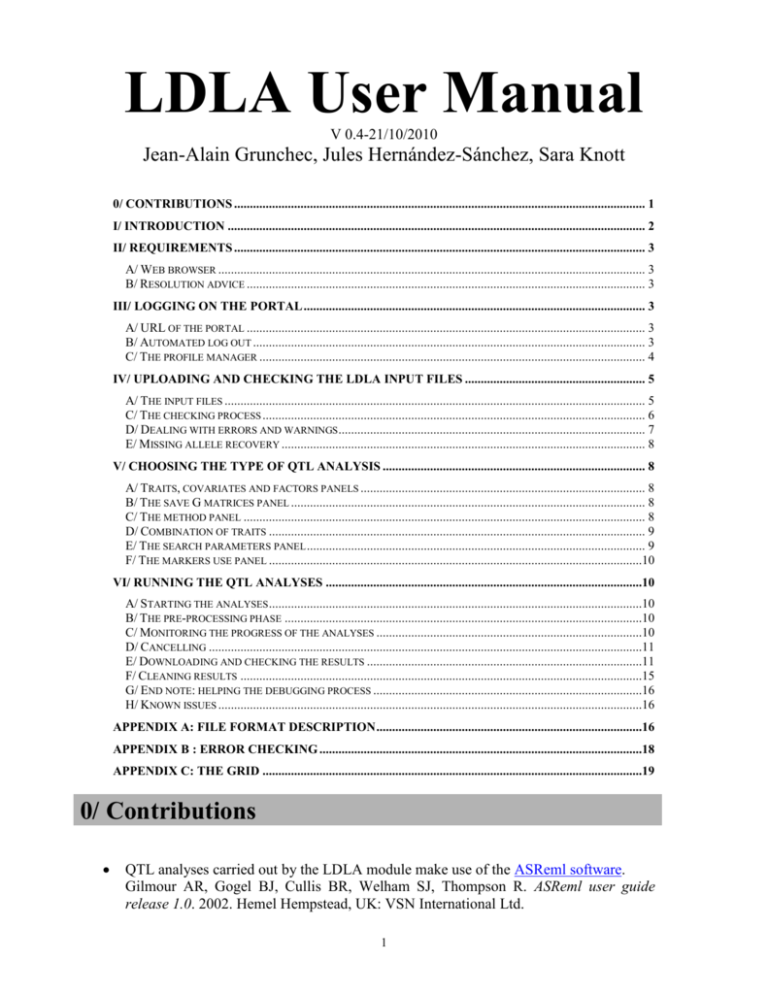

V 0.4 - 21/10/2010
Jean-Alain Grunchec, Jules Hernández-Sánchez, Sara Knott

0/ CONTRIBUTIONS
I/ INTRODUCTION
II/ REQUIREMENTS
  A/ WEB BROWSER
  B/ RESOLUTION ADVICE
III/ LOGGING ON THE PORTAL
  A/ URL OF THE PORTAL
  B/ AUTOMATED LOG OUT
  C/ THE PROFILE MANAGER
IV/ UPLOADING AND CHECKING THE LDLA INPUT FILES
  A/ THE INPUT FILES
  C/ THE CHECKING PROCESS
  D/ DEALING WITH ERRORS AND WARNINGS
  E/ MISSING ALLELE RECOVERY
V/ CHOOSING THE TYPE OF QTL ANALYSIS
  A/ TRAITS, COVARIATES AND FACTORS PANELS
  B/ THE SAVE G MATRICES PANEL
  C/ THE METHOD PANEL
  D/ COMBINATION OF TRAITS
  E/ THE SEARCH PARAMETERS PANEL
  F/ THE MARKERS USE PANEL
VI/ RUNNING THE QTL ANALYSES
  A/ STARTING THE ANALYSES
  B/ THE PRE-PROCESSING PHASE
  C/ MONITORING THE PROGRESS OF THE ANALYSES
  D/ CANCELLING
  E/ DOWNLOADING AND CHECKING THE RESULTS
  F/ CLEANING RESULTS
  G/ END NOTE: HELPING THE DEBUGGING PROCESS
  H/ KNOWN ISSUES
APPENDIX A: FILE FORMAT DESCRIPTION
APPENDIX B: ERROR CHECKING
APPENDIX C: THE GRID

0/ Contributions
QTL analyses carried out by the LDLA module make use of the ASReml software: Gilmour AR, Gogel BJ, Cullis BR, Welham SJ, Thompson R. ASReml user guide release 1.0. 2002. Hemel Hempstead, UK: VSN International Ltd.

The epistasis module uses the SWARM meta-scheduler, which has been noted to accelerate the execution of an analysis with 200 jobs by a factor of up to 147: Grunchec JA, Hernández-Sánchez J, Knott SA. SWARM: A meta-scheduler to minimize job queuing duration in a Grid portal. Proceedings of the International Conference of Cluster and Grid Computing Systems (ICCGS 2009). July 2009. Oslo, Norway; 600-607.

QTL analyses carried out by the GridQTL module make use of the resources provided by the Edinburgh Compute and Data Facility (ECDF) (http://www.ecdf.ed.ac.uk/). The ECDF is partially supported by the eDIKT initiative (http://www.edikt.org.uk).

Please note that registered accounts are personal, i.e. if some of your colleagues or supervised students want to use the GridQTL service, they need to register individually.
For very large LDLA and epistasis analyses, free use of the NGS has been noted to save end users weeks of waiting time compared with running the same analyses on a desktop PC. When publishing work based on use of the NGS, users should acknowledge both the relevant GridQTL publication or manual and the NGS directly, using the following line: "The authors would like to acknowledge the use of the UK National Grid Service in carrying out this work". This citation should, if possible, be used in conjunction with one of the NGS logos. If it is impossible or difficult to include such a full citation in the manuscript owing to the format of the journal paper or conference proceedings, a reference to the UK National Grid Service, http://www.ngs.ac.uk/, could be included instead.

I/ Introduction
This document describes how to use the Linkage Disequilibrium and Linkage Analysis (LDLA) module, which has been developed as part of the GridQTL project.

II/ Requirements

A/ Web browser
In order to run the LDLA module, you need to contact one of the authors to have an account created for you on the GridQTL portal. You also need a JavaScript-enabled web browser installed on your computer. For instance, the latest versions of the following web browsers have been tested successfully with the LDLA module: Mozilla Firefox, Netscape, Opera and Windows Internet Explorer.

B/ Resolution advice
The best graphic output is obtained with Firefox, and the screenshots in this manual were taken while the LDLA module was used with Firefox. The display may differ slightly with other web browsers. All screen resolution settings can be used, but the graphic output looks better at 1024x768 or higher.
III/ Logging on the portal

A/ URL of the portal
The URL of the GridQTL portal for the LDLA module is: http://cleopatra.cap.ed.ac.uk/gridsphere/gridsphere
Type your user name and password, and then click the Login button.

B/ Automated log out
Beware that you may be logged out after a few minutes of inactivity during a QTL analysis. This typically happens when a QTL analysis is started and the user goes away while the computation is running. This is not a problem, since the user can come back later and log in again to proceed with the rest of the analysis. Users can log in and out, and open and close the web browser, at any time. When they log in again, their settings will be unchanged since the last time they logged out. Data are stored and computations are performed even when the user is not logged in.
Figure 1: Logging on the portal.

C/ The profile manager
Once you have logged in, you can change some of your settings in the profile manager portlet, such as your name or password. Beware that, if you encounter a problem with the LDLA module, it may be convenient at some point to communicate this password to an administrator for debugging purposes. Therefore, do not choose a password that you use elsewhere.
Figure 2: The profile manager portlet.

IV/ Uploading and checking the LDLA input files
In Figure 2, there is an “IBD Module v03” tab in the menu. Click on it to load the LDLA module. IBD stands for Identity By Descent, a key concept in genetics.

A/ The input files
Five files are expected as input in the LDLA module. They contain information about the pedigree, traits, marker genotypes, marker positions, and demographic history of the population. Their format is described in Appendix A: File format description, at the end of this document. These files should be located on your file system.
You can upload them by selecting their path in the relevant text box (you can click on the Browse buttons and use the file browser to select these paths). The Output text box can be used to provide a name for the compressed file bundle that will be generated at the end of the analysis. Any name will do, but it is better to use a clear identifier for future reference. For instance, we can call it simN100T100M10, denoting analysis of a simulated data set where historical IBD is predicted assuming Ne equal to 100 (50 males plus 50 females), 100 generations of history, and the nearest 10 markers to each tested location. Once the analysis is completed, a bundle of compressed output files will be generated and its name will contain the string simN100T100M10.
Figure 3: Uploading and checking the input files.

C/ The checking process
Once the parameters have been entered, click on the Upload and check button. The files are then uploaded from your computer to the server in Edinburgh and “checked” by the web server: if there are errors in the input files, you will be warned. The types of errors checked for are:
1. Individuals with genotypes and/or phenotypes not in the pedigree
2. Sex exchanges
3. Mendelian errors
4. General formatting errors
It may take from a few seconds to a few minutes for the web page to refresh. Do not click several times on the Upload and check button, since this restarts the checking process from scratch and degrades the web server’s performance. Once the files have been validated, you should see a screen similar to Figure 4.
Figure 4: The LDLA portlet after the files have been uploaded.

D/ Dealing with errors and warnings
If there are errors in the input files, messages are displayed in the text area of the Errors panel (see Appendix B: Error checking). There are different types of errors: some are warnings, but others are critical and require data corrections.
In case of critical errors, the data need to be corrected and uploaded again. To do this, click on the New Dataset button, correct the errors and upload the files again.

E/ Missing allele recovery
Missing alleles are common. A strategy to recover as much information as possible before further analysis is also implemented at this stage. This method makes use of all available marker information (family trios, entire full-sib families, entire half-sib families, and distant genotyped ancestors) to recover missing alleles in an individual. The process is repeated until no more changes can be made, since recovered alleles can help recover other missing alleles in the next round.

V/ Choosing the type of QTL analysis

A/ Traits, covariates and factors panels
There are several panels below the Errors panel. The Traits, Covariates and Factors panels contain check boxes named after the records in the uploaded traits file. Multiple traits can be uploaded simultaneously but, at this stage, only one can be analyzed at a time. Consequently, only one box should be ticked in the Traits panel. Traits are analyzed under the assumption of normally distributed errors. Covariates are continuous variables that may or may not be associated with the traits, e.g. age determining height. Factors are discrete variables defining groups of records with something in common, e.g. gender. Covariates are read as numerical values and factors as alphanumerical values.

B/ The save G matrices panel
The Save G matrices panel allows the user to keep the G matrices (IBD matrices between individuals) computed during the QTL analysis in the output bundle. G matrices can be very large files, and their transfer and compression can take a lot of time. Therefore, by default G matrices are not saved. You can tick the Yes/No check box to save these matrices, which can be useful for purposes other than QTL mapping.
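To illustrate why the missing-allele recovery of Section IV-E is iterative, here is a minimal Python sketch. It applies only the simplest trio rule (a child whose two parents are both homozygous must carry one allele from each) and ignores the full-sib, half-sib and ancestral information the portal also uses, so it is not the portal's algorithm. The function name and the data layout (a pedigree dictionary and one genotype per individual at a single locus, None when missing) are hypothetical.

```python
from typing import Dict, Optional, Tuple

Genotype = Tuple[int, int]  # (paternal allele, maternal allele) at one locus


def recover_missing(pedigree: Dict[str, Tuple[str, str]],
                    genotypes: Dict[str, Optional[Genotype]]) -> Dict[str, Optional[Genotype]]:
    """Hypothetical helper: fill missing genotypes forced by two homozygous parents.

    The scan is repeated until a full pass changes nothing, because a genotype
    recovered in one pass can make a descendant recoverable in the next pass.
    """
    result = dict(genotypes)  # leave the caller's data untouched
    changed = True
    while changed:
        changed = False
        for ind, (father, mother) in pedigree.items():
            if result.get(ind) is not None:
                continue
            fg, mg = result.get(father), result.get(mother)  # unknown parent "0" gives None
            if fg is not None and mg is not None and fg[0] == fg[1] and mg[0] == mg[1]:
                # A homozygous parent can only transmit its single allele.
                result[ind] = (fg[0], mg[0])
                changed = True
    return result
```

In a three-generation family where the child is listed before its parent, the child can only be recovered in the second pass, once the parent's genotype has been filled in; this is the "next round" effect described above.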
C/ The method panel
The Method panel offers three methods for estimating historical IBD probabilities. The M&G method is based on Meuwissen and Goddard (2000, 2001), the H&HS method on Hill and Hernandez-Sanchez (2007), and the R method on Hernandez-Sanchez et al. (2006). M&G and H&HS require haplotype data, whereas R requires genotype data. M&G and H&HS are more accurate than R given correct population parameters. We have yet to implement a haplotyping algorithm based on the minimum-recombinant concept of Qian and Beckmann (2002). Until then, and unless you have haplotypes, only R is available.

D/ Combination of traits
By default, only one QTL is searched. Several options can be selected when one QTL is searched: Polygenic, Additive and Dominant. The polygenic variance is calculated using the average relationship matrix across all individuals in the pedigree, and it contains all additive genetic effects on the trait apart from the additive effect at the position being tested. The additive and dominant variances are calculated with IBD matrices based on marker data and historical information, and they are made up of the additive or dominant effects, respectively, at the position being tested. For now it is not possible to analyze several QTL; however, it should later become possible to analyze two QTL (Figure 5). At most 6 check boxes can be ticked in addition to Polygenic.
Figure 5: Other panels
The limit of 6 ticked boxes comes from the maximum number of ‘user-defined’ covariance matrices that ASReml (version 2001) can take. Two QTL can have direct additive and/or dominant effects, plus interactions between them (epistasis). Epistasis is hard to detect in general populations; however, as gene-gene interactions are the norm rather than the exception, we opted to offer this option to users.

E/ The search parameters panel
The Search Parameters panel allows choosing the positions where a QTL analysis will be performed. Three methods can be used.
Every – cM: a strictly positive real number should be entered. A QTL analysis is then performed at regular intervals of that length (see Figure 4).
At – cM (File): a file needs to be selected on the user’s file system. This file should be a list of positive floating-point numbers separated by blank spaces, tabs or newline characters. A QTL will be searched at each position indicated in this file (see Figure 5).
At – cM (Hand): a list of positions, separated by blank spaces, needs to be typed in (Figure 6).
Figure 6: Choice of positions by hand

F/ The markers use panel
You can select all markers for the computation of historical IBD (Figure 4). This option is computationally intensive if many markers are available, so it may be better to run a preliminary search with fewer markers first. The other option, Closest, allows using fewer markers (Figure 5): the number of markers closest to the position being analysed must be entered as an integer. The Estimate option has yet to be implemented; it will assist users in choosing the optimal number of markers for each analysis.
Note: at this stage, the method used should be R, only one QTL should be analysed, and the Estimate option in the Markers use panel should not be used. Work is in progress to make these options functional.

VI/ Running the QTL analyses

A/ Starting the analyses
When all the previous parameters have been selected, you can start the QTL analyses by clicking on the Start button of the Analysis panel. There is a small tick box near the Start button; ticking it displays the progress of the jobs on the UK National Grid Service (see Appendix C: The Grid).

B/ The pre-processing phase
This Display Grid Activity tick box can be ticked or unticked at any time during the computation. A progress bar is also displayed during the computation. When the Display Grid Activity box is ticked, the Analysis panel is refreshed roughly every 5 seconds (Figure 7).
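The position-selection modes of the Search Parameters panel (Section V-E) and the Closest option of the Markers use panel (Section V-F) amount to simple list operations. The Python sketch below is illustrative only and is not the portal's code: all function names are hypothetical, the regular grid is assumed to run from a start to an end position chosen by the caller, and marker positions are assumed to be cumulative sums of the inter-marker distances given on the third line of the map file (Appendix A), with the first marker at 0 cM.

```python
import math
from typing import Dict, List


def every_cm(start: float, end: float, step: float) -> List[float]:
    """'Every - cM' (hypothetical helper): positions from start to end at a fixed step."""
    if step <= 0:
        raise ValueError("step must be a strictly positive number")
    count = math.floor((end - start) / step + 1e-9)  # small tolerance for float rounding
    return [round(start + i * step, 6) for i in range(count + 1)]


def positions_from_text(text: str) -> List[float]:
    """'At - cM (File)' or '(Hand)': numbers separated by spaces, tabs or newlines."""
    values = [float(token) for token in text.split()]
    if any(v < 0 for v in values):
        raise ValueError("positions must not be negative")
    return values


def marker_positions(map_third_line: str) -> Dict[str, float]:
    """Turn 'Marker2 1.4 Marker1' into cumulative cM positions (assumed layout)."""
    tokens = map_third_line.split()
    positions = {tokens[0]: 0.0}
    current = 0.0
    for i in range(1, len(tokens) - 1, 2):
        current += float(tokens[i])  # distance from the previous marker
        positions[tokens[i + 1]] = current
    return positions


def closest_markers(positions: Dict[str, float], locus: float, n: int) -> List[str]:
    """'Closest' option: names of the n markers nearest to the tested position."""
    return sorted(positions, key=lambda name: abs(positions[name] - locus))[:n]
```

With the map example of Appendix A, marker_positions("Marker2 1.4 Marker1") places Marker2 at 0 cM and Marker1 at 1.4 cM. Sorting by absolute distance keeps the selection independent of the order in which markers appear in the map line.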
Several messages are displayed during the analysis. The pre-processing phase can take up to a few minutes.

C/ Monitoring the progress of the analyses
Afterwards, the analyses are distributed on the Grid. There is one analysis for each position selected as described in Section V-E. The dark blue part of the progress bar indicates the percentage of the analyses that have completed, the purple part the percentage that are being calculated on the Grid, and the light blue part the percentage that have yet to start on the Grid. The progress bar is only indicative: it generally reports the real progress with a delay of a few seconds.
Figure 7: Monitoring the progress of the analyses

D/ Cancelling
It is possible to cancel an analysis by clicking on the Cancel button. In this event, if some computations have ended, it may be possible to retrieve some of the results (incomplete graphs will be generated).

E/ Downloading and checking the results
When the analyses have all completed, a dialog box will pop up (Figure 8). Click OK.
Figure 8: The results can be downloaded.
Some graphs may be generated, depending on the parameters you chose previously (Figure 9).
Figure 9: A few plots
The results can now be downloaded. Click on the Download Results button. You should see a dialog box that allows you to save the results (Figure 10).
Figure 10: Download dialog boxes, Firefox style on the left, Internet Explorer on the right.
The name of the file has the format number@username.userdefinedname.zip, where:
number is an identifier that helps guarantee the uniqueness of the file. It can also be used as a reference to the analysis for software maintenance purposes.
username is the user name of the user who started the analyses.
userdefinedname is a string of characters that is expected to be meaningful to the user and helps users manage their results on their file system.
Save the file on your file system. You can open it with unzip in Linux or with other decompression software under Windows (7-Zip, WinRAR, WinZip). The compressed output bundle contains an .asr and an .sln file for each position where a QTL analysis was performed (Figure 11). They are indexed in the same order as the positions specified in Section V-E. The .asr files are ASReml result files containing the most relevant information, e.g. variance components and maximum log-likelihood. The .sln files contain the BLUP and BLUE solutions (see the ASReml manual for more explanations). Plots, in both .ps and .png format, are also included, as are other files such as a number.info.txt file. This file is designed to contain general statistics on the data, e.g. number of alleles per locus, allele frequencies, number of half-sib and full-sib families, and number of cohorts (a rough estimate of the number of discrete generations when generations actually overlap). The ASReml instruction files (.as) are also included; they can be helpful if the user later needs to check that the right model and data were used.
Figure 11: Content of the compressed output files bundle.

F/ Cleaning results
You may want to use the same data set and run a new analysis with almost the same parameters. In this event, you do not need to go through the checking process again (see section IV-C), and the results of the pre-processing step do not need to be computed again (see section VI-B). This can save time when you deal with large datasets. In this case, you just need to change the parameters as explained in section V, and you can submit new analyses as explained in section VI-A.
In case you need to upload a new dataset, click on the NEW DATASET button (see Figure 4).
When you click on this button, it will take a while for the browser to refresh, possibly a few minutes. This is normal. Until you click on this button, many files related to your previous experiment are kept on the web server in Edinburgh and on the supercomputers across the UK; they are only removed at this stage. Once the temporary files have been removed, you should be able to upload your files as described in section IV.

G/ End note: helping the debugging process
In case you encounter a problem, or think you have found a bug, you are advised to take a screenshot of your browser, write down what you did previously, and copy-paste any error messages into an email to be sent to one of the authors. You are also advised not to proceed with your computations in this event. If problems appear after the computation has ended, do not click on the New Dataset button, since doing so deletes the temporary files on the server and the Grid, which can be invaluable for debugging purposes.

H/ Known issues
Currently there are no known issues.

Appendix A: File format description
We expect data files to be standard text files, e.g. those that can be opened with winword in Windows or gedit in Linux. The names of these files should not contain spaces and should not be too long (up to 50 characters or so). Data are pedigree, markers, traits and explanatory variables, and map distances.

The first line in the pedigree file contains the number of individuals in the pedigree. The remaining lines contain three identifiers: individual, father and mother (in this order). Identifiers are considered alphanumeric, so you can use letters, numbers or a combination of both. Unknown parents must be identified with zeros. For example:

    4
    a 0 0
    b 0 0
    c 1 2
    d 1 2

The pedigree will be checked for errors (see Appendix B), and individuals will be added as required, e.g. if the first two individuals in this pedigree, ‘a’ and ‘b’, had been missing, dummy variables would have been created to identify them.
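The pedigree rules above can be illustrated with a small parser. This Python sketch is not the portal's checker: the function name and message texts are hypothetical, and it covers only the stated-count check and the sex-exchange warning listed in Appendix B, plus the addition of parents that have no record of their own (given unknown parents "0").

```python
from typing import Dict, List, Tuple

Pedigree = Dict[str, Tuple[str, str]]


def read_pedigree(text: str) -> Tuple[Pedigree, List[str]]:
    """Hypothetical helper: parse pedigree text into {individual: (father, mother)}."""
    rows = [line.split() for line in text.splitlines() if line.strip()]
    if not rows:
        raise ValueError("empty pedigree file")
    stated, records = int(rows[0][0]), rows[1:]
    messages: List[str] = []
    if stated != len(records):
        messages.append(f"first line says {stated} individuals, file has {len(records)}")
    pedigree: Pedigree = {}
    for rec in records:
        if len(rec) != 3:  # individual, father, mother
            messages.append(f"expected 3 identifiers, got: {' '.join(rec)}")
            continue
        ind, father, mother = rec
        pedigree[ind] = (father, mother)
    fathers = {f for f, _ in pedigree.values() if f != "0"}
    mothers = {m for _, m in pedigree.values() if m != "0"}
    for ind in sorted(fathers & mothers):
        messages.append(f"sex exchange: {ind} is both a father and a mother")
    for parent in sorted(fathers | mothers):
        if parent not in pedigree:
            pedigree[parent] = ("0", "0")  # add a record with unknown parents
    return pedigree, messages
```

For instance, read_pedigree("2\nc a b\nd a b\n") returns a pedigree in which 'a' and 'b' have been added with unknown parents, and no messages.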
There must be at least one space between identifiers, and the file must finish with a blank line.

Marker information can come in two forms: genotypes or haplotypes. Both use the same format, but haplotypes are phased genotypes, so the first allele at each locus is always paternal and the second maternal. Again, any name (up to 50 characters or so) can be used for the markers file; however, a label, either .gen. or .hap., must be included in it to distinguish between genotypes and haplotypes, e.g. markers.gen.txt or markers.hap.txt. The following is an example of a file containing marker information:

    Chromosome1
    Marker1 Marker2
    a 1 2 3 4
    b 1 2 3 4
    c 1 1 3 3
    d 2 2 4 4

The first line contains the name of the chromosome, an alphanumeric string of up to 20 characters. The second line contains the names of all markers in the data, each of up to 20 characters, separated by at least one space. The remaining lines contain an individual identifier followed by its marker information. Each individual identifier must appear in the pedigree, or an error will be issued. The marker data following each identifier are read as alleles within loci: both alleles of the first locus, then both alleles of the second locus, and so on. Each record must be written on a single line. For example, individual ‘a’ has genotype 1 2 at the first locus and 3 4 at the second. The file must end with an empty line.

Markers can be listed in any order, not necessarily their ‘real’ order on the chromosome. However, their chromosome order must be reflected in the map file, for example:

    1
    Chromosome1 2 4
    Marker2 1.4 Marker1

The first line denotes the number of chromosomes. At present we can only handle one chromosome at a time; in the future we will expand the capabilities of our software to handle any number of chromosomes. The second line contains the name of the chromosome, which must be the same as in the marker file, followed by the number of loci (2) and the number of individuals genotyped (4).
The third line contains the name of each marker, in chromosome order, separated by the distance between consecutive markers in centiMorgans (cM). The file must end with an empty line. In this example, Marker1 and Marker2 are separated by 1.4 cM, and Marker2 appears before Marker1 on the chromosome (despite being written in the opposite order in the marker file). A map file is necessary because genotypes are usually given unordered, so an additional step is required to establish their order.

The traits file must contain one or more traits, and may contain factors and/or covariates. Traits can be discrete or continuous in measurement, but all will be analysed assuming normally distributed errors. Factors are alphanumeric categories grouping several individuals, for example gender. Covariates are continuous variables that may influence the trait; for example, larger individuals may also be heavier than smaller ones. A missing value label has to be specified. An example of a traits file is the following:

    4 1 0 0 1 -99.9
    Id Fat Gender
    1 11.2 1
    2 10.4 2
    3 13.0 1
    4 9.9 2

The first line of the above file specifies the number of individuals (4), the number of traits (1), the number of random effects (0), the number of covariates (0), the number of factors (1) and the missing value label (-99.9). The second line contains labels for each column. Lines 3 onwards contain data. The file must end with an empty line.

The history file contains demographic information about the population. An example is the following:

    50
    50
    100
    3
    50

The first and second lines give the effective population size (Ne) of males and females, respectively. These are assumed fixed over time. The third line contains the number of discrete generations since population foundation.
The fourth line contains the random mating system. The choices are: 0 for a haploid population, 1 for diploid monoecious with selfing, 2 for diploid monoecious without selfing, 3 for diploid dioecious, and 4 for diploid dioecious with hierarchical mating. For example, with the values as they stand (50 males and 50 females), each male would be mated to one female chosen at random without replacement; if Ne had been 5 and 50 for males and females, respectively, then 10 random females, chosen without replacement, would have been mated to each male. This last option reflects common situations in animal breeding.

The fifth line contains the minimum percentage (%) of loci that must be fully genotyped for an individual to qualify as ‘base’. Base individuals are important because they correspond to the first generation in a pedigree for which historical IBD is estimated. LDLA was primarily designed to estimate historical relationships among pedigree founders based on markers and demography (in linkage analysis, pedigree founders are assumed unrelated and non-inbred), and to bring those relationships down through the whole pedigree following the segregation of marker alleles. However, in many data sets pedigree founders are not genotyped, and estimating historical IBD among them has not given us good results, presumably because all founders then had the same degree of relatedness. Thus, we must find the first generation of individuals that are at least partially genotyped (the base) and have no ancestors as informative as themselves. The level of marker information required to qualify as base is set by the last parameter in the history file. For example, 100 would mean that only fully genotyped individuals could qualify as base, whereas 0 would mean that pedigree founders are base irrespective of marker data. Finally, the file must end with an empty line.

Appendix B: Error checking
Errors in data are the norm. A crash is most likely to be caused by formatting errors.
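Some formatting errors can be caught before uploading. As a hypothetical pre-upload check (not part of the portal), the Python sketch below parses a history file and compares the header of a traits file with its contents, following the rules of Appendix A. It assumes that each traits record holds one Id column plus one column per trait, random effect, covariate and factor; this layout matches the Id Fat Gender example but is an inference, not a documented rule.

```python
from dataclasses import dataclass
from typing import List

# Mating-system codes accepted on the fourth line of the history file.
MATING_SYSTEMS = {
    0: "haploid",
    1: "diploid monoecious with selfing",
    2: "diploid monoecious without selfing",
    3: "diploid dioecious",
    4: "diploid dioecious with hierarchical mating",
}


@dataclass
class History:
    ne_males: int
    ne_females: int
    generations: int
    mating_system: int
    base_percent: float


def read_history(text: str) -> History:
    """Hypothetical helper: parse the five one-value lines and check their ranges."""
    values = [line.strip() for line in text.splitlines() if line.strip()]
    if len(values) != 5:
        raise ValueError(f"expected 5 lines, found {len(values)}")
    hist = History(int(values[0]), int(values[1]), int(values[2]),
                   int(values[3]), float(values[4]))
    if hist.mating_system not in MATING_SYSTEMS:
        raise ValueError(f"unknown mating system {hist.mating_system}")
    if not 0 <= hist.base_percent <= 100:
        raise ValueError("base percentage must be between 0 and 100")
    return hist


def females_per_male(hist: History) -> float:
    """Under hierarchical mating (code 4), the number of females mated to each male."""
    return hist.ne_females / hist.ne_males


def check_traits(text: str) -> List[str]:
    """Hypothetical helper: compare the counts on the first line with the data."""
    rows = [line.split() for line in text.splitlines() if line.strip()]
    n_ind, n_traits, n_random, n_cov, n_fac = (int(v) for v in rows[0][:5])
    n_cols = 1 + n_traits + n_random + n_cov + n_fac  # assumed column layout
    problems = []
    if len(rows[1]) != n_cols:
        problems.append(f"{len(rows[1])} labels, expected {n_cols}")
    if len(rows) - 2 != n_ind:
        problems.append(f"{len(rows) - 2} records, expected {n_ind}")
    problems += [f"record {r[0]}: {len(r)} columns" for r in rows[2:] if len(r) != n_cols]
    return problems
```

With the hierarchical-mating example of Appendix A (5 males, 50 females), females_per_male returns 10.0.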
We endeavor to produce error messages that are as meaningful as possible, but the many ways in which formatting errors can occur can only be accommodated over time and with experience. If the program crashes and we are not able to give you an informative clue to solve the problem, you can always contact us directly. Nevertheless, some errors are captured with meaningful messages. These errors include:
1. Stated and actual file sizes differ, e.g. the pedigree has 10 individuals but the first line says 9.
2. Sex exchanges, e.g. a father later appears as a mother or vice versa. This may be legitimate in some species of fish, so it should be considered a warning unless your species cannot change sex.
3. Individuals genotyped and/or phenotyped but not in the pedigree. All individuals must appear in the pedigree whether they have records or not. This should be treated as a severe error; not taking action can have unforeseen consequences.
4. Mendelian errors. These are common errors or warnings, and the offending records should be removed from the data set. A list of all the errors detected is issued so that a Perl program can delete them from the data set. Each error is written on a single line and consists of, at least, the genotypes of a family trio. Sometimes genotypes of more family members are included in the error, as an error can only be identified in reference to relatives. Our Perl program takes a liberal approach and deletes all the individuals listed on the same error line. A more subtle treatment of errors is possible using probabilities, e.g. heterozygotes are more likely to be mistyped as homozygotes than the other way round, but we have not implemented it here.

Example
Here we can see that individual 101 has marker genotype 2 2, that its father is individual 27 (genotype 1 1) and that its mother is individual 62 (genotype 2 1). This is not possible, since the father can only transmit allele 1, and hence it is reported as an error.
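The reasoning in this example can be written as a trio test on unphased genotypes at one locus. This Python sketch is a simplification with hypothetical function names: it looks at single trios only, whereas the portal's error lines may involve larger families.

```python
from typing import Dict, List, Tuple

Genotype = Tuple[int, int]  # two alleles at one locus, order ignored


def trio_consistent(child: Genotype, father: Genotype, mother: Genotype) -> bool:
    """True if the child can have received one allele from each parent."""
    a, b = child
    return (a in father and b in mother) or (b in father and a in mother)


def mendelian_errors(pedigree: Dict[str, Tuple[str, str]],
                     genotypes: Dict[str, Genotype]) -> List[Tuple[str, str, str]]:
    """Hypothetical helper: list (child, father, mother) trios that are incompatible."""
    errors = []
    for child, (father, mother) in pedigree.items():
        if child in genotypes and father in genotypes and mother in genotypes:
            if not trio_consistent(genotypes[child], genotypes[father], genotypes[mother]):
                errors.append((child, father, mother))
    return errors


# Individual 101 (2 2) with father 27 (1 1) and mother 62 (2 1), as in the example.
print(mendelian_errors({"101": ("27", "62")}, {"101": (2, 2), "27": (1, 1), "62": (2, 1)}))
```

This prints [('101', '27', '62')]. Changing the genotype of individual 101 to (1, 2) makes the trio consistent and the list empty.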
For individual 295, the marker genotype is 1 2, which is incompatible with the genotypes of its father (individual 101) and mother (individual 196). Whenever possible, these errors should be corrected (here, by giving individual 101 genotype 1 2 instead of 2 2). Occasionally, Mendelian errors may be reported for data that appear to be correct (possibly due to a bug).

Appendix C: The Grid
Basically, a Grid is a set of supercomputers, and we take advantage of the large computational power provided by the UK National Grid Service (NGS) (http://www.grid-support.ac.uk). Several high-performance clusters are part of the Grid used by the GridQTL project, and most of them have more than 256 CPUs. The ECDF is a 512-CPU cluster located in Edinburgh (http://www.is.ed.ac.uk/ecdf/faq.shtml). A local Condor pool is also part of the Grid, but is not expected to be used in production.

In section V-E, the user specifies a set of positions where QTL analyses will be performed. A job is created for each of these positions. For instance, if your data cover 15 cM and an analysis is to be performed every 1 cM, 15 computations will be undertaken in different places on the Grid. This speeds up the computations significantly, since many QTL analyses run at the same time. For example, from the user’s perspective the 15 analyses may complete in less than 10 minutes, even though each of them may take 8 minutes; the NGS will then charge the GridQTL account 15 x 8 = 120 minutes. As an example of a large computation, the analyses of some of our mouse data at 30 positions take up to 100 hours on the Grid (but only 4 hours from the user’s perspective). For now, this level of usage is expected to be exceptional rather than frequent.
Beware of this when you run large computations: it may be better to run a preliminary set of analyses with fewer positions, and then run a new analysis with a denser set of positions in particular regions of interest. The total duration of the computation on the Grid is displayed at the end of a set of analyses, before the results can be downloaded. This duration is recorded per user in a database in Edinburgh, for statistical purposes.
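The accounting arithmetic in the Appendix C example (15 jobs of about 8 minutes each) can be written as a short calculation. This is an illustrative sketch with a hypothetical function name; it assumes that all jobs start immediately and run in parallel, so real queueing on the NGS would make the user's waiting time longer than the figure it returns.

```python
from typing import List, Tuple


def grid_usage(job_minutes: List[float]) -> Tuple[float, float]:
    """Return (minutes charged to the account, best-case waiting time for the user).

    Assumes every job starts at once and all run in parallel: the account is
    charged the sum of the job durations, while the user waits about as long
    as the slowest job.
    """
    if not job_minutes:
        raise ValueError("at least one job is needed")
    return sum(job_minutes), max(job_minutes)


# One job per position: 15 cM analysed every 1 cM gives 15 jobs of about 8 minutes.
charged, waited = grid_usage([8.0] * 15)
print(f"charged {charged:.0f} min, user waits about {waited:.0f} min")
```

This prints "charged 120 min, user waits about 8 min", matching the 15 x 8 = 120 minutes in the example. Doubling the position density doubles the charged time even when the user's waiting time barely changes, which is why a coarse preliminary scan is advisable.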