Chapter 2

advertisement

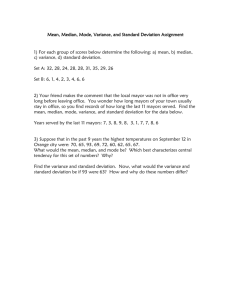

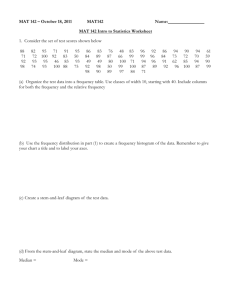

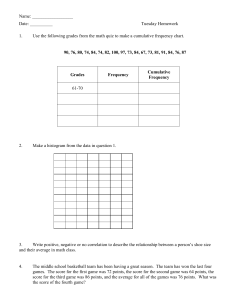

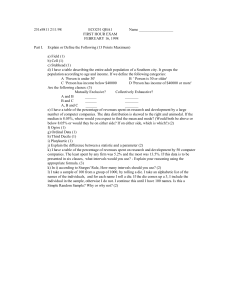

Chapter 2: Describing, Exploring & Comparing Data

| Section | Title |
|---|---|
| 1 | Overview |
| 2 | Frequency Distributions |
| 3 | Visualizing Data |
| 4 | Measures of Center |
| 5 | Measures of Variation |
| 6 | Measures of Relative Standing |
| 7 | Exploratory Data Analysis (EDA) |

§2.1 Overview

There are 2 types of statistics: descriptive and inferential. This chapter discusses descriptive statistics. A descriptive statistic describes the characteristics of data. The following is a list of the 5 important characteristics:

1) The center of the data, which is a representative value that indicates where the middle of the data lies. The most common descriptive statistic for measuring center is the average.
2) The variation, or scatter, of the data.
3) The distribution of the data, which tells the shape of the data. We are already familiar, and will become even more so, with a distribution called the Normal: a bell-shaped curve, the type of distribution that class grades follow.
4) Outliers, which are data points that lie outside the “normal” variation of the data.
5) The change in the characteristics of the data over time.

Just like memorizing the order of operations (Please Excuse My Dear Aunt Sally, PEMDAS), the order of the planets in our solar system (Matilda Visits Every Monday Just Stays Until Noon Period) or the names of the Great Lakes (HOMES), there is a mnemonic device to help you remember these important characteristics of data: Computer Viruses Destroy Or Terminate (CVDOT): Center, Variation, Distribution, Outliers, Time.

It is my belief as a statistics instructor that you cannot understand a statistic until you have learned how to calculate it by hand. So even though we will be using technology to calculate many statistics, I will still require you to be able to find basic descriptive statistics by hand for small data sets.
§2.2 Frequency Distributions

To help us study the shape of data (its distribution), we will learn how to compile a frequency distribution (table), a relative frequency distribution and a cumulative frequency distribution. A frequency table gives classes (categories, although not like the categories for ordinal data) and the number of experimental units (data points) within each class. From these we will learn how to draw pictures that show us distributions. Let’s go through an example to learn the process. Here is a summary of the process to start:

Creating a Frequency Distribution (Table)
1. Decide upon the # of classes (the categories). There should be between 5 and 20 classes, but if many have 1 or 0 data points then you have chosen too many. I will normally tell you how many classes to use.
2. Find the class width (the range of data points in each class). This is (Maximum − Minimum) / # of classes. You may need to round, usually up.
3. Select a lower limit for the 1st class. This point does not have to be the lowest data point, but it should make sense in terms of the data (no negatives if negatives don’t exist, etc.). To this lower limit add the class width. This is the lower limit of the next class, not the upper limit of the first class! Continue until you have all of your classes.
4. Fill in the upper limits of all the classes, including the upper limit of the last class, which you have not yet found.
5. Count the number of data points in each class.

Example: The ages of people arrested for purse snatching are: 16, 41, 25, 21, 30, 17, 29, 50, 30 & 39. Using 4 classes, create a frequency distribution (table) for the data.

Classes:
Class Width:
Lower Limits:
Place Upper Limits:
Make counts:

Some places where students go wrong, and that you should be cautious of, are:
1) Are your classes mutually exclusive?
2) Did you include all classes, even if one has a frequency of zero?
3) Does the sum of the frequencies equal the number of data points? This is really important!

Next, we need to discuss the relative frequency distribution, which is simply our frequency distribution listed in terms of percent of the whole. To create a relative frequency distribution, first create a frequency distribution and then divide each class frequency by the total number of data points (the sum of all frequencies).

$$\text{Rel. Freq.} = \frac{\text{Class Freq.}}{\text{Sum of Freq.}}$$

Example: For the above data on purse snatchers, create the relative frequency distribution.

Finally, we need to know how to create a cumulative frequency distribution. This is just a running total that includes all classes below, but not any above. In a cumulative frequency distribution the cumulative frequency of the last class must be the total number of data points. We write the classes a little differently for a cumulative frequency distribution: we use “less than” together with the lower limit of the next class.

Example: For the purse snatchers, create a cumulative frequency distribution.

Now for the real work: what does this all mean? This is just a preview of how the data is behaving. We are learning where the data stacks up, what percentage lies in certain areas and at what point we have seen most of the data. A frequency distribution is really just the first step in seeing the shape of the data, and we will use it to draw an actual pictorial representation in the next section. Even at this early stage we can begin comparing frequency distributions to other similar data, or to data over time, and looking for patterns. Looking for patterns, and finding out how mathematically significant those patterns are, is what statistics is all about!

§2.3 Visualizing Data

This section concerns pictures that show the shape of the data. We will talk about histograms, which are graphs created from frequency and relative frequency distributions. We will also discuss line graphs of frequency distributions (frequency polygons) and of cumulative frequency distributions (ogives). We then move to another visual called a stem-and-leaf plot and its relative, the dot plot. Finally we will discuss the scatter diagram, but we will leave the other visuals, such as the Pareto chart, pie chart and time-series graph, to the book.

Let’s begin with the histogram. A histogram is constructed from a frequency or relative frequency distribution. The horizontal axis is the scale of the data, labeled either with the midpoint of each class (called class marks or midpoints) or with the class boundaries (the point midway between the upper limit of one class and the lower limit of the next). The vertical scale is the frequency. Each class is shown with a vertical bar. There are no gaps between the bars on a histogram! Everything must be clearly marked!

Creating a Histogram
1. Create a frequency or relative frequency table.
2. Find the class boundaries (or the class marks).
3. Create an axis system with the class boundaries on the horizontal axis and frequencies on the vertical axis (label clearly).
4. Draw a bar the width of the class and the height of the frequency to represent each class in the table.

Example: Create a histogram for the purse-snatcher data.

What does this do for us? It shows the shape of the data! We can see that the highest frequency occurs at the in-between ages and that the number of people snatching purses goes down as age increases. We see that it is a skewed data set; it is not normally distributed (that bell-shaped curve that we see in grades). If you create a relative frequency histogram you will not see any difference in shape. You are seeing exactly the same trends, just represented as percentages instead of raw counts.

A frequency polygon is built from the points that pair each class mark (the midpoint of the class) with the frequency of that class. From these points we create a line graph. This line graph can be shown superimposed on our histogram.
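The frequency, relative frequency and cumulative frequency tables from §2.2, together with the class marks used for histograms and frequency polygons, can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the data are whole numbers, the first lower limit is taken to be the smallest data value (the notes allow other sensible choices), and the width is always rounded up to the next whole number so that the last class is sure to contain the maximum. The function name `frequency_table` is made up for this sketch.

```python
import math

ages = [16, 41, 25, 21, 30, 17, 29, 50, 30, 39]  # purse-snatcher data

def frequency_table(data, num_classes):
    """Return (lower limit, upper limit, frequency) rows for whole-number data."""
    # Class width: (maximum - minimum) / number of classes, rounded up.
    # Assumption: a whole-number quotient is also bumped up by one.
    width = math.floor((max(data) - min(data)) / num_classes) + 1
    lower = min(data)  # assumption: start the first class at the minimum
    rows = []
    for _ in range(num_classes):
        upper = lower + width - 1  # one unit below the next lower limit
        count = sum(lower <= x <= upper for x in data)
        rows.append((lower, upper, count))
        lower += width  # lower limit of the next class
    return rows

table = frequency_table(ages, 4)
total = sum(count for _, _, count in table)

running = 0
for lower, upper, count in table:
    running += count              # cumulative frequency (running total)
    mark = (lower + upper) / 2    # class mark (midpoint)
    print(f"{lower}-{upper}: freq={count}, rel={count / total:.0%}, "
          f"less than {upper + 1}: {running}, class mark={mark}")
```

With 4 classes this reproduces the classes 16-24, 25-33, 34-42 and 43-51, with frequencies 3, 4, 2 and 1, and the last cumulative frequency equals the number of data points.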
The line graph is nice for showing trends, because our eye follows the slope of the line. By following the slope we see the increases and decreases from class to class.

Example: Draw the frequency polygon over the histogram above.

An ogive is a line plot of a cumulative frequency distribution. It is constructed from the class boundaries and the cumulative frequencies. Its major use is to see the number of values below a particular point in the data. It can also help us see an overall trend in the data: where we are getting the most increase and where things level off. With some data this is important (see the example in the book) and with other data it is not as useful.

A stem-and-leaf plot gives us a nice visualization of the shape of the data but, unlike the histogram, allows us to keep the original data. This is what makes it superior to a histogram: there is no loss of the original data. This type of plot works with a stem, which is based upon the tens, hundreds, etc., and a leaf, which completes the number. You can think of this process like writing a number in expanded form! For large data sets it is sometimes necessary to give each stem two rows to spread the data out a little more; this is called an expanded stem-and-leaf (it adds some level of error to the process, so misrepresentation of the data is possible). For smaller data sets we can also bring the data together a little more, creating a condensed stem-and-leaf (the same problem with misrepresentation can occur).

Creating a Stem-and-Leaf Plot
1. Look over the data and decide upon the stem and the leaf (if all values are below 100, use a stem of 10’s).
2. Write a table with stems on the left and leaves on the right (label the stem and leaf unit values).
3. Fill in the leaves to represent all the data points.

Example: Create a stem-and-leaf plot for the purse-snatcher data: 16, 41, 25, 21, 30, 17, 29, 50, 30 & 39

If you look at this plot on its side, you will see nearly the same shape as the histogram, but with the added benefit of still being able to see the original data. Another advantage of the stem-and-leaf plot is that it sorts the data, which prepares us for finding other important descriptive statistics that require ordered or ranked data (such as medians, quartiles and percentiles).

Another type of visual representation is the dot plot. The dot plot also keeps all the original data and sorts it, but it is worse at showing shape than the histogram and the stem-and-leaf plot. The dot plot is good at showing outliers, however. To construct a dot plot, draw a number line covering the range of the data values and place a dot for each data point just above its value on the number line. For our purse-snatching data, a dot plot is not too informative! This is what it looks like.

[Dot plot of the purse-snatcher ages on a number line running from 15 to 55]

The final type of visual that I want to discuss in class is the scatter diagram, or scatter plot. It is very easy to construct and shows us trends that exist in the data. We will use this type of plot for our excursion into regression in Chapter 9. This type of visual can only be used for a data set of ordered pairs (two characteristics that are somehow related, collected from the same people or at the same time and place, etc.). You’ll be happy to know that I’m going to need a new data set to show this! All we have to do is construct ordered pairs from the paired data and plot those points on a coordinate system, using appropriately labeled axes for the independent and dependent variables.

Example: The following data give the score on a math reasoning test and the starting salary of the person who earned that score. Create a scatter diagram based upon it.
X = score, Y = salary: 78 89 85 93 92 99 100 100 85 84

Scatter plots can be used to notice a relationship between two sets of data, but we do not want to make the mistake of assuming causality. Receiving a high score on the above test does not guarantee a high salary. There is a relationship (called a correlation), but this does not mean that a high salary is caused by a great score. It could be that a person had a lot of prior experience in the job and received a high salary for that reason. This will be discussed at length in Chapter 9.

This chapter of the book also includes discussions of the Pareto chart, pie charts and time-series graphs. The Pareto chart is a bar graph for qualitative data in which the bars are ordered in descending height to help tell the story of which class (category) is most important. A pie chart is the same type that you are familiar with from everyday life; it uses the relative frequency distribution to show which classes (categories) are the largest or most influential. Time-series graphs are useful for seeing trends over time.

This section and the preceding one show how to visualize the characteristics of data (CVDOT), apart from time progression. We can easily see the center of the data, the variation and the distribution with a histogram, and with a dot plot or stem-and-leaf plot we can see outliers very easily. We cannot see time trends with these techniques, however!

§2.4 Measures of Center

Recall that in §2.1 we talked about the 5 characteristics of data (CVDOT: Center, Variation, Distribution, Outliers, Time). The 3 most important are center, variation and distribution. In §2.2 and §2.3 we dealt with descriptive methods for showing the distribution (shape), and now we need to discuss the center (a representative value). There are 4 major measures of center, each based upon different criteria and some more appropriate than others.
These 4 major measures are:
Mean
Median
Mode
Midrange

The first in our discussion is the mean (also called the arithmetic mean). This measure is only appropriate for ratio or interval data; it only has meaning for numbers that are related on a continuous ordered scale. It is an average. Let $x$ be a data value from the sample and $n$ be the number of values in the sample. In mathematics and science $\Sigma$ (capital sigma, a Greek letter) is used to represent a sum. A capital $N$ denotes the number of values in a population. We can compute the mean for a population or for a sample. It is important to note that English letters are used for sample statistics and Greek letters are used for population parameters.

$\bar{x}$ (read “x bar”) is the sample mean: $\bar{x} = \frac{\sum x}{n}$

$\mu$ (pronounced “mu”) is the population mean: $\mu = \frac{\sum x}{N}$

It is important to note that population means are rarely known, and the sample mean is usually used as an approximation.

Example: A sample of six school buses in the Carlton District travel the following distances each day: 14.2, 16.1, 7.9, 10.6, 11.2, 12.0. Find the sample mean.

Note: $\mu$ would be the sum of the distances traveled by all Carlton District buses, divided by the total number of buses in the district. This example only uses a sample of 6 buses.

The next measure of center is the median. The median is the middle value of the ranked data. The median can be found for interval, ratio and ordinal data, but not for nominal data. The median is denoted $\tilde{x}$, pronounced “x-tilde”. Here is the procedure for finding the median. It is quite easy if there is an odd number of data points, but when there is an even number there is a slightly special procedure.

Finding the Median
1. Rank the data in ascending order (a stem-and-leaf plot is nice for this).
2. a) If there is an odd number of data points, there is one value that has an equal number of data points above and below it. For example, if there are 15 points then the 8th is the median, since there are 7 above it and 7 below it. It is the middle of the data.
   b) If there is an even number of data points, the middle falls between two points, so those two points must be averaged. If there are 20 points then the middle is between the 10th and 11th.

Example: Find the median of the following ranked data: 7.9, 10.6, 11.2, 12, 14.2, 16.1

The median can be used with the same types of data as the mean (interval and ratio), so why would we need the median instead of the mean? The answer is outliers. Outliers can strongly affect the mean, but they have little or no effect on the median. So once the distribution of the data has been observed, the decision as to which measure of center to use can be made!

Note: The median is a better measure of center for highly skewed data or data which contains outliers.

The next measure of center we will discuss is the mode. The mode is the score that appears most frequently. Ranking the data also helps to find the mode (hence the stem-and-leaf plot has another use). The mode can be found for all four classifications of data, and it is the only measure of center appropriate for nominal data! Besides having a single mode, data can be of three types when considering the mode:
No Mode: no data point is repeated.
Bimodal: 2 data points appear with the greatest frequency.
Multimodal: many data points appear with the greatest frequency.

Example: Find the mode(s) if one exists.
Confinement in days: 17, 19, 19, 4, 19, 21, 3, 21, 19
Hourly Incomes: 4, 9, 7, 16, 10
Test Scores: 81, 39, 100, 81, 69, 76, 42, 76

In conclusion, which measure of center is best depends upon 2 things: first the classification of the data and second the presence of outliers. The mean is the measure of center that is usually used, but it is not the most appropriate when outliers are present, because of the strong influence that outliers can have on it.
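The mean, median and mode calculations above can be checked with a short Python sketch. It uses the standard library `statistics` module for the mean and median; the helper `modes` is a made-up name for this sketch and follows the convention in these notes that data with no repeated value has no mode.

```python
from collections import Counter
from statistics import mean, median

distances = [14.2, 16.1, 7.9, 10.6, 11.2, 12.0]  # Carlton District buses

def modes(data):
    """Return the most frequent value(s), or [] when nothing repeats."""
    counts = Counter(data)
    highest = max(counts.values())
    if highest == 1:
        return []  # "No Mode" in the vocabulary of these notes
    return sorted(value for value, c in counts.items() if c == highest)

confinement = [17, 19, 19, 4, 19, 21, 3, 21, 19]
incomes = [4, 9, 7, 16, 10]
scores = [81, 39, 100, 81, 69, 76, 42, 76]

print("sample mean:", round(mean(distances), 1))
print("median:", round(median(distances), 1))  # median() sorts the data itself
print("modes:", modes(confinement), modes(incomes), modes(scores))
```

Here the confinement data has a single mode (19), the hourly incomes have no mode, and the test scores are bimodal (76 and 81).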
The midrange of the data is not often that important, except when looking at the symmetry of data and comparing it with other measures of center. It is nothing more than the sum of the highest and lowest data points divided by two. Please see your text for more information (Ed. 9 p. 62-67, Essentials Ed. 2 p. 60).

I am going to include an example of finding the mean of a distribution from a frequency table, but we may not have time to discuss the example in class.

Example: The following frequency table refers to a sample of purse snatchers. The data points represent the ages of the sampled purse snatchers at the time of their arrest.

| Class (ages) | Frequency |
|---|---|
| 16 – 24 | 3 |
| 25 – 33 | 4 |
| 34 – 42 | 2 |
| 43 – 51 | 1 |

In order to calculate the approximate mean using a frequency table we will need to fill in the following table.

| Class | $f$ | Mid-Point ($x$) | $fx$ |
|---|---|---|---|
| 16-24 | 3 | (24 + 16)/2 = 20 | 20 × 3 = 60 |
| 25-33 | 4 | | |
| 34-42 | 2 | | |
| 43-51 | 1 | | |
| | $n = \sum f =$ | | $\sum fx =$ |

$$\bar{x} = \frac{\sum fx}{n}$$

We will not discuss the weighted mean, but you are expected to read the section containing this information on p. 63-66 of Triola (9th ed.) or p. 62 of Essentials ed. 2.

Skewness is something that we need to talk about now that we have discussed the mean, the median and the mode, because it describes the distribution of the data with respect to these three measures. Skewness is a measure of symmetry. If a distribution extends more to one side of its central grouping than to the other, it is called skewed.

Left Skewed (negative): Mean & Median to the left of the Mode. Outlying data is dragging the mean, and to a lesser extent the median, to the left of the mode.
Symmetric: Mean = Median = Mode. Data is in a central grouping and outlying data is evenly spread.
Right Skewed (positive): Mean & Median to the right of the Mode. Outlying data is dragging the mean, and to a lesser extent the median, to the right of the mode.

§2.5 Measures of Variation

This section is about measures of variation, our second characteristic of data.
We will be discussing the range and the standard deviation (and variance), and how we can use these measures to tell us about our data.

The range is very easy to define. It is how much the data varies from high to low. We find the range by computing the difference between the highest and lowest data points (high − low). The problem with the range is that it is affected by outliers. Outliers can make the data appear to vary much more than most of it actually does.

Example: Find the range of the test scores: 81, 39, 100, 81, 69, 76, 42, 76

Note: If we look at the distribution of the data we see that most of the data is near 70 or above, with 2 scores that are very different. These 2 scores affect the range of the data drastically. If we compute the standard deviation of these scores it will be less affected by the 2 very low scores, because most of the scores are near the top end of the scale. This is what makes the standard deviation better than the range at showing variation.

Probably the most important measure of variation for interval and ratio data is the standard deviation. It measures the variation about the mean. The standard deviation is the square root of the variance, but it is used more often than the variance because of the difficulty in interpreting the units associated with the variance (they are squared, and the units of the mean are not). Let’s not be too quick to disregard the variance, however, as it has a characteristic that is extremely important in more advanced statistics: the sample variance is an unbiased estimator (it tends to be a good estimator of the actual population variance).

The standard deviation of a population is called sigma and is represented by the Greek lower case letter sigma ($\sigma$). The standard deviation of a sample is represented by the lower case letter $s$. The population variance is $\sigma^2$ and the sample variance is $s^2$. The following is the formula for sample variance.
Remember that the sample standard deviation is the square root of the variance.

$$s^2 = \frac{n\sum x^2 - \left(\sum x\right)^2}{n(n-1)} = \frac{\sum (x - \bar{x})^2}{n-1}$$

*Note: There are 2 ways to calculate the variance. The 1st formula is much easier than the 2nd when a scientific calculator is used. The 2nd formula is easier when a scientific calculator is unavailable and the calculation MUST be done entirely by hand. It should be noted that with a small data set the 2nd is also a nice formula to use, but the more data there is, the more cumbersome it becomes.

Example: The following are sampled finish times in a bike race (in minutes): 28, 22, 26, 33, 21, 23, 37, 24

a) Find the mean of the data. $\bar{x} =$

b) Complete the following table to calculate the variance using the 2nd formula given above.

| $x$ | $x^2$ | $x - \bar{x}$ | $(x - \bar{x})^2$ |
|---|---|---|---|
| 28 | | | |
| 22 | | | |
| 26 | | | |
| 33 | | | |
| 21 | | | |
| 23 | | | |
| 37 | | | |
| 24 | | | |

*Note: The calculation of any sample statistic should contain 1 more decimal place than the original data. Always maintain as many decimals as possible during the calculation until the final answer is derived. If you can’t possibly maintain all decimals, try to keep at least 4, preferably 6.

c) Now use your calculator and the 1st formula to calculate the variance. Start by entering all of the data into the data register: on a TI-83/84 use STAT → EDIT and enter the data in L1; on a simple scientific TI, key in each data value followed by Σ+ until all data is entered. Then, on a TI-83/84, use STAT → CALC → 1-Var Stats followed by the 2nd function of the 1 key (L1); on a simple TI, use the 2nd function of the left parenthesis key.

d) Find the standard deviation of the data by taking the square root of the value found in b or c. Remember that those values should be the same!

e) Interpretation of the standard deviation involves the mean. The standard deviation is used together with the mean to give a range of values in which to find the data. Nearly 70% of all symmetric data will fall within one standard deviation of the mean (that is, between 1s below and 1s above the mean; it tells us how the data spreads out from the mean).
f) If this data is considered to be symmetric, calculate the range of values where you would expect to find about 70% of all bike times.

It should be noted that the formula for the population variance is slightly different from that of the sample variance. The following are the formulas for the population variance.

$$\sigma^2 = \frac{\sum (x - \mu)^2}{N} = \frac{N\sum x^2 - \left(\sum x\right)^2}{N^2}$$

Example: Six families live on Merimac Circle. The number of children in each family is: 1, 2, 3, 5, 3, 4. Since we are using all the families on Merimac Circle, this is considered a population.

a) On your own, calculate $\mu$.

b) On your own, complete the table below and calculate $\sigma^2$ based upon the table using the 2nd formula above.

| $x$ | $x^2$ | $x - \mu$ | $(x - \mu)^2$ |
|---|---|---|---|
| 1 | | | |
| 2 | | | |
| 3 | | | |
| 5 | | | |
| 3 | | | |
| 4 | | | |

c) On your own, calculate $\sigma^2$ using the 1st formula given above.

d) Calculate the standard deviation of the population ($\sigma$).

*Note: In d) you should get 1.3 when rounded appropriately. On a calculator the population standard deviation is given as σxn or simply σx, whereas the sample standard deviation is given as sx or σxn−1.

The standard deviation can also be calculated using a frequency table. Although we have not gone over frequency tables yet, I will walk you through the calculation of the standard deviation based upon a frequency table. We will need the following vocabulary, which will also be used with frequency tables.

Class: The subdivisions of the data. All classes have equal widths. No class should overlap another.
Class Width: The width of a class, found by subtracting the lower class limits of two successive classes.
Lower Class Limit: The lowest value for which a data point is counted in a class. The class limits should have the same # of decimal places as the data.
Upper Class Limit: The highest value for which a data point is counted in a class.
Class Boundaries: The points equidistant between successive classes.
These are found by adding the upper limit of one class to the lower limit of the next and dividing by 2. Equivalently, take half the difference between that lower limit and upper limit, then add this amount to each upper limit, or subtract it from each lower limit, to obtain the boundaries.
Class Midpoints (also referred to as Marks): The point in the middle of each class. This is found by adding the lower and upper limits of the class and dividing by 2, or by subtracting the lower limit from the upper limit, dividing by 2 and then adding that amount to the lower limit.
Frequency: The number of data points in each class.

Example: The following frequency table refers to a sample of purse snatchers. The data points represent the ages of the sampled purse snatchers at the time of their arrest.

| Class (ages) | Frequency |
|---|---|
| 16 – 24 | 3 |
| 25 – 33 | 4 |
| 34 – 42 | 2 |
| 43 – 51 | 1 |

In order to calculate the approximate standard deviation using a frequency table we will need to fill in the following table.

| Class | $f$ | Mid-Point ($x$) | $x^2$ | $fx$ | $fx^2$ |
|---|---|---|---|---|---|
| 16-24 | 3 | (24 + 16)/2 = 20 | 20² = 400 | 20 × 3 = 60 | 400 × 3 = 1200 |
| 25-33 | 4 | | | | |
| 34-42 | 2 | | | | |
| 43-51 | 1 | | | | |
| | $n = \sum f =$ | | | $\sum fx =$ | $\sum fx^2 =$ |

After you have finished the table, use the values to calculate the variance of the sample using the following formula:

$$s^2 = \frac{n\sum fx^2 - \left(\sum fx\right)^2}{n(n-1)}$$

*Note: This will not give the exact value of the variance, but as the data becomes more symmetric it will give a better and better approximation. The actual variance of this data set is about 119.3 years². Of course, I don’t know what a squared year means, so it might be nice to put it in terms of a standard deviation!

Even if we do not know all of the data in a data set, if we can assume that the data is approximately symmetric we can find an approximate value to use in place of the standard deviation using something called the Range Rule of Thumb.
This comes from the fact that for symmetric data nearly all of the data (95%) will lie within two standard deviations of the mean. Therefore the maximum data point should be approximately the mean plus twice the standard deviation, and the minimum data point should be approximately the mean minus twice the standard deviation ($\bar{x} \pm 2s$).

Range Rule of Thumb:

$$s \approx \frac{\text{range}}{4}$$

Example: For the bike racing data given earlier in this section, find the value that you would expect for the standard deviation using the range rule of thumb. Compare this value to the actual standard deviation. Given that the actual and the approximate values should be pretty close if the data is symmetric, do you think the data is symmetric (even knowing nothing about what symmetric really means!)?

Now let’s also test out that idea of the maximum and minimum data points, again remembering that the data should be symmetric for this approximation to be used.

Example: Bookstore sales receipts give a mean of $61.35 and a standard deviation of $28.658. What would you expect the minimum and maximum values in sales to be for the bookstore?

We have been “quoting” an important approximation in this section called the Empirical Rule. This rule applies to symmetric, bell-shaped data and tells us the following:

68% of the data will fall within 1 standard deviation of the mean
95% of the data will fall within 2 standard deviations of the mean
99.7% of the data will fall within 3 standard deviations of the mean

Furthermore, we consider it usual for a data point to be within 2 standard deviations of the mean and unusual for it to be beyond 2 standard deviations. Once we get beyond 3 standard deviations we consider data points to be possible outliers.

Example: For a sample of blueberry cakes the mean weight was 500 g and the standard deviation was 12 g. In what weight range would you usually expect to find blueberry cakes from this population?
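The ideas in this part of the section can be tried out numerically. The sketch below, written in Python as an illustration only, computes the sample variance of the bike race times with both formulas from this section, compares the actual standard deviation with the range rule of thumb, and applies the "within 2 standard deviations is usual" guideline to the blueberry cakes. The helper name `usual_range` is made up for this sketch.

```python
import math

times = [28, 22, 26, 33, 21, 23, 37, 24]  # bike race finish times (minutes)
n = len(times)
x_bar = sum(times) / n

# 1st formula: (n * sum of x^2 - (sum of x)^2) / (n * (n - 1))
var_first = (n * sum(x * x for x in times) - sum(times) ** 2) / (n * (n - 1))

# 2nd formula: sum of (x - x_bar)^2 / (n - 1)
var_second = sum((x - x_bar) ** 2 for x in times) / (n - 1)

s = math.sqrt(var_second)                   # sample standard deviation
s_estimate = (max(times) - min(times)) / 4  # range rule of thumb

def usual_range(mean, sd):
    """Values within 2 standard deviations of the mean are considered usual."""
    return mean - 2 * sd, mean + 2 * sd

low, high = usual_range(500, 12)  # blueberry cakes: mean 500 g, s = 12 g

print(f"mean = {x_bar}, variance = {var_first:.4f}, s = {s:.2f}")
print(f"range rule of thumb estimate of s = {s_estimate}")
print(f"usual cake weights: {low} g to {high} g")
```

Both variance formulas give the same value, as they must. For the bike data the rule-of-thumb estimate (4 minutes) is noticeably smaller than the calculated standard deviation (about 5.65 minutes), which is a hint that the data may not be very symmetric.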
We can also use the Empirical Rule in another way: to give approximate percentages of the population that lie within certain ranges of the data. We can do this by using something called the z-score. The z-score tells us how many standard deviations a data point is from the mean.

z-score:

$$z = \frac{x - \bar{x}}{s}$$

Example: Using the Empirical Rule and the z-score, indicate what percentage of the data you would expect to find between the values of 488 and 512 g.

Now, you might ask yourself, what if I know my data is not symmetric? In this case, we have another rule called Chebyshev’s Theorem. It is a pretty simple calculation and only works for more than 1 standard deviation from the mean ($k > 1$). It tells us at least what percentage of the data to expect within a specified number of standard deviations ($k$) of the mean.

$$\text{\% of the Data} \geq 1 - \frac{1}{k^2}$$

Example: If we assume that the data for the blueberry cakes is not symmetric, what percent of the data should fall within 2 standard deviations of the mean?

*Note: This value will not change, no matter what the data. It is also a smaller percentage than that given by the Empirical Rule, since the data is not assumed to be symmetric.

§2.6 Measures of Relative Standing

This section considers measures of position, which can help us to compare data. The most useful comparison score is a standard score, or z-score. We have already discussed this measure of position in the last section. It measures the number of standard deviations from the mean for a bell-shaped curve and can be used to compare different measurements in an equivalent way. The larger the z-score in absolute value, the more unusual the data value. We expect usual values to be within 2 standard deviations of the mean (z-scores between −2 and 2) and unusual values to be more than 2 standard deviations from the mean (an actual calculated value for the standard deviation is always preferred to an estimate from the range rule of thumb).
$$z = \frac{x - \bar{x}}{s} \quad \text{or} \quad z = \frac{x - \mu}{\sigma}$$

Example: For a population of patients with $\mu$ = 60 years and $\sigma$ = 12 years, would we consider 68 to be an unusual value? Why or why not?

The next important measures of position are percentiles and quartiles. A percentile is a measure of the percentage of scores below a certain value. Quartiles are special percentiles. The 1st quartile (Q1) is the same as the 25th percentile (P25). The 2nd quartile (Q2) is the 50th percentile, also called the median ($\tilde{x}$). The 3rd quartile (Q3) is the 75th percentile (P75); it should be noted that the 3rd quartile is the same as P25 counted from the upper end of the data.

To find the percentile represented by a data point, the data must first be ordered and then:

$$\text{Percentile} = \frac{\text{\# of points below}}{\text{Total}} \times 100$$

Example: In the purse snatching data, what is the percentile of 29?

| Stem (×10) | Leaf (×1) |
|---|---|
| 1 | 67 |
| 2 | 159 |
| 3 | 009 |
| 4 | 1 |
| 5 | 0 |

Now let’s consider what needs to be done to find the data point that represents a given percentile. Again, the data must be ordered and then:

Indicator Function:

$$L_k = \frac{k}{100} \cdot n \qquad k = \text{percentile}, \; n = \text{\# of data points}$$

If L is a whole number, average the data point in that position and the next data point. If L is a decimal, round up to the next whole number and use the data point in that position as the percentile.

Example: The following data represent the decibels created by an ordinary household item.

Stem (×1): 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77
Leaf (×0.1): 0 45 7899 24478 26 9 4458 023568 0478 0167 068 0689 7 28 019 29 4 1

Find P10.
Find Q1.
Find Q2.
Find Q3.

Another measure of position that we should discuss is the Interquartile Range, referred to as the IQR. The IQR is nothing more than Q3 − Q1. The interquartile range is used to show where the bulk of the data resides: the middle fifty percent of the data lies between Q1 and Q3. As a result, the IQR can be used to pinpoint outliers as well. Anything more than 3 × IQR below Q1 or above Q3 should be considered an outlier.
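The percentile procedures of this section can be sketched in Python using the purse-snatcher ages. This is an illustration only: the function names are made up, `k` is assumed to be a whole number strictly between 0 and 100, and the outlier limits use the 3 × IQR rule stated in these notes.

```python
ages = sorted([16, 41, 25, 21, 30, 17, 29, 50, 30, 39])  # data must be ordered

def percentile_of(value, ordered):
    """Percentile of a data point: (# of points below / total) * 100."""
    below = sum(x < value for x in ordered)
    return below * 100 / len(ordered)

def value_at_percentile(k, ordered):
    """Data value for the k-th percentile using L = (k / 100) * n."""
    whole, remainder = divmod(k * len(ordered), 100)
    if remainder == 0:
        # L is a whole number: average the L-th and (L + 1)-th values.
        return (ordered[whole - 1] + ordered[whole]) / 2
    # L is a decimal: round up and use the value in that position.
    return ordered[whole]  # 0-based index `whole` is position whole + 1

q1 = value_at_percentile(25, ages)
q2 = value_at_percentile(50, ages)
q3 = value_at_percentile(75, ages)
iqr = q3 - q1

# Outlier limits from these notes: more than 3 * IQR beyond Q1 or Q3.
lower_limit = q1 - 3 * iqr
upper_limit = q3 + 3 * iqr
outliers = [x for x in ages if x < lower_limit or x > upper_limit]

print("percentile of 29:", percentile_of(29, ages))
print("Q1, Q2, Q3:", q1, q2, q3, "IQR:", iqr)
print("outliers:", outliers)
```

For these ages, four of the ten values lie below 29, so 29 is at the 40th percentile. The quartiles are Q1 = 21, Q2 = 29.5 and Q3 = 39, the IQR is 18, and no age lies more than 3 × IQR beyond the quartiles.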
Example: For the decibel data above, should 77.1 be considered an outlier?

§2.7 Exploratory Data Analysis (EDA)

Exploratory data analysis is the first step any statistician takes when looking at a data set for the first time. It is important to see trends in the data such as shape, center and variation. Usually the first exploratory analysis conducted is an investigation of shape. With consideration of shape and data type, the most appropriate measures of center and variation can be calculated. Once a statistician has conducted this exploratory analysis, they are prepared for further analysis of the data using the methods discussed in the remainder of the book.

Investigation of Shape: Histograms / Stem-and-Leaf Plots / Dot Plots / Boxplots
Measures of Center: Mean / Median / Mode
Measures of Variation: Variance / Standard Deviation / Range / IQR
Outlier Investigation: Minimum / Maximum / 3 × IQR beyond Q1 & Q3

Of all the exploratory analyses above, the only one not discussed thus far is the boxplot. The boxplot is a graph of the 5 number summary that shows the center, variation, position, spread and shape of the data. It could not be discussed with the other graphical representations of shape because it requires the use of the quartiles.

5 Number Summary: Minimum & Maximum, Q1, Q2 & Q3

A boxplot uses the 5 number summary in a scaled drawing where the maximum and minimum are each represented by a small marking (usually a vertical line), the 1st and 3rd quartiles form a box with the median as a vertical divider of that box, and “whiskers” are drawn from the central box to the minimum and maximum. If there are outliers present, they are sometimes represented by asterisks (especially in Minitab). The boxplot shows the shape by showing where the “bulk” of the data lies in relation to the minimum and maximum, as well as showing a “swaying” of the data based upon where the median lies within the central box (think of the median as a fulcrum).
Because the boxplot is a scaled drawing, it makes a nice comparison tool for multiple data sets.

Example: For the following data, representing a sample of the diameters (in feet) of Indian dwellings in Wisconsin, create a stem-and-leaf plot to order the data, find the 5 number summary and then draw a boxplot (I want labels on the boxplot indicating the 5 number summary).

22, 24, 24, 30, 22, 20, 28, 30, 24, 34, 36, 15, 37
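The first two steps of this example, ordering the data with a stem-and-leaf plot and finding the 5 number summary, can be sketched in Python. This is an illustration under assumptions: the function names are made up, the stem-and-leaf helper assumes whole numbers below 100 (stems of 10's), and the quartiles use the $L_k = \frac{k}{100} \cdot n$ rule from §2.6 (software such as Minitab or a TI calculator may use a slightly different quartile rule).

```python
diameters = [22, 24, 24, 30, 22, 20, 28, 30, 24, 34, 36, 15, 37]  # feet

def stem_and_leaf(data):
    """Map each stem (tens digit) to its ordered leaves (ones digits)."""
    plot = {}
    for x in sorted(data):
        plot.setdefault(x // 10, []).append(x % 10)
    return plot

def value_at_percentile(k, ordered):
    """Data value for the k-th percentile using L = (k / 100) * n."""
    whole, remainder = divmod(k * len(ordered), 100)
    if remainder == 0:
        # L is a whole number: average that value and the next one.
        return (ordered[whole - 1] + ordered[whole]) / 2
    # L is a decimal: round up to the next position.
    return ordered[whole]

def five_number_summary(data):
    ordered = sorted(data)
    q1, q2, q3 = (value_at_percentile(k, ordered) for k in (25, 50, 75))
    return ordered[0], q1, q2, q3, ordered[-1]

for stem, leaves in stem_and_leaf(diameters).items():
    print(stem, "|", "".join(str(leaf) for leaf in leaves))

print("min, Q1, Q2, Q3, max:", five_number_summary(diameters))
```

Under these assumptions the 5 number summary is 15, 22, 24, 30, 37, which gives the five labels needed on the boxplot.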