FieldstonBiologyStatisticsSummary(OffWhite)


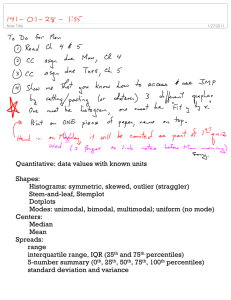

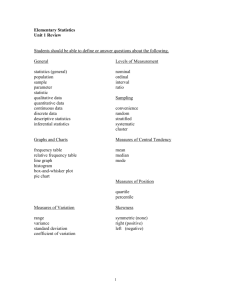

FIELDSTON BIOLOGY STATISTICS SUMMARY PACKET

MEAN

The average: the sum of a set of data divided by the number of data points.

When to use: to describe the middle of a set of data. Most useful when the data set has no outliers and is not skewed to one extreme.

- Useful when comparing sets of data
- Affected by extreme values or outliers

Example: 4, 5, 6, 5, 4

Mean: (4 + 5 + 6 + 5 + 4) / 5 = 4.8

MEDIAN

The middle value, or the mean of the middle two values, when the data is arranged in numerical order.

When to use: to describe the middle of a set of data. More helpful than the mean when there are outliers or the data is skewed.

- Useful when comparing sets of data
- Not as affected by extreme values or outliers
- If the numbers in the data are more clustered, without outliers or skew, the mean provides more information than the median

Example: 4, 5, 6, 5, 4

Median: arranged in order, the data is 4, 4, 5, 5, 6, so the median is 5

MODE

The value, or number, that appears the most. It is possible to have more than one mode, or for no mode to exist.

When to use: when the data are non-numeric or when asked to choose the most popular item.

- Not as affected by outliers or skewed data
- When no values repeat in the data set, every value is a mode and the mode is not useful
- When there is more than one mode, it is difficult to interpret and/or compare

Example: 4, 5, 6, 5, 4

Mode = 4 and 5

DISTRIBUTION

NORMAL DISTRIBUTION VS SKEWED DISTRIBUTION OF DATA:

(Figure panels: Normal Distribution, Skewed Distribution, Outliers)

NORMAL DISTRIBUTION: In a normal distribution, extremely large values and extremely small values are rare. The most frequent values are clustered around the mean (which here is the same as the median and mode).

SKEWED DISTRIBUTION: The data is shifted to one extreme. This may be due to a real phenomenon that you are studying in your experiment. Another statistical tool (line of best fit) may be helpful in demonstrating that the skewed pattern is REAL, and not due to chance.
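The mean, median, and mode examples above can be checked with a few lines of Python. This is only a sketch using the standard-library `statistics` module; the extra value of 40 is a hypothetical outlier added for illustration and is not part of the packet's data.

```python
from statistics import mean, median, multimode

data = [4, 5, 6, 5, 4]  # the example data set from the packet

print(mean(data))       # 4.8
print(median(data))     # 5
print(multimode(data))  # [4, 5]  (two modes)

# Hypothetical extra value, added only to show the effect of an outlier.
with_outlier = data + [40]

print(mean(with_outlier))    # about 10.67: the mean is pulled toward the outlier
print(median(with_outlier))  # 5.0: the median barely moves
```

The comparison at the end shows why the median is the safer description of the middle when a data set contains an extreme value.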
A skewed distribution is not necessarily the same thing as a distribution with outliers.

OUTLIERS

An outlier is an observation that is numerically distant from the rest of the data.

- Outliers can occur by chance in any distribution, but they are often indicative of a measurement error.
- There is no rigid mathematical definition of what constitutes an outlier. Ultimately the determination is subjective and must be discussed openly.
- If the mean equals (or is close to) the median, there are probably no outliers.
- If the mean is not close to the median, the gap is most likely due to an outlier.
- Often you will “know one when you see one.”
- Estimators capable of coping with outliers are said to be robust: the median is a robust statistic, while the mean is not.

How to Deal with OUTLIERS (Not Necessarily SKEWED DATA)

If you can identify a concrete reason why the outlier occurred, you can simply throw it out.

WHEN TO THROW OUT DATA, examples:

- One group performed their experiment on a different day than the rest of the groups, and the conditions of the experiment were affected by running it on a different day (for example, using reactive solutions that were a day older).
- The group with the outlier noted that they had spilled some of their solution.

CAUTION: whatever criterion you use to throw out a piece of data must be applied to ALL other groups. Example: a procedure called for you to keep the solutions at 23 degrees C. If you threw out data from one group because they performed the experiment at 33 degrees C, then you must throw out any data that was obtained at 33 degrees C, even if that data “looks good.”

If you can’t find a legitimate way of eliminating an outlier, you can always perform the statistical analysis WITH and WITHOUT the outlier included AND include both analyses. You could describe how, while the data did not support your hypothesis, eliminating this one outlier changes the data enough to support it.
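The outlier advice above (apply the same exclusion criterion to every group, and report the analysis with and without the excluded data) can be sketched in Python. All group names and measurements below are invented for illustration; only the 23 and 33 degrees C temperatures come from the packet's example.

```python
from statistics import mean, median

# Hypothetical group results: (group name, temperature in degrees C, measured value).
results = [
    ("A", 23, 4.1),
    ("B", 23, 4.4),
    ("C", 33, 9.8),  # looks like an outlier
    ("D", 23, 4.0),
    ("E", 33, 4.2),  # "looks good", but was also run at 33 degrees C
]

REQUIRED_TEMP_C = 23  # the temperature the procedure called for


def summarize(values):
    """Return (mean, median) so the two can be compared."""
    return mean(values), median(values)


# Analysis WITH every data point.
all_values = [value for _, _, value in results]

# Analysis WITHOUT the excluded data. The same criterion is applied to ALL
# groups, so group E is dropped along with group C.
kept_values = [value for _, temp, value in results if temp == REQUIRED_TEMP_C]

print("with all data:   mean=%.2f median=%.2f" % summarize(all_values))
print("after exclusion: mean=%.2f median=%.2f" % summarize(kept_values))
```

With all five values the mean sits well above the median, which is the packet's signal that an outlier is probably present. After the exclusion the two are close together.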
ACCURACY vs PRECISION

Accuracy: how close a measured value is to the actual (true) value.

Precision: how close the measured values are to each other.

If you are playing soccer and you always hit the left goal post instead of scoring, then you are not accurate, but you are precise!

ERRORS

- Systematic Error: impacts the accuracy of the experiment. These errors may be reduced by some form of modification to the experimental design or execution.
- Random Error: impacts the precision of the experiment. Reduce the effect by averaging multiple trials.
- Experimental Design / Method Error: errors in the underlying assumptions about how the DV (dependent variable) is being measured.
- Human Error: for example, putting in 2 drops when 3 drops were called for.
- Instrumental Error: for example, a balance that is calibrated correctly but gives two different measurements.

ROUNDING

When calculating, the rounded value should not go beyond the precision of the instrument that was used to record the data.

If the last value is between 1 and 4, round down. If the last value is between 5 and 9, round up.

Example: 3.15 + 2.7 + 1.624 = 7.474 → 7.5 (2.7 is recorded only to the tenths place, so the sum is rounded to the tenths place)

% CHANGE

Describes the amount of change relative to the original value.

% Change = (Final - Initial) / Initial * 100

Example: Initial = 2 grams, Final = 3 grams

% Change: (3 - 2) / 2 * 100 = 50%

ABSOLUTE AVERAGE DEVIATION

Absolute Average Deviation tells you the average spread (range) of the numbers around the mean of a sample. To calculate the absolute average deviation:

1. Take the difference between each score and the mean.
2. Take the average of the absolute values of all the deviations.

The value you get represents the spread, or range, of your data.

% ABS AVE DEV RELATIVE TO MEAN

The percentage that the Absolute Average Deviation represents relative to the Mean = (ABS AVE DEV / MEAN) * 100

Example: MEAN = 30, ABS AVE DEV = 5

% ABS AVE DEV relative to Mean = (5 / 30) * 100 = 16.7%

T TEST

When to use: when comparing two different data sets. The t test helps us see whether two data sets are different enough from each other that we don’t think the difference is due to chance. If they are different enough, we say the difference is statistically significant.

The calculation takes into consideration both the difference in means and the range/deviation within each data set.
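The calculation tools in this packet (% change, absolute average deviation, and the t test) can be sketched in Python as follows. The function names are hypothetical, and the two sample groups are invented for illustration. The packet does not say which form of t test it means; this sketch assumes Welch's version for two independent samples and computes only the t statistic, not a significance decision.

```python
from math import sqrt
from statistics import mean, variance


def percent_change(initial, final):
    """(Final - Initial) / Initial * 100."""
    return (final - initial) / initial * 100


def absolute_average_deviation(values):
    """Average of the absolute differences between each value and the mean."""
    m = mean(values)
    return sum(abs(v - m) for v in values) / len(values)


def percent_aad_of_mean(values):
    """Absolute average deviation expressed as a percentage of the mean."""
    return absolute_average_deviation(values) / mean(values) * 100


def welch_t(sample_a, sample_b):
    """t statistic for two independent samples (Welch's form, an assumption)."""
    standard_error = sqrt(variance(sample_a) / len(sample_a)
                          + variance(sample_b) / len(sample_b))
    return (mean(sample_a) - mean(sample_b)) / standard_error


print(percent_change(2, 3))  # 50.0, matching the packet's example

data = [4, 5, 6, 5, 4]  # the packet's example data set
print(absolute_average_deviation(data))  # about 0.64
print(percent_aad_of_mean(data))         # about 13.3

# Hypothetical measurements from two groups.
control = [4.1, 4.4, 4.0, 4.3, 4.2]
treated = [5.0, 5.3, 4.9, 5.4, 5.1]
print(welch_t(control, treated))
```

The t statistic grows in magnitude when the means are far apart and shrinks when the spread within each group is large, which is the trade-off described above. Turning it into a statement about statistical significance requires a t distribution table or a statistics library such as SciPy (`scipy.stats.ttest_ind`).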
