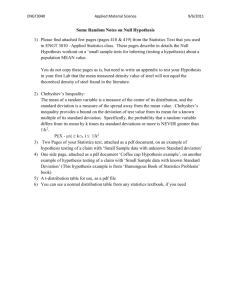

Charpy Impact Test: Steel Strength & Manufacturing Statistics


EXPERIMENT A4
Impact Strength of Steel and Manufacturing Statistics

Summary: In this lab, you will perform a Charpy impact test on a medium steel. You will be introduced to the use of the ASTM guidelines for materials tests, and to the use of statistical methods for determining how well a manufacturing process is performing.

Instructions: The actual experimentation in this lab can be performed in one afternoon. Some library research is also required to answer the questions and to provide background information. Please follow the experimental steps carefully, because Charpy samples are expensive, and you are limited to 10 per group for impact testing. Ensure that everyone in the group reads the STATISTICS appendix in the lab manual before coming to lab.

Background: Charpy Testing

Charpy testing was developed in the early 1900s as a method of determining the relative impact strength of metals. The Charpy test provides a standard method of studying the impact strength of a component containing a stress concentration. A rectangular beam of metal is notched in the center. It is then placed between two anvils and is broken by a swinging pendulum. By comparing the height to which the pendulum rises after impact with the height from which it was dropped, one can find the total energy absorbed by the sample.

Of particular interest is the way in which some metals, notably steel, show a marked decrease in impact strength at low temperatures, leading to component failures at stresses well below the rated strength of the metal. A discussion of the impact strength of iron and steel products can be found in the ASM Metals Handbook in the library.

The transition from brittle to ductile behavior occurs over a certain temperature range specific to each material. Based on one of several criteria, a transition temperature is usually quoted above which the material is considered ductile, and below which the material may be brittle and suffer cleavage fracture.
Outside the transition region, impact strength is generally constant with temperature, allowing the tabulation of upper shelf and lower shelf impact energies, which may be used as a guide for designers.

Charpy test results cannot be used quantitatively to estimate the impact strengths of complex geometric parts. Charpy tests only provide a relative indicator of comparative impact strength between materials. The test is still widely used, however, to check material quality, because it is quick and inexpensive. Specifications for Charpy strength are still included on structural parts which may be exposed to impact loads in embrittling conditions, such as bridge girders and nuclear power components.

In this lab, you will test Charpy samples of 1018 steel. You will estimate the transition temperature based on two criteria. One is the point at which the impact strength is halfway between the upper and lower shelf energies. The other criterion is based on the appearance of the fractured surface. Ductile rupture surfaces are dull and fibrous in appearance, while cleavage surfaces are shiny and granular. Based on a chart, you will characterize the percentage of the fracture surface which is brittle. The temperature at which 50% of the surface appears brittle provides another definition of transition temperature. This estimate is typically higher than the one based on shelf energies. A description of the purpose of Charpy testing is found in Deformation and Fracture Mechanics of Engineering Materials by R. W. Hertzberg.

Equipment, sample, and procedural specifications for Charpy testing can be found in the ASTM Annual Book of Standards 1994 in the library. The American Society for Testing and Materials (ASTM) is an independent organization representing many materials and manufacturing industries. Its primary mission is to provide specific guidelines on the expected physical properties of all kinds of materials.
This allows industrial users to identify the minimum expected properties of a supplied material based on its ASTM identification code, which makes the choice of materials for many applications much simpler. The ASTM also produces standards for various material processing techniques, and for material performance tests, like the Charpy test. Find the guidelines for Charpy testing. During the lab, note the deficiencies in our setup and procedure, due to time and equipment limitations. In industry, it would be wise to follow the ASTM guidelines whenever possible, and to inform your customers if you are unable to do so.

In the Introduction section of your lab report, address the following questions.
1. What metallic lattice shows the most marked change in notch toughness with temperature?
2. What is the effect of increasing carbon content on the notch toughness and transition temperature of steel?
3. What change in your results would you expect:
   a. If your samples were thicker?
   b. If the notches were sharper?
   c. If the samples were unnotched?
   d. Why are the samples notched?
4. Briefly describe other tests of impact strength.
5. What are the guidelines for impact tests of plastics? How do they differ from those for metals?

EQUIPMENT:
1. Charpy Testing Machine
2. Fisher 615 ºF Forced Convection lab oven
3. Sample tongs
4. (1) Low temperature Dewar flask filled with dry ice (CO2)
5. Antifreeze
6. Methanol, or Isopropanol
7. (4) Styrofoam cups mounted in a foam block
8. 2 Beakers with ice
9. Digital thermometers
10. Heat resistant gloves
11. 10 Standard Charpy samples of cold rolled 1018 Steel
12. Micrometers
13. Vernier calipers

Why Measure Impact Toughness?

Materials are used to build load bearing structures. An engineer needs to know if the material will survive the conditions that the structure will see in service.
Important factors which affect the toughness of a structure include low temperatures, extra loading and high strain rates due to wind or impacts, as well as the effect of stress concentrations such as notches and cracks. These all tend to encourage fracture. The complex interaction of these factors can be included in the design process by using fracture mechanics theory. However, materials and structures do require testing as part of any safety and design assurance process.

[Figure: Typical Charpy Impact Test Machine]

The Charpy test provides a measure of the energy required to break a material under impact. The test was standardized to allow comparison between different types of steel made from a variety of manufacturing processes. The test consists essentially of a hammer with a given amount of energy striking a notched test piece of fixed dimensions and recording the energy required to fracture the test piece at a specific temperature. The experimenter should also record whether the fracture mode was ductile or brittle.

The impact energy measured by the Charpy test is the work done to fracture the specimen. On impact, the specimen deforms elastically until yielding takes place (plastic deformation). Typically a plastic zone develops at the notch. As the test specimen continues to be deformed by the impact, the plastic zone work hardens. This increases the stress and strain in the plastic zone until the specimen fractures.

[Figure: Schematic of Basic Pendulum Function of Charpy Test Machine]

The Charpy impact energy therefore includes the elastic strain energy, the plastic work done during yielding and the work done to create the fracture surface. The elastic energy is usually not a significant fraction of the total energy, which is dominated by the plastic work. The total impact energy depends on the size of the test specimen, and a standard specimen size is used to allow comparison between different materials.
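The pendulum energy balance described above (release height versus rise height after impact) can be sketched numerically. This is a minimal illustration, not the lab's prescribed data reduction: the function name, the pendulum mass, the heights, and the free-swing loss are all hypothetical values chosen for the example, and the pendulum is treated as a point mass.

```python
G = 9.81  # gravitational acceleration, m/s^2


def absorbed_energy(mass_kg, drop_height_m, rise_height_m, free_swing_loss_j=0.0):
    """Energy attributed to breaking the specimen, in joules.

    The pendulum starts with potential energy m*g*h_drop and retains
    m*g*h_rise after impact. The difference, less the loss measured in a
    free swing with no specimen (friction, windage), is taken as the
    energy absorbed by the sample.
    """
    if rise_height_m > drop_height_m:
        raise ValueError("pendulum cannot rise above its release height")
    return mass_kg * G * (drop_height_m - rise_height_m) - free_swing_loss_j


# Hypothetical numbers for illustration only (not this lab's machine).
energy = absorbed_energy(mass_kg=20.0, drop_height_m=1.50, rise_height_m=1.00,
                         free_swing_loss_j=2.0)
print(f"absorbed energy: {energy:.1f} J")
```

On a real machine the dial is already calibrated in energy units, so this arithmetic is only needed to understand what the dial reading represents.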
The impact energy seen by a given test specimen is affected by a number of factors, such as yield strength and ductility, notches, temperature and strain rate, and the fracture mechanism.

[Figure: Charpy Test Specimen]

The Charpy impact test can be used to assess the relative toughness of different materials, e.g. steel and aluminum. It is used as a tool for materials selection in design. It may also be used for quality control, to ensure that the material being produced reaches a minimum specified toughness level.

PROCEDURE:
1. Practice centering a Charpy sample on the anvils with the tongs while wearing the gloves. Remember, this must be done within five seconds after a sample is removed from a thermal bath.
2. Place one Charpy sample and sample holder in the furnace, such that the sample will be placed in the correct configuration on the anvils, and will be easy to remove from the oven. Set the furnace temperature to 200 °C, using the scale calibration chart.
3. When the furnace reaches 200 °C, allow the sample to soak for a minimum of 15 minutes. Try to hold the temperature within +/- 2 °C, if possible.
4. Meanwhile, lift the pendulum to the up position. Move the scale disk down to the height of the pendulum. Push the knife edge attached to the scale down until it touches the pendulum cam.
5. Allow the pendulum to swing freely. Record the value of energy absorbed. This will be your correction factor to all absorbed energy values subsequently obtained.
6. Zero the micrometers. Close each micrometer five times, recording the approximate length of the zero offset for each check. Practice a consistent procedure for closing the jaws. Record the average zero offset for each micrometer. If the offset is greater than 5 microns, try cleaning off the jaw surfaces with a dry tissue.
7. Each group member should measure the sample thickness (perpendicular to the line of the notch) of each of the 10 samples with their own micrometer. Perform your measurements while you are running the Charpy tests to save time.
Each person should record the samples in the same order. Deduct or add your zero offset from/to each measurement.
8. Each person should also measure a few sample dimensions using the Vernier calipers. Everyone should learn to read a Vernier scale during this lab.
9. Once the sample in the oven has soaked for the specified length of time, have one partner stand ready with the pendulum release cord. Place the Charpy sample from the furnace on the sample stage within 5 seconds, with the notch facing away from the pendulum, and allow the pendulum to fall. CAUTION: Do not release the pendulum until the person placing the sample is clear of the machine.
10. After a sample has been broken, retrieve the two pieces and mark the temperature at which they were tested. Keep these pieces separate. Remember, if the sample was hot when it was broken, wait a few minutes before retrieving the pieces to avoid burns.
11. The remaining Standard Charpy samples are to be broken at approximately -40 °C, -20 °C, 0 °C, room temperature, 40 °C, 80 °C, 140 °C and 200 °C. For samples in the furnace, the minimum soak time is 15 minutes. For the sample in the ice water bath, the minimum soak time is only 5 minutes. If a sample is dropped or not in place within 5 seconds, it must be returned to the furnace for 5 minutes before another attempt is made (1 minute in the ice water bath). For each sample, record the energy absorbed. TIP: To speed things up, work from highest to lowest temperature. Leaving the furnace open between runs will expedite temperature decreases. Remember, the holder must soak along with the sample.
12. Fill a beaker with ice from the ice machine in the Chemistry Lab. Add tap water.
13. Use the thermometer to record room temperature in the laboratory.

In the Procedure section of the report, address the following

***PRE-LAB QUESTIONS: To be answered and handed in BEFORE lab begins.
***

QUESTION A: If measurements are made to check the dimension of a part, measurement error plays an important role in the results. Is measurement error random, systematic, or both? How does averaging the values of your nominally identical measurements help?

QUESTION B: Why is it necessary to air soak samples so much longer in order to ensure thermal equilibrium, compared to soaking in liquid?

CALCULATIONS
1. For each of the 10 Charpy samples, take the average of your three measurements to give your experimental thickness.
2. Find the mean thickness of your population sample.
3. Find the standard deviation and variance of your population sample thickness.
4. Suppose the process specifications for machining Charpy samples are as follows:
   a. $\bar{x}$, the mean thickness, must be 10.000 mm +/- 1/6 of the tolerance band (.025 mm), or settings must be checked.
   b. $\sigma$, the standard deviation, must be < 1/6 of the tolerance band (.025 mm), or operators must be observed and the machines inspected.
   Perform a one-sided hypothesis test on the $\sigma$ criterion given, with $\alpha$ = .10, given your sample. Take the null hypothesis to be "Your $\sigma$ is acceptably low." Can you reject the null hypothesis? Use the $\chi^2$ statistic.
5. Perform two one-sided hypothesis tests to check the $\bar{x}$ criterion. ($H_0$ for the first test is "µ is greater than 9.975 mm." For the second test it is "µ is less than 10.025 mm.") Use the Z statistic. $\alpha$ is again .10. Is $\bar{x}$ OK? Find the symmetric confidence interval in which µ is 95% certain to be located, based on your measurements. What is the $\beta$ risk of this experiment for $\bar{x}$ = 10.030, based on your $\sigma$ value and number of measurements?
6. For each of the eight samples you break, use the brittle/ductile chart to estimate the percent brittle surface area. What is the 50% brittle transition temperature of this steel, based on your results and the data given? Find also the temperature at which the fracture energy is halfway between the upper and lower shelf values determined experimentally.
Compare these temperatures.
7. Make a plot of fracture energy vs. temperature for 1018 steel.

Charpy Testing: A Brief Overview

Charpy impact testing is a low-cost and reliable test method that is commonly required by the construction codes for fracture-critical structures such as bridges and pressure vessels. Yet, it took from about 1900 to 1960 for impact-test technology and procedures to reach levels of accuracy and reproducibility such that the procedures could be broadly applied as standard test methods.

By 1900 the International Association for Testing Materials (IATM) had formed Commission 22 On Uniform Methods of Testing Materials. The Commission's Chairman was Georges Charpy of France, who presided over some very lively discussions on whether impact testing procedures would ever be sufficiently reproducible to serve as a standard test.

Monsieur Charpy's name seems to have become associated with the test because of his dynamic efforts to improve and standardize it, both through his role as Chairman of the IATM Commission and through his personal research. He seems to have had a real skill for recognizing and combining key advances (both his and those of other researchers) into continually better machine designs while also building consensus among his co-researchers for developing better procedures. By 1905, Charpy had proposed a machine design that is remarkably similar to present designs, and the literature contains the first references to "the Charpy test" and "the Charpy method". He continued to guide this work until at least 1914.

Probably the greatest single impetus toward implementation of impact testing in fabrication standards and material specifications came as a result of the large number of ship failures that occurred during World War II. Eight of these fractures were sufficiently severe to force abandonment of ships at sea.
Remedies included changes to the design, changes in the fabrication procedures and retrofits, as well as impact requirements on the materials of construction. Design problems such as this can also be tackled by the use of a minimum impact energy for the service temperature, which is based on previous experience.

[Figure: Damaged Liberty Ship]

For example, it was found that fractures of the steel plate in Liberty ships in the 1940s only occurred at sea temperatures for which the impact energy of the steel was 20 J or less. This approach is often still used to specify minimum impact energy for material selection, though the criteria are also based on correlations with fracture mechanics measurements and calculations. It is interesting to note that the impact energy of steel recovered from the Titanic was found to be very low (brittle) at -1 °C. This was the estimated sea temperature at the time of the iceberg impact.

In circumstances where safety is extremely critical, full scale engineering components may be tested in their worst possible service condition. For example, containers for the transportation of nuclear fuel were tested in a full scale crash with a train to demonstrate that they retained their structural integrity: a 140 ton locomotive and three 35 ton coaches crashed at 100 mph into a spent fuel container laid across the track on a flat-bed truck. The train and truck were totally demolished but the container remained sealed, proving the durability of its design and materials.

Test programs also use Charpy specimens to track the embrittlement induced by neutrons in nuclear power plant hardware. The economic importance of the Charpy impact test in the nuclear industry can be estimated by noting that most utilities assess the outage cost and loss of revenue for a nuclear plant to be in the range of $300,000 to $500,000 per day.
If Charpy data can be used to extend the life of a plant one year beyond the initial design life, a plant owner could realize revenues as large as $150,000,000. Further, the cost avoidance from a vessel related fracture and potential human loss is priceless. As of 2006, the NRC has shut down one U.S. plant as a result of Charpy data trends. It is important to note that this plant's pressure vessel was constructed from one-of-a-kind steel and is not representative of other U.S. commercial reactors.

STATISTICS

In any manufacturing process, there will be random and systematic variations in the properties of nominally identical parts. Random errors are caused by slight variations in part positioning, variability in material properties, and other processing parameters. These errors lead to random variations in a given property above and below specifications, which may be estimated using statistical methods. Systematic errors are caused by flaws in the machining process due to die wear, incorrect machine settings, etc. Systematic errors lead to non-random variations in part dimensions which are consistently above or below spec.

Quality control and product testing are generally done by taking a small sample from a large quantity of manufactured parts, and making measurements of critical quantities, such as dimensions, resistivity, quality of finish, etc. From the results of these tests, one must infer whether the process is producing, for the population as a whole, an acceptable ratio of good parts to bad parts, and whether corrections need to be made in the process. Quality control (QC) is used to identify excessive error, which can then be traced and eliminated.

Also important is statistical process control (SPC). This procedure involves taking samples at fixed time intervals (such as daily or before each shift). If any trend is spotted in the results, it represents a buildup of systematic error which might not be spotted using a random sample.
SPC identifies changes in the mean value of a quantity over time. The following definitions give the basic quantities and tools needed for quality control statistics.

THE MEAN

The population mean, $\mu$, is the average value of the quantity of interest (length $L$, for example) for the entire population under study, defined by:

$\mu = \left(\sum_i L_i\right)/N$

where $N$ is the total number of objects produced. Note that this can only be known exactly if every part produced is measured. The average value of $L$ for the sample, the group of parts for which $L$ is actually measured, is called the sample mean, $\bar{x}$. It is defined by:

$\bar{x} = \left(\sum_i L_i\right)/n$

where $n$ is the number of parts sampled. Note that $\bar{x}$ and $\mu$ are the same only if all parts are sampled. One goal of QC testing is to determine if the manufacturing process is producing a mean value of the quantity of interest which matches the specification for that property (check for systematic error).

STANDARD DEVIATION

The population standard deviation, $\sigma$, and the sample standard deviation, $S$, are measures of the spread of your data. They are defined as follows:

$\sigma = \left(\sum_i (L_i - \mu)^2/(N-1)\right)^{1/2}$

$S = \left(\sum_i (L_i - \bar{x})^2/(n-1)\right)^{1/2}$

Related quantities are the population and sample variances, which are $\sigma^2$ and $S^2$ respectively. Another function of QC is to determine if the standard deviation of $L$ is small enough to ensure an acceptably high proportion of "in spec" parts (check for excessive random error).

GAUSSIAN (NORMAL) DISTRIBUTION

The classic "bell curve", this is the distribution expected for a quantity whose variation comes from many small, independent random effects. The general formula of the curve is $A\exp[-(z-\mu)^2/(2\sigma^2)]$, with $A = 1/(\sigma\sqrt{2\pi})$. For the standard normal distribution, $\mu$ is set to 0 and $\sigma$ is set to 1. The standard normal distribution has the useful property that the area under the curve between two values of $z$ is equal to the probability of any given measurement falling in that interval.

QUESTION 1: What must be the area under the standard normal curve on the interval from negative to positive infinity?
What is the probability that a measurement will yield any single value?

Since the normal distribution cannot be integrated in closed form, tables like Table 1 are used. These give the total area under the standard normal curve from negative infinity to the z value shown.

QUESTION 2: Determine the area under the standard normal curve from Z = -1.5 to Z = +1.5.

Z STATISTIC

The probability of a measurement falling in any given range is proportional to the area under the distribution curve on that range. Since normal curves cannot be integrated in closed form, it is necessary to transform any experimental distribution onto the standard normal curve in order to use Table 1. The Z statistic does just this.

$Z = (\bar{x} - \mu)/(\sigma/\sqrt{n})$

Plugging in a z value from the standard normal curve allows you to solve for the corresponding $\bar{x}$ value for your experimental distribution. This can only be done if your sample is assumed to have a normal distribution, such that the standard deviation of your sample is the same as the population standard deviation. If your sample is larger than 30, this assumption is valid. For smaller samples, the t statistic is used.

T STATISTIC

The t statistic transforms points from a distribution curve onto the Student t distribution. This is a bell curve whose total area is 1, with a variable standard deviation depending on the number of samples. This takes into account the fact that one extreme value will have a much greater effect on the standard deviation of a small sample than on that of a large one. The t statistic is defined, for a sample, as:

$t = (\bar{x} - \mu)/(S/\sqrt{n})$

Values of the t distribution are also tabulated. See Table 2.

CONFIDENCE INTERVALS

Since statistics attempts to use a sample to infer the properties of the population as a whole, there is obviously some uncertainty associated with every conclusion made based on statistics. Confidence intervals are intervals within which one is C% certain that the actual population mean falls, based on your sample mean.
Confidence intervals are marked off on a standard normal curve. Any interval which contains 90% of the area under the normal curve of a sample may be termed a "90% confidence interval" for the actual population mean. (You are 90% certain that $\mu$ is contained in this interval.) In practice, two particular 90% intervals are normally of interest. A one-sided confidence interval is an interval from negative infinity to $Z_{.90}$ or from $Z_{.10}$ to positive infinity. In this case, you would state that you are 90% certain that the mean is below $\bar{x}(Z_{.90})$, or equally certain that the mean is above $\bar{x}(Z_{.10})$. A two-sided confidence interval is a symmetric interval about the sample mean. Here you would state that you are 90% certain that the population mean is between $\bar{x}(Z_{.05})$ and $\bar{x}(Z_{.95})$.

QUESTION 3: Suppose a ruler factory has produced a batch of 5000 rulers. A sample of 50 rulers has yielded an average length, $\bar{x}$, of 12.040 inches, with an $S$ value of .050 inches. What is the symmetric 96% confidence interval for $\mu$, the mean length of this batch?

$\chi^2$ STATISTIC

The $\chi^2$ (chi squared) statistic allows one to determine a confidence interval in which the population standard deviation is likely to be located, based on the sample standard deviation. It is defined by:

$\chi^2 = \nu S^2/\sigma^2$

where $\nu = n-1$ is the number of degrees of freedom of the experiment. Values of $\chi^2$ are located in Table 3. Note that the value depends on the number of tests and the confidence level of interest. Note that $\chi^2(a, n)$ is used to determine a value that one is a% certain that $S$ is above. Here, one generally uses one-sided confidence intervals, since the quantity of interest is usually the maximum standard deviation possible given the sample $S$. To find the 90% confidence level so defined, we would use $\chi^2(.10, n)$.

QUESTION 4: Given 20 samples with a standard deviation of .5 mm, what is the maximum $\sigma$ that we are 95% certain is not being exceeded?
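The one-sided bound on the population standard deviation described above can be sketched in code rather than read from a table. This is an illustrative sketch under stated assumptions, not the manual's method: it assumes that the tabulated $\chi^2(.10, \cdot)$ is the value with 10% of the distribution below it, it uses $\nu = n - 1$ degrees of freedom, and the helper names (`gamma_p`, `chi2_quantile`, `max_sigma`) and the sample numbers are hypothetical. The chi-squared quantile is computed with the standard library only, from the series for the regularized incomplete gamma function and a bisection search.

```python
import math


def gamma_p(a, x, tol=1e-14, max_iter=10_000):
    """Regularized lower incomplete gamma function P(a, x), by power series."""
    if x <= 0.0:
        return 0.0
    term = 1.0 / a
    total = term
    for k in range(1, max_iter):
        term *= x / (a + k)
        total += term
        if term < tol * total:
            break
    return total * math.exp(-x + a * math.log(x) - math.lgamma(a))


def chi2_cdf(x, dof):
    """P(chi^2 <= x) for the given degrees of freedom."""
    return gamma_p(dof / 2.0, x / 2.0)


def chi2_quantile(p, dof):
    """Value c such that P(chi^2 <= c) = p, found by bisection."""
    lo, hi = 0.0, dof + 20.0 * math.sqrt(2.0 * dof) + 20.0
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if chi2_cdf(mid, dof) < p:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def max_sigma(sample_std, n, confidence=0.90):
    """Largest sigma consistent with the sample at the given confidence.

    Rearranges chi^2 = nu * S^2 / sigma^2 using the lower-tail quantile,
    with nu = n - 1 (an assumption about the table's convention).
    """
    dof = n - 1
    c = chi2_quantile(1.0 - confidence, dof)
    return math.sqrt(dof * sample_std ** 2 / c)


# Hypothetical sample: 10 thickness readings with S = 0.006 mm.
sigma_max = max_sigma(sample_std=0.006, n=10, confidence=0.90)
print(f"90% upper bound on sigma: {sigma_max:.4f} mm")
```

Comparing `chi2_quantile(0.10, 9)` against the corresponding entry in a printed chi-squared table is a quick way to confirm which tail convention the table uses before relying on either.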
HYPOTHESIS TESTING

Hypothesis tests provide the framework for decisions based on statistics. They are based on one initial assumption (the null hypothesis) and four possible outcomes. The null hypothesis is labeled $H_0$, and is generally a simple statement of what you wish to check. For instance, "The mean length of all rulers produced is 12.00 inches." In the test, you either choose to accept $H_0$, or to reject it in favor of $H_1$, the alternate hypothesis. ("The mean length of all rulers produced is not 12.00 inches.") For a simple test, $H_0$ and $H_1$ must include all possibilities, i.e. "The mean length of all rulers is 11.50 inches" is not a valid alternate hypothesis.

After the test is done, your decision will fall into one of four categories:
1. $H_0$ is correctly found to be true.
2. $H_0$ is found to be true when it is not true, a Type 2 error.
3. $H_0$ is correctly rejected because it is false.
4. $H_0$ is found to be false when it is true, a Type 1 error.

Both types of error will have some risk of occurring, because we are using a sample to approximate a whole population. The risk of committing a Type 1 error is termed the $\alpha$ risk, the risk of committing a Type 2 error the $\beta$ risk. Before the experiment begins, it is vital to decide what level of each risk you are willing to accept. This will determine how many samples you will need to test. You must weigh the relative consequences of being wrong versus the time and expense the test entails. If you decide that the mean of the rulers is 12.00 inches when it is not, your products may be defective. Conversely, if you decide the mean is not 12.00 inches when it is, you will be disrupting the manufacturing process for no good reason. As you can see, the choice of $\alpha$ and $\beta$ matters. For example, if you decide you must be 99% sure that your rulers are 12.00 inches, you will find that your risk of shutting down the line unnecessarily will be much higher than if you only need to be 90% sure.
Hypothesis tests are only valid if confidence levels and courses of action are decided on before the experiment begins. If an experimenter decides she must be 95% certain that tobacco causes cancer, for instance, she cannot say, after collecting the data, that she now must be 99% sure, because this changes the acceptable [...] fail to understand the limitations of statistical inference.

In choosing risks, it is standard practice to decide on your criterion, which should be the decision you are least willing to make incorrectly, set an appropriate $\alpha$ level, then choose a sample size which will [...] case you must make clear exactly how certain (or uncertain) you are of your results, and how many more samples you would require to attain an acceptable level of certainty.

What statistic is used for the test will be determined by what you are studying. For a simple two-level test (GO vs NOGO), the Z or t statistic can be used if you are checking your mean value, while the $\chi^2$ statistic is used if you are trying to characterize the repeatability of your process.

EXAMPLE: The mean length of a shipment of rulers must be 12.00 inches, +/- .02 inches. Unless we are 90% certain that the line is producing out-of-spec rulers, we are to keep the line running. Our null hypothesis will therefore be "The mean length of the rulers is 12.00 inches". We have had $\alpha$ fixed for us as 0.10. We are given 20 rulers, randomly selected from the first run of 500, and are asked to perform a quality check. We must use a t test, since we have fewer than 30 samples. Our $\nu$ value, the number of degrees of freedom, is n-1 = 19. Since this is a two-sided test, our t value will be at $\pm t(.95, 19)$, which is $\pm 1.729$. Thus, we will fail to reject the null hypothesis if t falls within this range. We now measure the length of each ruler to +/- .01 inch. On doing so, we find the following:

$\bar{x}$ = 12.011 in.
$S$ = .028 in.

We can now calculate the t value for our sample.

$t = (12.011 - 12.00)/(.028/\sqrt{20}) = 1.76$
Since this is out of our range, we must reject the null hypothesis and shut down the production line.

We can also estimate the $\beta$ risk, the risk of concluding that the rulers are in spec when they are not. The non-rejection region for $\bar{x}$ for our test went from:

$[-1.729 \times (.028/\sqrt{20}) + 12.00]$ to $[+1.729 \times (.028/\sqrt{20}) + 12.00]$, or 11.9892 to 12.0108

We must now find the probability that $\bar{x}$ would fall in the non-rejection region if $\mu$ was 12.021. The t values of the non-rejection boundaries would be:

$t_1 = (11.9892 - 12.0210)/(.028/\sqrt{20}) = -5.08$
$t_2 = (12.0108 - 12.0210)/(.028/\sqrt{20}) = -1.63$

The area under the curve between these values would be around 6% of the total, which is good.

Data Collection Table

| Sample # | Target Temp (°C) | Initial Thickness (mm) | Temp. (°C) | Temp. (K) | Fracture Energy (ft-lb) | Final Thickness (mm) |
|---|---|---|---|---|---|---|
| 1 | -40 | | | | | |
| 2 | -20 | | | | | |
| 3 | 0 | | | | | |
| 4 | Room Temp | | | | | |
| 5 | 40 | | | | | |
| 6 | 80 | | | | | |
| 7 | 140 | | | | | |
| 8 | 200 | | | | | |
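Once the data collection table is filled in, the halfway-energy transition temperature asked for in the calculations can be estimated by interpolation. The sketch below is one possible way to reduce the data, not a required procedure. It assumes that the free-swing reading is subtracted from every dial reading, that the smallest and largest corrected energies stand in for the lower and upper shelves, and that linear interpolation between neighboring points is adequate. All readings and function names are hypothetical.

```python
FT_LB_TO_J = 1.3558179483  # joules per foot-pound (force)


def to_kelvin(temp_c):
    """Convert degrees Celsius to kelvin."""
    return temp_c + 273.15


def corrected_energy_j(reading_ft_lb, free_swing_ft_lb):
    """Dial reading minus the free-swing loss, converted to joules."""
    return (reading_ft_lb - free_swing_ft_lb) * FT_LB_TO_J


def halfway_transition_temp(temps_c, energies, lower_shelf, upper_shelf):
    """Temperature where the energy crosses the midpoint of the two shelves.

    Uses linear interpolation between the first pair of neighboring data
    points (sorted by temperature) that bracket the midpoint energy.
    """
    target = 0.5 * (lower_shelf + upper_shelf)
    points = sorted(zip(temps_c, energies))
    for (t0, e0), (t1, e1) in zip(points, points[1:]):
        if e0 <= target <= e1 and e1 != e0:
            return t0 + (target - e0) * (t1 - t0) / (e1 - e0)
    raise ValueError("data do not bracket the midpoint energy")


# Hypothetical readings for illustration only (not measured data).
temps_c = [-40, -20, 0, 22, 40, 80, 140, 200]
readings_ft_lb = [5, 6, 10, 30, 60, 90, 100, 100]
free_swing_ft_lb = 1.0

energies_j = [corrected_energy_j(r, free_swing_ft_lb) for r in readings_ft_lb]
t_half = halfway_transition_temp(temps_c, energies_j,
                                 lower_shelf=min(energies_j),
                                 upper_shelf=max(energies_j))
print(f"halfway-energy transition: {t_half:.1f} C ({to_kelvin(t_half):.2f} K)")
```

With real data, averaging several points on each shelf is likely a better shelf estimate than a single minimum or maximum, and the same interpolation can be applied to the percent-brittle estimates to find the 50% brittle temperature.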