Temporal Behavior of Web Usage

advertisement

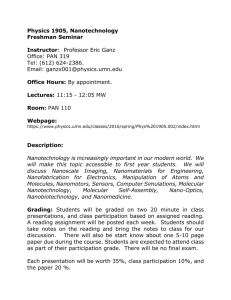

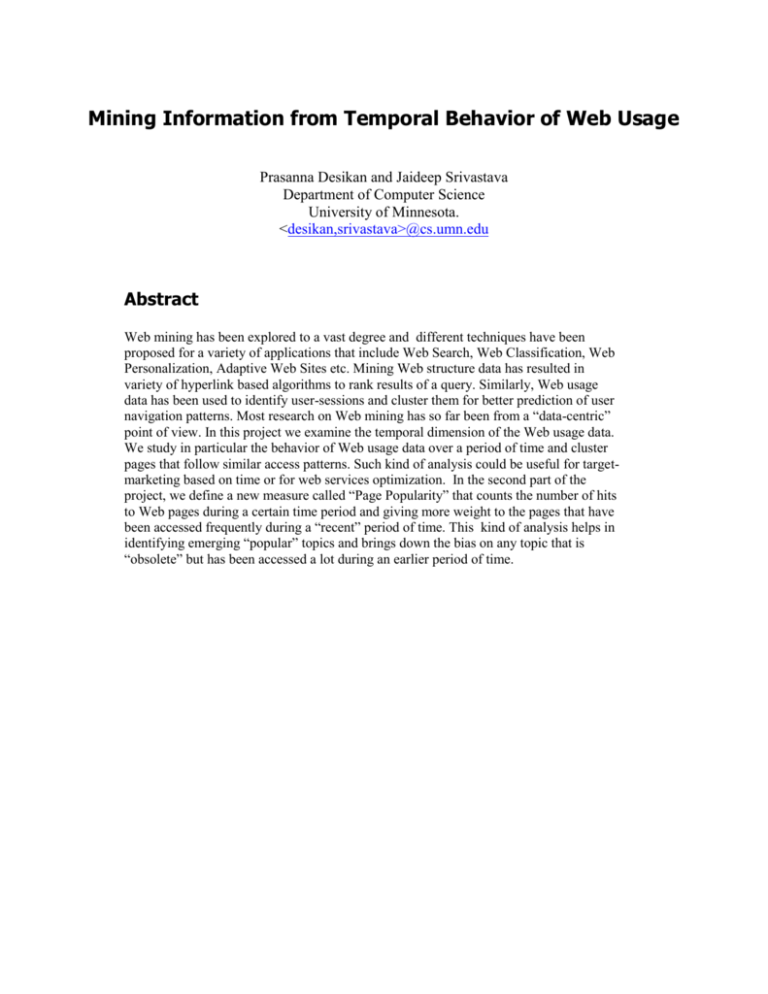

Mining Information from Temporal Behavior of Web Usage Prasanna Desikan and Jaideep Srivastava Department of Computer Science University of Minnesota. <desikan,srivastava>@cs.umn.edu Abstract Web mining has been explored to a vast degree and different techniques have been proposed for a variety of applications that include Web Search, Web Classification, Web Personalization, Adaptive Web Sites etc. Mining Web structure data has resulted in variety of hyperlink based algorithms to rank results of a query. Similarly, Web usage data has been used to identify user-sessions and cluster them for better prediction of user navigation patterns. Most research on Web mining has so far been from a “data-centric” point of view. In this project we examine the temporal dimension of the Web usage data. We study in particular the behavior of Web usage data over a period of time and cluster pages that follow similar access patterns. Such kind of analysis could be useful for targetmarketing based on time or for web services optimization. In the second part of the project, we define a new measure called “Page Popularity” that counts the number of hits to Web pages during a certain time period and giving more weight to the pages that have been accessed frequently during a “recent” period of time. This kind of analysis helps in identifying emerging “popular” topics and brings down the bias on any topic that is “obsolete” but has been accessed a lot during an earlier period of time. 1. Introduction Web Mining, defined as the application of data mining techniques to extract information from the World Wide Web, has been classified into three sub-fields: Web Content Mining, Web Structure Mining and Web Usage Mining based on the kind of the data available. This kind of classification is represented in Figure 1. While the Web Content provides the actual textual and other multimedia information, the Web Structure reflects the organization of the Web documents and thus helping in determining their relative importance. Web Structure has been exploited to extract information about the quality of Web pages in the Web. Traditionally, information provided by Web content combined with the Web Structure has been used in the context of search and ranking pages returned by a search result for a query. The stability of the Web structure led to the more research related to Hyperlink Analysis and the field gained more recognition with the advent of Google. Desikan et al provide an extensive survey on Hyperlink Analysis is provided. Structural information has also been used for ‘focused crawling’ – deciding the pages that need to be crawled first. The Web content and structure information have been successfully combined to classify Web pages according to various topics or to identify the topics that a page is known for. The Web structure information has also been applied to identify group of Web pages that share a certain set ideas, called Web Communities. Thus, most of the initial research on Web Mining was focused on Web content and later Web Structure. Data Source Used Figure 1: Web Mining Taxonomy The third kind of Web data, Web Usage reveals the users surfing patterns that has been of interest for a variety of applications. The Web has been widely used for different kinds of personal, business and professional applications that depend on user interactions in the Web. This has increased the need for understanding the users interests and his browsing behavior. The Web Usage data has thus received much attention in the recent times to study human behavior. Srivastava et al [4] provide a survey on Web Usage Mining, identifying the different kinds of Web Usage data, their sources and also provide a taxonomy for the major application areas in Web Usage Mining. At a high level, Web Usage Mining can be divided into three categories depending on the kind of data: • Web Server Data: They correspond to the user logs that are collected at Web server. They contain information about the IP address from which the request was made, the time of request, the URIs of the requested and referral documents and the type pf agent that sent the request. • Application Server data: The data that is generated by dynamically by the various application servers such as the .asp and .jsp files that allow certain applications to be built on top of them and collect the information that results due to certain user actions on the application. 2 • Application Level Data: The data that is provided by the user for an application, such as demographic data. These kinds of data can be logged for each user or event and can be later used to derive useful information. Web Mining research has thus focused more recently on Web Structure and Web Usage. In this project we focus on another important dimension of Web Mining as identified by [5] - the Temporal Evolution of the Web. The Web is changing fast over time and so is the users interaction in the Web suggesting the need to study and develop models for the evolving Web Content, Web Structure and Web Usage. Concept 1 Concept 2 (a) (b) (c) Figure 2: Temporal Evolution of a single Web Document (a) Change in the Web Content of a document over time. (b) Change in the Web Structure i.e. number of inlinks and outlinks; of a document over time. (c) Change in Web Usage of the document over time. The need to study the Temporal Evolution of the Web, understand the change in the user behavior and interaction in the World Wide Web has motivated us to analyze the Web Usage data. We use the user logs obtained from the Web server to study the evolution of the usage of Web documents over time. We perform two kinds of analysis: Temporal Concepts: We first cluster Web pages that have similar access patterns over a period of time and look at Web pages that have similar access patterns during the time period and see how they are related and if they represent any concept or related concepts or any other useful information. Page Popularity: We define a measure for the “popularity” of a page proportional to the number of hits to the page during the time period with more weight to the “recent” history. 3 We finally compare the results of this measure compares to the some of the other popular existing measures to rank Web pages. The experimental results reflect noticeable difference in the rankings. While the usage based ranking metrics, boost up the ranks of the pages that are used as opposed to the pure hyperlink based metrics that rank pages that are used rarely high. In particular, we notice that the Page Popularity ranks the pages that have been used more recently high and brings down the rank of the pages that have been used earlier but have had very low access during the recent period. The rest of the document is organized as follows: In Section 2 we talk about the related work in this area and in the following section, we discuss the approach followed by us. Section 4 discusses the experiments performed and the results. In section 5 we analyze the results and finally in Section 6, we conclude and provide future directions. 2. Related Work In our approach, we take into account pure Web usage data to extract the temporal behavior patterns of Web pages. Web usage data has been a major source of information and has been studied extensively during the recent times. Understanding user profiles and user navigation patterns for better adaptive web sites and predicting user access patterns has been of significant interest to the research and the business community. Cooley et al in [6] and [7] discuss methods to pre-process the user log data and to separate web page references into those made for navigational purposes and those made for content purposes. User navigation patterns have evoked much interest and have been studied by various other researchers [9], [10]. Srivastava et al [4] discuss the techniques to pre-process the usage and content data, discover patterns from them and filter out the non-relevant and uninteresting patterns discovered. [8,4] also serve as good surveys for web usage mining. As discussed earlier usage statistics has been applied to hyperlink structure for better link prediction in field of adaptive web sites. The concept of adaptive web sites was proposed by Pekrowitz and Etzioni [11]. Pirolli and Pitkow [12] discuss about predicting user-browsing behavior based on past surfing paths using Markov models. In [13] Ramesh Sarukkai has discussed about link prediction and path analysis for better user navigations. He proposes a Markov chain model to predict the user access pattern based on the user access logs previously collected. Zhu et al. [14] extend this by introducing the maximal forward reference to eliminate the effect of backward references by the user. They also predict user behavior within the ‘n’ future steps, using a N-Step Markov chain as opposed to the one step approach by Sarukkai. Information foraging theory concepts have also been used recently by Chi et al [15] to incorporate user behavior into the existing content and link structure. They have modeled user needs and user actions using the notion of Information Scent as described earlier. Cadez et al in [16] cluster users with similar navigation paths in the same site. They develop a visualization methodology to display paths for the users within each cluster. They use first order Markov models for clustering, to take into account the order in which the user requests the page. Huang et al in [17] present a “Cube-Model” to represent Web access sessions for data mining. They use K-modes algorithm to cluster sessions described as sequence of page URL Ids. On the other hand, in the area of Web structure mining there has been a lot of research on ranking of Web pages using hyperlink analysis. There have been different hyperlink based methods that have been proposed. Page Rank is a metric for ranking hypertext documents that determines the quality of these documents. Page et al. [18] developed this metric for the popular search engine, Google [19]. The key idea is that a page has high rank if it is pointed to by many highly ranked pages. So the rank of a page depends upon the ranks of the pages pointing to it. This process is done iteratively till the rank of all the pages is determined. The rank of a page p can thus be written as: 4 PR ( p ) d (1 d ) PR (q) n OutDegree(q ) ( q , p )G Here, n is the number of nodes in the graph and OutDegree(q) is the number of hyperlinks on page q. Intuitively, the approach can be viewed as a stochastic analysis of a random walk on the Web graph. The first term in the right hand side of the equation corresponds to the probability that a random Web surfer arrives at a page p out of nowhere, i.e. (s)he could arrive at the page by typing the URL or from a bookmark, or may have a particular page as his/her homepage. d would then be the probability that a random surfer chooses a URL directly – i.e. typing it, using the bookmark list, or by default – rather than traversing a link1. Finally, 1/n corresponds to the uniform probability that a person chooses the page p from the complete set of n pages on the Web. The second term in the right hand side of the equation corresponds to factor contributed by arriving at a page by traversing a link. 1- d is the probability that a person arrives at the page p by traversing a link. The summation corresponds to the sum of the rank contributions made by all the pages that point to the page p. The rank contribution is the Page Rank of the page multiplied by the probability that a particular link on the page is traversed. So for any page q pointing to page p, the probability that the link pointing to page p is traversed would be 1/OutDegree(q), assuming all links on the page is chosen with uniform probability. The other popular metric is Hubs and Authorities. They can be viewed as ‘fans’ and ‘centers’ in a bipartite core of a Web graph. The hub and authority scores computed for each Web page indicate the extent to which the Web page serves as a “hub” pointing to good “authority” pages or as an “authority” on a topic pointed to by good hubs. The hub and authority scores for a page are not based on a formula for a single page, but are computed for a set of pages related to a topic using an iterative procedure called HITS algorithm [K1998]. More recently, Oztekin et al [20], proposed Usage Aware PageRank. They modified the basic PageRank metric to incorporate usage information. In their basic approach assigned weights to the links based on the number of traversals on the link, and thus modifying the probability that a user traverses a particular link in the basic PageRank from 1 to Wl , where W l is the number of traversals on the OutDegree(q) OutTraversed (q) link l and OutTraversed (q) is the total number of traversals of all links from the page q. And also the probability to arrive at a page directly is computed using the usage statistics. The final formula for Usage Aware PageRank is: UPR q UPR q d UPR ( p) 1 d 1 d Wnl 1 d OutDegreeq Wl N q p G q p G OutTravers ed ( q ) where is the emphasis factor that decides the weight to be given to the structure versus the usage information 3. Our Approach Our goal is to cluster pages that have similar usage patterns over time and study them. The motivation behind the project was to study how the information on the Web changes over time and how to model such a change in the information. As time changes, the content, structure and usage of a Web page changes. These changes can be modeled both a single page level or for a collection of pages. Looking from a point of view of a single page, the concept that a Web page represents may change or evolve with 1 The parameter d, called the dampening factor, is usually set between 0.1 and 0.2 [19]. 5 respect to the time. Also, the basic structure of a page may change, i.e. the number of inlinks and the number of out links may change. Since most structural mining work considers that “if a page is pointed to by some other page, them it endorses the view of that page”. So as the number of incoming links changes, the topic that the page represents may change with period of time. Similarly the change in the number of out links may reflect the change in the relevance of the page with respect to a certain topic. The usage data is also affected by the content and structural change in a Web page. The usage data brings in information about the topic the page is “popular” for. And this “popularity” may or may not be necessarily be reflected by the change in the content of the page or the pages pointing to it. A page’s popularity may or may not be affected by the change in its indegree or outdegree. This motivates the need to study the change in the behavior of the Web over a period of time. This idea is not entirely new, the changes to the Web are being recorded by the pioneering Internet Archive project [IA]. Large organizations generally archive (at least portions of) usage data from there Web sites. With these sources of data available, there is a large scope of research to develop techniques for analyzing of how the Web evolves over time. In our project we focus on trying to extract information from the Web usage data inn general and data from Web Server logs to be more specific. H= Total number of hits in “past” data h = Total Number of hits in “recent” data. N= Total Number of days for which web server logs are analysed Figure 3: Concept of Page Popularity 6 We first try to cluster pages based on the total number of hits per day for each Web page. This would cluster pages that have similar access patterns during the given time period. This may reflect pages that are related in some manner, due to which their access patterns have been similar. This kind of analysis will also help in identifying pages that were “popular” during a certain frame of time. The next thing we bring up in this project is a measure called “Page Popularity” to determine the “popularity” of the page in the time period for which we analyzed the data.. In this measure we take into give more weight to the “recent history” than the past, so as to enable upcoming topics to be ranked better than old topics. Though this kind of a thing could be done by just considering a “recent” time period of data that would result in loss of information of the old data. So it would be better to consider the usage data for a longer duration and then weigh the “recent history” more so that there is no loss of information. Considering the old information would be important, specially when doing structure mining, as the web pages are crawled from time to time. So it would be a good idea to store the previous information from the Web graph that existed earlier and also make use the new graph to mine information. This kind of structural information can be obtained for the Internet Archive Project site. We now present the basic idea of “Page Popularity” as shown in Figure 3. The idea is though the Web page that has the access pattern “red” may have total number of hits high, the Web page represented by “green” curve has an increasing usage and so may represent a newer topic or something that is gaining “popularity” as opposed to the Web page that is represented by the “red” curve” which is no longer used that much. The formula we propose is very naïve at this stage, though it captures the main idea behind the approach. The Page Popularity is defined as: PagePopularity K H h H h Where K is some constant and H is the total number of hits for a Web page in the time period considered “past” and ‘h’ is the number of hits for the same web page in the “recent” period. is some parameter that is used to give weight to “recent history”. can be varied depending on the importance of the “recent” data. In our actual implementation, we took the average number of hits during the “past” time period and the “average” number of hits in the “recent” time period. Average was considered as it would neutralize the effect of any sudden spikes or drops in usage per day. If we weigh according to some other scale like linear, such sudden changes may drastically boost or bring down the rank of a page. We considered the first two-thirds of the time as “past” history and last one-third as “recent history”. There was no particular reason to choose so, but it seemed a reasonable estimate. We then weighed the hits in the “recent” history twice as that of the hits in the “past history”. So in the implementation the formula boils down to: 1 H PagePopularity 3 2N 2 h 1 H 4 h * 3 N H h 2N 3 3 7 4. Data Pre-processing One of the main issues in Web usage mining is Data pre-processing. Web usage data consists of all kinds of access to web pages. The general format of a Web server log data looks is shown in Figure 4. IP Address rfc931 authuser Date and time of request 128.101.35.92 [09/Mar/2002:00:03:18 -0600] request status bytes referer user agent "GET /~harum/ HTTP/1.0" 200 3014 http://www.cs.umn.edu/ Mozilla/4.7 [en] (X11; I; SunOS 5.8 sun4u) IP address: IP address of the remote host. Rfc931: the remote login name of the user. Authuser: the username as which the user has authenticated himself. Date: date and time of the request. Request:the request line exactly as it came from the client. Status: the HTTP response code returned to the client. Bytes: The number of bytes transferred. Referer: The url the client was on before requesting your url. User_agent: The software the client claims to be using. Figure 4: Extended Common Log Format (ECLF) of Web Server log For our experiment, we considered only Web pages with .html extension. We also eliminated “robots” by considering web pages that did not have “Mozilla” string in the user-agent field. Inspite of this we noticed some robots like “inktomi” used “Mozilla” in the user agent, which we noticed and so removed all data that had “slurp/cat” string the user agent field. This took care of eliminating most robots and unwanted data. We also pruned data for which the total number of hits was very “low” i.e. lower than the atleast the number of days in the “recent period”. This was just to take into account a web page that was started to use in the “recent” time period and is slowly picking up and so the number of accesses it may have will be low compared to other pages and so if it is a new page it should not be neglected. The data considered was from April through June. We didn’t have usage data after June, and for data before April, the CS website had been restructured, so that could mess up the kind of usage data needed for our experiment. The data we used however was good for intuitive purposes as it contained data in end of Spring Semester and then the period between Spring and summer term where the classes had not started full fledged. So this would give us interesting result as the class web page access would change dramatically after the end of a term. So the clustering of web page patterns for at least certain pages should be similar. Else in general it is difficult to find patterns, as most web pages are accessed very randomly. 8 5. Experimental Results 5.2 Clustering Interesting patterns 1 2 3 High hits during a short interval of time and almost no hits before and after this short period High hits during a short interval of time and lesser traffic during other times Traffic (number of hits) almost none during the latter half of the time period. Figure 5: Clustering of Web pages based on number of hits per day 9 The clustering of the Web pages was done using the tool CLUTO [21]. The number of clusters specified was 10. We tried with various number of clusters and of them 10 revealed a decent clustering of pages from the dendogram produced and as shown in Figure 5 Three interesting patterns were found. The kind of Web pages that belong to these clusters is shown in Figure 6. The first cluster belongs to the set of pages that were accessed a lot during a very short period of time. Most of them are some kind of wedding photos that were accessed a lot, suggesting some kind of a “wedding” event that took place during that time. The cluster of pages is again related to some talk slides of The Twin Cities software process improvement network (Twin-SPIN), that is a regional organization established in January of 1996 as a forum for the free and open exchange of software process improvement experiences and ideas. They seemed to have a talk during that period and hence the access to the slides. The third cluster was the most interesting. It had mostly class web pages and some pages related to “Data Mining” slides. These set of pages had high access during the first period of time, possibly the spring term and then their access died out. So it seemed the “Data Mining” web page was accessed, because someone was doing some work related to “data mining” during that semester, though no “Data Mining” course was as such not offered. 1 www-users.cs.umn.edu/~ctlu/Wedding/speech.html www-users.cs.umn.edu/~gade/boley/re0.html www-users.cs.umn.edu/~gade/boley/wap.html www-users.cs.umn.edu/~ctlu/Wedding/photo2.html www-users.cs.umn.edu/~ctlu/Wedding/wedding.html 2 www.cs.umn.edu/crisys/spin/talks/hedger-jan-00/sld016.htm www.cs.umn.edu/crisys/spin/talks/hedger-jan-00/sld017.htm www.cs.umn.edu/Research/airvl/its/ www.cs.umn.edu/crisys/spin/talks/hedger-jan-00/sld055.htm www.cs.umn.edu/crisys/spin/talks/hedger-jan-00/sld056.htm 3 www-users.cs.umn.edu/~mjoshi/hpdmtut/sld110.htm www-users.cs.umn.edu/~mjoshi/hpdmtut/sld113.htm www-users.cs.umn.edu/~mjoshi/hpdmtut/sld032.htm . www.cs.umn.edu/classes/Spring-2002/csci5707/ www.cs.umn.edu/classes/Spring2002/csci5103/www_files/contents.htm Figure 6: Web pages that belong to the "interesting" clusters. 10 5.2 Page Popularity Our next set of results was with respect to the Page Popularity measure. We ranked the web pages in accordance with the Page Rank, Page Popularity, Total Number of hits and Usage Aware PageRank2. The results are shown in the following figures: e.g These Web pages do not figure in usage based rankings PageRank Results 1. 0.01257895 www.cs.umn.edu/cisco/data/doc/cintrnet/ita.htm 2. 0.01246077 www.cs.umn.edu/cisco/home/home.htm 3. 0.01062634 www.cs.umn.edu/cisco/data/lib/copyrght.htm 4. 0.01062634 www.cs.umn.edu/cisco/data/doc/help.htm 5. 0.01062634 www.cs.umn.edu/cisco/home/search.htm 8. 0.00207191 www.cs.umn.edu/help/software/java/JGL-2.0.2-for-JDK-1.1/doc/api/packages.html 9. 0.00191835 www.cs.umn.edu/help/software/java/JGL-2.0.2-for-JDK-1.0/doc/api/packages.html 10. 0.00176296 www.cs.umn.edu/help/software/java/JGL-2.0.2-for-JDK1.1/doc/api/AllNames.html 11. 0.00176294 www.cs.umn.edu/help/software/java/JGL-2.0.2-for-JDK-1.1/doc/api/tree.html 12. 0.00167152 www-users.cs.umn.edu/~ctlu/Wedding/SP-Web/ 13. 0.00163228 www.cs.umn.edu/help/software/java/JGL-2.0.2-for-JDK-1.0/doc/api/tree.html 14. 0.00163228 www.cs.umn.edu/help/software/java/JGL-2.0.2-for-JDK1.0/doc/api/AllNames.html 15. 0.00155098 www-users.cs.umn.edu/~echi/misc/pictures/ 16. 0.00139978 www.cs.umn.edu/Ajanta/papers/docs/packages.html 17. 0.00123334 www-users.cs.umn.edu/~safonov/brodsky/ 18. 0.00111285 www-users.cs.umn.edu/~mjoshi/hpdmtut/sld001.htm 19. 0.00105998 www-users.cs.umn.edu/~mjoshi/hpdmtut/tsld001.htm 20. 0.00104579 www.cs.umn.edu/Ajanta/papers/docs/AllNames.html 21. 0.00104577 www.cs.umn.edu/Ajanta/papers/docs/tree.html 22. 0.00096958 www.cs.umn.edu/~karypis Figure 7: Ranking Results from PageRank 2 The results of PageRank and Usage Aware PageRank were obtained from Uygar Oztekin, who conducted similar experiments with the usage data in that time period. 11 Page Popularity Results e.g ranked 29 if we count pure hits 1. 0.000723 www.cs.umn.edu/ 2. 0.000145 www-users.cs.umn.edu/~mein/blender/ 3. 0.000081 www-users.cs.umn.edu/~sdier/debian/woody-netinst-test/ 4. 0.000053 www-users.cs.umn.edu/~mjoshi/hpdmtut/ 5. 0.000051 www-users.cs.umn.edu/~dyue/wiihist/ 6. 0.000050 www-users.cs.umn.edu/grad.html 7. 0.000050 www.cs.umn.edu/faculty/faculty.html 8. 0.000046 www-users.cs.umn.edu/~sdier/debian/woody-netinsttest/releases/20020416/ 9. 0.000044 www-users.cs.umn.edu/~desikan/links.html 10. 0.000042 www.cs.umn.edu/courses.html 11. 0.000042 www-users.cs.umn.edu/~dyue/wiihist/njmassac/nmintro.htm 12. 0.000040 www-users.cs.umn.edu/~bentlema/unix/ 13. 0.000039 www.cs.umn.edu/grad/ 14. 0.000037 www.cs.umn.edu/people.html 15. 0.000037 www-users.cs.umn.edu/~echi/papers.html 16. 0.000034 www-users.cs.umn.edu/~wadhwa/bits/ 17. 0.000034 www-users.cs.umn.edu/~konstan/BRS97-GL.html 18. 0.000033 www-users.cs.umn.edu/~kazar/ 19. 0.000032 www-users.cs.umn.edu/~mjoshi/hpdmtut/sld001.htm 20. 0.000032 www-users.cs.umn.edu/~karypis/metis/ th Figure 8: Page Popularity based rankings Total Hits based rankings 1. 2. 3. 4. 5. 6. 7. 8. 9. 10 . 11 . 12 . 13 . 14 . 15 . 16 . 17 . 18 . 19 . 20 . 0.071684 0.012906 0.008998 0.005082 0.005038 0.005002 0.004971 0.004835 0.004275 0.004209 0.004163 0.003787 0.003643 0.003530 0.003524 0.003484 0.003437 0.003436 0.003394 0.003130 www.cs.umn.edu/ www-users.cs.umn.edu/~mein/blender/ www-users.cs.umn.edu/~sdier/debian/woody-netinst-test/ www-users.cs.umn.edu/~wadhwa/bits/ www-users.cs.umn.edu/~mjoshi/hpdmtut/ www.cs.umn.edu/faculty/faculty.html www-users.cs.umn.edu/grad.html www-users.cs.umn.edu/~dyue/wiihist/ www.cs.umn.edu/courses.html www-users.cs.umn.edu/~desikan/links.html www-users.cs.umn.edu/~dyue/wiihist/njmassac/nmintro.htm www-users.cs.umn.edu/~echi/papers.html www-users.cs.umn.edu/~bentlema/unix/ www.cs.umn.edu/grad/ www.cs.umn.edu/people.html www-users.cs.umn.edu/~konstan/BRS97-GL.html www-users.cs.umn.edu/~heimdahl/csci5802/front-page.htm www-users.cs.umn.edu/~heimdahl/csci5802/heading.htm www-users.cs.umn.edu/~heimdahl/csci5802/nav-bar.htm www-users.cs.umn.edu/~shekhar/5708/ Figure 9: Total Hits based ranking 12 Usage Aware PageRank Low–Ranked because course web-pages were not accessed in the month of June, which was considered recent and hence more weight given to pages accessed during that period. 1. 0.01116774 www.cs.umn.edu/ 2. 0.00099434 www-users.cs.umn.edu/~mein/blender/ 3. 0.00067178 www-users.cs.umn.edu/~karypis/metis/ 4. 0.00066555 www.cs.umn.edu/Research/GroupLens/ 5. 0.00065136 www-users.cs.umn.edu/ 6. 0.00064202 www-users.cs.umn.edu/~shekhar/5708/ (37 acc. to Page Popularity) 7. 0.00061524 www.cs.umn.edu/grad/ 8. 0.00056791 www-users.cs.umn.edu/~bentlema/unix/ 9. 0.00050809 www-users.cs.umn.edu/~gopalan/courses/5106/ (72 acc. To Page Popularity) 10. 0.00049271 www.cs.umn.edu/users/ 11. 0.00046833 www-users.cs.umn.edu/~saad/ 12. 0.00040603 www-users.cs.umn.edu/~dyue/wiihist/njmassac/nmintro.htm 13. 0.00040195 www-users.cs.umn.edu/~rieck/language.html 14. 0.00038154 www.cs.umn.edu/classes/ 15. 0.00037953 www-users.cs.umn.edu/~sdier/debian/woody-netinst-test/ ( 3 acc. Page Popularity) 16. 0.00036740 www-users.cs.umn.edu/~bentlema/unix/advipc/ipc.html 17. 0.00036210 www-users.cs.umn.edu/~mjoshi/hpdmtut/ 18. 0.00035329 www-users.cs.umn.edu/~dyue/wiihist/ 19. 0.00035201 www-users.cs.umn.edu/~karypis/ 20. 0.00034844 www-users.cs.umn.edu/~safonov/brodsky/ Figure 10: Usage Aware PageRank The results from the different ranking measures reveals that because PageRank gives more importance to structure and does not include usage statistics, it ranks pages that are well linked high, though they are never used. For example, it ranked all the “cisco” and “jave-help” pages really high as they were structurally well-connected. Simple count of total hits is not very useful as the number of hits could be accumulating for a variety of reasons and pages that are used since a long time will tend to get a higher rank. Although simply counting the hits reveals to some extent what the user actually finds useful. Usage Aware PageRank makes use of both the usage statistics and the link structure and in all gives a balanced result in terms of the both the usage and Link structure. As it can been the CS home page is ranked high in Usage Aware PageRank and is ranked below ‘100’ using PageRank. It can be noticed that two of the pages in UPR are course web pages that are linked from the home pages of the professors and have been accessed a lot. So the rank of these pages has been boosted up. But “Page Popularity” on the other hand gives more weight to the “recent history” and since these course web-pages were not accessed during the month of June after the semester ended, their rankings were brought down. Thus by weighing the “recent” history more we can boost the ranks of the pages that are more “popular” or significant for that time period. 6. Conclusions and Future Directions Clustering web page access patterns over time may help in identifying a “concept” that is “popular” during a time period. PageRanks tend to give more importance to structure alone, hence pages that are heavily linked may be ranked higher though not used. Hence the importance is given to the person who 13 creates the Web page. Usage Aware Pagerank combines usage statistics with link information giving importance to both the creator and the actual user of a web page. Page popularity gives more weight to ‘recent’ history and helps in ranking “obsolete” items lower and boosting up the topics that are more “popular” during that time period. Certainly, more experiments run over longer time-period data. Also there needs to be a refinement of “recent” history definition in terms of the time period that is considered recent and the weight to be given to “recent” history. Another useful thing would be to apply time-based usage weights to link traversals and re-compute usage aware page rank. In general it would be good to come up with time based metrics that would help in ranking Web pages or any Web based properties relevant to the time period. For example, Metrict t Metrict 1 Metric(t ) where t would be the “recent” time period and is the weight assigned to the data gathered from the “past”. This kind of analysis would also help us not lose information about the data that changed during a period of time. Thus the study the behavior of change in the web content, web structure and web usage over time and their effects on each other would help us understand the way Web is evolving and the necessary steps that can betaken to make it a better source of information. Acknowledgements The initial ideas presented were the result of discussions with Prof. Vipin Kumar and the Scout group at the Department of Computer Science. A special mention must be made of Pang-Ning Tan, who gave valuable comments and suggestions during the project. Uygar Oztekin, provided the ranking results using PageRank and Usage Aware PageRank metrics. This work was partially supported by Army High Performance Computing Research Center contract number DAAD19-01-2-0014. The content of the work does not necessarily reflect the position or policy of the government and no official endorsement should be inferred. Access to computing facilities was provided by the AHPCRC and the Minnesota Supercomputing Institute. 14 References 1. P. Desikan, J. Srivastava, V. Kumar, P.-N. Tan, “Hyperlink Analysis – Techniques & Applications”, Army High Performance Computing Center Technical Report, 2002. 2. O. Etzioni, “The World Wide Web: Quagmire or Gold Mine”, in Communications of the ACM, 39(11):65=68,1996 3. R. Cooley, B. Mobasher, J. Srivastava, “Web Mining: Information and Pattern Discovery on the World Wide Web”, in Proceedings of the 9th IEEE International Conference on Tools With Artificial Intelligence (ICTAI’ 97), Newport Beach, CA, 1997. 4. J. Srivastava, R. Cooley, M. Deshpande and P-N. Tan. “Web Usage Mining: Discovery and Applications of usage patterns from Web Data”, SIGKDD Explorations, Vol 1, Issue 2, 2000. 5. J. Srivastava, P. Desikan and V. Kumar, “Web Mining – Accomplishments and Future Directions”, Invited paper in National Science Foundation Workshop on Next Generation Data Mining, Baltimore, MD, Nov. 1-3, 2002. 6. R. Cooley, B. Mobasher, and J.Srivastava. “Data Preparation for mining world wide web browsing patterns”. Knowledge and Information systems, 1(!) 1999. 7. R. Cooley, B. Mobasher, and J. Srivastava. “Grouping Web Page References into Transactions for Mining World Wide Web Browsing Patterns”, Journal of Knowledge and Information Systems (KAIS), Vol. 1, No. 10, 1999, pp. 1-3. 8. B. Masand and M. Spiliopoulu. WebKDD-99: Workshop on Web Usage Analysis and user profiling. SIGKDD Explorations , 1(2), 2000 9. M. S. Chen, J.S. Park, and P.S. Yu. Data Mining for path traversal patterns in a web environment. In the Proc. of the 16th International Conference on Distributed Computing Systems, pp 385-392, 1996. 10. A. Buchner, M. Baumagarten, S. Anand, M.Mulvenna, and J.Hughes. Navigation pattern discovery from internet data. In Proc. of WEBKDD’99 , Workshop on Web Usage Analysis and User Profiling, Aug 1999. 11. M. Perkowitz and O. Etzioni, Adaptive Web sites: an AI challenge. IJCAI97 12. P. Pirolli, J. E. Pitkow, Distribution of Surfer’s Path Through the World Wide Web: Empirical Characterization. World Wide Web 1:1-17, 1999. 13. R.R. Sarukkai, Link Prediction and Path Analysis using Markov Chains, In the Proc. of the 9th World Wide Web Conference , 1999. 14. Jianhan Zhu, Jun Hong, and John G. Hughes, Using Markov Chains for Link Prediction in Adaptive Web Sites. In Proc. of ACM SIGWEB Hypertext 2002. 15. Ed H. Chi, Peter Pirolli, Kim Chen, James Pitkow. Using Information Scent to Model User Information Needs and Actions on the Web. In Proc. of ACM CHI 2001 Conference on Human Factors in Computing Systems, pp. 490--497. ACM Press, April 2001. Seattle, WA. 16. I Cadez, D. Heckerman, C. Meek, P. Smyth, S. White, “'Visualization of Navigation Patterns on a Web Site Using Model Based Clustering”, Proceedings of the KDD 2000, pp 280-284 17. J.Z Huang, M. Ng, W.K Ching, J. Ng, and D. Cheung, “A Cube model and cluster analysis for Web Access Sessions”, In Proc. of WEBKDD’01, CA, USA, August 2001. 18. L. Page, S. Brin, R. Motwani and T. Winograd “The PageRank Citation Ranking: Bringing Order to the Web” Stanford Digital Library Technologes, January 1998. 19. S. Brin, L. Page, “The anatomy of a large-scale hyper-textual Web search engine”. In the 7th International World Wide Web Conference, Brisbane, Australia, 1998. 20. B.U. Oztekin, L. Ertoz and V. Kumar, “Usage Aware PageRank”, Submitted to WWW2003. 21. CLUTO, http://www-users.cs.umn.edu/~karypis/cluto/index.html 15