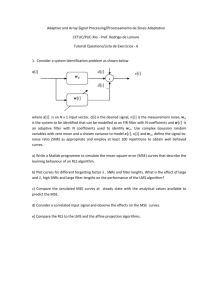

PyiPhyoMaung_FYP

advertisement