An Interval-value Clustering Approach to Data Mining

Yunfei Yin

Department of Computer Science

Guangxi Normal University

Guilin 541004, P.R. China

Faliang Huang

Institute of Computer Application

Guangxi Normal University

Guilin 541004, P.R. China

yinyunfei@vip.sina.com

huangfliang@163.com

ABSTRACT

Interval-value clustering algorithms grew out of advances in computational mathematics and are widely used in engineering, commerce, aviation, and other fields. To put interval methods and theory into practice, and to find more valuable knowledge when mining and analyzing enterprise data, this paper presents a data mining method based on interval clustering. After introducing three interval clustering methods, it offers a method for mining association rules in interval databases. Compared with traditional data mining methods, this method is more accurate and more effective in the cases studied here. There is therefore considerable room for its further development, and it is expected to have practical and social significance for commercial information mining and decision making.

KEYWORDS

Interval-value, clustering, interval database, interval distance, data mining

INTRODUCTION

Since Agrawal put forward the idea of mining Boolean association rules [1], data mining has been a fairly active research branch. Over the past ten years, Boolean association rule mining has received growing attention from researchers, who have published a great many papers on it. For example, references [2][12] brought forward a fast algorithm for mining Boolean association rules, which can be used to arrange commodities in supermarkets; reference [13] put forward the idea of causality association rule mining; reference [9] offered a useful algorithm for mining negative association rules. Boolean association rule mining tries to find regular patterns of consumer behavior in a retail database, and the mined rules can explain patterns such as "if people buy creamery products, they will also buy sugar". However, Boolean association rule mining restricts the application area to binary data.

To find more valuable association rules, reference [6] mined clustering association rules by way of clustering. But because information is often incomplete and the boundary between one thing and another is often ambiguous, representing an object by interval numbers can become the only workable choice. Consider, for example, the rule "50 percent of male people aged 40 to 65 who earn 80,000 to 1,000,000 each year own at least two villas". A case like this calls for the interval clustering model. On this basis, this article introduces a model method suited to mining such database information. Tested on several real examples, the method finds more valuable association rules.

This paper is organized as follows. The following section describes three interval-value clustering methods with relevant examples. Section 3 offers two interval-value mining approaches, for a common database and for an interval database respectively. Section 4 reports the experimental results. Section 5 gives a brief conclusion.

INTERVAL-VALUE CLUSTERING METHOD

The interval-value clustering algorithm [7] grew out of advances in computational mathematics, and it is widely used in many fields such as engineering and commerce [13]. Data mining based on interval-value clustering is one application of this model, which involves the following three interval-value clustering methods: Number-Interval clustering, Interval-Interval clustering and Matrix-Interval clustering.

is called the similar confidence of x similarly attributing to

set A. If x is similarly attributed to A1 , A2 ,..., As at the

Number-interval Clusetering Method

same

Suppose

x1 , x2 ,..., x n is n objects, whose actions are

characterized by some interval values. According to the

traditional clustering similarity formula [6], we can get

correlative similarity matrix:

1

[t , t ]

1

21 21

R(ri , j )

...

...

...

[t n1 , t n1 ] [t n 2 , t n 2 ] ... 1

Matrix R is a symmetry matrix, where

similarity between

rij [t ij , t ij ] is the

xi and x j , i,j=1,2,…n.

There are three steps about Number-Interval clustering

method [14]: (1) Netting: at the diagonal of R a serial number

is clearly labeled. If

user), the element

t ij 0 (the threshold is offered by

[t ij , t ij ] is replaced by “ ”; if tij 0 ,

[t ij , t ij ] is replaced by space; if 0 [t ij , t ij ]

the element is replaced by “#”. Call “ ” as node, while “#”

the element

as similar node. We firstly drag a longitude and a woof from

the node to diagonal, and use broken lines to draw longitude

and woof from similar node to diagonal as described in

figure 1. (2) relatively certain classification: For each node,

band the longitude and woof which go through it, and the

elements which are at the end of the node are classified as the

same set; finally, the rest is classified as the last set. (3)

similar fuzzy classification: For each similar node, band

them to the relatively certain class which is passed by the

longitude or woof starting from the similar node.

1

2

3

4

5

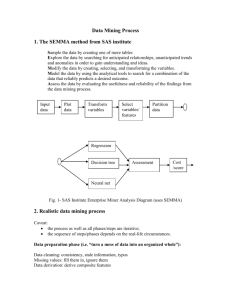

Figure 1 Netting

As can be seen from the figure 1, relatively certain

classification can clearly classify the objects, while similar

fuzzy classification cannot clearly classify the objects. For

example, objects set U={1,2,3,4,5} can be classified to two

sets as A={1,2,[5]},B={3,4,[5]}. However, which set does

“5” attribute to on earth? So, we introduce the concept of

similar confidence.

Definition

1.

(similar

confidence):

Suppose

A={ x1 , x2 ,...xn , [ x] }, [t i , t i ] is the similar coefficient of

t1 0 t 2 0

t n 0

,

,..., }

x and x i , and min{

t1 t1 t 2 t 2

tn tn

time,

and

the

confidences

are

respectively

1 , 2 ,..., s . Take j max{ 1 , 2 ,..., s } , when

j 0.5 , we believe that x should be attributed to set A j ;

when j 0.5 x should be classified to a set alone.

For example, in the above instance, the similar coefficients of the object set U are as follows:

$$R = \begin{pmatrix} 1 \\ [0.8, 0.9] & 1 \\ [0.5, 0.7] & [0.6, 0.72] & 1 \\ [0.6, 0.61] & [0.65, 0.70] & [0.78, 0.81] & 1 \\ [0.7, 0.81] & [0.71, 0.83] & [0.7, 0.85] & [0.75, 0.92] & 1 \end{pmatrix}$$

and $\lambda_0 = 0.75$.

Then the confidence of object 5 similarly attributing to A is:

$$\alpha_A = \min\left\{ \frac{0.81 - 0.75}{0.81 - 0.7},\ \frac{0.83 - 0.75}{0.83 - 0.71} \right\} = \min\{0.55, 0.67\} = 0.55$$

The confidence of object 5 similarly attributing to B is:

$$\alpha_B = \min\left\{ \frac{0.85 - 0.75}{0.85 - 0.7},\ \frac{0.92 - 0.75}{0.92 - 0.75} \right\} = \min\{0.67, 1\} = 0.67$$

Take $\alpha = \max\{\alpha_A, \alpha_B\} = \alpha_B = 0.67$.

∵ $\alpha > 0.5$

∴ object 5 should be attributed to class B.

That is to say, the final classification is: A={1,2}, B={3,4,5}.
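The netting rule and the similar-confidence computation in this example can be checked with a short sketch. The Python below is an illustration only, under stated assumptions: the function names `classify_element` and `similar_confidence` are hypothetical, the strict inequalities at the threshold are read off the worked example (where [0.75, 0.92] is treated as a similar node), and every similar-node interval is assumed to have nonzero width.

```python
def classify_element(lo, hi, threshold):
    """Netting rule for one interval [lo, hi] of the similarity matrix."""
    if lo > threshold:
        return "node"      # whole interval lies above the threshold
    if hi < threshold:
        return "blank"     # whole interval lies below the threshold
    return "similar"       # threshold falls inside the interval ("#")


def similar_confidence(intervals, threshold):
    """Smallest value of (hi - threshold) / (hi - lo) over the given intervals."""
    return min((hi - threshold) / (hi - lo) for lo, hi in intervals)


LAMBDA_0 = 0.75

# Similar coefficients between object 5 and the members of each class,
# copied from the last row of the example matrix.
candidates = {
    "A": [(0.70, 0.81), (0.71, 0.83)],  # r_51, r_52
    "B": [(0.70, 0.85), (0.75, 0.92)],  # r_53, r_54
}

confidences = {
    name: similar_confidence(intervals, LAMBDA_0)
    for name, intervals in candidates.items()
}
best = max(confidences, key=confidences.get)
# None means the object would form a class of its own.
assigned = best if confidences[best] > 0.5 else None

print(confidences, assigned)
```

Under these assumptions the sketch assigns object 5 to class B, in agreement with the example.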

Interval-interval Clustering Method

The Interval-Interval clustering method is the extension and generalization of Number-Interval clustering: it expresses the threshold of Number-Interval clustering as an interval.

Interval-Interval clustering also needs the three procedures of netting, relatively certain classification and similar fuzzy classification. To decide which relatively certain set a similar node is finally attributed to, we extend the concept of similar confidence as follows.

Suppose the threshold $\lambda$ is the interval value $[\lambda_0^-, \lambda_0^+]$, A = $\{x_1, x_2, \ldots, x_n, [x]\}$, and $[t_i^-, t_i^+]$ is the similar coefficient of x and $x_i$. According to the following information formula, work out $[\alpha_i^-, \alpha_i^+]$:

$$\alpha_i^- = \begin{cases} 1 + \dfrac{t_i^+ - \lambda_0^+}{t_i^+ - t_i^-} \log_2 \dfrac{t_i^+ - \lambda_0^+}{t_i^+ - t_i^-}, & \text{if } t_i^- \le \lambda_0^+ \le t_i^+ \\ 1, & \text{if } t_i^- > \lambda_0^+ \end{cases}$$

$$\alpha_i^+ = \begin{cases} 1 + \dfrac{t_i^+ - \lambda_0^-}{t_i^+ - t_i^-} \log_2 \dfrac{t_i^+ - \lambda_0^-}{t_i^+ - t_i^-}, & \text{if } t_i^- \le \lambda_0^- \le t_i^+ \\ 1, & \text{if } t_i^- > \lambda_0^- \end{cases}$$

Then let

$$[\alpha^-, \alpha^+] = \min\{[\alpha_1^-, \alpha_1^+], [\alpha_2^-, \alpha_2^+], \ldots, [\alpha_n^-, \alpha_n^+]\}$$

and call it the similar confidence of x similarly attributing to set A. If $\alpha_1, \alpha_2, \ldots, \alpha_s$ are the confidences with which x is attributed to $A_1, A_2, \ldots, A_s$ respectively, take $\alpha_j = \max\{\alpha_1, \alpha_2, \ldots, \alpha_s\}$. If the center of $\alpha_j$ is greater than 0.5, x should be attributed to $A_j$; otherwise x should be classified alone.

Simply put, the Interval-Interval clustering method works out the level set of a similar matrix whose elements are all interval values, where $\lambda$ is also an interval value.

For example, in the above experiment, let $\lambda = [0.7, 0.8]$. Then the confidence of object 5 similarly attributing to A is:

$$\alpha_A = \min\left\{ \left[ 1 + \frac{0.81 - 0.8}{0.81 - 0.7} \log_2 \frac{0.81 - 0.8}{0.81 - 0.7},\ 1 + \frac{0.81 - 0.7}{0.81 - 0.7} \log_2 \frac{0.81 - 0.7}{0.81 - 0.7} \right],\ \left[ 1 + \frac{0.83 - 0.8}{0.83 - 0.71} \log_2 \frac{0.83 - 0.8}{0.83 - 0.71},\ 1 \right] \right\}$$

$$= \min\{[0.69, 1], [0.50, 1]\} = [0.69, 1]$$

The confidence of object 5 similarly attributing to B is:

$$\alpha_B = \min\left\{ \left[ 1 + \frac{0.85 - 0.8}{0.85 - 0.7} \log_2 \frac{0.85 - 0.8}{0.85 - 0.7},\ 1 + \frac{0.85 - 0.7}{0.85 - 0.7} \log_2 \frac{0.85 - 0.7}{0.85 - 0.7} \right],\ \left[ 1 + \frac{0.92 - 0.8}{0.92 - 0.75} \log_2 \frac{0.92 - 0.8}{0.92 - 0.75},\ 1 \right] \right\}$$

$$= \min\{[0.47, 1], [0.65, 1]\} = [0.65, 1]$$

Take $\alpha = \max\{\alpha_A, \alpha_B\} = \alpha_B = [0.65, 1]$.

∵ the center of $\alpha$ is greater than 0.5

∴ object 5 should be attributed to class B.

The result is the same as the previous result of number-interval clustering.

Interval-matrix Clustering Method

Each interval $[t_{ij}^-, t_{ij}^+]$ can be written as $t_{ij}^- + (t_{ij}^+ - t_{ij}^-)u$, $0 \le u \le 1$. Given a $u_0$, the interval can be expressed by $r_{ij} = t_{ij}^- + (t_{ij}^+ - t_{ij}^-)u_0$. So we transform the similar interval matrix R into two real matrices:

$$M = \begin{pmatrix} 1 \\ r_{2,1} & 1 \\ \vdots & \vdots & \ddots \\ r_{n,1} & r_{n,2} & \cdots & 1 \end{pmatrix} \quad \text{and} \quad U = \begin{pmatrix} 1 \\ u_{2,1} & 1 \\ \vdots & \vdots & \ddots \\ u_{n,1} & u_{n,2} & \cdots & 1 \end{pmatrix}$$

where the real matrix U is made up of the different $u_{ij}$ that correspond to the different interval values.

Next, after applying the composition calculation to M and U respectively, we obtain their fuzzy equivalence relations. Taking different values of $\lambda$ gives different classification results, where a classification result is the intersection of the equivalence relations of M and U. Finally, choose the reasonable classification according to the actual situation. Simply put, the Matrix-Interval clustering method transforms an interval-value matrix into two real matrices and then clusters them by the fuzzy equivalence relation clustering method. The result of matrix-interval clustering changes with the values of $u_{ij}$ (all of which are collected in matrix U). Because the value of $u_{ij}$ depends heavily on domain knowledge, domain experts need to give specific guidance before satisfactory clustering results can be obtained. On the other hand, because this approach changes interval values into real values, efficiency increases remarkably. The corresponding example is omitted here because of its complexity.

We discuss three interval-value methods because many interval values exist in reality, and these interval values cannot be processed correctly by traditional data mining methods. Next we discuss data mining in an interval-value database.

DATABASE INFORMATION MINING MODEL

There are two types of database information mining: one is data mining in a common database; the other is data mining in an interval-value database.

Data Mining In a Common Database

In a common database, the records are made up of values that range over certain fields. The values of each field vary within a certain range and share the same type, and the popular processing method is to divide them into several intervals according to actual needs. However, this produces hard dividing boundaries, so we bring forward the interval clustering method. With it, the classification is more reasonable and the boundaries are softened, because the thresholds can be changed according to actual conditions. More importantly, the operation can be automated by making all the thresholds take the same value. That is to say, we can break away from the specific problem domain and have all the data of each field clustered automatically under the control of the same threshold. The algorithm is as follows:

Algorithm 1. (Automatic Data Clustering Algorithm)

Input: DB: database; Attr_List: attribute set; Thresh_Set: the threshold used to cluster all the attributes;

Output: the clustering results for all attributes;

Step 1: for each ai ∈ Attr_List, Uni_ai = Transfer_ComparableType(ai); // Transform all the attributes into comparable types, and save to Uni_ai.

Step 2: while (Thresh_Value(ai) < Thresh_Set(ai, Uni_ai)) { // work out the similarities of all the values of each attribute

Step 3: for any k, j ∈ DB and k, j ∈ ai {

Step 4: Compute_Similiarity(k, j); } // Calculate similarity

Step 5: Gen_SimilarMatrix(ai, Mi); // Generate the similarity matrix of the values of the attribute

Step 6: C ← Mi; } // C is the array of similarity matrices

Step 7: for each ci ∈ C, C = C + GetValue(ci);

Step 8: Gen_IntervalCluster(Attr_List, C); // Clustering

Step 9: S = statistic(C); // count the support of item sets

Step 10: Arrange_Matrix(DB, C); // Merge and arrange the final mining results

After the final clustering results are obtained by the above steps, the data can be mined. The results of this mining are quantitative association rules [11], which describe the quantitative relations among items.

Data Mining In an Interval-value Database

This is another kind of information mining. It differs from common information mining in that it introduces the concept of an interval-value database.

Definition 2. (interval-value relation database): Suppose $D_1, D_2, \ldots, D_n$ are n real fields, and $F(D_1), F(D_2), \ldots, F(D_n)$ are sets constructed from interval values in $D_1, D_2, \ldots, D_n$ respectively [3]. Regard them as the value fields of attributes on which relations will be defined. Form the Cartesian product $F(D_1) \times F(D_2) \times \cdots \times F(D_n)$; a subset of this Cartesian product is called an interval relation over the record attributes, and the database is then called an interval-value relation database. A record can be expressed as $t = (x_1, x_2, \ldots, x_n)$, where $x_i \in F(D_i)$ (i=1,2,…,n) is an interval of $D_i$.

Definition 3. (closed interval distance): Suppose [a,b] and [c,d] are any two closed intervals. The distance between the two intervals is defined as

$$d([a,b],[c,d]) = \left( (a - c)^2 + (b - d)^2 \right)^{1/2}.$$

It is easy to verify that this distance satisfies the three conditions in the definition of a distance.

Interval-value data mining classifies $F(D_i)$ by the interval-value clustering method, then merges the database to remove redundant attributes, and transforms it into a common quantitative database for mining. The algorithm is as follows:

Step 1: Transform $F(D_i)$, related to attribute $D_i$ in the database, into a comparable type by generalizing and abstracting [11];

Step 2: In the processed database, work out the interval distance between every two values for each $F(D_i)$; the distance is regarded as their similarity measure, and a similarity matrix is generated in this way;

Step 3: Cluster according to one of the three interval-value methods;

Step 4: Decide whether the value fits the threshold; after relabeling the attribute, obtain a quantitative attribute;

Step 5: Mine the quantitative attributes;

Step 6: Repeat step 3 and step 4;

Step 7: Arrange and merge the results of data mining.

Step 8: Obtain the quantitative association rules.
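Step 2 of this procedure relies on the closed interval distance defined above. The following Python sketch shows one way to compute that distance and the pairwise matrix it induces. The function names and the sample age intervals are hypothetical, chosen only for illustration, and how the distances are turned into similarity coefficients is left open because the text does not specify it.

```python
import math


def interval_distance(p, q):
    """Closed interval distance d([a,b],[c,d]) = sqrt((a-c)^2 + (b-d)^2)."""
    (a, b), (c, d) = p, q
    return math.hypot(a - c, b - d)


def distance_matrix(intervals):
    """Pairwise interval distances for the values of one attribute."""
    return [[interval_distance(p, q) for q in intervals] for p in intervals]


# Hypothetical interval values of a single attribute (for example, age ranges).
ages = [(20, 25), (23, 28), (40, 65)]
D = distance_matrix(ages)

for row in D:
    print([round(value, 2) for value in row])
```

The matrix is symmetric with zeros on the diagonal, which reflects two of the three distance conditions mentioned in the definition.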

Example Research

Consider the following information:

Table 1 Career, income questionnaire

| Age | Income | Career | Number of villas owned |
| --- | --- | --- | --- |
| 22 | 2000 | Salesman for books | 0 |
| 39 | 10000 | Salesman for IT | 2 |
| 50 | 3000 | College teacher | 1 |
| 28 | 5000 | Career training teacher | 0 |
| 46 | 49000 | CEO for IT | 5 |
| 36 | 15000 | CEO for manufacturing | 1 |

First, cluster the values of each attribute:

The clustering result for ages: I11={22, 28}, I12={36, 39}, I13={46, 50};

The clustering result for incomes: I21={2000, 3000, 5000}, I22={10000, 15000}, I23={49000};

The clustering result for careers: I31={Salesman for books, Salesman for IT}, I32={College teacher, Career training teacher}, I33={CEO for IT, CEO for manufacturing};

The clustering result for number of villas owned: I41={0, 1}, I42={2, 5}.

Then, calculate the supports:

Table 2 Statistical table for item sets

| Item | Support |
| --- | --- |
| I11 | 2 |
| I12 | 2 |
| I13 | 2 |
| I21 | 3 |
| I22 | 2 |
| I23 | 1 |
| I31 | 2 |
| I32 | 2 |
| I33 | 2 |
| I41 | 4 |
| I42 | 2 |

Third, normalize the database.

Table 3 Transformed transaction database

| TID | Items |
| --- | --- |
| 1 | I11, I21, I31, I41 |
| 2 | I12, I22, I31, I42 |
| 3 | I13, I21, I32, I41 |
| 4 | I11, I21, I32, I41 |
| 5 | I13, I23, I33, I42 |
| 6 | I12, I22, I33, I41 |
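The transformation from the questionnaire records to the transaction database, and the support counts of Table 2, can be reproduced with a short sketch. The Python below takes the cluster memberships as given in the text (it does not perform the clustering itself); the dictionary layout and the helper name `to_items` are assumptions made for illustration.

```python
from collections import Counter

# Cluster labels and their members, copied from the clustering results in the text.
clusters = {
    "age": {"I11": {22, 28}, "I12": {36, 39}, "I13": {46, 50}},
    "income": {"I21": {2000, 3000, 5000}, "I22": {10000, 15000}, "I23": {49000}},
    "career": {
        "I31": {"Salesman for books", "Salesman for IT"},
        "I32": {"College teacher", "Career training teacher"},
        "I33": {"CEO for IT", "CEO for manufacturing"},
    },
    "villas": {"I41": {0, 1}, "I42": {2, 5}},
}

# The six questionnaire records of Table 1.
records = [
    {"age": 22, "income": 2000, "career": "Salesman for books", "villas": 0},
    {"age": 39, "income": 10000, "career": "Salesman for IT", "villas": 2},
    {"age": 50, "income": 3000, "career": "College teacher", "villas": 1},
    {"age": 28, "income": 5000, "career": "Career training teacher", "villas": 0},
    {"age": 46, "income": 49000, "career": "CEO for IT", "villas": 5},
    {"age": 36, "income": 15000, "career": "CEO for manufacturing", "villas": 1},
]


def to_items(record):
    """Replace each attribute value by the label of the cluster that contains it."""
    items = []
    for attribute, value in record.items():
        for label, members in clusters[attribute].items():
            if value in members:
                items.append(label)
    return items


transactions = [to_items(record) for record in records]
support = Counter(item for transaction in transactions for item in transaction)

for tid, transaction in enumerate(transactions, start=1):
    print(tid, transaction)
print(dict(support))
```

Run on the six records, this yields the item lists of Table 3 and the counts of Table 2.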

Finally, mining the transformed database yields association rules such as "42 percent of male people who earn 2,000 to 5,000 each month own at most one villa", that is to say, I21 → I41 (Support=0.42, Confidence=1).
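As a check on how such rule measures are obtained, the sketch below counts support and confidence for I21 → I41 directly over the six transactions of Table 3. It assumes the usual definitions (support as the fraction of transactions containing both sides, confidence as that count divided by the count of transactions containing the antecedent); the helper name `rule_metrics` is hypothetical. Under this plain count the confidence is 1, matching the text, while the support comes out as 3/6 = 0.5 rather than the quoted 0.42, so the figure in the text may rest on a convention that is not described here.

```python
# The six transactions of Table 3, written as sets of item labels.
transactions = [
    {"I11", "I21", "I31", "I41"},
    {"I12", "I22", "I31", "I42"},
    {"I13", "I21", "I32", "I41"},
    {"I11", "I21", "I32", "I41"},
    {"I13", "I23", "I33", "I42"},
    {"I12", "I22", "I33", "I41"},
]


def rule_metrics(antecedent, consequent, transactions):
    """Return (support, confidence) of the rule antecedent -> consequent."""
    total = len(transactions)
    with_antecedent = sum(1 for t in transactions if antecedent <= t)
    with_both = sum(1 for t in transactions if antecedent <= t and consequent <= t)
    support = with_both / total
    confidence = with_both / with_antecedent if with_antecedent else 0.0
    return support, confidence


support, confidence = rule_metrics({"I21"}, {"I41"}, transactions)
print(support, confidence)
```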

ALGORITHM EVALUATION

To verify the effect of the above algorithm, we carried out a large amount of testing. Tests on both simulated and real data indicated that the algorithm could markedly improve the effect of data mining, and it found many valuable association rules. The following are the results of processing the real databases.

Experiment One

This is the result of mining a data set named Flags, which describes the characteristics of the national flags of all the countries in the world. The attributes include area of country, population, national flag, color, shape, size, special patterns, layout and so on. The mined results are shown in Table 4:

Table 4 The results of mining for a database named Flags

| Scale of data set | Number of effective attributes | Average number of values per attribute | Support threshold | Confidence threshold | Number of patterns |
| --- | --- | --- | --- | --- | --- |
| 194 | 17 | 2 | 0.85 | 0.8 | 10 |
| 194 | 14 | 6 | 0.7 | 0.8 | 138 |
| 194 | 13 | 8 | 0.77 | 0.8 | 72 |

Experiment Two

This is the result of mining a data set named Zoo, which describes the characteristics of 101 kinds of animals. The attributes include hair, eggs, milk, tail, legs, toothed and so on. The mined results are shown in Table 5:

Table 5 The results of mining for a database named Zoo

| Scale of data set | Number of effective attributes | Average number of values per attribute | Support threshold | Confidence threshold | Number of patterns |
| --- | --- | --- | --- | --- | --- |
| 101 | 18 | 3 | 0.8 | 0.7 | 2 |
| 101 | 14 | 2 | 0.72 | 0.8 | 4 |
| 101 | 10 | 2 | 0.7 | 0.74 | 4 |

Remarks: In the above experiments, the data sets come from ftp://www.pami.sjtu.edu.cn and are real databases. The scale of the data set refers to the number of records in the database; the scale of attributes refers to the number of attributes in the attribute set; the number of effective attributes refers to the number of attributes remaining after reduction; the average number of values per attribute refers to the number of different intervals after division; the number of patterns refers to the number of patterns obtained by mining.

Additionally, to make the mining more effective, we labeled all the attributes with numbers during the experiments. For example, a rule "1,11→40" represents "if the country is in America and the population is within 10,000,000, there is not any vertical stripe in its flag". Detailed explanations of the experiments and other experimental results can be obtained by visiting ftp://ftp.glnet.edu.cn.

CONCLUSIONS

This article has discussed the application of interval-value clustering in data mining. It first put forward three interval-value clustering methods and explained them with examples; it then offered interval-value clustering mining methods for a common database and for an interval database respectively, followed by a worked example. The experimental results in Section 4 indicate that the application of interval-value clustering in data mining has broad prospects for development.

This kind of data mining method based on interval values is mainly suited to mining numeric data and comparable data, and it is especially significant for interval-value databases. For other types of data, it has some value as a reference. Research on the relation between interval-value clustering and data mining is still at an early stage, but its development prospects are good, and a series of new technologies and software can be expected. Most big commercial companies are now competing in the supermarket sector, and the 21st century may be a new era in which the interval-value clustering method is used in data mining.

REFERENCES

1. Agrawal, R., Imielinski, T., and Swami, A. Mining Association Rules Between Sets of Items in Large Databases. ACM SIGMOD Int. Conf. on Management of Data, 1993: 207-216.

2. Agrawal, R., and Srikant, R. Fast algorithm for mining association rules in large database. Research Report RJ9839, IBM Almaden Research Center, San Jose, CA, June 1994.

3. He, X.G. Fuzzy Database System, Tsinghua University Press, Beijing, 1994.

4. Hu, C.Y., Xu, S.Y., and Yang, X.G. A Introduction to Interval Value Algorithm, Systems Engineering - Theory & Practice, 2003 (4): 59-62.

5. Lent, B., Swami, A., and Widom, J. Clustering association rules. In Proc. 1997 Int. Conf. Data Engineering (ICDE'97), Birmingham, England, April 1997.

6. Luo, C.Z. A Guide to Fuzzy Set, Beijing Normal University Press, Beijing, 1989.

7. Moore, R., and Yang, C. Interval Analysis I. Technical Document, Lockheed Missiles and Space Division, Number LMSD-285875, 1959.

8. Srikant, R., and Agrawal, R. Mining Quantitative Association Rules in Large Tables. In: Proceedings of ACM SIGMOD, 1996: 1-12.

9. Wu, X.D., Zhang, C.Q., and Zhang, S.C. Mining Both Positive and Negative Association Rules. In Proceedings of 19th International Conference on Machine Learning, Sydney, Australia, July 2002: 658-665.

10. Yin, Y.F., Zhang, S.C., and Xu, Z.Y. A Useful Model for Software Data Mining, Computer Application 3, 2004: 10-13.

11. Yin, Y.F., Zhong, Z., and Liu, X.S. Data Mining Based Stability Theory. Changjiang River Science Research Institute Journal 2, 2004: 22-24.

12. Zhang, C.Q., and Zhang, S.C. Association Rule Mining: Models and Algorithms. Springer-Verlag, Berlin Heidelberg, 2002.

13. Zhang, S.C., and Zhang, C.Q. Discovering Causality in Large Databases, Applied Artificial Intelligence, 2002.

14. Zhang, X.F. The Cluster Analysis for Interval-valued Fuzzy Sets, Journal of Liaocheng University, 2001, 14(1): 5-7.