Management Information Systems

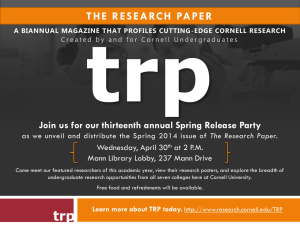
