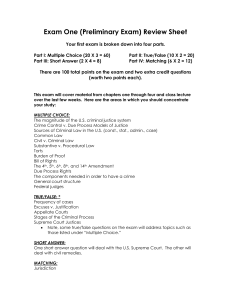

Introduction to the Criminal Justice Evaluation Framework
