I.1 The digital image - Memoria Digitization

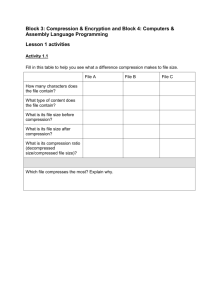


Digital Technology in Service of Access to Rare Library Materials

Adolf Knoll, National Library of the Czech Republic

One of the most acute problems facing Czech libraries, archives, and museums has been the safeguarding and preservation of rare and endangered library materials, so as to ensure long-term access to them for future generations. While at the beginning of the 1990s curators focused predominantly on old manuscripts and important archival materials, the new millennium is opening with great alarm about the condition of acid-paper materials, especially newspapers and journals. Once storage conditions had been improved and selected paper materials had undergone careful mass treatment, long-term access became the primary concern. Because experience with direct, open use of rare publications and manuscripts in Czech libraries had been very negative, we looked for technologies that would reduce users' handling of rare originals to a minimum. For example, more than 200 years of very open access to the National Library's manuscripts and old printed books has in many cases left irreparable traces on frequently used volumes: cut-out folios and even illuminations, torn-out pages, worn bindings and page edges, broken spines of books and codices, blurred colours, etc. Add to this the natural ageing of the carriers, ink corrosion, and brittle paper, and the situation was far from optimistic. In earlier years the library had tried to microfilm certain titles, but the production volume was very low and no rigorous preservation microfilming practice was applied. The 1990s brought a real boom in digitization devices, together with the idea that digital technology could be applied in the library world to help safeguard the documentary heritage.
In parallel, however, preservation microfilming strengthened its position, and there was much discussion about which of the two technologies would be more suitable for preservation. Fortunately, life is richer than any artificial scheme, and today the emerging digital world coexists with the analogue realm of classical documents. Digitization is now regarded more as an access tool, even though its preservation role cannot be disregarded. It brings an unprecedented enhancement of access opportunities that makes it unnecessary to expose originals to direct use, and, with the fast development of technology, it can also play a role that may be called digital preservation. However, digital files are very fragile, especially because hardware and software platforms and formats change rapidly; therefore, the digital copy has not yet been accepted as a true replacement preservation tool. Digital documents cannot be deciphered without technical equipment and many other high-technology products; unlike written or printed materials, or even their microfilm copies, they are not humanly readable. All these and many other considerations were on our minds when, in 1992, we first considered applying digitization of originals to our work with old manuscripts and books. We liked a certain touch of eternity in the unchangeable substance of the digital image, and at the same time we were very concerned about its fragility. Our concern related less to the digital carriers themselves, because they could be replaced quickly and the recorded information refreshed. Of course, this view applied mostly to what we intended to produce and store digitally ourselves.
Today we must admit that we have certain worries about industrially produced documents on various carriers, namely compact discs, since some six years ago we were charged with archiving audio CDs.

I Data

When we started routine digitization of old manuscripts in 1996, we were aware that we should not have to return to the originals again: the products of our work had to last. At first much attention was paid to the digital image as the main container of the information the users were interested in. This concerned the parameters of the image, but the images also had to be bound into a virtual book identifiable as a unique document. To make the right choice, where was the stable point from which we could take our decisions? Where should we look for it? And who should look for it and decide: librarians and archivists, or technicians? How could we bridge the gap that existed in those years between technological thinking and the classical library profession, especially in manuscript and early printed book circles? There was almost no common denominator: the two groups were unable to talk to each other. In the end, however, the common point was found, and its role today is even more decisive than before: it is the user. Digital access is being prepared for the user, and it is the user who will or will not be satisfied with the substitute. The user's point of view is projected into the technical parameters of the digital image and into the character and structure of the metadata framework.

I.1 The digital image

The digital image has two basic parameters: spatial resolution and brightness resolution, the latter most frequently called colour depth or number of colours. The digital image we work with in our digitization programmes is a bitmap, which can be imagined as a grid filled with coloured dots.
Together these dots give the impression of a continuous colour space. Each dot has its place in the grid, i.e. it is defined by its vertical and horizontal positions.

I.1.1 Resolution

The spatial resolution, simply called resolution, tells how many dots, or picture elements, are used to express a unit of length. The picture element is called a pixel, and resolution is usually given in pixels or dots per inch (dpi). Scanners and digital cameras frequently state their resolution as a combination of two numbers, e.g. 2000 x 3000 pixels, or as the total number of available pixels, e.g. 6 million = 6 megapixels for the same device. The problem is that any scanned original will be expressed with at most this number of pixels. If the scanned original is an A5 book page, it can be expressed by 6 million dots. In practice, this means that the longer side of such a page, 210 mm, can be expressed at most by the longer side of the sensor, i.e. we will have 3000 dots per 210 mm. Converted, this gives a resolution of about 360 dpi. However, if the original is a large newspaper with, for example, 800 mm on its longer side, we can have at most 3000 dots per 800 mm, i.e. about 95 dpi. And if the original is a large map with 1600 mm on its longer side, the resolution will be only about 48 dpi. Of course, all this holds only if the shorter side fits into the scanning window at the same time; otherwise the shorter side limits the resolution. After some testing with various originals and the available resolution, we will quickly discover that some types of originals can be digitized successfully, while the larger ones, if digitized, will yield images of very poor, unusable quality. The available pixels cannot capture sufficiently fine details, and the result will not satisfy our user.
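The arithmetic behind these figures is simple enough to write down. The Python sketch below (function names are ours, purely illustrative) converts a pixel count spread over a side length in millimetres into dots per inch. It also covers the caveat about the shorter side: when the whole page must fit into the scanning window, the side that receives fewer pixels per inch limits the usable resolution (an A5 page is 148 x 210 mm). Finally, it inverts the calculation to give the largest original a sensor can cover at a chosen minimum resolution, which is the question behind deciding in advance which originals to digitize; the 300 dpi minimum in the example is a hypothetical value, not a figure from the text.

```python
MM_PER_INCH = 25.4


def dpi_along_side(pixels: int, length_mm: float) -> float:
    """Resolution obtained when `pixels` sensor elements span `length_mm` of the original."""
    return pixels / (length_mm / MM_PER_INCH)


def effective_dpi(sensor_px: tuple[int, int], page_mm: tuple[float, float]) -> float:
    """Uniform resolution for a page that must fit entirely into the scanning window.

    Both tuples are (long side, short side); the side with fewer pixels
    per inch limits the result.
    """
    (long_px, short_px), (long_mm, short_mm) = sensor_px, page_mm
    return min(dpi_along_side(long_px, long_mm), dpi_along_side(short_px, short_mm))


def max_original_mm(sensor_px: tuple[int, int], min_dpi: float) -> tuple[float, float]:
    """Largest original (long side, short side) in mm that still reaches `min_dpi`."""
    long_px, short_px = sensor_px
    return (long_px / min_dpi * MM_PER_INCH, short_px / min_dpi * MM_PER_INCH)


if __name__ == "__main__":
    # Longer-side figures from the text for a 2000 x 3000 pixel camera.
    for name, length in [("A5 page", 210), ("newspaper", 800), ("large map", 1600)]:
        print(f"{name}: {dpi_along_side(3000, length):.0f} dpi")
    # A5 is 210 x 148 mm, so here the 2000-pixel short side is the real limit.
    print(f"A5, both sides: {effective_dpi((3000, 2000), (210, 148)):.0f} dpi")
    # Hypothetical minimum of 300 dpi: what the 6000 x 8000 device can cover.
    print("8000 x 6000 at 300 dpi:", [round(v) for v in max_original_mm((8000, 6000), 300)], "mm")
```

Run as a script, it prints 363, 95 and 48 dpi for the three originals, 343 dpi for the A5 page once the shorter side is taken into account, and about 677 x 508 mm as the largest original the 6000 x 8000 device could cover at the hypothetical 300 dpi minimum.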
To solve this problem, it is necessary to know from the beginning which originals will be digitized and which will not. Deciding to exclude for now some documents that form a very small percentage of the materials to be processed can save a substantial amount of money that would otherwise have to be invested in equipment. We should also be aware that technology develops very fast and that the future will certainly bring better devices at more affordable prices, which will make it possible to digitize the set-aside originals at a substantially higher level than we could now. We started with a 2000 x 3000 digital camera, and two or three years later we bought another device with a resolution of 6000 x 8000 dots that also enables us to process other types of documents. For the time being, such a resolution covers most of the digitization needs of our libraries and other cultural institutions. Recently, the company AIP Beroun, with which we have been digitizing, installed a third device with a resolution comparable to the second one. Nobody talks today about indirect digitization via high-quality colour slides, which was relatively common in the beginning. It is expensive, but in some cases this technology can still meet certain requirements today, for example producing high-quality images of very large rare posters.

I.1.2 Colour depth

Each pixel has not only its two-dimensional surface; it can also be regarded as a three-dimensional element whose third dimension carries information about colour. If there is no third dimension, the pixel is represented by one bit of information and can take only two values, one or zero; that is, it is either black or white. Such an image is called a black-and-white, 1-bit, bitonal, or bi-level image. A typical example is the classical black-and-white fax or Xerox image.
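The relationship between bits per pixel, number of colours, and uncompressed file size, which the text works through for the 2000 x 3000 camera, can be sketched as follows. This is a minimal illustration assuming uncompressed storage with each pixel row rounded up to whole bytes (a common convention; some formats pad rows further) and decimal units (1 KB = 1000 bytes), as in the text.

```python
def colours(bits_per_pixel: int) -> int:
    """Number of distinct values a pixel of the given bit depth can take."""
    return 2 ** bits_per_pixel


def raw_size_bytes(width_px: int, height_px: int, bits_per_pixel: int) -> int:
    """Uncompressed bitmap size, with each row rounded up to a whole number of bytes."""
    row_bytes = (width_px * bits_per_pixel + 7) // 8
    return row_bytes * height_px


if __name__ == "__main__":
    for bits in (1, 4, 8, 24):
        size = raw_size_bytes(3000, 2000, bits)
        print(f"{bits:>2} bits: {colours(bits):>10,} colours, {size / 1e6:.2f} MB")
```

For the 1-bit case this reproduces the 750 KB quoted in the text, and 18 MB for true colour; 256 shades of grey (8 bits) sit in between at 6 MB.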
The 1-bit image cannot express all the necessary information; therefore, the pixel should be able to express colour. The number of colours a pixel can express depends on how many bits we assign to it. With only 4 bits we can handle 16 colours, while with 8 bits, i.e. 1 byte, we have 256 colours. The so-called true colour image has 24 bits = 3 bytes per pixel, which gives more than 16.7 million colours. A pixel can be assigned even more bits, but these usually do not express colour; they express colour attributes such as transparency. In practice, the number of colours substantially increases the size of the file containing the image. If, for example, we use all the available 2000 x 3000 pixels of our digital camera for an image, the image will have 6 million pixels; in black-and-white, at 1 bit per pixel, that is 6 million bits, or 750 KB. The same image in true colour will have 6,000,000 pixels x 24 bits = 144,000,000 bits = 18 MB. This must be taken very seriously, especially for large originals scanned at high resolution. The digitizing team may decide to reduce the number of colours for some originals, especially monotone textual documents such as newspapers or even textual manuscripts. In that case the preference goes to 256 shades of grey rather than 256 colours. The reason is simple: 256 shades of grey keep the images homogeneous, as opposed to an individual palette of 256 colours for each image, and they allow efficient compression, as explained below. In some cases a 1-bit image can also serve the users' needs very well, especially for simpler documents such as textual periodicals. The 1-bit image has its problems, though, even if it is very economical: the difficulty lies in setting the threshold that decides which dot should become black and which should remain white.
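The threshold problem can be made concrete. The article does not say which technique our tools use, so the sketch below swaps in Otsu's method, a widely used automatic global threshold that picks the grey level best separating the histogram into two classes (ink and paper). It assumes an 8-bit greyscale image given as a flat list of values; damaged or unevenly lit originals often need local (adaptive) thresholds instead, which this sketch does not attempt.

```python
def binarize(grey: list[int], threshold: int) -> list[int]:
    """8-bit grey to 1-bit: 1 (black) for values below the threshold, 0 (white) otherwise."""
    return [1 if g < threshold else 0 for g in grey]


def otsu_threshold(grey: list[int]) -> int:
    """Return t maximizing the between-class variance of {g < t} and {g >= t}."""
    hist = [0] * 256
    for g in grey:
        hist[g] += 1
    total = len(grey)
    sum_all = sum(level * count for level, count in enumerate(hist))
    best_t, best_score = 0, -1.0
    n_dark, sum_dark = 0, 0
    for t in range(1, 256):
        # Move grey level t - 1 into the dark class.
        n_dark += hist[t - 1]
        sum_dark += (t - 1) * hist[t - 1]
        n_light = total - n_dark
        if n_dark == 0 or n_light == 0:
            continue
        mean_dark = sum_dark / n_dark
        mean_light = (sum_all - sum_dark) / n_light
        score = n_dark * n_light * (mean_dark - mean_light) ** 2
        if score > best_score:
            best_t, best_score = t, score
    return best_t


if __name__ == "__main__":
    # Hypothetical page fragment: dark ink around 30, paper around 200, some noise.
    sample = [28, 35, 31, 190, 205, 198, 210, 33, 201, 195]
    t = otsu_threshold(sample)
    print(t, binarize(sample, t))
```

A single global threshold works well when ink and paper form two clear peaks, as here; stains, foxing, or show-through blur those peaks, which is one reason the text now prefers keeping 256 shades of grey for damaged originals.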
There are many techniques for converting richer images into black-and-white images, and there are also tools that make it easier to define acceptable thresholds for automated processing, which is important, for example, when scanning microfilm. Growing digital storage capacity and the poor quality of many damaged and partly deteriorated originals make us prefer 256 shades of grey to the 1-bit image today; the role of the 1-bit image is shifting more towards post-processing of the image at delivery time.

I.2 The production processing of the digital image

Many digital images produced directly by scanning or digital photography should be processed further to better meet the needs of users. First of all, unnecessary parts of the image should be cropped, and then sets of practically usable images should be derived from the source image. The images should be stored in standardized computer files according to a chosen format. But which format should be selected? The choice should be based on an analysis of the relevant parameters weighed against our needs and possibilities. Furthermore, the format is the unit that viewing tools must handle to provide access. In addition, we should rely on formats that will last, thus reducing the additional costs of converting to new ones. A graphic format is primarily a framework for colour depth and possible compression. Each format supports a certain range of colour depths, and it either supports compression or it does not. If it does, it supports specific compression schemes, whose properties we should also know. For example, the well-known GIF format allows only 256 colours and compresses the image with the LZW algorithm. On the other hand, the equally well-known JPEG format supports the true colour image, or more precisely the RGB image.
An RGB image cannot be reduced to 256 colours and then stored in JPEG to save storage space, because JPEG has no palette mode: apart from greyscale, it stores only full-colour images. The compression scheme applied in JPEG is DCT, which stands for discrete cosine transform. By contrast with these two examples, the TGA true colour format is commonly used without compression (it offers at most simple run-length encoding).

I.2.1 Compression

Compression can be lossless or lossy. With lossless compression every bit of the image returns to its original position after decompression; with lossy compression it does not. Compression schemes have specialized into two domains: the 1-bit image and the colour image. Development in these two domains proceeds largely separately, because the techniques applied differ substantially. As everywhere in the computer world, there are ISO standards and de facto standards. Some ISO standards are used, for example DCT in JPEG, but others are not, for example JBIG for compressing the 1-bit image, even though it has existed for some 7 years. Nor is it true that better schemes are used and worse ones are not. Practice follows another line, dominated by availability and ease of use. The first condition for a compression scheme to be used is its implementation in a widely accepted and used format. If that does not happen, the scheme is not used, because it cannot be handled: there are (almost) no tools for production and none for access. Even though JBIG is superior to the best standardized 1-bit compressor in practical use, CCITT Fax Group 4 in TIFF, it is not used because it has not been supported in a widely used format. It will be interesting to see what future JBIG2 will have; it is excellent and is now becoming another ISO standard. On the other hand, not everything that has been implemented in a known format, especially TIFF, is practically usable or makes sense to use.
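The lossless property, that every bit returns to its position, can be demonstrated with the two lossless compressors that matter most in this discussion: LZW, which GIF uses, and Deflate, on which PNG's compressor is built. The LZW part below is the textbook dictionary algorithm emitting integer codes; it leaves out the variable code widths, clear codes, and bit packing that real GIF and TIFF encoders add. Deflate is taken from Python's standard `zlib` module. A lossy scheme could not pass the exact round-trip checks used here.

```python
import zlib


def lzw_encode(data: bytes) -> list[int]:
    """Textbook LZW: grow a dictionary of byte strings, emit one code per longest match."""
    table = {bytes([i]): i for i in range(256)}
    current = b""
    codes: list[int] = []
    for value in data:
        candidate = current + bytes([value])
        if candidate in table:
            current = candidate
        else:
            codes.append(table[current])
            table[candidate] = len(table)
            current = bytes([value])
    if current:
        codes.append(table[current])
    return codes


def lzw_decode(codes: list[int]) -> bytes:
    """Rebuild the same dictionary on the fly and expand the codes back to bytes."""
    if not codes:
        return b""
    table = {i: bytes([i]) for i in range(256)}
    previous = table[codes[0]]
    out = [previous]
    for code in codes[1:]:
        if code in table:
            entry = table[code]
        elif code == len(table):
            # The code refers to the entry being defined right now (runs like "aaaa").
            entry = previous + previous[:1]
        else:
            raise ValueError(f"invalid LZW code {code}")
        out.append(entry)
        table[len(table)] = previous + entry[:1]
        previous = entry
    return b"".join(out)


if __name__ == "__main__":
    # A repetitive stand-in for the rows of a simple graphic.
    row = bytes([255] * 40 + [0] * 8) * 50
    codes = lzw_encode(row)
    assert lzw_decode(codes) == row            # lossless: exact round trip
    deflated = zlib.compress(row, 9)
    assert zlib.decompress(deflated) == row    # lossless as well
    print(len(row), "bytes ->", len(codes), "LZW codes,", len(deflated), "Deflate bytes")
```

Comparing the number of LZW codes with a byte count is only indicative, since each code occupies more than 8 bits in a real stream; the point of the sketch is the exact round trip.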
For example, TIFF 6 enabled, among other things, JPEG DCT compression, and as such it was even recommended six years ago for some Memory of the World applications. Nobody uses JPEG DCT in TIFF, but almost everybody uses JPEG, which is also an ISO standard. The reason is very simple: there is a wealth of tools for JPEG and almost none for TIFF/DCT. In addition, JPEG is one of the native Internet formats, and the Internet is changing the world. Another interesting example is the LZW compression scheme, which was enabled in TIFF 5 for the true colour image. It is used, but rarely. Why? There are two reasons. First, LZW is patented by Unisys, and any developer who wants his editors or viewers to compress or decompress LZW images must buy a licence, for which the user ultimately pays. Second, in the meantime the PNG format was developed, replacing LZW with its own lossless compressor. PNG is more efficient than LZW for both true colour and 1-bit images, and even though it is only a de facto standard, it has a very promising future. It has been accepted as the third native Internet graphic format, and it is free. Today the best reliable lossless compression solutions are TIFF/CCITT Fax Group 4 for 1-bit images and PNG for colour images. However, lossless compression cannot reduce the size of an image file radically. For the true colour image the reduction may be up to 30-50%, while in the 1-bit domain it is greater. To enable faster transfer of image files, especially over networks, as well as more efficient and cheaper storage, much work has been done in lossy compression. The best widely used lossy compression scheme for the RGB image, i.e. true colour and 256-shades-of-grey images, is currently DCT in JPEG. The file size can be reduced several times, but the image loses information compared with the source image.
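The block-based DCT used in JPEG can be illustrated on a single 8 x 8 block. This is a simplified sketch, not a JPEG codec: it applies the orthonormal 2-D DCT, then a single uniform quantization step (real JPEG uses a per-frequency quantization table followed by entropy coding), and the inverse transform. The quantization is where information is lost; with a large step only the block's average (DC) coefficient survives and the block collapses into a flat square, which is the origin of the square artefacts that appear under very strong JPEG compression.

```python
import math

N = 8  # JPEG works on 8 x 8 blocks


def _c(k: int) -> float:
    """Orthonormal DCT scaling factor."""
    return math.sqrt(1 / N) if k == 0 else math.sqrt(2 / N)


def _basis(i: int, k: int) -> float:
    return math.cos((2 * i + 1) * k * math.pi / (2 * N))


def dct2(block: list[list[float]]) -> list[list[float]]:
    """Orthonormal 2-D DCT-II of an 8 x 8 block."""
    return [[_c(u) * _c(v) * sum(block[x][y] * _basis(x, u) * _basis(y, v)
                                 for x in range(N) for y in range(N))
             for v in range(N)] for u in range(N)]


def idct2(coeffs: list[list[float]]) -> list[list[float]]:
    """Inverse of dct2 (orthonormal DCT-III)."""
    return [[sum(_c(u) * _c(v) * coeffs[u][v] * _basis(x, u) * _basis(y, v)
                 for u in range(N) for v in range(N))
             for y in range(N)] for x in range(N)]


def quantize(coeffs: list[list[float]], step: float) -> list[list[float]]:
    """Uniform quantization, the lossy step: a larger step means stronger compression."""
    return [[round(c / step) * step for c in row] for row in coeffs]


def compress_block(block: list[list[float]], step: float) -> list[list[float]]:
    return idct2(quantize(dct2(block), step))


if __name__ == "__main__":
    ramp = [[8 * x + 4 * y + 60 for y in range(N)] for x in range(N)]   # smooth gradient
    flat = compress_block(ramp, 1000)   # only the DC coefficient survives
    print(sorted({round(v, 3) for row in flat for v in row}))
```

With a small step the gradient is reproduced almost exactly; with the large step every pixel of the block becomes the same value, so neighbouring blocks meet at visible edges.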
There is no objective rule for setting the degree of JPEG compression, which is usually controlled through a quality factor. It is software-dependent and differs for different types of images. If the image is photorealistic with many rich and varied objects, the compression ratio can be higher than for images with larger smooth areas. The threshold dividing acceptable from unacceptable values of the quality factor should be established individually for each group or type of images. Our decision is a compromise between our wish for smaller files and the user's wish for acceptable comfort. We nevertheless think that rules can be defined for characteristic types of original documents by testing sets of images with various parameters. This work is now under way in one of our projects, in which the most important players are our users and their subjective evaluation of the test images. JPEG DCT divides the image into small squares (blocks of 8 x 8 pixels), each of which is handled separately. The lossy algorithm tries to preserve prominent lines and edges, because their acceptable display can dominate the image and thus mask imperfections in the rendering of less dominant objects. If the quality factor is set low enough to allow very strong compression, this processing happens visibly separately in each square, so the image may show disturbing square artefacts, best seen in smooth areas or on textual pages. Lossy compression is currently an area of intense development. Again it goes in two directions, 1-bit and RGB, and the concrete efforts aim at two ISO standards: JBIG2 for the 1-bit image and JPEG2000 for the RGB image. The new feature is lossy compression of the 1-bit image, as opposed to the lossless CCITT Fax Group 4 scheme.
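Lossy 1-bit compression in the new schemes rests on a dictionary of repeated bitmaps: each character-sized bitmap on the page is compared with those already seen, and a close enough match is stored only as a reference plus a position. The sketch below shows that idea alone, assuming the page has already been cut into small glyph bitmaps; it is not JB2 or JBIG2, which add smarter matching, refinement coding, and arithmetic coding of both the dictionary and the positions. The `max_mismatch` tolerance (in pixels) is a hypothetical parameter standing in for the threshold algorithms; any value above zero is what makes the scheme lossy.

```python
from typing import Optional

Bitmap = tuple[tuple[int, ...], ...]   # rows of 0/1 pixels


def mismatch(a: Bitmap, b: Bitmap) -> Optional[int]:
    """Count differing pixels, or None when the bitmaps have different shapes."""
    if len(a) != len(b) or any(len(ra) != len(rb) for ra, rb in zip(a, b)):
        return None
    return sum(pa != pb for ra, rb in zip(a, b) for pa, pb in zip(ra, rb))


def build_symbol_dictionary(glyphs: list[tuple[int, int, Bitmap]], max_mismatch: int):
    """Return (dictionary, placements) for glyphs given as (x, y, bitmap).

    A glyph within `max_mismatch` pixels of an existing entry reuses it;
    otherwise it becomes a new dictionary entry.
    """
    dictionary: list[Bitmap] = []
    placements: list[tuple[int, int, int]] = []   # (x, y, dictionary index)
    for x, y, bitmap in glyphs:
        for index, known in enumerate(dictionary):
            distance = mismatch(bitmap, known)
            if distance is not None and distance <= max_mismatch:
                placements.append((x, y, index))
                break
        else:
            dictionary.append(bitmap)
            placements.append((x, y, len(dictionary) - 1))
    return dictionary, placements


if __name__ == "__main__":
    C = ((1, 1, 1), (1, 0, 0), (1, 1, 1))
    C_speck = ((1, 1, 1), (1, 0, 1), (1, 1, 1))   # same letter with one noise pixel
    L = ((1, 0, 0), (1, 0, 0), (1, 1, 1))
    glyphs = [(0, 0, C), (4, 0, C_speck), (8, 0, L), (12, 0, C)]
    for tolerance in (0, 1):
        dictionary, placements = build_symbol_dictionary(glyphs, tolerance)
        print(tolerance, len(dictionary), placements)
```

With a tolerance of one pixel the noisy letter is absorbed into the clean one, so the speck disappears and the dictionary shrinks. Passing the glyphs of several pages in one call yields a single shared dictionary, which is the idea behind DjVu's multipage advantage.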
The success of the new solutions lies mainly in the pre-processing phase before the compression itself: small groups of bitmaps, typically individual characters, are compared with one another with the help of dictionaries. Using various threshold algorithms, the bitmap clusters are optimized so that their number can be reduced and the same patterns displayed several times at different positions within the image. Unnecessary noise is also removed. In this way the bitmap part of the encoded image becomes much smaller, while repeated clusters are stored only as positions. The best solutions must be based on the best pre-processing algorithms. Our tests have shown that today the best marketable and already fairly widely used compression scheme of this type is JB2, developed by AT&T and now distributed by Lizardtech Inc. within the DjVu format. In fact, it is one of the schemes related to JBIG2. Moreover, DjVu can use the same cluster dictionary for several images if they are grouped in one file. In this way the total size of the multipage image is smaller than the sum of the sizes of the same images saved separately. This is also a substantial improvement over, for example, the multipage version of TIFF 6 or similar solutions. For the colour image, the direction taken, also in the JPEG2000 draft, is a new wavelet compression algorithm. The image is no longer split into squares compressed separately; the wavelet transform can rather be imagined as distilling the characteristic dominants of the image into a dramatically smaller archetypal representation. This idea is not far from so-called fractal compression, but the algorithms applied are different. After decompression the image expands into its full representation; it is no longer assembled from squares as in the former case. When compressed heavily, the wavelet image also has artefacts, but their character differs from those of JPEG.
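The wavelet idea can be illustrated with the simplest wavelet, the Haar wavelet, applied to one row of pixels. This is a swapped-in teaching example: JPEG2000 and commercial coders such as LWF or IW44 use longer wavelet filters, work in two dimensions, and follow the transform with elaborate quantization and coding. The sketch shows the essential effect: repeated averaging and differencing concentrates a smooth row into a few coefficients, so dropping small detail coefficients (the lossy step, with a hypothetical threshold `t`) removes little visible information and produces no block boundaries.

```python
import math

SCALE = 1 / math.sqrt(2)   # orthonormal Haar scaling


def haar_step(signal: list[float]) -> tuple[list[float], list[float]]:
    """One Haar level: pairwise (scaled) averages and differences."""
    if len(signal) % 2:
        raise ValueError("signal length must be even")
    pairs = list(zip(signal[::2], signal[1::2]))
    return [(a + b) * SCALE for a, b in pairs], [(a - b) * SCALE for a, b in pairs]


def haar_forward(signal: list[float], levels: int):
    """Transform repeatedly; returns (coarsest averages, details from coarsest to finest)."""
    approx, details = list(signal), []
    for _ in range(levels):
        approx, detail = haar_step(approx)
        details.insert(0, detail)
    return approx, details


def haar_inverse(approx: list[float], details: list[list[float]]) -> list[float]:
    """Undo haar_forward, coarsest level first."""
    for detail in details:
        approx = [v for a, d in zip(approx, detail) for v in ((a + d) * SCALE, (a - d) * SCALE)]
    return approx


def drop_small(details: list[list[float]], t: float) -> list[list[float]]:
    """Lossy step: zero every detail coefficient smaller than t in magnitude."""
    return [[c if abs(c) >= t else 0.0 for c in d] for d in details]


if __name__ == "__main__":
    row = [100.0] * 8 + [10.0] * 8          # two flat regions with one edge
    approx, details = haar_forward(row, 4)
    nonzero = sum(1 for d in details for c in d if abs(c) > 1e-9)
    print(len(row), "samples ->", len(approx), "average +", nonzero, "non-zero detail")
```

Sixteen samples reduce to one average and one significant detail coefficient; adding slight pixel-to-pixel noise only creates small fine-scale details, which `drop_small` discards without disturbing the edge.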
Wavelet artefacts are smoother to the eye and continuous, compared with the discrete block artefacts of JPEG; therefore, at the same compression ratio they are less disturbing. Wavelet compression is far more efficient than DCT, and the savings are several times greater. Our tests have shown that today the best marketable solution is the LWF format of Luratech Inc., which is now also very active in implementing JPEG2000. Another good, though slightly less efficient, solution is IW44, the wavelet component of the DjVu format discussed above.

I.2.2 Graphic format

The graphic format is the envelope in which the image is stored, together with information about resolution, colour depth, and the compression scheme applied. There are dozens of graphic formats, but if we work for the future, we must seek stability in our choice. It seems today that formats that are efficient and frequently used have a good future. This is also to some extent evident in the Internet world. The web prefers several formats, and several others are planned for the web or enabled, especially by plug-in or ActiveX technology.

I.2.2.1 Vector graphics

In our previous discussion we set aside simpler (computer) colour graphics: various diagrams and schemes. For this domain, photorealistic compressors such as DCT or wavelets are not appropriate. If we stick to a bitmap representation, it is much better to limit the colour depth and compress such images losslessly in GIF or PNG. Sometimes it is even useful to dither them down to 1-bit images and handle them in that domain. Nevertheless, such images are better suited to vector graphics, in which the image is no longer stored as a pixel grid but as a vector description. It is worth mentioning a new development related to the Internet here: the so-called SVG format, which stands for Scalable Vector Graphics.
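As a small illustration of SVG: a vector drawing is plain XML text whose size does not depend on the size at which it is displayed. The sketch below builds a hypothetical two-box diagram with Python's standard `xml.etree.ElementTree`; the shapes and labels are invented for illustration. Because the drawing is defined in a fixed `viewBox` coordinate system, asking for a ten times larger rendering changes only the `width` and `height` attributes, whereas an uncompressed 24-bit bitmap of 2000 x 1000 pixels would take 6 MB.

```python
import xml.etree.ElementTree as ET


def diagram_svg(width: int, height: int) -> str:
    """A hypothetical two-box diagram as SVG text, drawn in a fixed 200 x 100 coordinate system."""
    svg = ET.Element("svg", {"xmlns": "http://www.w3.org/2000/svg",
                             "width": str(width), "height": str(height),
                             "viewBox": "0 0 200 100"})
    for x, label in ((10, "scan"), (130, "archive")):
        ET.SubElement(svg, "rect", {"x": str(x), "y": "30", "width": "60", "height": "40",
                                    "fill": "none", "stroke": "black"})
        text = ET.SubElement(svg, "text", {"x": str(x + 5), "y": "55", "font-size": "10"})
        text.text = label
    ET.SubElement(svg, "line", {"x1": "70", "y1": "50", "x2": "130", "y2": "50",
                                "stroke": "black"})
    return ET.tostring(svg, encoding="unicode")


if __name__ == "__main__":
    small, large = diagram_svg(200, 100), diagram_svg(2000, 1000)
    print(len(small), len(large), "characters; raw 24-bit 2000 x 1000 bitmap:",
          2000 * 1000 * 3, "bytes")
```

The two outputs differ by just two characters, the extra digits in the width and height.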
SVG stores a vector image as an XML file; much work is now being done to implement SVG in Internet tools. Because a very slim text file represents the image, its transfer is quite efficient.

I.2.2.2 Raster graphics (bitmaps)

I.2.2.2.1 Traditional solutions

The Internet has also had a great impact on the use of bitmap image formats. Three of them are directly implemented in web browsers: GIF, JPEG, and PNG. Others can be used provided their MIME types are specified on the servers and in the browsers. In addition, direct viewing of other formats in web browsers requires the above-mentioned add-ons, such as plug-ins or ActiveX components, to be installed. With them, we can easily work, for example, with TIFF or other formats in the browser window. The add-ons can also add handling functions to the browsers: zooming, sharpening, cropping, printing, etc. The most appropriate format for efficient lossy storage of digital images of rare library materials is JPEG. It is also a good presentation format. If we stick to lossless compression, PNG should be considered; it is the best solution available, and it seems that even lossless wavelet compression will not significantly outperform it. PNG is currently a de facto standard, but it is very good, and it seems likely to gain even wider use in the future. If we prefer to avoid compression, we can stay with uncompressed TIFF, but we will need a lot of storage space, even though the compression algorithms are well documented and there is no danger in using them. GIF cannot be recommended for serious work with graphic representations of rare materials, because it is limited to 256 colours; it is acceptable for preview images or simple graphics.

I.2.2.2.2 Emerging solutions

We have already mentioned wavelet compression as well as some of the formats on the market that support wavelet encoding.
As there is still some lack of standardization in the wavelet domain, the wavelet formats will rather play an important presentation role. They can form a presentation layer existing alongside the source images. It has also been observed that many images consist of various objects, some of which are textual, while others are more photorealistic or represent accompanying graphics. Such content is said to be mixed, and the approach is called Mixed Raster Content (MRC). If tools can zone the image into textual and true colour segments, compress each segment separately with the most efficient tool, and then store them in a single format, the result should be a very efficient and slim image file. We can call such formats document formats. At present there are two good document formats on the market: DjVu and LDF. Both work separately with foreground and background segments of the image, and the dominant foreground layer is always a 1-bit image. This 1-bit image is always compressed by an efficient 1-bit compressor: JB2 in DjVu and the LDF 1-bit compressor in LDF. It should be noted that these two 1-bit compressors are the best on the market today, even though new ones are starting to appear, e.g. the JBIG2-compatible one from CVision. The second foreground layer contains only the colour information of the textual layer, and it is always compressed by a wavelet algorithm, as is the coloured rest of the image, called the background. The compressors applied are IW44 in DjVu and LWF in LDF, again among the best on the market today. The results are astonishing: a textual newspaper page in 256 shades of grey takes 2152 KB as JPEG, but only 130 KB as a typical DjVu image, and it remains well readable; thanks to the DjVu plug-in, its integration into web browsers is also excellent.
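The layer separation behind these document formats can be sketched in a few lines. This is a deliberately naive illustration of Mixed Raster Content, assuming an 8-bit grey page, a single global threshold for zoning, and one grey level for the whole foreground; DjVu and LDF use far more careful segmentation, keep a colour layer for the foreground, and compress each layer with their own 1-bit and wavelet coders, none of which is reproduced here. The point is the structure: a 1-bit mask, a foreground colour, and a smooth background that a photographic coder handles well because the text has been lifted out of it.

```python
Image = list[list[int]]   # rows of 8-bit grey values


def split_mrc(grey: Image, threshold: int) -> tuple[Image, int, Image]:
    """Split a grey page into a 1-bit mask, one foreground grey level, and a background layer."""
    mask = [[1 if v < threshold else 0 for v in row] for row in grey]
    ink = [v for row, mrow in zip(grey, mask) for v, m in zip(row, mrow) if m]
    paper = [v for row, mrow in zip(grey, mask) for v, m in zip(row, mrow) if not m]
    fg_level = round(sum(ink) / len(ink)) if ink else 0
    bg_fill = round(sum(paper) / len(paper)) if paper else 255
    # Pixels under the text get the average paper tone, so the background
    # layer stays smooth and suits a photographic (e.g. wavelet) coder.
    background = [[bg_fill if m else v for v, m in zip(row, mrow)]
                  for row, mrow in zip(grey, mask)]
    return mask, fg_level, background


def compose(mask: Image, fg_level: int, background: Image) -> Image:
    """Recombine the layers: the mask selects foreground or background per pixel."""
    return [[fg_level if m else b for m, b in zip(mrow, brow)]
            for mrow, brow in zip(mask, background)]


if __name__ == "__main__":
    page = [[200] * 8 for _ in range(6)]   # hypothetical plain paper
    page[2][2:6] = [30] * 4                # one dark text stroke
    mask, fg, bg = split_mrc(page, 128)
    print(fg, sum(map(sum, mask)), compose(mask, fg, bg) == page)
```

On a real page the recomposition is only approximate, because the foreground is reduced to one tone and the background is later compressed lossily; that approximation is what buys the dramatic size reduction.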
In addition, a DjVu image is displayed progressively, so it is readable even when a considerable part of the bits in the image file has not yet arrived. This DjVu performance should also be compared with the 1-bit representation of the same page: it takes 255 KB when stored in TIFF/CCITT Fax Group 4. If converted to 1-bit and compressed in DjVu as 1-bit text, it takes only 73 KB. These numbers are very eloquent, and they show how much can be done in document delivery in libraries. Both DjVu and LDF can also switch off the zoning and compress the whole image as 1-bit (including the corresponding dithering), or compress and store it as a purely photorealistic image in wavelets.

I.3 The delivery of the digital image

Together, the digital images form a virtual representation of the original rare book. The user is no longer given the original for study, and in most cases the digital copy is a good substitute. To be a really good substitute, it must give the user some comfort when working with the pages of the virtual document.

I.3.1 The preparation of the source image

When the digitization device in our programme produces the image of a manuscript page, that image is used to prepare a set of 5 images fulfilling various roles in communication with the user. Two preview images are made and stored in GIF. The smaller one has ca. 10 KB and is used to create a gallery view of the entire manuscript. It gives the user a very basic orientation in the whole document: whether there is only text or also images, illuminations, etc. The larger preview image has ca. 50 KB and helps the user decide whether to open the page for further study. The other three images, of different quality and resolution, are stored in JPEG: an Internet quality image of ca. 150 KB, and a user quality image for normal work and research of ca.
1 MB, while the excellent quality image is for archiving or special uses such as printing a facsimile. In particular, the launch of Internet access to our Digital Library has shown the need to reshape and optimize this set. It has been decided to abandon the Internet quality level because of its poor readability and instead to optimize the user quality. This will be done by establishing the JPEG quality factor better for various types of documents, with the help of professional users who need the documents for research. The result should be faster image processing when creating digital copies, and probably also storage savings. These savings will benefit both Internet users and CD users, because in the latter case even larger manuscripts will fit on one CD, which until now has not been the case for ca. 10% of the digitized documents. Special image processing tools are also going to be developed, replacing the Adobe Photoshop used until now with faster programs optimized for this sort of work. It seems that all the manuscript scanning devices (there will soon be three) will share one special image processing station. These image sets are not used for periodicals, where the requirements are different.

I.3.2 The delivery of the source image

Users must be able to manipulate the image to investigate details, to enhance the readability of some passages of text, to keep a good orientation within the image, etc. The basic presentation tool, which today is generally a web browser, does not enable this. If the image is larger than the monitor screen, working with it can become very unpleasant, especially for larger formats such as newspapers or for pages with extremely fine details scanned at very high resolution. Furthermore, we must be aware that any bitmap image is displayed pixel for pixel at the resolution we have selected for our screen.
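The effect of displaying an image pixel for pixel can be quantified. In the sketch below, the screen density of 96 pixels per inch and the 1024 x 768 screen are hypothetical example values, not figures from the text; the page is an A5 original (148 x 210 mm) scanned at 343 dpi, the most a 2000 x 3000 camera can give it once the shorter side is taken into account. Shown at 100 %, every scanned pixel occupies one screen pixel, so the page appears magnified by the ratio of scanning to screen resolution, and a viewer that fits the whole page on screen must shrink it considerably.

```python
MM_PER_INCH = 25.4


def magnification_at_100_percent(scan_dpi: float, screen_ppi: float) -> float:
    """How much larger than the original a scan appears when shown one pixel per screen pixel."""
    return scan_dpi / screen_ppi


def pixels_for(original_mm: float, scan_dpi: float) -> int:
    """Number of image pixels one side of the original receives at the given scan resolution."""
    return round(original_mm / MM_PER_INCH * scan_dpi)


def fit_to_screen_zoom(image_px: tuple[int, int], screen_px: tuple[int, int]) -> float:
    """Largest zoom (1.0 = 100 %) at which the whole image fits on the screen."""
    (img_w, img_h), (scr_w, scr_h) = image_px, screen_px
    return min(scr_w / img_w, scr_h / img_h, 1.0)


if __name__ == "__main__":
    scan_dpi, screen_ppi = 343, 96            # hypothetical screen density
    width, height = pixels_for(148, scan_dpi), pixels_for(210, scan_dpi)  # A5 page
    print(f"A5 at {scan_dpi} dpi: {width} x {height} px, shown "
          f"{magnification_at_100_percent(scan_dpi, screen_ppi):.1f}x life size at 100 %")
    print(f"zoom needed to fit a 1024 x 768 screen: "
          f"{fit_to_screen_zoom((width, height), (1024, 768)):.0%}")
```

Under these assumptions the page appears about 3.6 times life size at 100 %, yet fitting it on the screen requires shrinking it to roughly a quarter, which is why zooming and panning tools matter.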
As a result, with finer scans at higher resolution, the image displayed on screen is larger than the original. To solve this problem, even for the image formats the web recognizes (JPEG, PNG, and GIF), various plug-ins are being developed to handle the images. The plug-ins, or ActiveX components (for some solutions in MSIE), are installed in the browser and associated with the file extension. When the image file, for example a PNG, is opened on its own in the browser window or in a frame, it is displayed via these viewing tools, which add value to the presentation of the image. There are several tools of this kind, and almost all of them are at least shareware programs. They differ in their functions, and today they share one problem: they cannot handle JPEG in Microsoft Internet Explorer, because for this format only the native DLL is used and it cannot be replaced. This is a great pity, because JPEG is and will certainly remain one of the best presentation formats. As the quality of other features in the various plug-ins also varies, we decided to develop our own plug-in. It is called ImGear, and it can handle several formats including TIFF, PNG, TGA, BMP, etc. There are separate plug-ins for Netscape and for Internet Explorer; we, too, have not succeeded in implementing enhanced JPEG viewing within Internet Explorer. For users accessing our images with a browser, Netscape is therefore recommended for easier work. The user can then work with the manuscripts and periodicals on the Internet through our Digital Library, or locally with copies written to compact discs. For local access to manuscripts there is also another option: a special viewing tool called ManuFreT, which also enables more sophisticated work with the descriptive metadata. As an image viewer and editor, ManuFreT easily handles JPEG, with many additional graphic functions.
The earlier viewer in ManuFreT was separate from the ImGear tool, but in the new 32-bit ManuFreT software, image handling is provided by the same plug-in as in the web browsers. This way of viewing better reflects the way users have become accustomed to working on the Internet. The ImGear interface is now being rewritten to respond more appropriately to users' comments and especially to add functions for other types of image files. Multipage TIFF viewing and work with LZW-compressed files, such as GIF and TIFF/LZW, are being enabled, as well as some post-processing of 1-bit images to enhance their readability: the non-smooth edges of partial bitmap clusters are filled in with grey pixels. Users of our Digital Library may use the ImGear software free of charge for non-commercial purposes.

I.3.3 The dynamic post-processing of the source image for delivery

Users of our Digital Library have JPEG and TIFF images available for research. Even when compressed very efficiently while preserving good readability, the files may be quite large: 0.8 - 1 MB for manuscripts and 1 - 3 MB for periodicals at their normal user quality. At low speeds or in heavy network traffic, this can hamper work. In addition, Internet Explorer users have problems accessing larger JPEG images.

We have performed quite comprehensive tests of mixed raster content approaches and emerging compression schemes in all of the domains, and we have decided to apply the DjVu format as a presentation option in our Digital Library. At present, the user can choose whether to view the desired image of a manuscript or newspaper page in JPEG or TIFF/G4, or to ask the system to convert it into DjVu and send it in this format. We have installed a special DjVu server with an efficient DjVu command-line tool.
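Why a short conversion delay can be outweighed by a much shorter transfer follows from simple arithmetic: waiting time = conversion delay + file size / link speed. The sketch assumes a 56 kbit/s modem link, a page of about 1 MB (the upper end of the manuscript sizes given above), a hypothetical ten-fold size reduction after DjVu conversion and a hypothetical 2-second conversion delay; none of these values are measured results from our Digital Library, and the function names are illustrative.

```python
def transfer_seconds(size_kb: float, link_kbit_s: float) -> float:
    """Time to send size_kb kilobytes over a link of link_kbit_s kilobits per second."""
    return size_kb * 8 / link_kbit_s   # 1 kilobyte = 8 kilobits (decimal units assumed)


def delivery_seconds(size_kb: float, link_kbit_s: float, conversion_s: float = 0.0) -> float:
    """Total waiting time: optional server-side conversion delay plus transfer."""
    return conversion_s + transfer_seconds(size_kb, link_kbit_s)


def conversion_pays_off(original_kb: float, converted_kb: float,
                        link_kbit_s: float, conversion_s: float) -> bool:
    """True if converting first and sending the smaller file is faster than sending the original."""
    return (delivery_seconds(converted_kb, link_kbit_s, conversion_s)
            < delivery_seconds(original_kb, link_kbit_s))


if __name__ == "__main__":
    link = 56.0                                            # assumed dial-up modem, kbit/s
    jpeg = delivery_seconds(1000, link)                    # ~1 MB page sent as JPEG
    djvu = delivery_seconds(100, link, conversion_s=2.0)   # hypothetical 10x smaller DjVu, 2 s conversion
    print(round(jpeg, 1), round(djvu, 1))                  # 142.9 16.3
```

On a very fast local network the balance can reverse, since the transfer itself becomes negligible; `conversion_pays_off` makes that comparison explicit.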
Conversion is very fast, the DjVu images are very small, and they can easily be handled in both browsers. This conversion option has also been integrated into our Digital Library interface. The short delay at the beginning of the transfer is negligible, because it is easily offset by the very short time needed for the file transfer itself. If there is a delay in image delivery from the Digital Library, it is rather caused by the slower operation of the robotic mass storage library when the image is not pre-cached on fast disk arrays and has to be retrieved from magnetic tapes. The user has several options for on-the-fly DjVu conversion: he can apply the full DjVu philosophy in the typical option, or he can switch off one of the two compressors in the photorealistic or bitonal options. He can even set the background quality factor.

II Metadata

Metadata add value to the data: they describe and classify the contents, structure the digital document, and bind its components together. They are also necessary to provide technical information for easier processing and access. Our metadata philosophy is based on two alternative ways of access to the digital documents: basic rudimentary access and sophisticated access. Basic rudimentary access must be possible through generally available tools, especially web browsers; therefore, the metadata arrangement follows the basic feature of web browsers: formal HTML formatting. As HTML does not enable mark-up and description of contents, it has been supplemented with additional elements that make this possible. Thus the SGML-based DOBM language and approach have been developed to manage metadata in our digitization programmes. DOBM combines the direct readability of HTML with content mark-up of relevant objects.
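The idea of combining browser readability with content mark-up can be illustrated with Python's standard `html.parser`. The element names `illumination`, `incipit` and `music` below are hypothetical stand-ins, not the actual DOBM vocabulary, and the sample page is invented. The point is only that a browser displays the text inside unknown elements as ordinary text, while a content-aware tool can pick out the marked objects.

```python
from html.parser import HTMLParser

# Hypothetical content elements; the real DOBM element set is not reproduced here.
CONTENT_TAGS = {"illumination", "incipit", "music"}

PAGE = """<html><body>
<h1>Folio 12r</h1>
<p><incipit>In principio erat verbum</incipit> et verbum erat apud Deum</p>
<illumination>Initial I with a figure of St John</illumination>
</body></html>"""


class ContentExtractor(HTMLParser):
    """Collects (element, text) pairs for the content mark-up, ignoring plain HTML."""

    def __init__(self) -> None:
        super().__init__()
        self._open: list = []     # stack of [tag, accumulated text] for open content elements
        self.objects: list = []   # finished (tag, text) pairs, in the order they are closed

    def handle_starttag(self, tag, attrs):
        if tag in CONTENT_TAGS:
            self._open.append([tag, ""])

    def handle_endtag(self, tag):
        if self._open and self._open[-1][0] == tag:
            name, text = self._open.pop()
            self.objects.append((name, text.strip()))

    def handle_data(self, data):
        if self._open:
            self._open[-1][1] += data   # text belongs to the innermost open content element


if __name__ == "__main__":
    parser = ContentExtractor()
    parser.feed(PAGE)
    parser.close()
    print(parser.objects)
```

The same file opened in a browser shows the heading and the running text; the extractor sees only the two marked objects.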
The file structure of the digital document is tree-like: for manuscripts, the main levels of description are the entire book and the individual pages, while for periodicals the tree is richer, going from the title as a whole down to individual pages and even articles. The metadata tree is a gateway through which the user gets access to the desired data files, which are referenced from it. In general, the data files can be images, text, sound, or video files. In principle, they are external to the metadata container. The content-oriented mark-up is based on minimal cataloguing requirements as well as on good practice concerning the description of various objects in the documents, such as illuminations, musical notation, incipits, etc. The language was developed in 1996, and the mandatory content categories were set up in the same year. Since then, however, some more consistent approaches have been agreed in various communities in Europe, for example, the definition of a manuscript catalogue record in SGML in the MASTER project, or the electronic document format for digitized periodicals in the DIEPER project. Even if these projects may have different goals from ours, we consider it necessary to co-operate with them and to share our data, in some cases even as by-products. We participated in MASTER and therefore decided to adopt its bibliographic record definition for the bibliographic part of our document format for manuscripts. The necessary tools are now being developed to bridge the two programmes. Our aim is that what we do for digitization we will use for MASTER, and what we have done for MASTER we will use as a component part of the description of digitized manuscripts.
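The tree structure of a digital document, with data files referenced from it rather than stored inside it, can be modelled as a small recursive data structure. This is a sketch under stated assumptions: the class, field and file names are hypothetical; the intermediate periodical levels (annual set, issue) are assumed; and articles are simplified as children of a single page, although real articles may span several pages.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Node:
    """One level of the metadata tree (hypothetical model, not the DOBM DTD)."""
    level: str                                            # e.g. "book", "page", "title", "volume", "article"
    label: str
    files: List[str] = field(default_factory=list)        # external data files referenced from this node
    children: List["Node"] = field(default_factory=list)


def referenced_files(node: Node) -> List[str]:
    """Walk the tree depth-first and collect every referenced data file."""
    found = list(node.files)
    for child in node.children:
        found.extend(referenced_files(child))
    return found


# Manuscript: book -> pages
manuscript = Node("book", "Codex (hypothetical)", children=[
    Node("page", "f. 1r", files=["0001.jpg"]),
    Node("page", "f. 1v", files=["0002.jpg"]),
])

# Periodical: title -> annual set -> issue -> page -> article (intermediate levels assumed)
periodical = Node("title", "Newspaper (hypothetical)", children=[
    Node("volume", "1850", children=[
        Node("issue", "No. 1", children=[
            Node("page", "p. 1", files=["1850_001_p1.tif"], children=[
                Node("article", "Leading article"),
            ]),
        ]),
    ]),
])

if __name__ == "__main__":
    print(referenced_files(manuscript))   # ['0001.jpg', '0002.jpg']
    print(referenced_files(periodical))   # ['1850_001_p1.tif']
```

The nodes hold only references, so the images can live on tape or disk arrays while the tree itself stays small and easy to index.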
As for DIEPER, the situation is different: we both have a document format, but the aim of DIEPER is to provide access especially to scholarly journal articles, while the aim of our programme is to preserve and provide access to endangered and rare historical periodicals. To share our digitized periodicals with DIEPER, we need to deliver their metadata descriptions in the DIEPER format, which we plan to do as soon as the DIEPER structure is declared final. It is foreseen that the central DIEPER service will read our input and that of other libraries and will try to act as a uniform gateway to digitized periodicals in co-operating institutions. When the user marks what he is interested in and requests access to it, be it an article or an annual set, the central resolution service will redirect him to the on-line service where the document is stored. Thanks to the planned unique identifiers of the component parts of periodicals, the requested part will be provided directly by the source library if it is free; otherwise the user will have to pass through the access procedures prescribed by the particular provider.

II.1 Formal and content rules of the metadata description

II.1.1 Contents

Contents can be described from various points of view, but the described objects are always defined by certain attributes whose values are then classified and identified in concrete situations. Different people may consider different things important, even in the case of the same object. However, practical work in various areas has led to a certain standardization. Thus we have, for example, cataloguing rules: they state which elements a catalogue description should consist of, which attributes these elements can have, and how they can be identified. Nevertheless, different approaches can be laid down as cataloguing rules.
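The comparability problem can be made concrete with author names. The following sketch is not a cataloguing rule set (real practice relies on agreed rules and authority files); it merely shows that two descriptions of the same author become comparable only once both are brought into one agreed written form. The function name and its rules (surname-first order, whitespace collapsing, Unicode NFC normalization, case-folding) are assumptions chosen for illustration, and forename-first input with a multi-word surname would be split incorrectly.

```python
import unicodedata


def author_key(name: str) -> str:
    """Build a comparison key for a personal name (illustrative rules only).

    Accepts 'Forename Surname' or 'Surname, Forename' and returns
    'surname, forename' in Unicode NFC form, case-folded.
    """
    name = " ".join(name.split())                          # collapse runs of whitespace
    if "," in name:
        surname, forename = (part.strip() for part in name.split(",", 1))
    else:
        *forenames, surname = name.split(" ")              # assumes the last word is the surname
        forename = " ".join(forenames)
    key = f"{surname}, {forename}" if forename else surname
    # NFC makes precomposed and decomposed accents (e.g. "í" vs "i" + U+0301) identical
    return unicodedata.normalize("NFC", key).casefold()


if __name__ == "__main__":
    print(author_key("Jan Hus") == author_key("HUS,  Jan"))   # True
    print(author_key("Karel Havl\u00ed\u010dek") == author_key("Havli\u0301c\u030cek, Karel"))   # True
```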
If, for example, two different approaches are used to describe the same element of content, the descriptions will not be comparable with each other, and no system built on both will be efficient. Thus, a very important precondition for any metadata description is the application of broadly accepted content description rules, because the value of our work can be enhanced through co-operation, and co-operation is always based on the same or a similar understanding of things. Even if this is evident, practice is not so easy, because institutions build even their new approaches on their own traditions in order to maintain the internal compatibility of their tools. In this framework, starting anything new, for digitization for example, may mean reshaping a great deal of other work and procedures within the institution. Very frequently, co-operation means additional effort. However, even this effort is easier if well-defined content description rules are respected, because various compatibility bridges have already been built between the major approaches. In other words, it is important that we all understand the same things under the same category of objects. This is the most important requirement for any description. If, for example, we all agree on the definition of the author of a document, then all the names put into this category will be comparable, provided that they are also written according to the same rules, which is not always the case. Even if we agree on this precondition, it is not certain that we will be able to communicate, because our systems for marking up that an object is this or that can be rather different. Broadly speaking, however, we should be able to export a standardized output from them regardless of the tools they use to mark up the described objects.

II.1.2 Formal framework

It is evident that at least the metadata output or files used for communication should be static and well structured to enable easier exchange of information.
Again, we can look to library cataloguing for our model: if libraries are able to export their records into MARC or UNIMARC, they can interchange them, provided, of course, that they have followed comparable rules to identify the described objects. The so-called electronic formats for digitized documents are much more complex; therefore, the danger of non-communication is also much higher. Furthermore, there are almost no traditions: the formats are being created now on the basis of available standards, rules, and good practices. More than in traditional library work, de facto standardization of various good practices takes place in various applications. In many cases, even formal common denominators are lacking. An exchange format, and also a storage format, must be built on clearly shaped systems: they must enable very good structuring of very complex documents and the description of various kinds of objects. It could even be said that they should enable the description of almost everything that can be met in documents at any of their structural levels. A platform on which to do these things exists, and it is called SGML. The question is, however, what to build on it in order to communicate and, at the same time, to use existing access tools. By writing our enhanced HTML, called DOBM, we solved the situation for quite a long time. For us today, accommodating various modifications of the content description is a much greater problem than a quick change in the syntax of the description environment. The emergence and importance of XML are also being taken into consideration, and our electronic document formats are going to be redefined in this syntax.
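To illustrate what a redefinition in XML might look like, the sketch below uses Python's standard `xml.etree.ElementTree` to serialize a manuscript description with the three component parts of our current format: the bibliographic description, descriptions of individual pages, and the technical description. All element and attribute names, the shelfmark `MS-0001` and the file names are hypothetical placeholders, not the planned DTD; the bibliographic part simply marks where a MASTER-style record would be placed.

```python
import xml.etree.ElementTree as ET
from typing import List, Tuple


def manuscript_record(shelfmark: str, pages: List[Tuple[str, str]], scanner: str) -> str:
    """Serialize a minimal three-part manuscript description as XML.

    Element and attribute names are hypothetical placeholders, not the planned DTD.
    """
    root = ET.Element("manuscript")
    biblio = ET.SubElement(root, "bibliographicDescription")   # slot for a MASTER-style record
    ET.SubElement(biblio, "shelfmark").text = shelfmark
    page_list = ET.SubElement(root, "pages")
    for folio, image_file in pages:
        page = ET.SubElement(page_list, "page", {"folio": folio})
        ET.SubElement(page, "image", {"href": image_file})     # data file stays external, only referenced
    technical = ET.SubElement(root, "technicalDescription")
    ET.SubElement(technical, "scanner").text = scanner
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    xml_text = manuscript_record("MS-0001", [("1r", "0001.jpg"), ("1v", "0002.jpg")],
                                 "hypothetical scanner")
    print(xml_text)
```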
At the same time, the sets of description elements will be enlarged in line with the requirements of the programmes with which we intend to co-operate, as well as our own new requirements arising from research into, for example, other methods of calibrating scanning devices. The main component parts of the metadata description of a digitized manuscript are today: the bibliographic description of the whole document, descriptions of individual pages, and the technical description. The new XML DTD will adopt the MASTER bibliographic record DTD for the bibliographic description, while the mandatory elements for the description of individual pages will be preserved. The technical description will be enlarged to enable better reproduction of the original. XML will be applied first to manuscripts and only afterwards to periodicals.

III Access to digitized documents

The user may require two types of access: local access to documents or their parts stored on compact discs or other off-line media, and Internet access. Both types of access should be built on the same digital document. He may also require additional services, for example, printing or delivery of certain parts of large documents on CDs. Sophisticated access in both environments is built on enhanced use of metadata descriptions. To a certain extent, these must be based, together with our entire digitization approach, on the foreseeable requirements of potential users. Nowadays, all our documents are structured according to our SGML-based DOBM language, but their structures are dissolved in the Digital Library environment, where the documents are indexed to be searchable; the indexed metadata and graphic data are then stored separately. However, they can be exported into static structures at any time. The interface of the Digital Library is the AIP SAFE system, developed by Albertina icome Praha Ltd.
It is a document delivery system that enables especially sophisticated document delivery from digital archives, the user interface of the digital library, control of the production workflow of digital documents, and off-line and on-line enhancement of metadata descriptions.

Fig. 1 - Functional scheme of the Digital Library (components shown: AIP SAFE document delivery system; producer; user; DjVu server; MrSID image server; RetrievalWare; SAM-FS Sun Solaris server; fibre channel disks; ADIC Scalar 1000 AIT mass storage on magnetic tapes)

AIP SAFE integrates with web browsers and allows the use of various plug-ins for easier data handling. Its heart is the AiPServer, which consists of the following logical entities: an SQL server connected through TCP/IP to the system database; the Sirius web server, which creates the interface between external users, web servers, and the AIP SAFE system; a Storage Server, which enables storage of data in large robotic libraries; and system applications for administration and other tools. Some years ago we could hardly imagine access to manuscripts on the web; today it is a reality requested by users. We are now in the middle of a testing period for all components of the Digital Library, during which the interface will be optimized, as will some other tools, including those for administration. The heart of the Digital Library is a Sun server with the Storage and Archive Manager File System (SAM-FS) installed. It works with mass storage capacity on magnetic tapes (ADIC Scalar 1000 AIT) and with fibre channel disk arrays for storing indexed metadata and pre-caching data from the mass storage device. The Digital Library is fed by the two digitization programmes described below. Three special servers or added services are foreseen for greater user comfort: the DjVu server, as explained above, to reduce the volume of transferred image data; a MrSID server for access to larger source files, e.g.
images of maps; and RetrievalWare (Excalibur) for searching texts read by OCR. As of June 2001, the DjVu server is fully deployed and integrated into the Digital Library, while the integration of the other two services is under development. It should also be mentioned that OCR will be applied to older documents and is intended mainly as a hidden source for searching.

IV National Framework

Our digitization activities have been developed in close relationship with the UNESCO Memory of the World programme. They led to the establishment of two separate programmes: Memoriae Mundi Series Bohemica, for digital access to rare library materials, especially manuscripts and old printed books, in routine operation since 1996; and Kramerius, for preservation microfilming of acid-paper materials and digitization of the microfilm, in routine operation since 1999. The volume of digital data available in the programmes is today ca. 230,000 pages, mostly of manuscripts, and ca. 200,000 pages of endangered periodicals. This volume has depended on the funding that could be allocated to digitization. For many years there was no systematic funding in this area; only in 2000 did the libraries succeed in building national frameworks for various activities such as retrospective conversion, the union catalogue, the digital library, and also the above-mentioned programmes. These now exist as component parts of the Public Information Services of Libraries programme, which is in turn part of the national information policy. Czech institutions can now apply in the Calls for Proposals launched by the Ministry of Culture to receive support for digitization. They must respect the standards of the National Library and must agree to provide access to the digitized documents through the Digital Library. The interest of various types of institutions now seems to be growing: libraries, but also museums, archives, and various church institutions.
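The page counts given in this section allow a rough estimate of the storage occupied by the user-quality copies alone. The sketch multiplies them by the per-page user-quality sizes quoted for delivery in section I.3.3 (0.8 - 1 MB for manuscripts, 1 - 3 MB for periodicals). It assumes all ca. 230,000 "mostly manuscript" pages have manuscript sizes and uses 1 GB = 1000 MB; archival-quality images, metadata and backups are deliberately ignored, so this is an illustrative estimate, not a figure reported by the programmes.

```python
from typing import Tuple


def storage_range_gb(pages: int, mb_low: float, mb_high: float) -> Tuple[float, float]:
    """Lower and upper storage estimate in GB (1 GB = 1000 MB assumed)."""
    return pages * mb_low / 1000, pages * mb_high / 1000


# Page counts from this section; per-page sizes are the user-quality figures given for delivery.
manuscripts = storage_range_gb(230_000, 0.8, 1.0)
periodicals = storage_range_gb(200_000, 1.0, 3.0)
total = (manuscripts[0] + periodicals[0], manuscripts[1] + periodicals[1])

if __name__ == "__main__":
    print([round(x) for x in manuscripts])   # [184, 230]
    print([round(x) for x in periodicals])   # [200, 600]
    print([round(x) for x in total])         # [384, 830]
```

Under these assumptions the user copies alone come to roughly 0.4 - 0.8 TB, which illustrates why the data are held on robotic tape storage with only a disk-array cache in front.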
It is expected that, thanks to the national sub-programmes, the volume of digitized pages will grow annually by ca. 80,000 - 100,000 pages of manuscripts and old printed books and ca. 200,000 pages of periodicals.

V Contents

I Data
I.1 The digital image
I.1.1 Resolution
I.1.2 Colour depth
I.2 The production processing of the digital image
I.2.1 Compression
I.2.2 Graphic format
I.2.2.1 Vector graphics
I.2.2.2 Raster graphics (bitmaps)
I.2.2.2.1 Traditional solutions
I.2.2.2.2 Emerging solutions
I.3 The delivery of the digital image
I.3.1 The preparation of the source image
I.3.2 The delivery of the source image
I.3.3 The dynamic post-processing of the source image for delivery
II Metadata
II.1 Formal and content rules of the metadata description
II.1.1 Contents
II.1.2 Formal framework
III Access to digitized documents
IV National Framework
V Contents