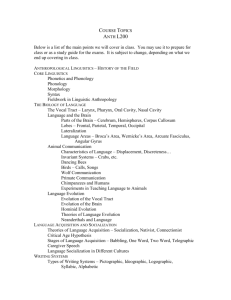

Computational Linguistics in Support of Linguistic Theory

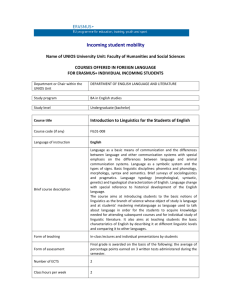
