Feature Extraction for Genre Classification

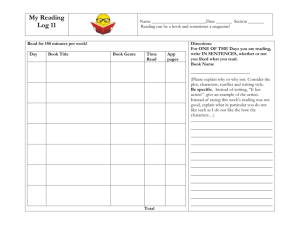

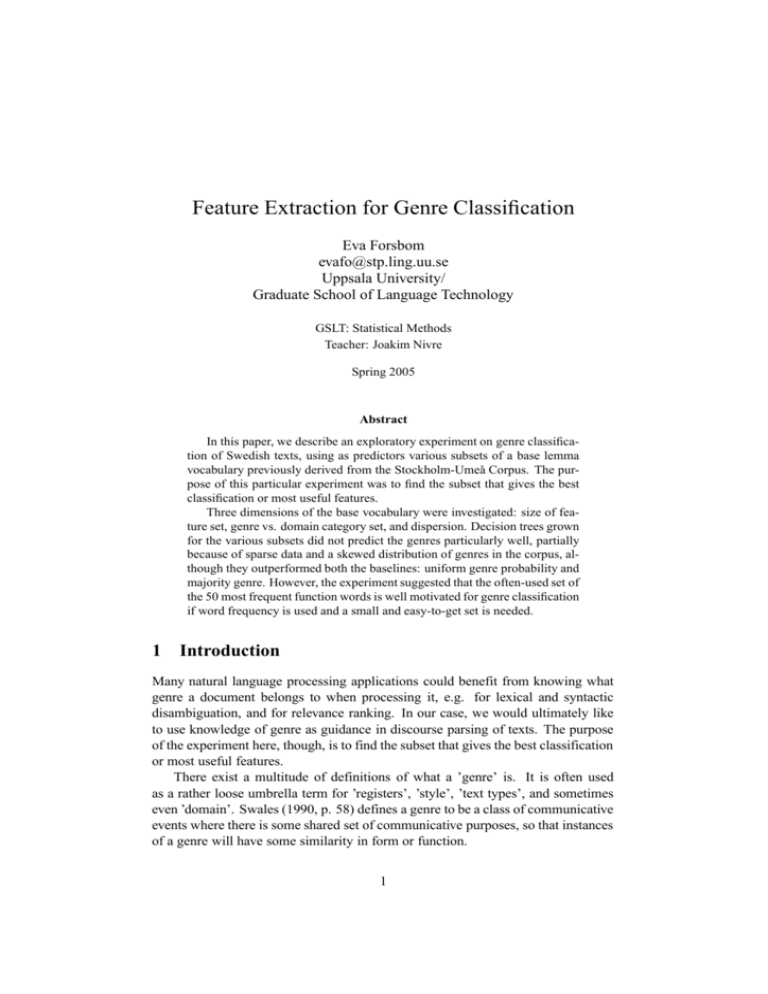

Eva Forsbom
evafo@stp.ling.uu.se
Uppsala University / Graduate School of Language Technology
GSLT: Statistical Methods
Teacher: Joakim Nivre
Spring 2005

Abstract

In this paper, we describe an exploratory experiment on genre classification of Swedish texts, using as predictors various subsets of a base lemma vocabulary previously derived from the Stockholm-Umeå Corpus. The purpose of this particular experiment was to find the subset that gives the best classification or most useful features. Three dimensions of the base vocabulary were investigated: size of feature set, genre vs. domain category set, and dispersion. Decision trees grown for the various subsets did not predict the genres particularly well, partly because of sparse data and a skewed distribution of genres in the corpus, although they outperformed both baselines: uniform genre probability and majority genre. However, the experiment suggested that the often-used set of the 50 most frequent function words is well motivated for genre classification if word frequency is used and a small and easy-to-get set is needed.

1 Introduction

Many natural language processing applications could benefit from knowing what genre a document belongs to when processing it, e.g. for lexical and syntactic disambiguation, and for relevance ranking. In our case, we would ultimately like to use knowledge of genre as guidance in discourse parsing of texts. The purpose of the experiment here, though, is to find the subset that gives the best classification or most useful features.

There are many definitions of what a ’genre’ is. The term is often used as a rather loose umbrella term for ’registers’, ’style’, ’text types’, and sometimes even ’domain’. Swales (1990, p. 58) defines a genre as a class of communicative events where there is some shared set of communicative purposes, so that instances of a genre will have some similarity in form or function. Biber (1995, p.
9f) uses ’register’ as a synonym for ’genre’, with roughly the same meaning as Swales, i.e. defined by external criteria and commonly recognised. ’Text type’, on the other hand, he sees as purely internal, and defined only by linguistic criteria. He also sees text type categorisation as a prerequisite for genre classification, rather than the opposite.

Karlgren (2000, p. 30) defines ’style’ as a consistent and distinguishable tendency to make choices in organising the material, and between synonyms and syntactic constructions, and aims to find ’functional styles’ that can be used to classify a text into a genre (to predict the usefulness of a retrieved text in information retrieval).

The EAGLES preliminary recommendations for text typology annotation of corpora (EAGLES, 1996) make use of 3 major external (E) and 2 major internal (I) criteria for text classification:

E.1.origin: matters concerning the origin of the text that are thought to affect its structure or content.

E.2.state: matters concerning the appearance of the text, its layout and relation to non-textual matter, at the point when it is selected for the corpus.

E.3.aims: matters concerning the reason for making the text and the intended effect it is expected to have.

I.1.topic: the subject matter, knowledge domain(s) of the text.

I.2.style: the patterns of language that are thought to correlate with external parameters.

Melin and Lange (2000) define ’text type’ as the professional writers’ collective knowledge of how to adjust to the given conditions in a certain pragmatic or productional situation, in the systemic functional linguistics tradition of Halliday (1994). They reserve ’genre’ for historically established and strongly conventionalised text types, and ’register’ for a professional or social language usage; these distinctions are often used elsewhere in the literature as well.

Argamon and Dodick (2004a,b) also view ’genre’ in the systemic tradition.
’Style’ (and ’attitude’) is defined as a general preference for certain choices in the network of possible choices for the same representational meaning, and ’register’ as differences between systemic preferences across genres, in terms of ’mode’ (communication channel), ’tenor’ (social relation between participants), and ’field’ (discourse domain).

Following these definitions, we will view ’genre’ as a functional rather than a topical classification. For our purposes, we would like to find linguistic cues to the author’s intention for a certain unit in the text. We assume that the intentions are formulated by means of relational expressions, e.g. function words, mental verbs and attitudinal expressions.

The paper is organised as follows: In Section 2, related work on genre classification and features used therein is described. Details on the experimental setup, including descriptions of the feature pool, corpus, and method used, are given in Section 3, and the outcome is discussed in Section 4. Finally, some concluding remarks sum up the paper (Section 5).

2 Genre classification

Genre classification is related to, for example, text categorisation, author attribution and identification, but the classification tasks focus on different aspects of language (Manning and Schütze, 1999, p. 575). While text categorisation is about classifying texts into topics or themes, genre and author classification are about distinguishing different styles. In genre classification, it is the functional style that matters, and in author classification, the issue is the individual variation within a functional style (van Halteren et al., 2005).

Since genre classification is supposed to be based on functional variations of style, frequencies of function words have often been used as the feature set for genre classification, as well as author classification (Lebart et al., 1998, p. 167f).
In particular, the highest-frequency function words have been used, alone or as a complement to other stylometrics (Baayen, 2001, p. 214).

Biber (1995) used 67 linguistic criteria in a multidimensional analysis of the given registers, as well as some of the subcategories, in the Lancaster-Oslo/Bergen (LOB) and London-Lund corpora to define 7 English text types along 5 dimensions: involved vs. informational production, narrative vs. non-narrative discourse, situation-dependent vs. elaborated reference, overt expression of argumentation, and abstract vs. non-abstract style. The 67 criteria belong to 16 major grammatical and functional categories: tense and aspect markers, place and time adverbials, pronouns and pro-verbs, questions, nominal forms, passives, stative forms, subordination features, prepositional phrases, adjectives and adverbs, lexical specificity, lexical classes, modals, specialised verb classes, reduced forms and discontinuous structures, co-ordination, and negation.

Like Biber, Kessler et al. (1997) studied dimensions of register, or facets, rather than genre. They categorise previously used measures into 4 levels: structural, lexical, character-level, and derivatives of the other levels, such as ratios (e.g. words per sentence). For their experiment involving logistic regression and two kinds of neural networks, they used 55 measures from the last three levels to categorise texts from the Brown corpus into three categorical facets and their sublevels: Brow, Narrative, and Genre.

Karlgren (2000, with Cutting 1994) used a subset of Biber’s 67 features, i.e. the ones that could be computed using only a part-of-speech tagger. They evaluated the classifiers, based on the first two functions from discriminant analysis, on the Brown corpus.

Santini (2004) also used part-of-speech tagged measures: trigrams, bigrams, and unigrams.
She trained the classifiers with a Naïve Bayes algorithm and kernel density estimation, and evaluated them on ten spoken and written genres from the British National Corpus. Trigrams were found to have strong discriminative power.

In their review of previously used measures, Stamatatos et al. (2000a) also mention various measures of vocabulary richness, but conclude that they are too dependent on text length to be of much use. In their own experiment, they decided to use output from a general-purpose text analysis tool, SCBD, such as sentence and chunk boundaries, but also intermediate information from within the tool, such as number of alternative analyses and number of failures. For training their classifiers, they chose multiple regression and discriminant analysis, and evaluated the classifiers on a Greek corpus extracted from the web. Results were encouraging, as the classifiers outperformed existing lexically-based methods. They also tried to find out the minimum size of a corpus for training genre classifiers, and found that for homogeneous categories, 10 texts per category were enough, and that the lower boundary for text lengths is 1,000 words per text.

As can be noted, many different measures, learning algorithms, and evaluation methods have been used, which makes comparison between experiments hard. Frequency counts and lexically based features seem to be the most tried measures, and balanced corpora compiled in the manner of the Brown and LOB corpora are the ones most frequently used in evaluations, so we will follow this line in order to be able to make some comparisons.

3 Experimental setup

In the experiment reported here, genre classification is based on subsets from a base lemma vocabulary ranked by frequency distribution and derived from the Stockholm-Umeå Corpus (SUC, 1997). Various subsets are selected with regard to category set size, feature set size, and dispersion, i.e. how many genres contribute to the frequency count for a lemma.
Texts from SUC are then used in training and testing decision trees for genre classification, with the text typology given in SUC.

3.1 SUC

SUC is a balanced corpus of modern Swedish prose covering approximately 1 million word tokens. The texts are from the years 1990 to 1994, and they were selected and classified according to criteria corresponding to the ones used for the Brown corpus (Francis and Kucera, 1979) and the Lancaster-Oslo/Bergen (LOB) corpus (Johansson et al., 1986), albeit adapted to Swedish culture. The basic idea of the compilation was that it should mirror what a Swedish person might read in the early nineties.

The taxonomy of text types, i.e. genres and domains, is shown in Appendix A. The distribution of genres (or main categories) is shown in Figure 1 and the distribution of domains (or subcategories) in Figure 2. As can be noted, the distribution is not ideal for genre analysis. However, although SUC was not compiled for the purpose of genre analysis, and really has too few samples of most genres, it is the only larger Swedish corpus with other text types than news texts and a given text typology. Since it has been compiled in the same spirit as other corpora used for genre classification (cf. Section 2), it is also easier, but not easy, to compare the results.

SUC consists of text samples from 1,040 texts, grouped into 500 files with an average of 2,065 tokens per file. The samples were selected at random, but with an effort to choose coherent stretches of text. Version 2.0 of SUC (SUC, forthcoming) contains the same text samples, but has corrected annotations and additional named entity annotation. For this experiment, version 2.0 was used.

[Figure 1: Distribution of texts per genre in SUC. Horizontal axis: Genre (a, b, c, e, f, g, h, j, k); vertical axis: Frequency.]

3.2 Base lemma vocabulary

In many language technology applications, there is a need for a base vocabulary, i.e. a vocabulary that could be reused for most domains and text types.
For many languages, there exist one or more frequency dictionaries that contain some kind of base vocabulary, often based on a combination of word frequency and dispersion in a corpus. Although such base vocabularies can be useful for some applications, the corpora they are based on might not be representative for other applications, and so the base vocabularies will be of less use.

In our case, there exist some Swedish frequency dictionaries based on various text types collected at various time periods. The most recent and widely known is “Nusvensk frekvensordbok” (NFO) (Allén, 1971), which is based on a 1 million word corpus of news text from the late sixties, Press65, and contains a base vocabulary. However, since NFO is only representative of news texts, its base vocabulary cannot be considered representative for our present purposes, i.e. as a norm to compare various text types with. Instead, we will use a base vocabulary we have extracted from SUC.

[Figure 2: Distribution of texts per domain in SUC. (Domains are in alphabetical order from the left.) Horizontal axis: Domain (aa to kr); vertical axis: Frequency.]

The units of the base vocabulary are lemmas, or rather the baseforms from the SUC annotation disambiguated for part-of-speech, so that the preposition om ’about’ becomes om.S and the subjunction om ’if’ becomes om.CS. They are ranked according to relative frequency weighted with dispersion, i.e. how evenly spread they are across the subdivisions of the corpus, so that of two words with the same frequency, the more dispersed one is ranked higher. This is done to compensate for accidental peaks of frequency due to certain texts, domains or genres, and is based on the ideas behind the base vocabularies of most frequency dictionaries of today, including the Swedish NFO, introduced by Juilland (e.g. in Juilland and Chang-Rodriguez (1964)).
In NFO, the same weighting scheme for dispersion is used as in Juilland’s works, but it does not discriminate properly for some types of uneven distributions (Muller, 1965). In the German equivalent of NFO, Rosengren (1972, p. XXIX) introduced another weighting scheme, Korrigierte Frequenz ’adjusted frequency’ (see footnote 1), which gives a better discrimination (see Equation 1). This measure is also used in later frequency dictionaries, e.g. the one based on the Brown corpus, and in our base vocabulary.

$$AF = \left( \sum_{i=1}^{n} \sqrt{d_i x_i} \right)^2 \qquad (1)$$

where $AF$ is the adjusted frequency, $d_i$ is the relative size of category $i$, $x_i$ is the frequency in category $i$, and $n$ is the number of categories.

The total vocabulary has 69,560 entries, but the base vocabulary is restricted to entries which occur in at least 3 genres (8,554 entries), since this turned out to give the most stable ranking for adjusted frequency across three category divisions (genre, domain, text). Our base vocabulary, therefore, consists of those words that are not genre and domain dependent, given the subdivisions of SUC. The top-ranked entries are mostly function words, but also stylistically neutral content words, e.g. words that are part of multi-word function words. These are the words we think would most probably signal discourse patterns.

This base vocabulary, from which the feature sets are selected, requires relatively few resources to compute, and it is probably useful for other applications as well, e.g. as a basis for stop lists in information retrieval. It contains lexical information and some morphosyntactic information in the reduced part-of-speech part of the lemma. Although the lemmatising masks information about the actual form used, it is a way of handling disambiguation as well as data sparseness. By combining the lemma information with part-of-speech tag information, one would probably get a better prediction.
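
As an illustration of how the adjusted frequency in Equation 1 rewards dispersion, the following is a minimal sketch rather than the code used in the experiment. The function name `adjusted_frequency`, the choice to derive $d_i$ from category token counts, and the toy numbers are all assumptions made for the example.

```python
import math


def adjusted_frequency(freqs, sizes):
    """Adjusted frequency AF = (sum_i sqrt(d_i * x_i))^2 (Equation 1).

    freqs: frequency x_i of one lemma in each category.
    sizes: size of each category (e.g. token counts); the relative
           size d_i is taken as the category's share of the total
           (an assumption about how d_i is obtained).
    """
    if len(freqs) != len(sizes):
        raise ValueError("freqs and sizes must have the same length")
    total = sum(sizes)
    return sum(math.sqrt((size / total) * x) for x, size in zip(freqs, sizes)) ** 2


# Hypothetical toy data: three equally sized categories and a lemma
# with 30 occurrences in total.
even = adjusted_frequency([10, 10, 10], [1000, 1000, 1000])   # evenly spread
peaked = adjusted_frequency([30, 0, 0], [1000, 1000, 1000])   # all in one category
```

With these toy numbers, the evenly spread lemma keeps its full frequency (AF is about 30), while the lemma concentrated in one category is scaled down to about a third of that, so it would be ranked lower despite having the same raw frequency.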
The information on punctuation distribution gives valuable clues that correspond to measures such as number of words per sentence (.!?), number of clauses (,), number of attributions (–), and number of declarative, imperative, and interrogative sentences (.!?), without the extra effort of computing the actual measures.

Footnote 1: Attributed by Rosengren to J. Lanke of Lund University.

3.3 Decision trees

Frequency counts for the selected features were extracted into a feature vector for each SUC text. The counts were log-normalised for text length, in the manner frequently used for topic categorisation (Manning and Schütze, 1999, p. 580). The score, $s$, in Equation 2 reflects the fact that the importance of an increase in relative frequency is not linearly proportional to the increase.

$$s_{ij} = \mathrm{round}\left( 10 \cdot \frac{1 + \log tf_{ij}}{1 + \log l_j} \right) \qquad (2)$$

where $s_{ij}$ is the score for term $i$ in document $j$, $tf_{ij}$ is the number of occurrences of term $i$ in document $j$, and $l_j$ is the length of document $j$.

The feature vectors were then used in building classification trees through the rpart package of R (R Development Core Team, 2004; Therneau and Atkinson, 2004), which follows the description in Breiman et al. (1984) quite closely.

Decision trees are built by recursively splitting the learning sample into smaller groups by some splitting criterion until a stopping criterion is met. The splitting criterion is usually one of Information Gain (Breiman et al., 1984, p. 25f) or the Gini Index (Breiman et al., 1984, p. 103). The Information Gain criterion is based on entropy, while the Gini Index is based on estimated probability of misclassification or variance.
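
The length-normalised score of Equation 2 and the two impurity measures behind the splitting criteria can be sketched as follows. This is an illustrative sketch, not the rpart implementation: the function names are hypothetical, the natural logarithm is assumed (the text does not state the base), and giving absent terms a score of 0 is likewise an assumption.

```python
import math
from collections import Counter


def log_score(tf, doc_length):
    """Equation 2: round(10 * (1 + log tf) / (1 + log l)).

    Assumptions: natural logarithm; a term that does not occur
    (tf == 0) gets score 0, since log 0 is undefined.
    """
    if tf <= 0:
        return 0
    return round(10 * (1 + math.log(tf)) / (1 + math.log(doc_length)))


def gini(labels):
    """Gini impurity of a node: 1 - sum_j p(j|t)^2, which equals the
    sum over all pairs of distinct classes of p(j|t) * p(i|t)."""
    n = len(labels)
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def entropy(labels):
    """Entropy (in bits) of the class distribution at a node, the
    quantity underlying the Information Gain criterion."""
    n = len(labels)
    return -sum((count / n) * math.log2(count / n) for count in Counter(labels).values())


# Hypothetical node holding texts from two genres in equal numbers.
mixed_node = ["a", "a", "k", "k"]
pure_node = ["a", "a", "a", "a"]
```

Both impurity measures are maximal for the evenly mixed node and zero for the pure one; a split is chosen to reduce the impurity of the child nodes as much as possible.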
It is not really clear which gives the best tree for a given data set (Raileanu and Stoffel, 2004), so we used the default choice in rpart, the Gini Index (see Equation 3):

$$i(t) = \sum_{j \neq i}^{J} p(j|t) \cdot p(i|t) \qquad (3)$$

where $i(t)$ is the Gini Index, $t$ is a node in the tree, $J$ is the number of classes, $p(j|t)$ is the estimated class probability for the current class $j$, and $p(i|t)$ is the estimated class probability for another class $i$.

The most trivial stopping criterion is that all elements at a node have an identical feature vector or the same category, so that splitting would not further discriminate between them.

To prevent the tree from memorising the training set, i.e. overfitting the training data but performing less well on unseen data, the tree is usually pruned afterwards. We use 10-fold cross-validation and minimal cost-complexity pruning. Cost complexity is based on adding a complexity cost, i.e. the number of terminal nodes weighted by a cost parameter, to the resubstitution estimate of misclassification for a tree (Breiman et al., 1984, p. 34f, 66), see Equation 4.

$$R_\alpha(T) = R(T) + \alpha |T| \qquad (4)$$

where $R_\alpha(T)$ is the cost complexity of tree $T$, $\alpha$ is the complexity parameter, $R(T)$ is the resubstitution estimate of misclassification for tree $T$, and $|T|$ is the number of terminal nodes in $T$.

$R(T)$ is a measure of the estimated misclassification cost for the whole tree. The misclassification cost for a single item could either be uniform (1), based on the probability distribution of classes, or supplied by the user. The default in rpart is the probability distribution. The complexity parameter of the smallest tree whose cross-validated error is less than or equal to the minimum cross-validation $R(T)$ plus the corresponding cross-validation standard error is then used for pruning the tree (Maindonald and Braun, 2003, p. 275).

4 Results

4.1 Size of category set

The SUC corpus is divided into 9 genre categories and 48 domain subcategories.
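
Given these category counts, the error rate of a classifier that assigns categories uniformly at random follows directly from the number of categories, and it agrees with the Baseline 1 rows of Tables 1 to 4. The sketch below shows the arithmetic; the function names are hypothetical, and the label list used for the majority baseline is invented toy data, not the SUC distribution.

```python
from collections import Counter


def uniform_baseline_error(n_categories):
    """Expected error when every category is guessed with equal probability."""
    return 1.0 - 1.0 / n_categories


def majority_baseline_error(labels):
    """Error when the most frequent category is always predicted."""
    counts = Counter(labels)
    return 1.0 - max(counts.values()) / len(labels)


genre_uniform = uniform_baseline_error(9)     # 9 genres
domain_uniform = uniform_baseline_error(48)   # 48 domains

# Hypothetical label list, only to show how the majority baseline is computed.
toy_labels = ["a"] * 5 + ["b"] * 3 + ["k"] * 2
toy_majority = majority_baseline_error(toy_labels)
```

Expressed as percentages, `genre_uniform` and `domain_uniform` round to 88.89% and 97.92%, the values listed as Baseline 1 for the genre and domain category sets.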
Training the decision trees on the larger set of categories resulted in poorer performance, approximately 10 percentage points worse than for the smaller category set (cf. Tables 1 and 2, and Tables 3 and 4, respectively), although neither set gave good predictors in the first place. This is most likely partly due to the skewed category distribution, and to the fact that SUC was not compiled to be representative of genres, but to be representative of the distribution of genres a person might read in a year. The larger set also has fewer texts per category to learn from, which gives the smaller set an advantage. The fewer learning examples might also go hand in hand with the slightly bigger trees induced for the larger category set, since less generalisation is possible, and there is a bigger risk that the training set does not contain any samples of a less represented category.

However, both sets outperformed both the uniform category probability baseline (Baseline 1) and the majority category baseline (Baseline 2), which could be an indicator that the features used are potentially good predictors. Almost the same features appear in the trees for both category sets, and they are mostly function words or punctuation (see footnote 2), which seems to suggest that both the genre and domain divisions are based also on functional style.

As a comparison, in the study by Karlgren (2000) on the Brown corpus, the larger category sets also performed worse than the smaller ones: 2 categories gave 4% misclassifications (corresponding to the SUC category K vs. the rest), 4 categories gave 27% misclassifications (SUC categories ABC, EFG, HJ, K), and 15 categories gave 48% misclassifications (SUC categories A, B, C, E, F, G, H, J, KK, KL, KN, KR).

Footnote 2: Due to the human factor, information on the mapping from an index to the actual punctuation character was unfortunately lost, except for the full stop, and could not be regained without repeating the whole experiment.
Although the mapping information would be very valuable in a final decision tree, it did not seem crucial for the results here.

| Subset | R(T) | Splits | Features |
|---|---|---|---|
| 10 | 60.67% | 2 | och.CC; det.PF |
| 20 | 59.62% | 3 | han.PF; av.S; att.CS |
| 30 | 61.35% | 3 | han.PF; kunna.V; av.S |
| 40 | 57.40% | 5 | han.PF; eller.CC; av.S; att.CS, man.PI |
| 50 | 58.85% | 5 | = |
| 60 | 58.65% | 5 | = |
| 70 | 59.14% | 5 | = |
| 80 | 57.50% | 5 | = |
| 90 | 60.00% | 5 | = |
| 100 | 59.42% | 5 | = |
| 200 | 56.92% | 5 | = |
| 300 | 58.65% | 5 | = |
| 400 | 57.88% | 5 | = |
| 500 | 55.19% | 9 | han.PF; eller.CC; av.S; musik.NCU, man.PI; politisk.AQ; hon.PF; historia.NCU; kunna.V |
| 600 | 57.02% | 5 | han.PF; eller.CC; av.S; musik.NCU, man.PI |
| 700 | 57.12% | 5 | = |
| 800 | 59.04% | 7 | han.PF; eller.CC; av.S; scen.NCU, man.PI; politisk.AQ, vilja.V |
| 900 | 56.63% | 7 | = |
| 1000 | 57.12% | 7 | = |
| 2000 | 56.83% | 7 | = |
| Baseline 1 | 88.89% | | |
| Baseline 2 | 74.13% | | |
| Mean | 58.25% | | |
| Std | 1.54% | | |

Table 1: Genre classification results for the 9 genres of SUC, with various subsets (top N) of the ranked base vocabulary, and with contribution from all 9 genres. (= means the same features as in the row above.)

4.2 Dispersion and size of feature set

The size of the feature sets did not have much effect on performance, except for the very smallest subsets (cf. Tables 1 and 3), but the size and shape of the trees generally changed with size. Regardless of the size of the category set, however, the trees stayed stable between sets of 50 and 100 features; for genres they were stable up to 500 features.

Up to 100 features, only words with contribution from all 9 genres are in the set, and from 2000 features, there are no features with contribution from all genres (cf. Table 5). With feature set sizes over 1000, more topical content words like film ’film’ and tränare ’coach’ start to gain ground, especially for the larger category set.

Since dispersion is used in the weighted ranking of the base vocabulary, it seems as if it would be rather safe to slacken the dispersion restriction and only use the ranking restriction when selecting a subset of the base vocabulary, at least for the top 500 features.
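
The interaction between the ranking restriction and the dispersion restriction can be sketched as follows. This is only an illustration under stated assumptions: the helper names, the filter-then-truncate order, and the genre counts attached to the lemmas are hypothetical and do not reproduce the actual SUC ranking.

```python
def select_subset(ranked, top_n, min_genres=3):
    """Return the top_n entries that occur in at least min_genres genres.

    ranked: list of (lemma, n_genres) pairs, already sorted by
            adjusted frequency, best first (assumed input format).
    """
    eligible = [(lemma, g) for lemma, g in ranked if g >= min_genres]
    return eligible[:top_n]


def count_without_full_contribution(subset, n_genres_total=9):
    """Number of entries in the subset that are missing from at least one genre."""
    return sum(1 for _, g in subset if g < n_genres_total)


# Hypothetical ranking; the genre counts are invented for the example.
ranked = [("och.CC", 9), ("han.PF", 9), ("film.NCU", 7),
          ("av.S", 9), ("regi.NCU", 3), ("jfr.V", 2)]

loose = select_subset(ranked, 4, min_genres=3)    # dispersion restriction slackened
strict = select_subset(ranked, 4, min_genres=9)   # all 9 genres required
n_loose_partial = count_without_full_contribution(loose)
```

In this toy ranking, slackening the restriction lets one less dispersed content word into the top four, which is the kind of count reported per subset size in Table 5.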
It also seems as if the optimal subset is somewhere in the top 50-100 features, which supports the findings of related studies (cf. Section 2). In particular, Stamatatos et al. (2000b) used the most frequent words computed from a much larger corpus, the British National Corpus, and tested them on a more homogeneous set of genres from the Wall Street Journal. They found that the best performance was with the top 30 words set, and that performance degraded from then on. Used in combination with punctuation marks, the set was also more stable for fewer training samples than the other sets.

| Subset | R(T) | Splits | Features |
|---|---|---|---|
| 10 | 78.56% | 3 | vara.V; och.CC; och.CC |
| 20 | 76.25% | 4 | vara.V; han.PF; och.CC; inte.RG |
| 30 | 78.17% | 4 | = |
| 40 | 71.83% | 9 | vara.V; han.PF; hon.PF; inte.RG; den.DF; och.CC, men.CC; X.F*; ska.V |
| 50 | 72.40% | 9 | = |
| 60 | 72.60% | 9 | = |
| 70 | 72.89% | 9 | = |
| 80 | 71.54% | 9 | = |
| 90 | 73.37% | 9 | = |
| 100 | 72.40% | 9 | = |
| 200 | 70.00% | 9 | vara.V; han.PF; enligt.S; hon.PF; inte.RG; den.DF; och.CC; X.F*; ska.V |
| 300 | 70.10% | 9 | = |
| 400 | 71.25% | 9 | vara.V; han.PF; enligt.S; hon.PF; inte.RG; den.DF; kommun.NCU; X.F*; ska.V |
| 500 | 71.83% | 9 | = |
| 600 | 71.44% | 9 | = |
| 700 | 72.60% | 8 | vara.V; han.PF; enligt.S; hon.PF; inte.RG; den.DF; kommun.NCU; X.F* |
| 800 | 72.31% | 8 | = |
| 900 | 72.50% | 8 | = |
| 1000 | 73.56% | 8 | = |
| 2000 | 72.31% | 8 | = |
| Baseline 1 | 97.92% | | |
| Baseline 2 | 86.63% | | |
| Mean | 72.89% | | |
| Std | 2.28% | | |

Table 2: Genre classification results for the 48 domains of SUC, with various subsets (top N) of the ranked base vocabulary, and with contribution from all 9 genres. (= means the same features as in the row above.)

Apart from the features showing up in the trees, information from the next-in-rank primary splits and the surrogate splits, i.e. splits to use when values are missing, gives clues to masked features, which are almost as informative as the top-ranked primary split. For example, the third person pronoun hon ’she’ is lurking in the background when present in the feature set, while han ’he’ always shows up in the tree when present.
Another method which takes more account of covariation would therefore probably be preferable once the feature set has been selected.

| Subset | R(T) | Splits | Features |
|---|---|---|---|
| 100 | 59.42% | 5 | han.PF; eller.CC; av.S; att.CS, man.PI |
| 200 | 58.65% | 5 | = |
| 300 | 59.90% | 5 | = |
| 400 | 58.85% | 5 | = |
| 500 | 59.23% | 5 | = |
| 600 | 56.63% | 5 | han.PF; eller.CC; av.S; musik.NCU, man.PI |
| 700 | 54.71% | 9 | han.PF; eller.CC; av.S; musik.NCU, man.PI; politisk.AQ; hon.PF; historia.NCU; kunna.V |
| 800 | 55.87% | 9 | = |
| 900 | 57.69% | 9 | han.PF; eller.CC; av.S; scen.NCU, kapitel.NCN; politisk.AQ, de.PF; vilja.V, till exempel.RG |
| 1000 | 58.27% | 9 | = |
| 2000 | 60.29% | 10 | han.PF; eller.CC; av.S; film.NCU, kapitel.NCN; scen.NCU, de.PF; politisk.AQ, till exempel.RG; kunna.V |
| 3000 | 55.48% | 7 | han.PF; eller.CC; av.S; regi.NCU, kapitel.NCN; de.PF; till exempel.RG |
| 4000 | 56.25% | 7 | = |
| Baseline 1 | 88.89% | | |
| Baseline 2 | 74.13% | | |
| Mean | 58.30% | | |
| Std | 1.75% | | |

Table 3: Genre classification results for the 9 genres of SUC, with various subsets (top N) of the ranked base vocabulary, and with contribution from at least 3 genres. (= means the same features as in the row above.)

5 Concluding remarks

In this paper, we described an exploratory experiment on genre classification of Swedish texts, using as predictors various subsets of a base lemma vocabulary previously derived from the Stockholm-Umeå Corpus. Three dimensions of the base vocabulary were investigated: size of feature set, genre vs. domain category set, and dispersion.

The decision trees grown for the various subsets did not predict the genres particularly well, partly because of sparse data and a skewed distribution of genres in the corpus, but they outperformed both baselines: uniform genre probability and majority genre.

Using the coarser-grained category set of genres gave better predictions than the finer-grained set of domains. Regardless of category set, the trees stayed stable between sets of 50 and 100 features; for genres they were stable up to 500 features.
Slackening the dispersion criterion to allow for more genre-specific features increased the number of content words in the size-500 sets and above.

The optimal subset, then, seems to be one with a coarse-grained category set and a feature set from the top 50-100 ranked features. In that range, the dispersion criterion does not matter. However, to draw any substantial conclusions, one would need a corpus specifically compiled for genre analysis.

Decision trees are easy to interpret and good for feature extraction, but they tend to look at only one feature at a time, and do not account well for dependencies. Features that are potentially useful in combination are easily masked by stronger features. By looking at the lower-ranked primary splits and surrogate splits, it is possible to see recurring features that are always masked in the final trees. Therefore, other methods which take dependencies more into account should probably be used for the final classifier.

| Subset | R(T) | Splits | Features |
|---|---|---|---|
| 100 | 72.40% | 9 | vara.V; han.PF; hon.PF; inte.RG; den.DF; och.CC, men.CC; X.F*; ska.V |
| 200 | 71.06% | 10 | vara.V; han.PF; enligt.S; hon.PF; inte.RG; den.PF; företag.NCN; liten.AQ; X.F*; ska.V |
| 300 | 70.67% | 9 | vara.V; han.PF; enligt.S; hon.PF; inte.RG; X.F*; och.CC; X.F*; ska.V |
| 400 | 70.96% | 9 | vara.V; han.PF; enligt.S; hon.PF; inte.RG; X.F*; och.CC; X.F*, kommun.NCU |
| 500 | 70.38% | 9 | = |
| 600 | 72.31% | 9 | = |
| 700 | 72.69% | 9 | = |
| 800 | 71.64% | 9 | = |
| 900 | 71.64% | 9 | = |
| 1000 | 72.21% | 9 | = |
| 2000 | 69.90% | 12 | vara.V; han.PF; film.NCU; Umeå.NP; bestämmelse.NCU; hon.PF; inte.RG; X.F*; företag.NCN; med.S; utställning.NCU; X.F* |
| 3000 | 70.87% | 10 | vara.V; han.PF; film.NCU; Umeå.NP; bestämmelse.NCU; hon.PF; inte.RG; jfr.V; och.CC; X.F* |
| 4000 | 69.90% | 11 | vara.V; han.PF; film.NCU; Umeå.NP; bestämmelse.NCU; hon.PF; inte.RG; jfr.V; tränare.NCU; och.CC; X.F* |
| Baseline 1 | 97.92% | | |
| Baseline 2 | 86.63% | | |
| Mean | 72.47% | | |
| Std | 2.34% | | |

Table 4: Genre classification results for the 48 domains of SUC, with various subsets (top N) of the ranked base vocabulary,
and with contribution from at least 3 genres.

| Subset | ¬9 |
|---|---|
| 100 | 0 |
| 200 | 1 |
| 300 | 2 |
| 400 | 5 |
| 500 | 9 |
| 600 | 15 |
| 700 | 34 |
| 800 | 49 |
| 900 | 69 |
| 1000 | 105 |
| 2000 | 614 |
| 3000 | 1511 |
| 4000 | 2494 |

Table 5: Introduction of features without full contribution by set size in the base vocabulary.

References

Sture Allén. Nusvensk frekvensordbok baserad på tidningstext 2. Lemman. [Frequency dictionary of present-day Swedish based on newspaper material 2. Lemmas.]. Data linguistica 4. Almqvist & Wiksell international, Stockholm, 1971.

Shlomo Argamon and Jeff T. Dodick. Conjunction and modal assessment in genre classification: A corpus-based study of historical and experimental science writing. In Notes of AAAI Spring Symposium on Attitude and Affect in Text: Theories and Applications, pages 1–8, Stanford University, Palo Alto, California, USA, March 2004a. URL http://lingcog.iit.edu/doc/SSS404ArgamonS.pdf.

Shlomo Argamon and Jeff T. Dodick. Linking rhetoric and methodology in formal scientific writing. In Proceedings of the 26th Annual Meeting of the Cognitive Science Society (CogSci 2004), Chicago, Illinois, USA, August 2004b. URL http://lingcog.iit.edu/doc/ArgamonDodickCR.pdf.

R. Harald Baayen. Word Frequency Distributions. Text, Speech and Language Technology 18. Kluwer Academic Publishers, Dordrecht, The Netherlands; Boston, Massachusetts, USA, and London, England, 2001. ISBN 0-7923-7017-1.

Douglas Biber. Dimensions of register variation: A cross-linguistic comparison. Cambridge University Press, Cambridge, UK, 1995. ISBN 0-521-47331-4.

Leo Breiman, Jerome H. Friedman, Richard A. Olshen, and Charles J. Stone. Classification and Regression Trees. The Wadsworth Statistics/Probability Series. Wadsworth International Group, Belmont, California, USA, 1984. ISBN 0-534-98053-8.

EAGLES. Preliminary recommendations on text typology. Preliminary Recommendation EAG–TCWG–TTYP/P, Expert Advisory Group on Language Engineering Standards, June 1996. URL http://www.ilc.cnr.it/EAGLES96/texttyp/texttyp.html.

W.
Nelson Francis and Henry Kucera. Manual of information to accompany a Standard Sample of Present-day Edited American English, for use with digital computers. Providence, R.I., USA, 1979. URL http://www.hit.uib.no/icame/brown/bcm.html. Original ed. 1964, revised 1971, revised and augmented 1979.

M. A. K. Halliday. An Introduction to Functional English Grammar. Edward Arnold, London, third edition, 1994.

Stig Johansson, Eric Atwell, Roger Garside, and Geoffrey Leech. The Tagged LOB Corpus Users’ Manual. Bergen, Norway, 1986. URL http://www.hit.uib.no/icame/lobman/lob-cont.html.

Alphonse Juilland and E. Chang-Rodriguez. Frequency Dictionary of Spanish words. The Romance Languages and Their Structure, First Series S 1. Mouton & Co, The Hague, The Netherlands, 1964.

Jussi Karlgren. Stylistic experiments for information retrieval, volume 26 of SICS dissertation series. Swedish Institute of Computer Science (SICS), Kista, 2000. ISBN 91-7265-058-3. PhD thesis, Stockholm University.

Brett Kessler, Geoffrey Nunberg, and Hinrich Schütze. Automatic detection of text genre. In Proceedings of the 35th Annual Meeting of the Association for Computational Linguistics and the 8th Conference of the European Chapter of the Association for Computational Linguistics (ACL/EACL’97), pages 32–38, Madrid, Spain, July 1997. URL http://xxx.lanl.gov/abs/cmp-lg/9707002.pdf.

Ludovic Lebart, André Salem, and Lisette Berry. Exploring Textual Data. Text, Speech and Language Technology 4. Kluwer Academic Publishers, Dordrecht, The Netherlands; Boston, Massachusetts, USA, and London, England, 1998. ISBN 0-7923-4840-0.

John Maindonald and John Braun. Data Analysis and Graphics Using R: An Example-based Approach. Cambridge Series in Statistical and Probabilistic Mathematics. Cambridge University Press, Cambridge, UK, 2003. ISBN 0-521-81336-0.

Christopher D. Manning and Hinrich Schütze. Foundations of Statistical Natural Language Processing.
The MIT Press, Cambridge, Massachusetts, USA and London, England, 1999. ISBN 0-262-13360-1. Sixth printing with corrections 2003.

Lars Melin and Sven Lange. Att analysera text: Stilanalys med exempel [Analysing text: Style analysis with examples]. Studentlitteratur, Lund, third edition, 2000. ISBN 91-44-01562-3.

Charles Muller. Fréquence, dispersion et usage. Cahiers de lexicologie, VII(2):33–42, 1965.

R Development Core Team. R: A language and environment for statistical computing. R Foundation for Statistical Computing, Vienna, Austria, 2004. URL http://www.R-project.org.

Laura Elena Raileanu and Kilian Stoffel. Theoretical comparison between the Gini index and information gain criteria. Annals of Mathematics and Artificial Intelligence, 41(1):77–93, May 2004.

Inger Rosengren. Ein Frekvenzwörterbuch der deutschen Zeitungssprache. CWK Gleerup, Lund, 1972.

Marina Santini. A shallow approach to syntactic feature extraction for genre classification. In Proceedings of the 7th Annual Colloquium for the UK Special Interest Group for Computational Linguistics, Birmingham, UK, January 2004. URL http://www.cs.bham.ac.uk/~mgl/cluk/papers/santini.pdf.

Efstathios Stamatatos, Nikos Fakotakis, and George Kokkinakis. Automatic text categorization in terms of genre and author. Computational Linguistics, 26(4), 2000a.

Efstathios Stamatatos, Nikos Fakotakis, and George Kokkinakis. Text genre detection using common word frequencies. In Proceedings of the 18th International Conference on Computational Linguistics (COLING2000), Saarbrücken, Germany, July 31 - August 4 2000b.

SUC. Stockholm-Umeå corpus. CD, version 1.0, 1997.

SUC. Stockholm-Umeå corpus. Version 2.0, forthcoming.

John M. Swales. Genre Analysis: English in academic and research settings. The Cambridge applied linguistics series. Cambridge University Press, Cambridge, UK, 1990. ISBN 0-521-32869-1.

Terry M. Therneau and Beth Atkinson. rpart: Recursive Partitioning, 2004. R package version 3.1-20.
R port by Brian Ripley <ripley@stats.ox.ac.uk>. S-PLUS 6.x original at http://www.mayo.edu/hsr/Sfunc.html.

Hans van Halteren, Harald R. Baayen, Fiona Tweedie, Marco Haverkort, and Anneke Neijt. New machine learning methods demonstrate the existence of a human stylome. Journal of Quantitative Linguistics, 12(1):65–77, 2005.

A SUC taxonomy

| ID | Genre |
|---|---|
| A | Press: Reportage |
| B | Press: Editorial |
| C | Press: Reviews |
| E | Skills and Hobbies |
| F | Popular Lore |
| G | Biographies, essays |
| H | Miscellaneous |
| J | Learned and scientific writing |
| K | Imaginative prose |

| ID | Domain |
|---|---|
| AA | Political |
| AB | Community |
| AC | Financial |
| AD | Cultural |
| AE | Sports |
| AF | Spot News |
| BA | Institutional |
| BB | Debate articles |
| CA | Books |
| CB | Films |
| CC | Art |
| CD | Theater |
| CE | Music |
| CF | Artists, shows |
| CG | Radio, TV |
| EA | Hobbies, amusements |
| EB | Society press |
| EC | Occupational and trade union press |
| ED | Religion |
| FA | Humanities |
| FB | Behavioral sciences |
| FC | Social sciences |
| FD | Religion |
| FE | Complementary life styles |
| FF | History |
| FG | Health and medicine |
| FH | Natural science, technology |
| FJ | Politics |
| FK | Culture |
| GA | Biographies, memoirs |
| GB | Essays |
| HA | Government publications |
| HB | Municipal publications |
| HC | Financial reports, business |
| HD | Financial reports, non-profit organisations |
| HE | Internal publications, companies |
| HF | University publications |
| JA | Humanities |
| JB | Behavioral sciences |
| JC | Social sciences |
| JD | Religion |
| JE | Technology |
| JF | Mathematics |
| JG | Medicine |
| JH | Natural science, technology |
| KK | General fiction |
| KL | Science fiction and mystery |
| KN | Light reading |
| KR | Humour |
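
The SUC taxonomy in this appendix pairs one-letter genre IDs with two-letter domain IDs. As a minimal sketch, assuming (as the ID columns suggest, though the appendix does not state it explicitly) that a domain belongs to the genre named by the first letter of its ID, the domain-to-genre lookup could be written in Python as follows. The names `GENRES`, `genre_of` and `genre_label` are hypothetical and are not part of SUC or of the code used in the experiment.

```python
# Genre IDs and labels copied from the SUC taxonomy appendix.
GENRES = {
    "A": "Press: Reportage",
    "B": "Press: Editorial",
    "C": "Press: Reviews",
    "E": "Skills and Hobbies",
    "F": "Popular Lore",
    "G": "Biographies, essays",
    "H": "Miscellaneous",
    "J": "Learned and scientific writing",
    "K": "Imaginative prose",
}


def genre_of(domain_id: str) -> str:
    """Return the genre ID for a domain ID such as "JD".

    Assumption: the genre is the first letter of the domain ID.
    """
    genre_id = domain_id[:1].upper()
    if genre_id not in GENRES:
        raise KeyError(f"no genre for domain ID {domain_id!r}")
    return genre_id


def genre_label(domain_id: str) -> str:
    """Return the genre label for a domain ID."""
    return GENRES[genre_of(domain_id)]


if __name__ == "__main__":
    for domain in ("AA", "FD", "JD", "KK"):
        print(domain, genre_of(domain), genre_label(domain))
```

Under this assumption the two Religion domains that share a second letter, FD and JD, map to different genres (Popular Lore and Learned and scientific writing), which is why the lookup uses only the first letter of the ID.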