3. Estimation of the Linear Regression Function


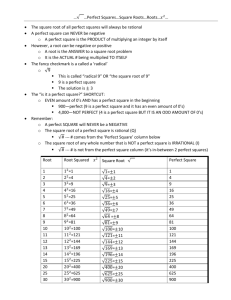

Chapter 3 Statistical Estimation of The Regression Function

3.1 Statistical Estimation

If the entire population can be observed, there is no statistical problem: all the features of the population are known. The problem of statistical inference arises when the available information consists of a limited sample that is randomly drawn from the (possibly infinitely large) population and we want to infer something about the population using the sample at hand. Statistical estimation is one aspect of statistical inference¹: it concerns the estimation of population parameters such as the population mean and variance and the coefficients of a linear regression function.

In Chapter 2 the population regression function of Y given X is defined as the conditional mean function of Y given X, written as E(Y | X). An important reason for our interest in this functional relationship is that it allows us to predict Y given values of X and to quantify the effect of a change in X on Y (measured by, say, the derivative with respect to X). Moreover, the conditional predictions of Y that are produced by the regression function are optimal under specific but generally applicable conditions. This chapter is concerned with the problem of estimating the population regression function using a sample drawn from the population.

3.1.1 Parametric Versus Nonparametric Methods

Figure 2.7 and the related discussion illustrate how a sample can be used to estimate a population regression. Since the population regression function of Y given X is the conditional mean of Y given X, we simply computed a sequence of conditional means using the sample and plotted them. Nothing in the procedure constrains the shape of the estimated regression.
Indeed, the empirical regression of Size given Price (plotted in Figure 2.7) wanders about quite irregularly, although as it does so it retains a key feature that we expect of the population regression of S given P, namely that its average slope is steeper than the major axis: the empirical regression starts off below the major axis and then climbs above it. The method used to estimate the empirical regression functions in Figure 2.7 can be described as nonparametric. While there is a huge literature on nonparametric estimation, this book is concerned almost entirely with parametric models.

¹ Two other important inference problems are hypothesis testing and the prediction of random variables.

Econometrics Text by D M Prescott © Chapter 3, 2

To illustrate the distinction between parametric and nonparametric methods, consider the equation \(Y = a + bX\). This equation has two parameters (or coefficients), a and b, and clearly the relationship between Y and X is linear. By varying the values of a and b the line's height and slope can be changed, but the fundamental relationship is constrained to be linear. If a quadratic term (and one more parameter) is added, \(Y = a + bX + cX^2\), the relationship between Y and X becomes more flexible than the linear function. Indeed, the quadratic form embraces the linear form as a special case (set c = 0). But the linear form does not embrace the quadratic form: no values of a and b can make the linear equation quadratic. Of course, the three-parameter quadratic equation is also constrained. A quadratic function can have a single maximum or a single minimum but not both. Quadratic functions are also symmetric about some axis². If further powers of X are added, each with its own parameter, the relationship becomes increasingly flexible in terms of the shape it can take. But as long as the number of parameters remains finite, the shape remains constrained to some degree.
The nonparametric case is, paradoxically, not the one with zero parameters but the limiting case as the number of parameters increases without bound. As the number of terms in the polynomial tends to infinity, the functional relationship becomes unconstrained: it can take any shape. As noted above, the method used to construct the empirical regressions in Figure 2.7 did not constrain the shape to be linear, quadratic or any other specific functional relationship. In that sense the method used in Chapter 2 to estimate the population regression can be called nonparametric.

In the context of regression estimation, the great appeal of nonparametric methods is that they do not impose a predetermined shape on the regression function, which seems like a good idea in the absence of any information as to the shape of the population regression. However, there is a cost associated with this flexibility, and that concerns the sample size. To perform well³, the nonparametric estimator generally requires a large sample (the empirical regressions in Figure 2.7 used a sample of almost 5,000 observations). In contrast, parametric methods that estimate a limited number of parameters can be applied when samples are relatively small.

² The graph of \(Y = a + bX + cX^2\) is symmetric about the line \(X = -b/(2c)\).

³ The meaning of "performing well" will be discussed later in the chapter.

The following examples by-pass the statistical aspect of the argument but nevertheless provide some intuition. If you know that Y is a linear function of X, then two points (2 observations) are sufficient to locate the line (and to determine the two parameters). If you know the relationship is quadratic, just three points are sufficient to plot the unique quadratic function that connects the three points, and therefore three observations will identify the three parameters of the quadratic equation.
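
The counting argument in these examples can be checked numerically. The sketch below is illustrative only: the helper name `fit_exact_polynomial` and the sample points are hypothetical, and NumPy is assumed to be available. It solves the linear system that forces a polynomial with as many coefficients as there are points to pass through every point.

```python
import numpy as np


def fit_exact_polynomial(x, y):
    """Coefficients (constant term first) of the polynomial with len(x)
    parameters that passes exactly through the points (x[i], y[i])."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    # Column j of the Vandermonde matrix holds x**j, so V @ coeffs = y.
    vandermonde = np.vander(x, N=len(x), increasing=True)
    return np.linalg.solve(vandermonde, y)


# Two points pin down the two parameters of a line Y = a + bX.
line_coeffs = fit_exact_polynomial([1.0, 3.0], [5.0, 9.0])

# Three points pin down the three parameters of Y = a + bX + cX^2.
quad_coeffs = fit_exact_polynomial([0.0, 1.0, 2.0], [1.0, 2.0, 7.0])

print("line (a, b):   ", line_coeffs)
print("quad (a, b, c):", quad_coeffs)
```

With noisy data an exact fit of this kind would simply reproduce the noise, which is the statistical side of the argument that these examples set aside.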
The relationship continues: in general, n points will determine the n parameters of a polynomial of order n - 1.

3.2 Principles of Estimation

As discussed in Chapter 2, there are examples of bivariate distributions in which the population regression functions are known to be linear. In the remainder of this chapter we will be concerned with linear population regressions and the methods that can be used to estimate them. We begin with a discussion of alternative approaches to statistical estimation, all of which are parametric.

3.2.1 The Method of Moments

The quantities \(E(X)\), \(E(X^2)\) and \(E(X^3)\) are referred to as the first, second and third uncentred moments of the random variable X. The centred moments are measured around the mean \(\mu = E(X)\): for example \(E(X-\mu)^2\) and \(E(X-\mu)^3\). The Method of Moments approach to estimating these quantities is simply to calculate their sample equivalents, all of which take the form of averages. Table 3.1 provides the details for the first two moments. Notice the parallels between the expressions for the population moments and their sample counterparts. First, the estimator uses the sample average \(\frac{1}{n}\sum_{i=1}^{n}\) instead of the expectation operator E. Both "take an average", one in the sample, the other in the population. Second, the estimator is a function of the observations \(X_i\), whereas the population moment is defined in terms of the random variable X.

Table 3.1

| Population Moment (parameter) | Method of Moments Estimator |
| --- | --- |
| \(\mu = E(X)\) | \(\bar{X} = \frac{1}{n}\sum_{i=1}^{n} X_i\) |
| \(\sigma^2 = E(X-\mu)^2\) | \(\hat{\sigma}^2 = \frac{1}{n}\sum_{i=1}^{n} (X_i - \bar{X})^2\) |

The justification for the Method of Moments approach to estimation is based on a Law of Large Numbers⁴ which, loosely, states that as the sample size tends to infinity the probability that the sample mean differs from the population mean tends to zero. In other words, the probability limit of the sample mean is the population mean. In fact, the probability limit of any sample average is the expected value of that quantity. In the following expressions plim refers to the probability limit.

\[ \operatorname{plim} \bar{X} = E(X) = \mu, \qquad \operatorname{plim} \frac{1}{n}\sum_{i=1}^{n} (X_i - \bar{X})^2 = E(X-\mu)^2 = \sigma^2 \]
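
The Method of Moments idea, and the Law of Large Numbers that justifies it, can be sketched with simulated data. Everything specific in the example is an assumption made for illustration: the population is taken to be normal with mean 2 and standard deviation 3, the function name `method_of_moments` is hypothetical, and NumPy is assumed to be available. The sample averages should settle near the population moments as n grows.

```python
import numpy as np


def method_of_moments(x):
    """Sample analogues of the mean, the second uncentred moment and the variance."""
    x = np.asarray(x, dtype=float)
    mean = x.mean()                      # estimates E(X)
    second_moment = (x ** 2).mean()      # estimates E(X^2)
    variance = ((x - mean) ** 2).mean()  # estimates E(X - mu)^2, divisor n
    return mean, second_moment, variance


# Hypothetical population: normal with mean 2 and standard deviation 3,
# so E(X) = 2, E(X^2) = 13 and Var(X) = 9.
rng = np.random.default_rng(seed=0)
estimates = {}
for n in (100, 10_000, 1_000_000):
    sample = rng.normal(loc=2.0, scale=3.0, size=n)
    estimates[n] = method_of_moments(sample)
    print(n, estimates[n])
```

The divisor n (rather than n - 1) is used for the variance because the Method of Moments estimator is a plain sample average.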
An estimator whose probability limit is identical to the parameter it estimates is said to be consistent. By the Law of Large Numbers, the Method of Moments (MM) estimator is a consistent estimator. Recall that the sample covariance is also a sample average, so this too is a consistent estimator of the population covariance. An important property of the probability limit is provided by the following theorem:

Theorem 3.1 If \(\hat{\theta}\) is a consistent estimator for the population parameter \(\theta\) and \(f(\cdot)\) is a continuous function, then

\[ \operatorname{plim} f(\hat{\theta}) = f(\theta). \]

⁴ See the Appendix to this chapter for more details on the Law of Large Numbers and the notion of probability limit.

Theorem 3.1 implies, for example, that \(\operatorname{plim} \bar{X}^2 = \mu^2\), whereas \(E(\bar{X}^2) = \mu^2 + \sigma^2/n \neq \mu^2\).

Now let's apply the MM estimator to the bivariate linear regression. Table 3.2 presents the details; they are based on Theorem 2.1 of Chapter 2. That theorem states that for any linear population regression \(E(Y \mid X) = \alpha + \beta X\), the slope and intercept are given by

\[ \beta = \frac{\operatorname{Cov}(X, Y)}{\operatorname{Var}(X)}, \qquad \alpha = E(Y) - \beta E(X). \]

The MM estimator is simply the sample counterpart to the expression that defines the population parameter of interest.

Table 3.2 The Method of Moments Estimator for The Bivariate Linear Regression: \(E(Y \mid X)\)

| | Population Parameters | MM Estimator |
| --- | --- | --- |
| Slope | \(\beta = \dfrac{\operatorname{Cov}(X, Y)}{\operatorname{Var}(X)}\) | \(b = \dfrac{\frac{1}{n}\sum (X_i - \bar{X})(Y_i - \bar{Y})}{\frac{1}{n}\sum (X_i - \bar{X})^2}\) |
| Intercept | \(\alpha = E(Y) - \beta E(X)\) | \(a = \bar{Y} - b\bar{X}\) |

Later in this chapter we will report the MM estimator for the linear regression of Price on Size using the house-price data that were discussed in Chapter 2.

3.2.2 The Maximum Likelihood Estimator

An important difference between the Maximum Likelihood Estimator (MLE) and the MM estimator discussed in the previous section is that the MLE demands that the specific distribution that generated the data be identified. In the previous section we assumed that the population regression is linear, but we did not specify or assume that the random variables X and Y are, for example, bivariate normal.
If it is known⁵ that X and Y are bivariate normal, then intuitively it seems sensible to take this into account when estimating the parameters of the regression function. An important property shared by MM and ML is that both estimators are consistent.

⁵ In practical situations it can rarely be known with certainty what distribution actually generated the data, but through various tests the statistician may be comfortable assuming that X and Y are, say, normally distributed.

In the context of MLE, the researcher is assumed to know the distribution from which the data are drawn. As noted in Chapter 1, it may be helpful to think of this distribution as a "data generating process" in the way that rolling a six-sided die generates data. The principle behind MLE is essentially this: given the data, what are the values of the population parameters that make the observed sample the most likely? That is, what kind of population is likely to have generated this particular sample?

Suppose there are two colleges: one specializes in sports, the other in music. Suppose the population mean height of female students at these colleges is 1.70 metres (sports) and 1.63 metres (music). A random sample of 20 students is taken from one of the colleges and the sample mean height is 1.64 metres. From which college was the sample drawn? It might never be known for certain, but the music college is more likely than the sports college⁶ to generate a sample mean of 1.64. The ML principle identifies the music college as the source of the sample.

Now consider a more formal example that illustrates how the ML principle is applied. Suppose the object is to estimate the proportion of grade 10 students that smoke cigarettes. In the population of grade 10 students the true proportion is \(\pi\). A random sample of size n reveals that \(n_1\) smoke and \(n_0\) do not.
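
As a numerical preview of the smoking example, the sketch below evaluates the likelihood of one sample over a grid of candidate proportions and picks the value that makes the sample most probable. It assumes that the number of smokers in the sample follows a binomial distribution; the counts (30 smokers, 70 non-smokers), the grid and the function name `binomial_log_likelihood` are hypothetical choices made for illustration.

```python
import math

import numpy as np


def binomial_log_likelihood(pi, n1, n0):
    """Log of the binomial probability of n1 smokers and n0 non-smokers."""
    log_k = math.log(math.comb(n1 + n0, n1))  # log of the binomial coefficient
    return log_k + n1 * np.log(pi) + n0 * np.log(1.0 - pi)


# Hypothetical sample: 30 smokers and 70 non-smokers.
n1, n0 = 30, 70

# Evaluate the log-likelihood on a grid of candidate proportions.
grid = np.linspace(0.001, 0.999, 999)
log_lik = binomial_log_likelihood(grid, n1, n0)
pi_hat = grid[np.argmax(log_lik)]

print("grid maximiser:   ", pi_hat)
print("sample proportion:", n1 / (n1 + n0))
```

Working with the logarithm of the likelihood does not change the maximiser and avoids very small numbers; a grid search stands in here for the calculus used in the text.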
The probability of observing \(n_1\) smokers and \(n_0\) non-smokers in a sample of \(n = n_1 + n_0\) is given by the binomial distribution:

\[ P(n_1 \mid \pi) = k\,\pi^{n_1}(1-\pi)^{n_0}, \]

where k is the binomial coefficient

\[ k = \frac{n!}{n_1!\,n_0!}. \]

The MLE treats the sample as given (n and \(n_1\) are thought of as fixed) and asks what value of \(\pi\) makes the actual sample most likely (most probable in this case). Let \(\hat{\pi}\) be the MLE and let \(\tilde{\pi}\) be some other value. The MLE satisfies:

\[ P(n_1 \mid \hat{\pi}) \ge P(n_1 \mid \tilde{\pi}). \]

The value of \(\hat{\pi}\) can be found using calculus: take the derivative with respect to \(\pi\) of the probability of observing the sample and set it to zero⁷. The solution is \(\hat{\pi} = n_1/n\), namely the proportion of smokers in the sample. The MLE of \(\pi\) is therefore perfectly intuitive: the proportion of smokers in the population is estimated by the proportion of smokers in the sample.

⁶ We are implicitly assuming the variance of height is the same at the two colleges.

⁷ Treat the probability as a product, i.e., use the product rule of differentiation. It can be shown that the first order condition identifies a maximum, not a minimum.

To apply the ML principle to the bivariate regression model it is necessary to specify the distribution that generated the data, such as the bivariate normal. Equation [2.9] of Chapter 2 describes the regression of Y given X for the bivariate normal distribution. It is reproduced here:

\[ Y = \alpha + \beta X + \varepsilon, \]

where \(\varepsilon\) is a normally distributed random variable with a mean of zero and a variance \(\sigma^2\). The normal density function is given by equation [1.13]. For the random variable \(\varepsilon\), it has the form

\[ f(\varepsilon) = \frac{1}{\sqrt{2\pi\sigma^2}} \exp\!\left(-\frac{\varepsilon^2}{2\sigma^2}\right), \]

where \(\pi\) in the density denotes the mathematical constant. The sample consists of n observations \((X_i, Y_i)\), i = 1, 2,..., n. The corresponding values of \(\varepsilon_i\) are not observable, but nevertheless the likelihood of observing the sample can be expressed as the product of the densities:

\[ L = \prod_{i=1}^{n} f(\varepsilon_i) = \prod_{i=1}^{n} \frac{1}{\sqrt{2\pi\sigma^2}} \exp\!\left(-\frac{\varepsilon_i^2}{2\sigma^2}\right). \]

Recall that \(\exp(a)\exp(b) = \exp(a+b)\): the product of exponentials is the exponential of the summed exponents. Apply this idea to the likelihood function and we get

\[ L = (2\pi\sigma^2)^{-n/2} \exp\!\left(-\frac{1}{2\sigma^2}\sum_{i=1}^{n} \varepsilon_i^2\right). \]

The final step is to substitute for the unobserved \(\varepsilon_i\)'s using [2.9]. This expresses the likelihood of the sample in terms of observable data:

\[ L(\alpha, \beta, \sigma^2 \mid X, Y) = (2\pi\sigma^2)^{-n/2} \exp\!\left(-\frac{1}{2\sigma^2}\sum_{i=1}^{n} (Y_i - \alpha - \beta X_i)^2\right) \qquad [3.3] \]

In equation [3.3], X and Y represent the n observed values \((X_i, Y_i)\), i = 1, 2,..., n. Note also that the likelihood function is seen as a function of the unknown parameters. The ML estimators \(\hat{\alpha}, \hat{\beta}, \hat{\sigma}^2\) are the parameter values that maximize the likelihood function, treating X and Y as fixed. If \(\tilde{\alpha}, \tilde{\beta}, \tilde{\sigma}^2\) are any other parameter values then the MLE satisfies

\[ L(\hat{\alpha}, \hat{\beta}, \hat{\sigma}^2 \mid X, Y) \ge L(\tilde{\alpha}, \tilde{\beta}, \tilde{\sigma}^2 \mid X, Y). \]

As in the previous example, calculus can be used to determine the MLE. The details are omitted and we go straight to the solution. It turns out that in this case the MLEs of \(\alpha\) and \(\beta\) are identical to the MM estimators given in Table 3.2.

3.2.3 Ordinary Least Squares

In Chapter 2 it was explained why the population regression function can be described as the "least squares function." The argument is briefly reviewed here. If the object is to find the best predictor of the random variable Y, say Y*, such that Y* minimises \(E(Y - Y^*)^2\), the solution is Y* = E(Y), the population mean of Y. Further, if X is correlated with Y and the value of X is known when the forecast of Y is made, then the solution to the optimal prediction problem is Y* = E(Y | X), i.e., the conditional mean of Y given X. This is none other than the population regression function of Y given X. The regression function therefore minimises the expected (long run average) squared prediction error.

Consider now what this implies if the population regression function is linear. In such a case it can be written as

\[ E(Y \mid X) = \alpha + \beta X. \]

Let \(Y^* = \zeta + \eta X\) be a representative linear equation. Consider the problem: determine the values of \(\zeta\) and \(\eta\) that

\[ \text{minimise } E\big[(Y - Y^*)^2 \mid X\big] = E\big[(Y - \zeta - \eta X)^2 \mid X\big]. \]

Since we know the solution to this minimisation problem is the population regression function E(Y | X), and since in this case it is linear, the solution values are \(\zeta = \alpha\) and \(\eta = \beta\), where \(\alpha\) and \(\beta\) are the specific parameter values defined above.

This analysis suggests that the least squares line drawn through a sample scatter is a viable estimator of the linear population regression function. Table 3.3 compares the properties of the linear population regression with the sample least squares regression. The sample of n observations is represented by the points \((X_i, Y_i)\) for i = 1, 2,..., n.

Table 3.3 The Least Squares Method of Estimation

| The Population | The Sample |
| --- | --- |
| \(Y = \alpha + \beta X + \varepsilon\) | \(Y_i = a + bX_i + e_i\) for i = 1, 2,..., n |
| Linear Population Regression: \(E(Y \mid X) = \alpha + \beta X\) | Estimated Linear Regression: \(\hat{Y} = a + bX\) |
| \(\alpha\) and \(\beta\) are the unique values that minimise \(E(Y - \alpha - \beta X)^2\) | The Least Squares Principle: choose a and b such that \(\frac{1}{n}\sum_{i=1}^{n}(Y_i - a - bX_i)^2\) is minimised |
| Population Parameter Values: \(\beta = \dfrac{\operatorname{Cov}(X, Y)}{\operatorname{Var}(X)}\), \(\alpha = E(Y) - \beta E(X)\) | The Least Squares Solution Values: \(b = \dfrac{\sum (X_i - \bar{X})(Y_i - \bar{Y})}{\sum (X_i - \bar{X})^2}\), \(a = \bar{Y} - b\bar{X}\) |

Table 3.3 emphasises that the population regression function is the least squares function. To estimate the parameters of this function using a sample that has been drawn from the population, we find the least squares function within the available sample. Notice that to apply the least squares principle the expectation operator E (which gives a population mean) is replaced by its sample equivalent (which gives a sample mean). Before looking at the details of how the least squares solution is obtained, consider the numerical example in Table 3.4.

Table 3.4 Annual Advertising and Sales Data for Eight Stores (Thousands of Dollars)

| Store No. | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Advertising Expenditures | 15 | 10 | 12 | 18 | 20 | 28 | 25 | 32 |
| Sales | 1000 | 865 | 945 | 930 | 990 | 1105 | 1070 | 1095 |

The artificial data in Table 3.4 represent the sales for eight stores (the dependent or Y-axis variable) together with each store's advertising expenditure (the explanatory or X-axis variable). The data are plotted in Figure 3.1 along with the least squares regression line.
Figure 3.1 The Least Squares Regression (Sales plotted against Advertising Expenditure with the fitted line \(\hat{Y} = a + bX\); the point (X = 18, Y = 930) is marked together with its predicted value \(\hat{Y} = 800 + 10(18) = 980\) and its residual e = -50.)

The least squares equation is written as \(\hat{Y} = a + bX\). For each data point, the vertical distance from the point to the least squares line is referred to as the least squares residual, which is represented by the symbol e. For the ith data point \((X_i, Y_i)\), the least squares residual is

\[ e_i = Y_i - \hat{Y}_i = Y_i - a - bX_i. \]

The least squares residual can also be described as a within-sample prediction error since it is the difference between the observed value of Y and the predicted value of Y, that is, the value predicted by the least squares regression equation. The equation of the least squares regression in Figure 3.1 is \(\hat{Y} = 800 + 10X\). In thousands of dollars, store number 4 spent 18 on advertising and had sales of 930. The L.S. regression line predicts sales of 800 + 10(18) = 980 (thousands of $). The prediction error is therefore 930 - 980 = -50 (thousands of $). Notice that all the data points below the L.S. regression line have negative residuals since they are over-predicted by the L.S. regression, while all points above the line have positive residuals.

3.2.4 Solving the Least Squares Problem

The slope and the intercept of the L.S. regression line are chosen in such a way as to minimise the sum of squared residuals, SSR. If the slope and intercept are changed, the residuals will change as well and so too will the sum of squared residuals. In short, SSR is a function of a and b which can be written as follows:

\[ SSR(a, b) = \sum_{i=1}^{n} e_i^2 = \sum_{i=1}^{n} (Y_i - a - bX_i)^2. \]

The solution to the L.S. minimisation problem can be found by setting to zero the first derivatives of SSR(a, b) with respect to a and b. The pair of first order conditions provides two equations that determine the solution values of a and b.
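
The residual for store number 4 and the behaviour of SSR(a, b) can be reproduced directly from the data in Table 3.4. The sketch below assumes NumPy is available; the function names `residuals` and `ssr` and the four perturbed (a, b) pairs are hypothetical choices made for illustration.

```python
import numpy as np

# Table 3.4: advertising (X) and sales (Y), thousands of dollars.
advertising = np.array([15, 10, 12, 18, 20, 28, 25, 32], dtype=float)
sales = np.array([1000, 865, 945, 930, 990, 1105, 1070, 1095], dtype=float)


def residuals(a, b, x=advertising, y=sales):
    """Within-sample prediction errors e_i = Y_i - (a + b X_i)."""
    return y - (a + b * x)


def ssr(a, b, x=advertising, y=sales):
    """Sum of squared residuals for the line Y = a + bX."""
    return float(np.sum(residuals(a, b, x, y) ** 2))


store_4_residual = residuals(800.0, 10.0)[3]  # store number 4 is index 3
print("store 4 residual:", store_4_residual)
print("SSR(800, 10):    ", ssr(800.0, 10.0))

# Moving the intercept or the slope away from (800, 10) raises SSR.
perturbed = [(790.0, 10.0), (810.0, 10.0), (800.0, 9.5), (800.0, 10.5)]
for a, b in perturbed:
    print(f"SSR({a}, {b}) =", ssr(a, b))
```

Checking a handful of nearby lines is not a proof that (800, 10) is the minimum; the first order conditions derived in this section supply that argument.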
The two partial derivatives are shown in equations [3.4] and [3.5].

\[ \frac{\partial SSR}{\partial a} = \frac{\partial}{\partial a} \sum_{i=1}^{n} (Y_i - a - bX_i)^2 = 0 \qquad [3.4] \]

\[ \frac{\partial SSR}{\partial b} = \frac{\partial}{\partial b} \sum_{i=1}^{n} (Y_i - a - bX_i)^2 = 0 \qquad [3.5] \]

Consider equation [3.4] first. Notice that the differential operator can pass through the summation sign because the derivative of a sum of items is the same as the sum of the derivatives of the individual items. Equation [3.4] can be written as:

\[ \sum_{i=1}^{n} \frac{\partial}{\partial a} (Y_i - a - bX_i)^2 = 0. \]

The derivative of the typical element can be evaluated in one of two ways. Either the quadratic term can be expanded and then differentiated, or the function of a function rule can be used. Using the function of a function rule we find that

\[ \frac{\partial}{\partial a} (Y_i - a - bX_i)^2 = -2\,(Y_i - a - bX_i). \]

Since the derivative is set to zero at the minimum point of the function SSR(a, b), the term -2 can be cancelled. The first order condition [3.4] can therefore be written as

\[ \sum_{i=1}^{n} (Y_i - a - bX_i) = \sum_{i=1}^{n} e_i = 0. \qquad [3.6] \]

Equation [3.6] has an interesting interpretation that will be discussed later. The final step is to rewrite [3.6] in a more useable form. Recall that the sum of n numbers is always equal to n times the mean of the numbers:

\[ \sum_{i=1}^{n} Y_i = n\bar{Y}, \qquad \sum_{i=1}^{n} X_i = n\bar{X}. \]

Also, recall that \(\sum_{i=1}^{n} a = na\). The final form of the first order condition [3.4] is

\[ \bar{Y} = a + b\bar{X}. \qquad [3.7] \]

Now consider the second of the first order conditions, [3.5]. The derivative of the typical element with respect to the slope coefficient b is

\[ \frac{\partial}{\partial b} (Y_i - a - bX_i)^2 = -2X_i\,(Y_i - a - bX_i). \]

Equation [3.5] can therefore be written as

\[ \sum_{i=1}^{n} -2X_i\,(Y_i - a - bX_i) = 0. \]

Dividing through by minus two yields the following equation, which is the counterpart of equation [3.6]:

\[ \sum_{i=1}^{n} X_i\,(Y_i - a - bX_i) = \sum_{i=1}^{n} X_i e_i = 0. \qquad [3.8] \]

After some rearrangement, equation [3.8] implies that the least squares coefficients satisfy:

\[ \sum_{i=1}^{n} X_i Y_i = a \sum_{i=1}^{n} X_i + b \sum_{i=1}^{n} X_i^2. \qquad [3.9] \]

Equations [3.7] and [3.9] can be solved for the least squares coefficients. Equation [3.7] is used to solve for the intercept a:

\[ a = \bar{Y} - b\bar{X}. \]

Now substitute for a in equation [3.9] and solve for b:

\[ b = \frac{\sum X_i Y_i - n\bar{X}\bar{Y}}{\sum X_i^2 - n\bar{X}^2}. \]

Notice that the least squares equation for the slope coefficient b can be expressed in deviation form,

\[ b = \frac{\sum x_i y_i}{\sum x_i^2}, \qquad \text{where } x_i = X_i - \bar{X} \text{ and } y_i = Y_i - \bar{Y}. \]

A Numerical Example

Table 3.5 illustrates the calculation of the least squares coefficients for the advertising/sales data that are plotted in Figure 3.1.
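
The same calculation can be sketched in a few lines of code. The example uses the standard closed-form solution of the two first order conditions, \(b = (\sum X_i Y_i - n\bar{X}\bar{Y}) / (\sum X_i^2 - n\bar{X}^2)\) and \(a = \bar{Y} - b\bar{X}\), applied to the Table 3.4 data; the variable names are hypothetical and only the Python standard library is used.

```python
# Table 3.4 data: advertising (X) and sales (Y), thousands of dollars.
X = [15, 10, 12, 18, 20, 28, 25, 32]
Y = [1000, 865, 945, 930, 990, 1105, 1070, 1095]

n = len(X)
x_bar = sum(X) / n
y_bar = sum(Y) / n
sum_x_squared = sum(x * x for x in X)
sum_xy = sum(x * y for x, y in zip(X, Y))

# Slope from the raw sums, then the intercept from Y-bar = a + b X-bar.
b = (sum_xy - n * x_bar * y_bar) / (sum_x_squared - n * x_bar ** 2)
a = y_bar - b * x_bar

# The same slope computed in deviation form.
b_dev = (sum((x - x_bar) * (y - y_bar) for x, y in zip(X, Y))
         / sum((x - x_bar) ** 2 for x in X))

print(x_bar, y_bar, sum_x_squared, sum_xy)
print("b =", b, " a =", a, " b (deviation form) =", b_dev)
```

The raw-sum and deviation forms are algebraically identical; the deviation form is usually the safer one numerically when the data values are large relative to their spread.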
The first two columns present the original data on advertising expenditure and sales at the eight stores. The least squares slope coefficient b is calculated according to the slope formula derived above, which requires the computation of \(\sum X_i Y_i\) and \(\sum X_i^2\) as well as the means of X and Y. The squared X values appear in the third column and the cross products between X and Y appear in the fourth column of Table 3.5. These sums and the means of X and Y are presented at the bottom of the appropriate columns. Finally, the least squares formulae are used to compute the intercept and slope of the least squares line for these data. These calculations show that the line drawn in Figure 3.1 is indeed the least squares line.

Table 3.5 Calculation of the Least Squares Coefficients

| (Advertising) \(X_i\) | (Sales) \(Y_i\) | \(X_i^2\) | \(X_i Y_i\) |
| --- | --- | --- | --- |
| 15 | 1000 | 225 | 15000 |
| 10 | 865 | 100 | 8650 |
| 12 | 945 | 144 | 11340 |
| 18 | 930 | 324 | 16740 |
| 20 | 990 | 400 | 19800 |
| 28 | 1105 | 784 | 30940 |
| 25 | 1070 | 625 | 26750 |
| 32 | 1095 | 1024 | 35040 |
| \(\bar{X} = (\sum X_i)/n = 20\) | \(\bar{Y} = (\sum Y_i)/n = 1000\) | \(\sum X_i^2 = 3626\) | \(\sum X_i Y_i = 164260\) |

\[ b = \frac{164260 - 8(20)(1000)}{3626 - 8(20)^2} = \frac{4260}{426} = 10, \qquad a = 1000 - 10(20) = 800. \]

3.2.5 Interpretation of the L.S. Regression Coefficients

The parameter \(\beta\) in Table 3.3 can be described as the slope of the population regression function E(Y | X), i.e., it is the derivative of E(Y | X) with respect to X. A more intuitive interpretation is that \(\beta\) represents the effect on the conditional mean of Y, E(Y | X), of a unit change in X. \(\beta\) therefore has units which are equal to the units of Y divided by the units of X. The L.S. estimator b estimates \(\beta\) and so it is the estimated effect on E(Y | X) of a unit change in X.

Table 3.6 shows the L.S. regression of house price on a constant and house size. These data have been described in Chapter 2. Recall that the data were collected over a six year period, 1983-87. The variable "price" records the price at which the house sold and "size" is its size in square feet.
The coefficient on SIZE is $60.5 per square foot and it represents the estimated effect on market price of an increase in SIZE of one square foot. More specifically, it is the effect on the conditional mean price (conditional on size) of a unit increase in size. Consider the population mean price of all houses that are exactly (a) 1500 square feet and (b) 1501 square feet. The difference in these conditional means is estimated to be $60.5. Note that the relationship between the conditional mean price and size is linear, so this estimate applies over the entire range of house sizes. However, it is best to think of the estimate as being particularly relevant at the sample mean size, since this is where the weight of the data is concentrated (the balance point of the size distribution). Also, since the data were collected over a period of 6 years when house prices were rising, it would be appropriate to think of the estimate of \(\beta\) as applying at a date "in the middle" of the sample period, say January 1985.

Table 3.6 The Least Squares Regression of Price on Size

Dependent variable: PRICE
Number of observations: 2515
Mean of dep. var. = 95248.7
Std. dev. of dep. var. = 43887.6

| Variable | Estimated Coefficient |
| --- | --- |
| C | 15476.9 |
| SIZE | 60.5055 |

The intercept of the L.S. regression is $15,476.90. Note that the intercept has the same units as the dependent or Y-axis variable, which is PRICE in this case. In most L.S. regressions the intercept has no meaningful interpretation. On the other hand, it is usually important to include the intercept in the equation, otherwise the estimated linear relationship between Y and X will be forced through the origin (0, 0) and this is rarely justified. It could be argued that in the current example, the predicted price of a house of zero size refers to the price of an empty lot.
However, since the sample did not include any market transactions in which empty lots were bought and sold, it is unlikely that the value of $15,476.90 is a particularly good estimate of the market value of an empty lot in, say, January 1985. L.S. chooses a slope and intercept to fit the data, and the resulting linear equation is an approximation to the population regression over the range of the available data. In this case the scatter plot is "a long way" from SIZE = 0. What is meant by "a long way"? Table 3.8 shows that the minimum SIZE in the sample is 700 square feet and the standard deviation of SIZE is 392 square feet, so SIZE = 0 is 1.8 standard deviations below the minimum size in the sample and 3.4 standard deviations below the sample mean of SIZE.

It is extremely important to bear in mind that the interpretation of a particular regression coefficient depends crucially on the list of explanatory variables that are included in the regression. To illustrate this important point, consider a model in which there are two continuous explanatory variables:

\[ E(Y \mid X_1, X_2) = \alpha + \beta_1 X_1 + \beta_2 X_2. \]

To make the example specific, you might think of \(X_1\) as house size and \(X_2\) as lot size. The coefficient \(\beta_1\) is the partial derivative of \(E(Y \mid X_1, X_2)\) with respect to \(X_1\). It is therefore the effect of a change in \(X_1\) on the conditional mean price while holding \(X_2\) constant. This "holding \(X_2\) constant" is a new condition that did not apply when \(X_2\) was not in the model. To make this point clear, compare the conditional mean of Y at two values, say \(X_1\) and \(X_1 + 1\). The change in the conditional mean is

\[ E(Y \mid X_1 + 1, X_2) - E(Y \mid X_1, X_2) = \big[\alpha + \beta_1 (X_1 + 1) + \beta_2 X_2\big] - \big[\alpha + \beta_1 X_1 + \beta_2 X_2\big] = \beta_1. \]

The important point to note is that the terms \(\beta_2 X_2\) cancel only if \(X_2\) takes the same value in the two conditional means. When we consider the coefficient \(\beta_1\) we are therefore comparing the mean price in two subpopulations of houses that have the same lot size but differ in house size by one square foot. We now turn to a model of house prices in which there are several explanatory variables.
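
The dependence of a coefficient on the list of conditioning variables can be illustrated with simulated data. The sketch below is not based on the house-price sample: the population (intercept 5, coefficients 2 and 3, and the degree of correlation between the two regressors) is entirely hypothetical, as is the helper name `least_squares`, and NumPy is assumed to be available.

```python
import numpy as np

rng = np.random.default_rng(seed=1)
n = 5_000

# Hypothetical population: X1 and X2 are positively correlated
# (think house size and lot size) and both raise the mean of Y.
x2 = rng.normal(size=n)
x1 = 0.5 * x2 + rng.normal(size=n)
y = 5.0 + 2.0 * x1 + 3.0 * x2 + rng.normal(size=n)


def least_squares(y, *regressors):
    """Least squares coefficients, intercept first."""
    design = np.column_stack([np.ones_like(y), *regressors])
    coeffs, *_ = np.linalg.lstsq(design, y, rcond=None)
    return coeffs


short_fit = least_squares(y, x1)      # conditions on X1 only
long_fit = least_squares(y, x1, x2)   # conditions on X1 and X2

print("coefficient on X1, X2 omitted: ", short_fit[1])
print("coefficient on X1, X2 included:", long_fit[1])
```

In this simulation the coefficient on X1 is larger when X2 is left out, because a larger X1 then also signals a larger X2 on average; once X2 is held constant the coefficient is close to the value 2 used to generate the data.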
The definitions of these variables are given in Table 3.7.

Table 3.7 Variable Definitions

| Symbol | Description & Units |
| --- | --- |
| PRICE | Transaction price, 1983-87 ($) |
| SIZE | House size (square feet) |
| LSIZE | Lot size (square feet) |
| AGE | Age of house at time of sale (years) |
| BATHP | Number of bathroom pieces |
| POOL | If pool exists, POOL = 1, otherwise POOL = 0 |
| SGAR | If single-car garage, SGAR = 1, otherwise SGAR = 0 |
| DGAR | If double-car garage, DGAR = 1, otherwise DGAR = 0 |
| FP | If fireplace exists, FP = 1, otherwise FP = 0 |
| BUSY_RD | BUSY_RD = 1 if on busy road, otherwise BUSY_RD = 0 |
| T | Time of sale to nearest month. T = 1 if Jan. '83; T = 2 if Feb. '83 etc. |

Table 3.8 reports summary statistics for the variables described in Table 3.7 and the L.S. regression of PRICE on ten explanatory variables plus a constant term, which allows for the intercept. The coefficient on SIZE is $41.22 per square foot, which is just 2/3 the value of the corresponding coefficient in Table 3.6. The reason for this substantial difference is that the regression in Table 3.6 conditions only on SIZE, but in Table 3.8 PRICE is conditioned on a much longer list of variables. From Table 3.8 we infer that a one square foot increase in size increases the mean price of houses by $41.22 while holding constant the lot size, the age of the house, the number of bathroom pieces and so on. If you were to walk round your neighbourhood, you would probably find that bigger houses are likely to be on bigger lots, have more bathroom pieces and perhaps have a double rather than a single garage. This is reflected in the larger L.S. regression coefficient on SIZE in Table 3.6 compared to that in Table 3.8.

Now let's turn to a few other coefficients in Table 3.8. The coefficient on LSIZE is positive, which confirms that larger lots add to the market value of houses. On the other hand, older houses sell for less.
The coefficient on AGE suggests that for every additional 10 years since construction, house prices fall by $1,891. Being on a busy road reduces the expected price by $3,215 while a fireplace is estimated to add $6,672 to market value. The coefficient on T is $1,397, which provides an estimate of how quickly prices were rising over the sample period, 1983-87. T records the month in which the transaction took place, so an increase in T of 1 means one month has passed. The data suggest that house prices rose $1,397 per month over this six year period. Note that the model is linear in T so it estimates an average monthly increase, that is, a linear time trend in prices. The model as it stands cannot reveal if prices rose slowly at first and then accelerated or rose quickly and then slowed. We would need a more sophisticated model to track anything other than a linear price path.

Table 3.8

Number of Observations: 2515

| Variable | Mean | Std Dev | Min | Max |
| --- | --- | --- | --- | --- |
| PRICE | 95248.74831 | 43887.55213 | 22500.0 | 345000.0 |
| SIZE | 1318.42346 | 391.67924 | 700.0 | 3573.0 |
| LSIZE | 6058.16581 | 3711.30361 | 1024.0 | 95928.0 |
| AGE | 32.34314 | 30.36091 | 0.0 | 150.0 |
| POOL | 0.043738 | 0.20455 | 0.0 | 1.0 |
| BATHP | 5.88867 | 2.01060 | 3.0 | 16.0 |
| FP | 0.38449 | 0.48657 | 0.0 | 1.0 |
| SGAR | 0.29463 | 0.45597 | 0.0 | 1.0 |
| DGAR | 0.11412 | 0.31801 | 0.0 | 1.0 |
| BUSY_RD | 0.11451 | 0.31850 | 0.0 | 1.0 |
| T | 37.00915 | 21.98579 | 1.0 | 72.0 |

Dependent variable: PRICE
Number of observations: 2515
Mean of dep. var. = 95248.7
Std. dev. of dep. var. = 43887.6

| Variable | Estimated Coefficient |
| --- | --- |
| C | -25768.0 |
| SIZE | 41.2247 |
| LSIZE | .789266 |
| AGE | -189.109 |
| POOL | 6284.14 |
| BATHP | 1907.28 |
| FP | 6672.45 |
| SGAR | 3816.29 |
| DGAR | 12858.7 |
| BUSY_RD | -3215.07 |
| T | 1397.08 |

3.2.6 Effects of Rescaling Data on The Least Squares Coefficients

In the previous section it was argued that a complete discussion of the least squares coefficients must include the units in which the variables are measured. This section presents two rules that show precisely how rescaling the data affects the least squares intercept and slope coefficient.
The dependent and independent variables are quantities that have two parts: one component is the numerical part that is perhaps stored in a data file or on paper. The other component is the unit of measurement. Consider a small town with a population of 25,000 people. Clearly the "population" has two parts, a pure number (25,000) and the units (a person). In symbols: Quantity = (number) times (units). The same quantity can be expressed in different ways; for example, we may prefer to reduce the number of digits we write down by recording the population in thousands of people: now Quantity = (25) times (thousands of people). Notice that this rescaling can be expressed as Quantity = (number/1000) times (1000 × units). The number component is divided by 1000 and the units component is multiplied by 1000 (the units are transformed from people to thousands of people).

In the equation \(Y = a + bX\), X and Y refer only to the number components of the relevant quantities, which is why it is so important to be aware of the units of measure. First consider rescaling the number component X by a scale factor \(m_x\); define the result to be \(X^* = m_x X\). Although \(X^*\) and X are different numbers, they represent the same quantity:

Quantity = X times (units of X) = (\(m_x X\)) times (units of X divided by \(m_x\)) = \(X^*\) times (units of \(X^*\)).

The units of \(X^*\) are the units of X divided by \(m_x\). Replacing X with \(X^*\) in the equation \(Y = a + bX\) will result in a new slope coefficient \(b^*\). Notice that Y is simply a number and it will not change as a result of this substitution, so the new right hand side \((a + b^* X^*)\) must give the same result. The intercept a remains unchanged and the product of the slope and the X-axis variable, \(b^* X^*\), is the same as before, i.e., \(b^* X^* = bX\). The previous equation implies that the new slope coefficient is \(b^* = b(X/X^*) = b/m_x\). The effect of rescaling the X-axis data is summarized in the following rule.
Rescaling Rule #1
If the X-axis data are rescaled by a multiplicative factor \(m_x\), the least squares intercept is unchanged but the least squares slope is divided by \(m_x\).

This rule is illustrated by the following example. Suppose that the advertising data had been recorded in dollars instead of thousands of dollars, but sales continue to be recorded in thousands of dollars. For example, store #1 spent $15,000 on advertising, so instead of recording 15, suppose 15,000 appeared in Table 3.4. The slope of the least squares line can be recomputed using the method presented in Table 3.5, or a computer program such as TSP could be used. The result will be \(\hat{Y} = 800 + 0.01X^*\), where \(X^*\) is advertising measured in dollars. The new slope coefficient of 0.01 still represents the effect of a one unit increase in advertising expenditures on sales. A one dollar increase in advertising leads to a sales increase of (0.01) × (units of sales) = 0.01 × $1,000 = $10. The basic conclusion remains intact and is entirely independent of the units that the data are measured in.⁸

The effects of rescaling Y by a multiplicative factor \(m_y\) can be worked out in a similar way. When Y is multiplied by \(m_y\) we obtain \(Y^* = m_y Y\). Using \(Y^*\) instead of Y to compute the least squares line amounts to multiplying the original least squares equation by \(m_y\): \(Y^* = m_y Y = (m_y a) + (m_y b)X\). In this case, both the intercept and the slope coefficient are multiplied by \(m_y\).

Rescaling Rule #2
If the Y-axis data are rescaled by a multiplicative factor \(m_y\), both the least squares intercept and slope coefficient are multiplied by \(m_y\).

To illustrate this rule, suppose that the sales data are measured in dollars while advertising figures continue to be measured in thousands of dollars. This change would cause all the numbers in the last row of Table 3.4 and all the numbers in the second column of Table 3.5 to be multiplied by \(m_y = 1000\).
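Both rescaling rules can be checked numerically. The sketch below is an illustration rather than part of the original text: it assumes the advertising and sales figures listed in Table 3.9 (both in thousands of dollars), and `least_squares` is a hypothetical helper that applies the usual closed-form formulas for the intercept and slope.

```python
# Advertising and sales, both in thousands of dollars (taken from Table 3.9).
X = [15, 10, 12, 18, 20, 28, 25, 32]
Y = [1000, 865, 945, 930, 990, 1105, 1070, 1095]


def least_squares(x, y):
    """Return (intercept, slope) of the least squares line of y on x."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    slope = sxy / sxx
    return y_bar - slope * x_bar, slope


# Original units.
a, b = least_squares(X, Y)
# Rule #1: advertising recorded in dollars, so m_x = 1000.
a1, b1 = least_squares([1000 * xi for xi in X], Y)
# Rule #2: sales recorded in dollars, so m_y = 1000.
a2, b2 = least_squares(X, [1000 * yi for yi in Y])

print("original:  ", a, b)
print("X rescaled:", a1, b1)
print("Y rescaled:", a2, b2)
```

With these data the original fit is approximately (800, 10). Rescaling X by 1000 leaves the intercept at about 800 and divides the slope to about 0.01, while rescaling Y by 1000 multiplies both coefficients, giving roughly (800000, 10000).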
If you work through the calculations in Table 3.5 using the new numbers you will find that the new intercept coefficient is 800,000, i.e., the previous intercept is multiplied by 1000. Also, the new slope coefficient is 10,000: it too is increased by a factor of 1000. The new least squares equation is \(\hat{Y} = 800{,}000 + 10{,}000X\). Again, the rescaling does not make any substantive change to the interpretation of the fitted line. A one unit increase in advertising expenditures ($1,000) raises sales by 10,000 times (units of Y), which amounts to a $10,000 increase in sales since the units of Y are simply dollars. Also, the predicted sales for store #1 are $800,000 + ($10,000)(15) = $950,000, just as before.

⁸ Notice also that the rescaling of X into \(X^*\) has no effect on the predicted value of sales for store #1. When advertising is measured in thousands of dollars, the predicted value of sales is 800 + 10 × 15 = 950, which represents $950,000. When advertising is measured in dollars, the predicted value of sales is 800 + 0.01 × 15,000 = 950, which also represents $950,000.

3.2.7 Some Important Properties of the L.S. Regression

The least squares fit has a number of important properties that can be derived from the first order conditions [3.4] and [3.5]. In this section the following properties will be demonstrated.

Least Squares Property #1
If the least squares line includes an intercept term (the line is not forced through the origin) then the sum and mean of the least squares residuals are zero, i.e., \(\sum_{i=1}^{n} e_i = 0\) and \(\bar{e} = 0\).

Least Squares Property #2
The sum of the cross products between the explanatory variable X and the least squares errors is zero, i.e., \(\sum_{i=1}^{n} X_i e_i = 0\). When an intercept is included in the least squares equation, this means that Cov(X, e) = 0 and Corr(X, e) = 0.

Least Squares Property #3
The sum of the cross products between the least squares errors and the predicted values of the dependent variable, \(\hat{Y}\), is zero, i.e., \(\sum_{i=1}^{n} \hat{Y}_i e_i = 0\).
When an intercept is included in the least squares equation this means that \(\mathrm{Cov}(\hat{Y}, e) = 0\) and \(\mathrm{Corr}(\hat{Y}, e) = 0\).

Property #1 is based on equation [3.6], which was derived from the partial derivative of the sum of squared errors with respect to the intercept (first order condition [3.4]). It is reproduced here for convenience.

\[ \sum_{i=1}^{n} (Y_i - a - bX_i) = 0 \qquad [3.6] \]

Recall that the least squares errors were defined in equation [4.3] to be \(e_i = Y_i - a - bX_i\), so equation [3.6] implies that the sum of the least squares errors is zero, that is, \(\sum_{i=1}^{n} e_i = 0\). Clearly, if the sum of the least squares errors is zero, then the average least squares error is zero as well.

Another way to think of this property of the least squares fit is that the least squares line passes through the mean point of the data, \((\bar{X}, \bar{Y})\). The mean of X in the advertising/sales example is 20 and when this is substituted into the equation of the least squares line, the result is \(\hat{Y} = 800 + 10(20) = 1000 = \bar{Y}\). In other words, when the mean value of X is substituted into the equation of the least squares line, the result is the mean value of Y. This is not an accident due to the numbers we have chosen; it is a property of least squares that holds in every case and is directly related to the fact that the sample mean least squares error is zero.

However, it is important to note that these conclusions are derived from the partial derivative of the sum of squared errors with respect to the intercept parameter a. This presupposes that least squares is free to determine the intercept parameter. If the intercept is not included (effectively, fixed at zero), then the least squares errors will generally not sum to zero and the least squares line will not pass through the sample mean.

The second of the first order conditions, [3.5], is the basis of L.S. Property #2, which says that the least squares errors are uncorrelated with the explanatory variable X.
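The remark about omitting the intercept can be illustrated numerically. The sketch below is an illustration under stated assumptions, not part of the original text: it uses the advertising and sales data from Table 3.9, and for the line forced through the origin it relies on the standard result (not derived in this section) that minimizing the sum of squared errors gives the slope \(\sum X_i Y_i / \sum X_i^2\).

```python
# Advertising and sales data (taken from Table 3.9).
X = [15, 10, 12, 18, 20, 28, 25, 32]
Y = [1000, 865, 945, 930, 990, 1105, 1070, 1095]

n = len(X)
x_bar = sum(X) / n
y_bar = sum(Y) / n

# Least squares with an intercept.
b = (sum((x - x_bar) * (y - y_bar) for x, y in zip(X, Y))
     / sum((x - x_bar) ** 2 for x in X))
a = y_bar - b * x_bar
resid = [y - a - b * x for x, y in zip(X, Y)]

# Least squares forced through the origin (intercept fixed at zero).
b0 = sum(x * y for x, y in zip(X, Y)) / sum(x * x for x in X)
resid0 = [y - b0 * x for x, y in zip(X, Y)]

print("with intercept:    sum of errors =", sum(resid))
print("through the origin: sum of errors =", sum(resid0))
print("fitted value at mean of X:", a + b * x_bar, "vs", b0 * x_bar)
print("mean of Y:", y_bar)
```

With the intercept included, the errors sum to zero and the fitted value at the mean of X equals the mean of Y. For the line through the origin, neither statement holds in this sample.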
The partial derivative of the sum of squared errors with respect to the slope coefficient b takes the form of equation [3.8]:

\[ \sum_{i=1}^{n} X_i (Y_i - a - bX_i) = 0 \qquad [3.8] \]

It has just been pointed out that the term in parentheses is the least squares error, so [3.8] can be written as

\[ \sum_{i=1}^{n} X_i e_i = 0 \]

Recall that the sample covariance between two variables Z and W is

\[ \mathrm{Cov}(Z, W) = \frac{1}{n} \sum_{i=1}^{n} (Z_i - \bar{Z})(W_i - \bar{W}) \]

Clearly, if either (or both) of the means of Z and W is zero, then the covariance formula simplifies to

\[ \mathrm{Cov}(Z, W) = \frac{1}{n} \sum_{i=1}^{n} Z_i W_i \]

It has already been shown that \(\bar{e} = 0\), so it follows from equation [3.10] that the covariance of the least squares errors and the explanatory variable X is zero, i.e., Cov(X, e) = 0. Since the numerator of the correlation coefficient between two variables is the covariance between these same variables, it also follows that e and X are uncorrelated.

Let's consider the intuition behind this property of least squares. The basic problem that least squares is trying to solve is to find the particular equation \(\hat{Y} = a + bX\) that best explains the variable Y. The value of Y is broken down into two parts, \(Y = \hat{Y} + e\). The first component, \(\hat{Y}\), is the part of Y that is explained by X: the fitted line translates changes in X into changes in predicted values of Y. The second component, e, is the error term and this is the part of Y that cannot be explained by X. But what does it mean to say that X cannot "explain" e? Suppose that X and e are positively correlated so that Cov(X, e) > 0. A scatter plot of X and e would reveal that whenever X is above its average value, e tends to be above its average value as well, and when X is below average e tends to be below average. But if this is true, then increases in X would be associated with increases in e. In other words, changes in X would "explain" changes in e. This situation is clearly not consistent with the idea that the error e represents the part of Y that cannot be explained by X.
To say that X cannot explain e is the same thing as saying X and e are uncorrelated, and this is precisely what equation [3.11] means.⁹

The calculations in Table 3.9 illustrate the two important properties of least squares that have been discussed in this section. The first two columns of Table 3.9 present the original advertising and sales data. The predicted values of Y corresponding to each level of advertising expenditures are in the third column. These predicted sales levels all lie on the least squares line. The fourth column presents the differences between actual and predicted sales, i.e., the least squares errors \(e_i\). Notice that the sum of the least squares errors is zero, i.e., \(\sum e_i = 0\). To demonstrate that the explanatory variable X is uncorrelated with the least squares errors, the fifth column presents the products \(e_i X_i\). Summing all the numbers in the fifth column shows that \(\sum e_i X_i = 0\). Since the mean error is zero, this implies that Cov(X, e) = 0, which in turn means that the correlation coefficient between X and e is also zero.

Finally, consider L.S. Property #3, which says that the predicted values of the dependent variable are uncorrelated with the least squares errors. A numerical illustration is given in Table 3.9. The products \(\hat{Y}_i e_i\) are obtained by multiplying together the elements in columns three and four. The sum of these products is

\[ \sum_{i=1}^{n} \hat{Y}_i e_i = (950)(50) + (900)(-35) + \dots + (1120)(-25) = 0 \]

The general result can be shown algebraically as follows:

\[ \sum_{i=1}^{n} \hat{Y}_i e_i = \sum_{i=1}^{n} (a + bX_i) e_i \]
\[ \phantom{\sum_{i=1}^{n} \hat{Y}_i e_i} = a \sum_{i=1}^{n} e_i + b \sum_{i=1}^{n} X_i e_i = 0 \]

Notice that the two unsubscripted constants, a and b, can be factored to the front of the summation signs. Also, the two sums in the second line are both zero as direct results of L.S. Properties #1 and #2.

⁹ In fact, if Z and W are uncorrelated we can say only that W cannot be explained by linear equations in Z (and vice versa). As shown in Chapter 2, it is possible to find examples in which Z and W are uncorrelated yet functionally related in a nonlinear way.
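The three sums discussed above can be reproduced with a few lines of code. This is a minimal sketch rather than part of the original text: it assumes the advertising and sales data from Table 3.9 and takes the least squares coefficients a = 800 and b = 10 as given by the fitted line in the text.

```python
# Advertising and sales data (taken from Table 3.9).
X = [15, 10, 12, 18, 20, 28, 25, 32]
Y = [1000, 865, 945, 930, 990, 1105, 1070, 1095]

# Least squares coefficients as reported in the text.
a, b = 800, 10

y_hat = [a + b * x for x in X]              # column 3: predicted sales
e = [y - yh for y, yh in zip(Y, y_hat)]     # column 4: least squares errors
e_x = [ei * x for ei, x in zip(e, X)]       # column 5: products e_i * X_i

sum_e = sum(e)                                          # Property #1
sum_e_x = sum(e_x)                                      # Property #2
sum_e_y_hat = sum(ei * yh for ei, yh in zip(e, y_hat))  # Property #3

print(sum_e, sum_e_x, sum_e_y_hat)
```

Because the data and coefficients are whole numbers, all three sums come out as exactly zero here. With real data the sums would typically be zero only up to rounding error.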
Since the mean error is zero, the result \(\sum \hat{Y}_i e_i = 0\) implies that \(\mathrm{Cov}(\hat{Y}, e) = 0\).

Table 3.9 Some Properties of the Least Squares Fit

| (Advertising) \(X_i\) | (Sales) \(Y_i\) | \(\hat{Y}_i\) | \(e_i\) | \(e_i X_i\) |
|---|---|---|---|---|
| 15 | 1000 | 950 | 50 | 750 |
| 10 | 865 | 900 | -35 | -350 |
| 12 | 945 | 920 | 25 | 300 |
| 18 | 930 | 980 | -50 | -900 |
| 20 | 990 | 1000 | -10 | -200 |
| 28 | 1105 | 1080 | 25 | 700 |
| 25 | 1070 | 1050 | 20 | 500 |
| 32 | 1095 | 1120 | -25 | -800 |
| \(\bar{X} = 20\) | \(\bar{Y} = 1000\) | \((\sum \hat{Y}_i)/n = 1000\) | \(\sum e_i = 0\) | \(\sum e_i X_i = 0\) |

To better understand why least squares predicted values are uncorrelated with the least squares errors, consider the advertising/sales example. Suppose that as the Vice President's research assistant you have calculated a linear relationship between Y and X that produces predicted values \(\hat{Y}\) that are positively correlated with the errors, i.e., \(\mathrm{Cov}(\hat{Y}, e) > 0\). The VP of Sales is likely to point out that your predictions seem to have a systematic error. Stores with high advertising expenditures have high predicted sales and, since \(\mathrm{Cov}(\hat{Y}, e) > 0\), these types of stores tend to have positive errors (sales are under predicted since actual sales lie above the fitted line). Also, stores with low advertising budgets and lower than average predicted sales tend to have below average (negative) errors, that is, sales are over predicted. Since there is a systematic relationship between the prediction errors and the level of predicted sales, the VP will argue that when you present a sales prediction for a store that has above average advertising expenditures, she should raise your sales prediction because she knows you systematically under predict sales in such cases. However, if you present the VP of Sales with the least squares equation, you can be confident that \(\mathrm{Cov}(\hat{Y}, e) = 0\). The least squares predicted sales figures have errors that exhibit no systematic pattern that could be used to improve the forecast.¹⁰

Finally, it should be pointed out that L.S. Property #3 actually follows from L.S. Property #2.
\[ \mathrm{Cov}(e, \hat{Y}) = \mathrm{Cov}(e, a + bX) = \mathrm{Cov}(e, a) + b\,\mathrm{Cov}(e, X) \]

Since a is a constant, Cov(e, a) = 0, and L.S. Property #2 states that Cov(e, X) = 0.

¹⁰ The fitted values of Y have been referred to as predicted values of Y, but it would be better to say they are "within sample" predicted values because the actual values of Y are known to the researcher and indeed have been used to compute the "predicted" values of Y. In a real forecasting situation the forecaster does not know what the actual value of Y will be. Such forecasts go beyond the current sample and are referred to as "out of sample" predictions or forecasts.

3.2.8 Measuring Goodness of Fit

By definition, the least squares equation provides the best fitting line through the data. But how good is the best fitting line at explaining the observed variations in sales from store to store? One way to judge how well least squares has done is to compute a statistic known as R-squared. Essentially, R-squared quantifies how useful the information on advertising is for explaining (or predicting) store sales.¹¹ The fundamental problem is to explain the variation in the dependent variable Y. The total variation in Y is referred to as the Total Sum of Squares, TSS, and is measured by

\[ TSS = \sum_{i=1}^{n} (Y_i - \bar{Y})^2 \]

Notice that TSS is closely related to the concept of the sample variance of Y, which is TSS/n. Recall that the variance is the average value of the squared deviations of Y around its mean. TSS is the total of the squared deviations of Y around its mean. Whereas the variance does not depend systematically on the size of the sample, TSS will clearly tend to increase with the number of observations.

¹¹ One should keep in mind that it often seems straightforward to explain the past but not as easy to predict the future. R-squared measures how well one can explain the available data, but it is not a guaranteed guide to the future predictive performance of the least squares fit.
An important feature of the least squares fit is that the Total Sum of Squares can be decomposed into two parts: the Regression Sum of Squares, RSS, and the Sum of Squared Residuals, SSR. The explained part of Y is \(\hat{Y}\), so \(RSS = \sum (\hat{Y}_i - \bar{Y})^2\). The unexplained part of Y is the least squares residual, e, so \(SSR = \sum e_i^2\). The decomposition property of least squares can be stated as TSS = RSS + SSR. Algebraically, the decomposition formula is:

\[ \sum_{i=1}^{n} (Y_i - \bar{Y})^2 = \sum_{i=1}^{n} (\hat{Y}_i - \bar{Y})^2 + \sum_{i=1}^{n} e_i^2 \qquad [3.12] \]

Proof

To prove this important decomposition, begin with the left hand side and substitute \(Y_i = \hat{Y}_i + e_i\):

\[ \sum_{i=1}^{n} (Y_i - \bar{Y})^2 = \sum_{i=1}^{n} \left[ e_i + (\hat{Y}_i - \bar{Y}) \right]^2 \]

Now open up the square brackets, treating \(e_i\) and \((\hat{Y}_i - \bar{Y})\) as two separate terms:

\[ \sum_{i=1}^{n} (Y_i - \bar{Y})^2 = \sum_{i=1}^{n} e_i^2 + \sum_{i=1}^{n} (\hat{Y}_i - \bar{Y})^2 + 2 \sum_{i=1}^{n} e_i (\hat{Y}_i - \bar{Y}) \]

The first two terms on the right hand side are SSR and RSS respectively, so to complete the proof it is necessary to show that the last sum is zero:

\[ \sum_{i=1}^{n} e_i (\hat{Y}_i - \bar{Y}) = \sum_{i=1}^{n} e_i \hat{Y}_i - \bar{Y} \sum_{i=1}^{n} e_i = 0 \]

Notice that on the right hand side, the first sum is zero by L.S. Property #3 and the second sum is zero by L.S. Property #1. (Notice that \(\bar{Y}\) can be brought through the summation sign because it is an unsubscripted constant.) This completes the proof that

\[ \sum_{i=1}^{n} (Y_i - \bar{Y})^2 = \sum_{i=1}^{n} e_i^2 + \sum_{i=1}^{n} (\hat{Y}_i - \bar{Y})^2 \]

that is: TSS = SSR + RSS.

This decomposition of the total sum of squares provides the foundation for the goodness of fit measure known as R-squared, or \(R^2\). Divide through by TSS and obtain

1 = RSS/TSS + SSR/TSS

which shows that the proportion of the total sum of squares that is explained by the regression (RSS/TSS) plus the proportion that remains unexplained (SSR/TSS) add up to one. R-squared is defined as the proportion of the total sum of squares that is explained, that is,

\[ R^2 = \frac{RSS}{TSS} \]

Interpreting R-squared

First, it is straightforward to show that the goodness of fit measure \(R^2\) always lies between 0 and 1. Since TSS, RSS and SSR are all sums of squared items, it follows that none of these sums can be negative. Because RSS = TSS - SSR, RSS can be no larger than TSS, so \(0 \le RSS/TSS \le 1\).
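The decomposition can be checked numerically for the advertising/sales example. The following is a minimal sketch, not part of the original text: it assumes the data in Table 3.9 and the fitted line with a = 800 and b = 10, and the variable names are chosen here for illustration.

```python
# Advertising and sales data (taken from Table 3.9).
X = [15, 10, 12, 18, 20, 28, 25, 32]
Y = [1000, 865, 945, 930, 990, 1105, 1070, 1095]

# Least squares coefficients as reported in the text.
a, b = 800, 10

n = len(Y)
y_bar = sum(Y) // n                      # mean of Y (exactly 1000 for these data)
y_hat = [a + b * x for x in X]           # fitted values
e = [y - yh for y, yh in zip(Y, y_hat)]  # least squares residuals

tss = sum((y - y_bar) ** 2 for y in Y)         # Total Sum of Squares
rss = sum((yh - y_bar) ** 2 for yh in y_hat)   # Regression Sum of Squares
ssr = sum(ei ** 2 for ei in e)                 # Sum of Squared Residuals

r_squared = rss / tss

print("TSS =", tss, " RSS =", rss, " SSR =", ssr)
print("RSS + SSR =", rss + ssr)
print("R-squared =", r_squared)
```

For these data TSS = 51200, RSS = 42600 and SSR = 8600, so the two parts add up to the total and R-squared is about 0.832: advertising accounts for roughly 83 percent of the total sum of squares of sales in this sample.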
To better understand what \(R^2\) measures, rewrite the decomposition of the total sum of squares in terms of variances by dividing equation [3.12] throughout by n, the number of observations. The result is

\[ \mathrm{Var}(Y) = \mathrm{Var}(\hat{Y}) + \mathrm{Var}(e) \]

This result could also have been found by using the variance of a sum rule (see Chapter 2):

\[ \mathrm{Var}(Y) = \mathrm{Var}(\hat{Y} + e) = \mathrm{Var}(\hat{Y}) + \mathrm{Var}(e) + 2\,\mathrm{Cov}(\hat{Y}, e) \]

But L.S. Property #3 says that \(\mathrm{Cov}(\hat{Y}, e) = 0\), which implies the variance of the dependent variable is the sum of two variances. The first of these is the variance of the explained component of Y, \(\mathrm{Var}(\hat{Y})\), and the second is the variance of the least squares residuals, the unexplained component of Y. R-squared can be expressed in terms of these variances:

\[ R^2 = \frac{\mathrm{Var}(\hat{Y})}{\mathrm{Var}(Y)} \qquad [3.15] \]

This demonstrates that R-squared measures the proportion of the unconditional variance of Y that can be explained by the least squares fit. An interesting observation that can be drawn from equations [3.12] and [3.15] is that the least squares coefficients maximize R-squared: no other line could leave a smaller share of the variance of Y unexplained. This follows from the fact that, by definition, least squares minimizes the sum of squared residuals.

Figure 3.2 illustrates the decomposition of the variance. Since the concept of "variance" is not easily represented graphically, the range is used to approximate the variance. The L.S. regression line translates the range of X, R(X), into the range of \(\hat{Y}\), \(R(\hat{Y})\). That is, the minimum value of X in the sample predicts the smallest value of \(\hat{Y}\), and similarly the maximum value of X predicts the maximum value of \(\hat{Y}\) in the sample. Notice that since \(\hat{Y}\) lies on the regression line, the range of \(\hat{Y}\) is not as large as the range of the observed values of Y, which are dispersed above and below the regression line. This illustrates the point that in all samples \(\mathrm{Var}(\hat{Y}) \le \mathrm{Var}(Y)\).

What does it mean to say that "X explains Y"?
Suppose Y is the market price of a house and X is the house size in square feet. In the housing market, prices vary from house to house and this variability can be measured by the unconditional variance of prices. It is this variance that the model seeks to explain. A regression of price on size yields least squares coefficients and a set of predicted prices that all lie on the fitted regression line. If size "explains" price then the regression equation should predict a wide range of prices for different sizes. Thus if the variance of the predicted prices is large and close to the variance of observed prices, then the regression equation explains a large portion of the variance of prices. In Figure 3.2, a steep regression line contributes to a high R-squared. A relatively flat regression line is associated with a low R-squared. Notice that in the extreme case that the regression line is horizontal (the least squares coefficient on X is precisely zero), R-squared is zero.

Figure 3.2 can also explain why R-squared is essentially unaffected by the sample size. Note that the sample size can be increased without affecting the unconditional variance of Y, the variance of the predicted value of Y or the variance of X. Figure 3.2 remains unchanged except that more and more data are packed into the parallelogram around the regression line. The quantity or density of points in this parallelogram has no bearing on R-squared: what matters is the relationship between the variances. In short, simply increasing the sample size will not help to increase the proportion of the variation in Y that can be explained by X.

Finally, the fact that the name R-squared has the term "squared" in it raises the question of what \(R = \sqrt{R^2}\) represents. It turns out that R-squared is the square of the correlation coefficient between Y and \(\hat{Y}\), so \(R = \mathrm{Corr}(Y, \hat{Y})\). It makes intuitive sense that the closer the fitted values, \(\hat{Y}\), are to Y, the higher will be the R-squared statistic. The proof of this is straightforward.

\[ \mathrm{Corr}(Y, \hat{Y}) = \frac{\mathrm{Cov}(Y, \hat{Y})}{\sqrt{\mathrm{Var}(Y)\,\mathrm{Var}(\hat{Y})}} \qquad [3.16] \]

The numerator simplifies to:

\[ \mathrm{Cov}(Y, \hat{Y}) = \mathrm{Cov}(\hat{Y} + e, \hat{Y}) = \mathrm{Cov}(\hat{Y}, \hat{Y}) + \mathrm{Cov}(e, \hat{Y}) = \mathrm{Var}(\hat{Y}) \]

To obtain the previous line we have used the fact that the covariance of a variable with itself is its variance and that \(\mathrm{Cov}(e, \hat{Y}) = 0\) by L.S. Property #3. Substituting this into [3.16], we find that

\[ \mathrm{Corr}(Y, \hat{Y}) = \frac{\mathrm{Var}(\hat{Y})}{\sqrt{\mathrm{Var}(Y)\,\mathrm{Var}(\hat{Y})}} = \sqrt{\frac{\mathrm{Var}(\hat{Y})}{\mathrm{Var}(Y)}} = \sqrt{R^2} = R \]

Figure 3.2 An Illustration of R-Squared
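The link between R and the correlation of Y with its fitted values can also be confirmed numerically. The sketch below is illustrative only: it assumes the advertising and sales data from Table 3.9 and the fitted line with a = 800 and b = 10, and `cov` is a hypothetical helper that uses the divisor n, in line with the text's description of the sample variance as TSS/n.

```python
import math

# Advertising and sales data (taken from Table 3.9).
X = [15, 10, 12, 18, 20, 28, 25, 32]
Y = [1000, 865, 945, 930, 990, 1105, 1070, 1095]

# Least squares coefficients as reported in the text.
a, b = 800, 10

n = len(Y)
y_hat = [a + b * x for x in X]


def cov(u, v):
    """Sample covariance of u and v using the divisor n."""
    u_bar = sum(u) / n
    v_bar = sum(v) / n
    return sum((ui - u_bar) * (vi - v_bar) for ui, vi in zip(u, v)) / n


var_y = cov(Y, Y)              # variance of Y
var_y_hat = cov(y_hat, y_hat)  # variance of the fitted values

# Correlation between Y and the fitted values, as in equation [3.16].
corr = cov(Y, y_hat) / math.sqrt(var_y * var_y_hat)

# R-squared as the ratio of variances, as in equation [3.15].
r_squared = var_y_hat / var_y

print("Corr(Y, Y_hat) =", corr)
print("sqrt(R-squared) =", math.sqrt(r_squared))
```

For these data both printed values are about 0.912, the square root of the R-squared of about 0.832.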