
A Parsimonious Structural Model Of Individual Demand For Multiple Related Goods∗

Andrés Musalem Kenneth C. Wilbur Patricio del Sol

February 4, 2013

∗ Andrés Musalem is Assistant Professor of Marketing at The Fuqua School of Business of Duke University. Kenneth Wilbur is Assistant Professor of Marketing at The Fuqua School of Business of Duke University. Patricio del Sol is Professor at the Industrial and Systems Engineering Department of the Pontificia Universidad Católica de Chile.

The authors thank the Chilean TV network Canal 13 for providing the data used in this paper. Please address all correspondence on this manuscript to Andrés Musalem, 1 Towerview Drive, Durham, NC 27708, Phone: (919) 660-7827, Fax: (919) 681-6245, amusalem@duke.edu.


Abstract

Nearly all theoretically motivated models of consumer demand for multiple goods assume additive separability in preferences, i.e., the consumption utility of each good x is independent of the quantity demanded of another good y. This is a strong restriction that makes the solution of the consumer’s utility maximization problem computationally tractable.

This paper shows that assuming preferences are weakly separable yields a similar simplification. It offers a theoretically founded model of consumer demand for continuous quantities of related goods. It also proposes a Bayesian estimation approach with a parsimonious parameterization that allows for corner solutions. It is the first structural model of individual demand for multiple goods which relaxes additive separability and does not suffer from a curse of dimensionality in the number of chosen goods.

The model is estimated using data on advertisers’ television audience purchases.

We find that advertisers prefer spreading expenditures across time blocks more so than spreading expenditures across networks within a time block. The model nests additively separable preferences as a special case, but the data reject this case. We illustrate how a television network could use the model to assess the consequences of different advertisement pricing policies conditional on competitive response assumptions.

Keywords: advertising, Bayesian econometrics, demand estimation, quantity demand models, television.

1 Introduction

Consider a consumer in a restaurant choosing how many beers and how many scoops of ice cream to buy. Her quantity demanded of beer will be indirectly related to her quantity demanded of ice cream by her budget constraint: more money spent on beer leaves less money for ice cream. But her demands for beer and ice cream may also be directly related. The experience of consuming one option may change the utility of consuming the other. This would happen, for example, if the bitter taste of the beer clashes with the sweet taste of the ice cream.

Such direct utility interdependencies are common. A consumer’s utility of allocating attention to television may be directly influenced by whether she is simultaneously allocating attention to the internet. A worker’s choice of housing location may directly affect her utilities of transportation options. A firm’s profits from internet search advertising may directly depend on whether it also buys internet display advertising.

These interdependent choice utilities are excluded by any additively separable utility function, that is, any function $U$ defined over an $N$-vector of consumption quantities $\vec{q} = (q_1, \ldots, q_N)$ that can be monotonically transformed into $U(\vec{q}) = \sum_{n=1}^{N} u_n(q_n)$. Such additively separable utility functions underlie the vast majority of theoretically motivated models of individual consumer demand.

However, interdependent choice utilities may be important empirical quantities. They may be needed to test theories of consumer behavior, to predict the effects of regulations or to determine marketing strategies for lines of related products. Yet nearly all structural demand models rule out these direct utility interdependencies a priori.

Additive separability has typically not been chosen for its realism. Its advantage is that it facilitates solving the consumer’s constrained utility maximization problem. However, it imposes several severe restrictions on an empirical model’s implications (Deaton and Muellbauer 1980):

• Knowledge of expenditure elasticities alone is sufficient to determine all cross-price elasticities; relative price variation plays no role in identifying those elasticities.

1 Another approach to model choice interdependencies is through multiple constraints, as in the time and budget

through the direct utility function, but these two general approaches need not be mutually exclusive.


• When the number of goods is large, Pigou’s Law holds: price elasticities are approximately proportional to expenditures.

• The marginal rate of substitution between any two goods depends only on the quantities consumed of those two goods and is independent of the amount consumed of any other good.

Furthermore, additive separability assumptions have been rejected several times in estimated aggregate demand models; see Barten (1977) for a review.

This paper proposes to replace the assumption of additive separability in direct utility with weak separability. Weak separability requires only that goods may be partitioned into subsets so that preferences within subsets can be described independently of preferences within other subsets (Gorman 1959; Deaton and Muellbauer 1980). As shown in the next section, this weaker assumption simplifies the solution of the consumer choice problem almost as much as additive separability, without imposing such strong restrictions on the substitution patterns among available goods.

The specific contribution of this paper is to generalize Kim et al. (2002) and Bhat (2008) to allow for weakly separable preferences in the direct utility model of individual demand. To the best of our knowledge, all previous approaches that relax additive separability suffer from a curse of dimensionality when the choice set is large.

The model and methodology are illustrated using data on Chilean advertisers’ television audience purchases. This is a relevant application because the incremental benefits to an advertiser of airing an advertisement during a particular program may depend on the advertiser’s other audience purchases. It may be that an advertiser has a particular segment of consumers it seeks to target repeatedly, and therefore it optimally spreads its expenditures across time blocks, concentrating spending in the programs its target segment is most likely to watch within any given time block.

Or, the advertiser may prefer to maximize its reach (the number of unique consumers exposed to its message), and therefore might prefer to spread spending across networks within a single time block, in order to reach everyone who is using television at a given point in time.

We find that most advertisers prefer spreading their expenditures across time blocks to spreading them across networks within a time block. Moreover, the empirical model nests, and rejects, additive separability as a special case. We also show how the estimated model can be used to assess the consequences of different advertisement pricing policies under different competitive pricing response scenarios.

The rest of the paper is organized as follows. Section 2 describes previous approaches used to simplify the solution of the individual consumer’s constrained utility maximization problem. Section 3 then presents the proposed model, while Section 4 introduces a Bayesian estimation strategy. Finally, Section 6 concludes the paper and discusses areas for future research.

2 Simplifications to Solve the Agent’s Choice Problem

A structural empirical model of demand requires a theory of consumer choice. The most common assumption is that the agent maximizes a utility, production or profit function subject to a resource constraint. We begin with a general description of a constrained utility maximization problem to illustrate the complexity inherent in its general formulation. Next, we review common approaches to reduce this complexity and show that the large majority of the literature has employed additively separable utility functions along with other strong restrictions. Finally, we discuss existing approaches to relax additive separability and present our proposal, which is based on weak separability in preferences.

This section focuses exclusively on the theoretical model of choice. Estimation of structural demand parameters is conditional on the theoretical model and is thus reserved for later sections.

2.1 Complexity of the General Consumer Problem

Consider an agent with a limited budget $y$ that can be allocated across goods $n = 0, 1, \ldots, N$, where $n = 0$ denotes an “outside” good and $N$ is potentially large. The agent chooses a vector of consumption quantities $\vec{q} = (q_n \geq 0,\ n = 0, 1, \ldots, N)$ to maximize a utility function $U(\vec{q}; \vec{\theta})$, which is assumed to be strictly increasing, quasi-concave and continuously differentiable in each of its arguments. $\vec{\theta}$ is a vector of individual-specific preferences which is known to the agent but unknown to the researcher.

To show the complexity that may arise from unrestricted preferences, even in a simple setting, we will initially consider a single agent in a single choice occasion. Further, we will assume that $\vec{\theta} = (\theta_0, \theta_1, \ldots, \theta_N)$, with exactly one utility parameter $\theta_n$ corresponding to each of the $N + 1$ options. In later sections we will generalize to multiple agents, multiple choice occasions and larger dimensions of $\vec{\theta}$, and we will allow each $\theta_n$ to contain both fixed parameters and unobserved components (e.g., utility shocks).

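Utility maximization requires that $\vec{q}$ solves

$$\max_{q_0 \geq 0,\, q_1 \geq 0,\, \ldots,\, q_N \geq 0} U(\vec{q}; \vec{\theta}) \quad \text{s.t.} \quad \sum_{n=0}^{N} p_n q_n \leq y, \tag{1}$$

where $p_n$ denotes the unit price of good $n$. This solution is characterized by the Kuhn-Tucker conditions

$$\frac{\partial U(\vec{q}; \vec{\theta})}{\partial q_n} = \mu p_n, \quad \text{if } q_n^* > 0, \tag{2}$$

$$\frac{\partial U(\vec{q}; \vec{\theta})}{\partial q_n} < \mu p_n, \quad \text{if } q_n^* = 0, \text{ and} \tag{3}$$

$$\sum_{n=0}^{N} p_n q_n = y, \tag{4}$$

where $\mu$ is the Lagrange multiplier.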
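To make conditions (2)-(4) concrete, the sketch below checks them for a candidate bundle and multiplier. It assumes, purely for illustration, a Cobb-Douglas utility $U(\vec{q}) = \sum_n \alpha_n \log q_n$, which is not the paper's specification but has a closed-form interior solution with multiplier $\mu = \sum_n \alpha_n / y$; the function names and numbers are hypothetical.

```python
import math

def cobb_douglas_demand(alphas, prices, budget):
    """Closed-form interior demand for the illustrative U(q) = sum_n alpha_n * log(q_n)."""
    total = sum(alphas)
    return [a * budget / (p * total) for a, p in zip(alphas, prices)]

def satisfies_kuhn_tucker(marginal_utils, prices, quantities, budget, mu, tol=1e-9):
    """Check conditions (2)-(4) for a candidate bundle and multiplier mu."""
    for mu_n, p_n, q_n in zip(marginal_utils, prices, quantities):
        if q_n > tol:
            if not math.isclose(mu_n, mu * p_n, rel_tol=tol, abs_tol=tol):
                return False          # (2) fails: chosen good not at mu * p_n
        elif not mu_n < mu * p_n:
            return False              # (3) fails: an unchosen good would be worth buying
    spent = sum(p * q for p, q in zip(prices, quantities))
    return math.isclose(spent, budget, rel_tol=tol, abs_tol=tol)   # (4): budget binds

alphas, prices, budget = [0.2, 0.3, 0.5], [1.0, 2.0, 4.0], 10.0
q_star = cobb_douglas_demand(alphas, prices, budget)
marginal = [a / q for a, q in zip(alphas, q_star)]   # dU/dq_n = alpha_n / q_n
mu_star = sum(alphas) / budget                       # multiplier for this utility
print(satisfies_kuhn_tucker(marginal, prices, q_star, budget, mu_star))       # True
print(satisfies_kuhn_tucker(marginal, prices, q_star, budget, 2 * mu_star))   # False
```

With a general, non-separable $U$ the same check applies, but finding a bundle and multiplier that pass it is the hard part the rest of this section addresses.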

This basic framework is frequently used in both theoretical and empirical work. In a purely theoretical analysis, a researcher would typically treat the parameter vector $\vec{\theta}$ as known and solve for $\vec{q}$. In empirical work, the researcher uses data on prices, quantities and budget to find the set of vectors $\vec{\theta}$ that rationalize the observed choices conditional on a chosen utility function.


We therefore require a utility function $U(\vec{q}; \vec{\theta})$ which produces at least one solution to (2)-(4) […] $\theta_n$ corresponding to goods with zero demand ($q_n = 0$) can typically only be set identified (e.g., Kim et al. 2002). While these corner solutions will play a very important role later in the paper, the remainder of the current section will focus on how to recover parameters corresponding to goods with positive demand, as this simplifies the exposition.

Without some restrictions on $U(\vec{q}; \vec{\theta})$ there is no guarantee that (2)-(4) will provide unique or closed-form solutions. If there are $M$ goods with positive demand, finding the set of utility parameters that rationalize the agent’s choices requires solving a system of $M$ equations in $M$ unknowns. For large $M$, finding this solution may be costly even for a single agent in a single choice occasion.

2.2 Common Approaches to Reduce Complexity

Structural demand models combine three approaches to reduce the complexity of the agent’s choice problem (with a few exceptions discussed below):

(a) Specify particular utility functions, such as quasi-linear, Cobb-Douglas or Constant Elasticity of Substitution (CES) to get unique, closed-form solutions.

(b) Constrain the number of positive quantities M to reduce the number of first order conditions which must be evaluated.

(c) Limit the set of possible purchase quantities q n to reduce the number of possible solutions to the system.

Certain combinations of assumptions have been used repeatedly in a variety of disciplines. We restrict our attention to those empirical models that can be applied to individual-level data, consistent with the theoretical model presented in Section 2.1; a more extensive overview was recently provided by Chintagunta and Nair (2011).


2.2.1 Discrete Choice / Unit Demand Models

One of the most common approaches to simplify the agent’s choice problem is to assume a single chosen alternative ($M = 1$) and unit demand ($q_n \in \{0, 1\}$). This reduces the number of possible solutions of the agent’s problem to $N + 1$ vectors $\vec{q}$, each with exactly one nonzero element equal to one. The analyst then only needs to specify $U(\vec{q}; \vec{\theta})$ and find the bounds on $\vec{\theta}$ that imply that the one-unit utility of the chosen alternative exceeds the one-unit utility of any other alternative. These assumptions are employed in such well-known models as Logit and Probit, usually in tandem with an indirect utility function that is consistent with a Cobb-Douglas $U(\vec{q}; \vec{\theta})$.
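As an illustration of the unit-demand simplification, the sketch below returns the single optimal unit vector and, under the standard additional assumption of i.i.d. type I extreme value utility shocks, the multinomial logit choice probabilities. The one-unit utilities and function names are hypothetical.

```python
import math

def unit_demand_choice(utilities):
    """With M = 1 and q_n in {0, 1}, the optimal bundle is the unit vector at the argmax."""
    best = max(range(len(utilities)), key=lambda n: utilities[n])
    return [1 if n == best else 0 for n in range(len(utilities))]

def logit_probabilities(utilities):
    """Multinomial logit choice probabilities, assuming i.i.d. type I extreme value shocks."""
    top = max(utilities)                          # shift for numerical stability
    weights = [math.exp(u - top) for u in utilities]
    total = sum(weights)
    return [w / total for w in weights]

one_unit_utilities = [0.0, 1.0, 0.5]   # hypothetical: outside good n = 0 and two inside goods
print(unit_demand_choice(one_unit_utilities))   # [0, 1, 0]
print(logit_probabilities(one_unit_utilities))
```

Only $N + 1$ candidate bundles need to be compared, which is what keeps these models tractable.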

2.2.2 Discrete Choice / Continuous Demand Models

Choice-quantity models drop the $q_n \in \{0, 1\}$ assumption. Since Hanemann (1984), they admit some positive quantity $q_n > 0$ of exactly one option ($M = 1$) and assume that the attributes of any non-chosen option do not affect utility. This effectively imposes additive separability between the single chosen good and any unchosen alternative.

Referring to this final assumption about preferences, Hanemann noted that “this is a restrictive assumption, but it is employed in almost all of the existing theoretical and empirical literature on discrete/continuous choice.”

2.2.3 Multiple Choice / Continuous Demand Models

Work by Hendel (1999), Kim et al. (2002), Dubé (2004) and Bhat (2008) relaxed the single chosen alternative ($M = 1$) assumption while allowing each $q_n$ to take any non-negative value. This generalization comes at the cost of imposing additive separability on utility, defined as follows:

Additive Separability Condition (ASC). A utility function $U(\vec{q}; \vec{\theta})$ is additively separable if $U$ can be expressed as the summation of subutilities from each individual consumption good, where each subutility only depends on the quantity consumed of that good and its associated utility parameter; or if there exists a strictly monotone transformation of $U(\vec{q}; \vec{\theta})$ that meets this condition.


Furthermore, this implies that the marginal utility of each good $n$ can be expressed as:

$$\frac{\partial U(\vec{q}; \vec{\theta})}{\partial q_n} = v_n(q_n; \theta_n), \tag{5}$$

where $v_n(q_n; \theta_n)$ is the first derivative of good $n$’s subutility with respect to $q_n$. ASC simplifies the Kuhn-Tucker condition associated with each $q_n > 0$ to

$$v_n(q_n; \theta_n) = p_n \mu. \tag{6}$$

Although this system will typically admit multiple solutions, fixing the value of one of the parameters produces a unique solution as long as $v_n(q_n; \theta_n)$ is a strictly monotonic function of $\theta_n$. As in Kim et al. (2002) and Bhat (2008), one can choose any of the goods receiving positive demand, label it $m$, and condition on its corresponding preference parameter $\theta_m$. $\mu$ is then solved as a function of $\theta_m$ from (6) for good $m$, i.e., $\mu = v_m(q_m; \theta_m) / p_m$. Each $\theta_n$ parameter corresponding to a positive quantity ($q_n > 0$) can in turn be solved for as a function of $\mu$ using only the Kuhn-Tucker condition associated with good $n$. This leads to $M$ systems of one equation in one unknown, far simpler than the system of $M$ equations in $M$ unknowns described in Subsection 2.1.
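The conditioning argument can be sketched in a few lines. The marginal subutility $v_n(q_n; \theta_n) = \theta_n (1 + q_n)^{\rho - 1}$ used here is an illustrative assumption (strictly increasing in $\theta_n$, with $\rho$ treated as known), and the normalization $\theta_m = 1$, the data and the function name are hypothetical.

```python
def recover_thetas_under_asc(quantities, prices, m, theta_m=1.0, rho=0.5):
    """Solve (6) one good at a time, conditioning on theta_m for a focal good m with q_m > 0.

    Illustrative assumption: v_n(q; theta) = theta * (1 + q) ** (rho - 1),
    which is strictly increasing in theta, so each equation has a unique root.
    """
    if quantities[m] <= 0:
        raise ValueError("the focal good m must have positive demand")
    mu = theta_m * (1.0 + quantities[m]) ** (rho - 1.0) / prices[m]   # (6) for good m
    thetas = {}
    for n, (q_n, p_n) in enumerate(zip(quantities, prices)):
        if q_n > 0:   # zero-demand goods are only set identified, so they are skipped here
            thetas[n] = mu * p_n / (1.0 + q_n) ** (rho - 1.0)          # (6) for good n alone
    return mu, thetas

mu, thetas = recover_thetas_under_asc([3.0, 1.0, 0.0], [2.0, 1.0, 5.0], m=0)
print(mu, thetas)
```

Each loop iteration uses only good $n$'s own condition, which is the sense in which ASC reduces the problem to one equation in one unknown per good.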

2.2.4 Models that Relax Additive Separability

We are aware of four approaches to estimate demand at the individual level that simplify the agent’s choice problem without imposing additive separability. We view each of these approaches as a significant advance in the literature.

The first and best known approach is the nested logit model, which is compatible with a weakly separable direct utility function. However, when estimated at the individual level, the model retains the single chosen alternative and unit demand simplifications and therefore cannot be used to model individual demand for multiple related goods.

Another approach is to treat bundles of goods differently from bundle components. For example, Manski and Sherman (1980) and Train et al. (1987) estimated discrete choice models in which each unique combination of goods constituted a bundle. However, this approach requires discrete quantities and does not exploit information available in correlations across choices of bundles containing common components.

Gentzkow (2007) generalized this approach by introducing a parameter to represent the difference between the utility of a bundle of attributes and the sum of the bundle components’ individual utilities. Again, quantities are discretized. Further, if an agent may select any pair of available goods, then a choice set of size $N$ requires estimation of $N(N - 1)/2$ parameters. This curse of dimensionality would compound if the utility function were generalized to admit utility interdependencies among triples or larger tuples of goods instead of just pairs.

Vásquez and Hanemann (2008) and Bhat and Pinjari (2010) relax the additive separability assumption in direct utility-based models. In their models, the marginal utility of consuming good $n$ contains a good-specific parameter and an interaction term with the consumption of each other good $n'$ by means of a pair-specific utility parameter $\theta_{nn'}$. A similar approach based on the indirect utility function was proposed by Song and Chintagunta (2007). However, in all three approaches, the number of parameters to be estimated grows rapidly with $N$.
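The growth behind this curse of dimensionality is a simple binomial count. The sketch below tabulates it with Python's `math.comb` (available since Python 3.8); the function name is hypothetical. By contrast, the specification proposed in Section 3 relaxes additive separability with one additional parameter.

```python
from math import comb

def interaction_parameter_counts(n_goods):
    """Number of interaction parameters when every pair (or triple) of goods gets its own."""
    pairs = comb(n_goods, 2)      # N(N-1)/2, as in the pairwise specifications
    triples = comb(n_goods, 3)    # additional parameters if triples were also allowed
    return pairs, triples

for n_goods in (10, 50, 100):
    print(n_goods, interaction_parameter_counts(n_goods))
# 10 (45, 120)
# 50 (1225, 19600)
# 100 (4950, 161700)
```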

2.3 Parsimonious Approaches to Reduce Complexity Without Imposing Additive Separability

Section 2.2.3 showed that most structural models of individual consumer demand assume additively separable utility to simplify the consumer choice problem. The approaches in Section 2.2.4 relax this restriction by introducing parameters to represent utility interdependencies, but the number of such parameters required grows rapidly with $N$. This section points out that additive separability is a stronger condition than necessary to simplify the consumer choice problem.

We first specify an alternative assumption which relaxes ASC while retaining its complexity reduction benefit. We then propose a still weaker condition which may resolve the curse of dimensionality problem by employing the researcher’s a priori information about relationships among

2.3.1 Ratios of Marginal Utilities

The following condition is necessary but not sufficient for ASC.

Ratio of Marginal Utilities Condition (RMUC). The ratio of marginal utilities of any pair of inside goods $n$ and $n'$ does not depend on any good-specific utility parameters except $\theta_n$ and $\theta_{n'}$:

$$\frac{\partial U(\vec{q}; \vec{\theta}) / \partial q_n}{\partial U(\vec{q}; \vec{\theta}) / \partial q_{n'}} = r(\vec{q}; \theta_n, \theta_{n'}). \tag{7}$$

Note that in the case of an additively separable utility function, $r(\vec{q}; \theta_n, \theta_{n'}) = v_n(q_n; \theta_n) / v_{n'}(q_{n'}; \theta_{n'})$. This ratio only depends on $\vec{q}$, $\theta_n$ and $\theta_{n'}$ and thus satisfies RMUC. However, ASC is stronger than RMUC. This can be easily seen by constructing an example of a utility specification that meets RMUC without imposing additive separability:

$$U(\vec{q}) = q_1^{\theta_1} + q_2^{\theta_2} + q_0^{\theta_0} + q_1 q_0 + q_1 q_2 + q_2 q_0 + q_1 q_2 q_0. \tag{8}$$
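Both properties of Equation (8) can be checked numerically from its analytic partial derivatives. The sketch below, with hypothetical quantities and parameter values, shows that the ratio of marginal utilities of inside goods 1 and 2 does not move when only $\theta_0$ changes (RMUC), while the marginal utility of good 1 still depends on $q_2$ (no additive separability).

```python
def marginal_utilities_eq8(q, theta):
    """Analytic partial derivatives of Equation (8); q and theta are indexed 0 (outside), 1, 2."""
    q0, q1, q2 = q
    t0, t1, t2 = theta
    d0 = t0 * q0 ** (t0 - 1) + q1 + q2 + q1 * q2
    d1 = t1 * q1 ** (t1 - 1) + q0 + q2 + q2 * q0
    d2 = t2 * q2 ** (t2 - 1) + q1 + q0 + q1 * q0
    return d0, d1, d2

q = (2.0, 1.0, 3.0)
# RMUC: the ratio for inside goods 1 and 2 is unchanged when only theta_0 changes.
for theta_0 in (0.3, 0.7):
    _, d1, d2 = marginal_utilities_eq8(q, (theta_0, 0.5, 0.5))
    print(theta_0, d1 / d2)
# Not additively separable: dU/dq_1 still moves with q_2 (the cross-partial is 1 + q_0).
print(marginal_utilities_eq8((2.0, 1.0, 3.0), (0.5, 0.5, 0.5))[1],
      marginal_utilities_eq8((2.0, 1.0, 4.0), (0.5, 0.5, 0.5))[1])
```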

A more general example that meets RMUC can also be constructed:

$$U(\vec{q}) = g\left(\sum_{n=1}^{N} u_n(q_n; \theta_n)\right) + u_0(q_0; \theta_0), \tag{9}$$

where $g(\cdot)$ is a strictly monotonically increasing and twice differentiable function.

Therefore, RMUC is less restrictive than ASC, but still yields a similar simplification to the estimation problem. Conditioning on one good-specific parameter $\theta_m$ associated with a positive demanded quantity $q_m > 0$, we use the ratio of Kuhn-Tucker conditions associated with goods $m$ and $n$ to solve for $\theta_n$ in terms of $\theta_m$. Under RMUC, the ratio of Kuhn-Tucker conditions can be written as:

$$r(\vec{q}; \theta_n, \theta_m) = \frac{p_n}{p_m}, \quad \text{if } q_n^* > 0, \tag{10}$$

$$r(\vec{q}; \theta_n, \theta_m) < \frac{p_n}{p_m}, \quad \text{if } q_n^* = 0. \tag{11}$$

2 The complexity reduction comes from the properties of the solution to the consumer’s utility maximization problem; the number of first order conditions is left unchanged.

We can now use (10) and (11) to solve for $\theta_n$ in terms of $\theta_m$ and the price and quantity data. Moreover, if each of the $u_n(q_n; \theta_n)$ functions in Equation (9) is concave in $q_n$, this utility formulation allows for positive quantities of multiple inside goods (i.e., $M > 1$).
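The sketch below applies (10) and (11) under the illustrative assumptions $u_n(q_n; \theta_n) = \theta_n \log(1 + q_n)$ and, for the final check only, $g(x) = \log(1 + x)$; the data, the normalization $\theta_m = 1$ and the function names are hypothetical. Recovering the parameters does not require knowing $g$, yet every good with positive demand ends up with the same marginal utility per unit price, as the Kuhn-Tucker conditions require.

```python
import math

def recover_thetas_under_rmuc(quantities, prices, m, theta_m=1.0):
    """Apply (10)-(11) relative to a focal inside good m with positive demand.

    Illustrative assumption: u_n(q; theta) = theta * log(1 + q), so that
    r(q; theta_n, theta_m) = theta_n * (1 + q_m) / (theta_m * (1 + q_n)).
    Returns point values for goods with q_n > 0 and strict upper bounds for q_n = 0.
    """
    points, upper_bounds = {}, {}
    for n, (q_n, p_n) in enumerate(zip(quantities, prices)):
        value = theta_m * (p_n / prices[m]) * (1.0 + q_n) / (1.0 + quantities[m])
        if q_n > 0:
            points[n] = value            # equality (10)
        else:
            upper_bounds[n] = value      # inequality (11): theta_n < value
    return points, upper_bounds

def marginal_utility_eq9(n, quantities, thetas):
    """dU/dq_n for (9) with the hypothetical choice g(x) = log(1 + x)."""
    inner = sum(t * math.log1p(q) for t, q in zip(thetas, quantities))
    return (1.0 / (1.0 + inner)) * thetas[n] / (1.0 + quantities[n])

q, p = [2.0, 1.0, 0.0], [1.0, 3.0, 2.0]   # inside goods only
points, bounds = recover_thetas_under_rmuc(q, p, m=0)
print(points, bounds)
```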

2.3.2 Subset-Specific Ratios of Marginal Utilities

Even though RMUC is weaker than ASC, RMUC places constraints on the ratios of marginal utilities of all possible pairs of inside goods. It is actually possible to replace it with a weaker condition that imposes a similar assumption on subsets of goods. Suppose that preferences are weakly separable, i.e., the set of goods $\{0, 1, \ldots, N\}$ can be partitioned into $S$ disjoint subsets $\mathcal{N}_1, \ldots, \mathcal{N}_S$. Each subset includes alternatives with consumption levels affecting the utility contribution from other alternatives within the same subset. For instance, each of these subsets might contain goods that serve a similar purpose, e.g., Leisure = {books, movies}, and exclude goods that serve a dissimilar purpose. This implies that the marginal utility of each good within the subset increases (if complements) or decreases (if substitutes) with the quantity consumed of each of the other goods within

The partitioning of the choice set into disjoint subsets has been discussed in numerous papers,

simplify the agent’s choice problem:

3 The approach described here is applied below to subsets of substitutable products. It may also be applicable to groups of complementary products, but care must be taken to ensure that subset utility functions are specified in a way that $U(\vec{q}; \vec{\theta})$ remains quasiconcave.


Subset-Specific Ratio of Marginal Utilities Condition (SRMUC). The ratio of marginal utilities of any pair of inside goods $n$ and $n'$ in subset $s$ does not depend on any good-specific utility parameters except $\theta_n$ and $\theta_{n'}$:

$$\frac{\partial U(\vec{q}; \vec{\theta}) / \partial q_n}{\partial U(\vec{q}; \vec{\theta}) / \partial q_{n'}} = r_s(\vec{q}; \theta_n, \theta_{n'}) \quad \text{for } n, n' \in \mathcal{N}_s, \tag{12}$$

where $\mathcal{N}_s$ denotes the set of all products in subset $s$. The SRMUC is weaker than the RMUC in the sense that it only restricts the ratio of marginal utilities for goods within subsets without constraining the ratios of marginal utilities of goods across subsets. However, it requires the researcher to define subsets and classify available goods.
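A minimal numerical sketch of what SRMUC does and does not restrict, assuming a hypothetical instance of Equation (15) below with $g_s(x) = a_s \log(1 + x)$ and $u_n(q_n; \theta_n) = \theta_n \log(1 + q_n)$; the scales $a_s$, the data and the names are illustrative. Changing only subset B's parameters leaves the within-subset ratio for subset A untouched, while the ratio across subsets changes.

```python
import math

def marginal_utility_eq15(n, quantities, thetas, subsets, scales):
    """dU/dq_n for a hypothetical instance of the subset-based utility in Equation (15).

    Illustrative assumptions: g_s(x) = scales[s] * log(1 + x) and
    u_n(q; theta) = theta * log(1 + q). subsets[n] is the label of good n's subset.
    """
    s = subsets[n]
    inner = sum(thetas[j] * math.log1p(quantities[j])
                for j in range(len(quantities)) if subsets[j] == s)
    return scales[s] / (1.0 + inner) * thetas[n] / (1.0 + quantities[n])

q = [1.0, 2.0, 0.5, 3.0]
subsets = ["A", "A", "B", "B"]
base = dict(thetas=[1.0, 0.5, 2.0, 1.5], scales={"A": 1.0, "B": 1.0})
alt = dict(thetas=[1.0, 0.5, 2.0, 4.0], scales={"A": 1.0, "B": 3.0})   # change subset B only

def ratio(n, k, params):
    """Ratio of marginal utilities of goods n and k under a given parameter set."""
    return (marginal_utility_eq15(n, q, subsets=subsets, **params)
            / marginal_utility_eq15(k, q, subsets=subsets, **params))

print(ratio(0, 1, base), ratio(0, 1, alt))   # within subset A: identical
print(ratio(0, 2, base), ratio(0, 2, alt))   # across subsets: differs
```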

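Under SRMUC, we can condition on a focal good with positive demand within each subset $s$ with positive demand. Denoting this focal good as $m_s$, the Kuhn-Tucker conditions for goods $m_s$ and $n \in \mathcal{N}_s$ lead to the following expressions:

$$\frac{\partial U(\vec{q}; \vec{\theta}) / \partial q_n}{\partial U(\vec{q}; \vec{\theta}) / \partial q_{m_s}} = r_s(\vec{q}; \theta_n, \theta_{m_s}) = \frac{p_n}{p_{m_s}}, \quad \text{if } q_n^* > 0, \tag{13}$$

$$\frac{\partial U(\vec{q}; \vec{\theta}) / \partial q_n}{\partial U(\vec{q}; \vec{\theta}) / \partial q_{m_s}} = r_s(\vec{q}; \theta_n, \theta_{m_s}) < \frac{p_n}{p_{m_s}}, \quad \text{if } q_n^* = 0. \tag{14}$$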

[…] $\theta_n$ as a function of $\theta_{m_s}$ and require solving a single equation or inequality in one unknown. A simple example of a utility function that satisfies SRMUC corresponds to:

$$U(\vec{q}) = \sum_{s=1}^{S} g_s\left(\sum_{n \in \mathcal{N}_s} u_n(q_n; \theta_n)\right) + u_0(q_0; \theta_0), \tag{15}$$

where, as before, $g_s(\cdot)$ is a strictly monotonically increasing and twice differentiable function. If, for example, each $g_s(\cdot)$ function and $u_n(q_n; \theta_n)$

4 It would also be possible to apply a concave transformation to the sum of subutilities from all “inside” subsets. However, in practice it may be difficult to estimate the parameters of such a transformation, as it depends on observing substitution between all inside options and the outside option, and (by definition) limited data is available on the characteristics and prices of the outside option.

3 Model

As a special case of the formulation described in Equation (15), we rediscover and generalize […] framework using aggregate data and strictly positive quantities.

We generalize the model to allow for corner solutions, as these will be common in most individual-level consumption datasets, and to allow utility parameters to depend on observed and unobserved alternative and agent characteristics. To facilitate understanding of the model and its parameters, a list of symbols with their respective meanings is presented in Table 1.

3.1 The Agent’s Utility Function

There is a many-to-one assignment of goods $n = 1, \ldots, N$ to the disjoint subsets $s = 1, \ldots, S$. Recall that the set of goods belonging to subset $s$ is denoted by $\mathcal{N}_s$. An “outside” good $n = 0$ represents spending on all other goods and does not belong to any of the $S$ subsets.

Each agent $i = 1, \ldots, I$ chooses a quantity $q_{inst} \geq 0$ of each good $n$ in each subset $s$ at each choice occasion $t$. Consumption occurs at unit prices $p_{nst}$ and is subject to a budget constraint $y_{it}$.

To simplify the exposition, we drop all $i$ and $t$ subscripts from the remainder of this section.

We model the agent’s utility as follows:

$$U(\vec{q}) = \sum_{s=1}^{S} g_s\left(\sum_{n \in \mathcal{N}_s} u_{ns}(q_{ns})\right) + u_0(q_0), \tag{16}$$

where:

(a) $u_{ns}(q_{ns})$ is the utility contribution of consuming good $n \in \mathcal{N}_s$, which should be increasing, concave and equal to zero when $q_{ns} = 0$;

(b) $g_s$ is the total utility derived from all goods in subset $s$, which should be increasing, concave and equal to zero when $\sum_{n \in \mathcal{N}_s} q_{ns} = 0$; and

(c) $u_0(q_0)$ is the utility derived from consuming the outside option, which should be increasing, concave and equal to zero when $q_0 = 0$.

Table 1: List of symbols.

| Symbol | Description |
|---|---|
| $n = 0, \ldots, N$ | good |
| $s = 1, \ldots, S$ | subset |
| $i = 1, \ldots, I$ | agent |
| $t = 1, \ldots, T$ | choice occasion |
| $U$ | total utility |
| $g_s$ | subset utility |
| $q_{inst}$ | quantity demanded |
| $\delta_{inst}$ | good-level marginal utility parameter |
| $\kappa_{ist}$ | subset-level marginal utility parameter |
| $\rho_i$ | good-level satiation (diminishing returns) parameter |
| $\gamma_i$ | subset-level satiation (diminishing returns) parameter |
| $x_{nst}$ | observed good characteristics |
| $\beta_i$ | agent valuations of good characteristics |
| $\lambda_{inst}$ | unobserved agent-good preference shock |
| $w_{st}$ | observed subset characteristics |
| $\nu_i$ | agent valuations of subset characteristics |
| $\varepsilon_{ist}$ | unobserved agent-subset preference shock |
| $\sigma_\varepsilon$ | scale parameter of agent-subset preference shocks |
| $S^{+}$ | set of subsets with positive consumption |
| $S^{0}$ | set of subsets with zero consumption |
| $\mathcal{N}^{+}_s$ | set of goods with positive consumption within subset $s$ |
| $\mathcal{N}^{0}_s$ | set of goods with zero consumption within subset $s$ |
| $m_s$ | reference good with positive consumption within subset $s$ |
| $y_{it}$ | budget |
| $p_{nst}$ | good price |
| $F(\cdot)$ | cumulative distribution function |
| $f(\cdot)$ | probability density function |
| $L$ | likelihood |
| $z_i$ | agent characteristics |
| $\phi_i$ | vector of agent preference parameters |
| | parameter matrix of mean effects of $z_i$ on $\phi_i$ |
| | covariance matrix of $\phi_i$ |

The utility contribution of good $n$ to subset $s$, $u_{ns}(q_{ns})$, is modeled as:

$$u_{ns}(q_{ns}) = \frac{\delta_{ns}}{\rho}\left(-1 + (1 + q_{ns})^{\rho}\right), \tag{17}$$

where $\delta_{ns} > 0$ is a scale parameter representing preference for good $n$, and $\rho$ is a diminishing returns (e.g., satiation) parameter that allows the marginal utility of good $n$ to change with $q_{ns}$. The terms $-1$ and $1$ enter this formulation to ensure that $u_{ns}(0) = 0$ and to allow for the possibility of optimal quantities being equal to zero.

We write subset-specific utility in a similar fashion:

$$g_s\left(\sum_{n \in \mathcal{N}_s} u_{ns}(q_{ns})\right) = \frac{\kappa_s}{\gamma}\left(-1 + \left[1 + \sum_{n \in \mathcal{N}_s} u_{ns}(q_{ns})\right]^{\gamma}\right), \tag{18}$$

where $\kappa_s > 0$ represents a preference for any positive consumption in subset $s$ and $\gamma$ is a diminishing returns parameter that allows the marginal utility of subset $s$ to change with consumption of any of its elements. Again, $-1$ and $1$ enter to ensure that subset utility is zero when its consumption is zero and to allow for the possibility of optimal subset demand being equal to zero. Note that if $\gamma = 1$, this model collapses to an additively separable formulation which is equivalent to Bhat (2008) and similar to Kim et al. (2002). Hence, by adding just one parameter ($\gamma$), we are able to relax additive separability. This property highlights the parsimony of this demand model for related goods.
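Equations (17) and (18) translate directly into code. The sketch below, with hypothetical parameter values and an illustrative finite-difference check, shows that with $\gamma = 1$ the mixed second difference of utility in two goods of the same subset vanishes (additive separability), whereas with $\gamma < 1$ it is negative, so those goods behave as substitutes. The outside good is omitted and the function names are hypothetical.

```python
def u_good(q, delta, rho):
    """Equation (17): u_ns(q) = (delta / rho) * (-1 + (1 + q) ** rho)."""
    return delta / rho * (-1.0 + (1.0 + q) ** rho)

def g_subset(x, kappa, gamma):
    """Equation (18), written as a function of x = sum of u_ns over goods in the subset."""
    return kappa / gamma * (-1.0 + (1.0 + x) ** gamma)

def inside_utility(quantities, deltas, subsets, kappas, rho, gamma):
    """Sum over subsets of g_s(sum_{n in N_s} u_ns(q_ns)); the outside good is omitted."""
    totals = {}
    for q, delta, s in zip(quantities, deltas, subsets):
        totals[s] = totals.get(s, 0.0) + u_good(q, delta, rho)
    return sum(g_subset(x, kappas[s], gamma) for s, x in totals.items())

def cross_difference(gamma, h=0.1):
    """Mixed second difference in q_1 and q_2 for two goods in the same subset."""
    def U(q1, q2):
        return inside_utility([q1, q2], [1.0, 1.0], ["A", "A"], {"A": 1.0}, 0.5, gamma)
    q1, q2 = 1.0, 2.0
    return U(q1 + h, q2 + h) - U(q1 + h, q2) - U(q1, q2 + h) + U(q1, q2)

print(cross_difference(gamma=1.0))   # ~0: additively separable special case
print(cross_difference(gamma=0.5))   # negative: goods in the subset act as substitutes
```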

5 The model could potentially allow ρ to vary across alternatives or subsets.


Finally, we assume that:

$$u_0(q_0) = \frac{\delta_0}{\rho} q_0^{\rho}, \tag{19}$$

where $\delta_0$ governs the magnitude of the outside good contribution to total utility. Equation (19) essentially treats the outside good as existing in a separate subset by itself. We also note that this formulation implies at least some arbitrarily small level of spending on the outside good by all

Given these definitions, it may be readily verified that this model satisfies SRMUC since:

$$\frac{\partial U(\vec{q}; \vec{\theta})}{\partial q_n} = \left(1 + \sum_{n' \in \mathcal{N}_s} u_{n's}(q_{n's})\right)^{\gamma - 1} \kappa_s \, \delta_{ns} (1 + q_{ns})^{\rho - 1}, \tag{20}$$

where this expression for the marginal utility implies that for any pair of goods $n$ and $n'$ belonging to the same subset:

$$\frac{\partial U(\vec{q}; \vec{\theta}) / \partial q_n}{\partial U(\vec{q}; \vec{\theta}) / \partial q_{n'}} = \frac{\delta_{ns} (1 + q_{ns})^{\rho - 1}}{\delta_{n's} (1 + q_{n's})^{\rho - 1}}. \tag{21}$$

Note that this ratio depends on the parameters of alternatives $n$ and $n'$ ($\delta_{ns}$, $\delta_{n's}$ and $\rho$), but not on any other $\delta_{js}$ parameters for $j \notin \{n, n'\}$.

3.2 Parameter Interpretation and Allocation Strategies

Four sets of parameters determine the agent’s spending within and across subsets of goods. $\delta_{ns}$ directly affects the marginal utility of consuming good $n$ within a subset $s$, while $\kappa_s$ modifies the marginal utility of consuming any alternative within subset $s$. Given these good- and subset-specific utility parameters, $\gamma$ and $\rho$ determine the optimal distribution of spending across and within subsets.

$\rho$ governs the rate at which the marginal utility of spending on any single good within a subset diminishes. Other things equal, an agent with a higher $\rho$ will tend to focus its consumption on one good within a subset, while an agent with a lower $\rho$ will tend to spread its consumption across goods within a subset. Meanwhile, $\gamma$ governs the rate at which the marginal utility of a subset diminishes as total consumption within that subset grows. Other things equal, an agent with a higher $\gamma$ will tend to focus its consumption on one subset, while an agent with a lower $\gamma$ will tend to spread its consumption across subsets.

Table 2 describes the relationship between different combinations of values of the $\rho$ and $\gamma$ parameters and the optimal strategies employed by the agent.

Table 2: Different budget allocation strategies as a function of $\rho$ and $\gamma$.

| | low $\rho$ | high $\rho$ |
|---|---|---|
| high $\gamma$ | many alternatives in few subsets | few alternatives in few subsets |
| low $\gamma$ | many alternatives in many subsets | few alternatives in many subsets |

6 We have also generalized the estimation methodology to solve and estimate a model allowing for corner solutions in outside good spending. This estimation algorithm, which is substantially more complex, is available from the authors.

This mapping between agents’ utility parameters and optimal consumption strategies plays an important role in the identification of model parameters, discussed in Subsection 4.4 below.
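The role of $\rho$ in Table 2 can be illustrated with a two-good subset. Taking the subset's total spending as given and assuming unit prices (both assumptions are for illustration only), the within-subset split follows from setting the ratio in (21) equal to one, with a corner for the less preferred good when its marginal utility at zero is too low. In the sketch below, whose numbers and function name are hypothetical, a higher $\rho$ shifts spending toward the good with the larger $\delta_{ns}$.

```python
def split_within_subset(budget, delta_1, delta_2, rho):
    """Optimal split of a given subset budget between two goods with unit prices.

    Uses (21) with p_1 = p_2 = 1: (1 + q_1) / (1 + q_2) = (delta_1 / delta_2) ** (1 / (1 - rho)).
    Assumes rho < 1 and delta_1 >= delta_2, so only good 2 can be at a corner.
    """
    if not rho < 1.0 or delta_1 < delta_2:
        raise ValueError("requires rho < 1 and delta_1 >= delta_2")
    ratio = (delta_1 / delta_2) ** (1.0 / (1.0 - rho))
    q2 = (budget + 1.0 - ratio) / (1.0 + ratio)
    if q2 <= 0.0:
        return budget, 0.0      # corner: condition (14) holds for good 2
    return budget - q2, q2

for rho in (0.2, 0.5, 0.8):
    print(rho, split_within_subset(budget=4.0, delta_1=2.0, delta_2=1.0, rho=rho))
```

Because $U$ depends on the within-subset allocation only through $\sum_{n \in \mathcal{N}_s} u_{ns}(q_{ns})$, this split does not depend on $\kappa_s$ or $\gamma$; those parameters instead govern how much is spent in each subset.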

4 Estimation

Using data on agents $i = 1, \ldots, I$ who, on choice occasions $t = 1, \ldots, T$, choose observed quantities $q_{inst}$ at prices $p_{inst}$ under budget constraints $y_{it}$, the goal of the model is to make inferences about which values of $(\delta_{inst}, \kappa_{ist}, \rho_i, \gamma_i)$ rationalize each agent’s choices on each choice occasion.

$I$, $N$ and $T$ may all be large, so the number of unknown terms to be estimated is potentially very large. Two simplifications are used to facilitate estimation. First, parameters are specified as functions of agent and good characteristics and unobservables, as in Lancaster (1971). Second, using a Hierarchical Bayes modeling approach, distributional assumptions are imposed on subsets of related parameters, while the (hyper)parameters of those distributions are estimated.

Accordingly, Section 4.1 defines model parameters as functions of characteristics data and unobservables. Section 4.2 derives a likelihood function and shows how SRMUC helps to find the parameters that rationalize observed consumer choices. Section 4.3 introduces distributional (i.e., prior) assumptions for the parameters and shows how to estimate their (hyper)parameters in a Bayesian fashion. Section 4.4 discusses identification issues.

4.1 Parameterization

Recall that δ inst is the utility parameter associated with consumption of good n in subset s by agent i on occasion t . We assume that:

$$
\delta_{inst} = \exp\left(\beta_i' x_{nst} + \epsilon_{inst}\right), \qquad (22)
$$

where $x_{nst}$ is a vector of observed good characteristics, including a constant; $\beta_i$ is the agent’s vector of preference weights for those characteristics; and $\epsilon_{inst}$ represents unobserved characteristics that modify the utility contribution of good $n$ belonging to subset $s$ for agent $i$ during period $t$. The exponential function ensures $\delta_{inst} > 0$; otherwise product $n$ would not be a "good." The outside good has no observed characteristics, so we assume $\delta_{i0t} = \exp(\epsilon_{i0t})$.

We parameterize subset utility similarly:

$$
\kappa_{ist} = \exp\left(\nu_i' w_{st} + \varepsilon_{ist}\right), \qquad (23)
$$

where $w_{st}$ is a vector of observed subset characteristics on occasion $t$; $\nu_i$ is the agent’s vector of preference weights for those characteristics; and $\varepsilon_{ist}$ denotes unobserved subset characteristics that modify the utility contribution of all goods belonging to subset $s$ for agent $i$ in period $t$.

Given these specifications for $\delta_{inst}$ and $\kappa_{ist}$, the utility model presented in Subsection 3.1 (see equation (16)) can be expressed as follows:

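$$
U(\vec q_{it}) = \sum_{s=1}^{S} \frac{e^{\nu_i' w_{st} + \varepsilon_{ist}}}{\gamma_i}\left\{-1 + \left[1 + \sum_{n \in N_{st}} e^{\beta_i' x_{nst} + \epsilon_{inst}}\, \frac{-1 + (1 + q_{inst})^{\rho_i}}{\rho_i}\right]^{\gamma_i}\right\} + \frac{e^{\epsilon_{i0t}}}{\rho_i \gamma_i}\, q_{i0t}^{\rho_i \gamma_i}. \qquad (24)
$$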
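To see how $\rho$ and $\gamma$ drive the strategies in Table 2, equation (24) can be evaluated directly. The sketch below is a minimal NumPy illustration, not the authors’ code: the function names (`utility`, `demo`, `within_gap`, `across_gap`) and the toy allocations are hypothetical, all shocks are set to zero, and every good has $\delta = \kappa = 1$, so only $\rho$ and $\gamma$ matter.

```python
import numpy as np

def utility(q, q0, x, w, beta, nu, eps, eps_sub, eps0, rho, gamma):
    """Evaluate equation (24) for one agent on one choice occasion.

    q[s], x[s], eps[s]: quantities, characteristics (N_s x K) and good-level
    shocks for the goods in subset s; w: (S x L) subset characteristics;
    eps_sub: (S,) subset shocks; q0: outside-good spending.
    """
    total = 0.0
    for s in range(len(q)):
        kappa = np.exp(w[s] @ nu + eps_sub[s])      # equation (23)
        delta = np.exp(x[s] @ beta + eps[s])         # equation (22)
        inner = 1.0 + np.sum(delta * ((1.0 + q[s]) ** rho - 1.0) / rho)
        total += kappa / gamma * (inner ** gamma - 1.0)
    return total + np.exp(eps0) / (rho * gamma) * q0 ** (rho * gamma)

def demo(alloc, rho, gamma, q0=1.0):
    """Utility of a toy allocation with identical goods (delta = kappa = 1)."""
    S = len(alloc)
    q = [np.asarray(a, dtype=float) for a in alloc]
    x = [np.ones((len(a), 1)) for a in alloc]
    eps = [np.zeros(len(a)) for a in alloc]
    return utility(q, q0, x, np.ones((S, 1)), np.zeros(1), np.zeros(1),
                   eps, np.zeros(S), 0.0, rho, gamma)

def within_gap(rho, gamma=0.5):
    # Gain from splitting 4 units over two goods in one subset vs. one good
    return demo([[2, 2], [0, 0]], rho, gamma) - demo([[4, 0], [0, 0]], rho, gamma)

def across_gap(gamma, rho=0.5):
    # Gain from 2 units in each of two subsets vs. 4 units in one subset
    return demo([[2, 0], [2, 0]], rho, gamma) - demo([[2, 2], [0, 0]], rho, gamma)

print(within_gap(0.2), within_gap(0.9))   # spreading pays off less as rho rises
print(across_gap(0.2), across_gap(0.9))   # spreading pays off less as gamma rises
```

With identical goods, spreading always raises utility when $\rho, \gamma < 1$ because the bracketed terms are concave; what $\rho$ and $\gamma$ control is the size of that gain, and hence how much heterogeneity in $\delta$ and $\kappa$ is needed before concentrating spending becomes optimal.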

Note that all errors $\epsilon_{inst}$, $\epsilon_{i0t}$ and $\varepsilon_{ist}$ are known to agent $i$ at time $t$ but not to the econometrician. Thus, the errors represent researcher uncertainty about preferences. An alternative approach to adding and interpreting error terms would be to include stochastic terms directly in the Kuhn-Tucker conditions (as in Bhat and Pinjari (2010)), implying random errors in utility maximization.

Finally, $\epsilon_{inst}$ and $\epsilon_{i0t}$ are assumed to be independently distributed extreme value with zero mode and scale $\sigma$. Unobserved subset characteristics $\varepsilon_{ist}$ are assumed to be independently normally distributed with zero mean and variance $\sigma^2_\varepsilon$. Alternative distributional assumptions on $\epsilon$ and $\varepsilon$ are feasible, but these distributions offer computational advantages when calculating the densities and probabilities required to evaluate the likelihood function of the data, as described in the next subsection.

4.2 Likelihood of the spending data

This section shows how to calculate the likelihood of the data for a single agent on a single choice occasion, so we again suppress the subscripts $i$ and $t$. Section 4.3 adds heterogeneity to accommodate multiple agents and multiple choice occasions.

Let $S^+$ be the collection of subsets with positive consumption and $S^0$ the collection of subsets with zero demand. First, we show how to calculate the likelihood of any subset $s \in S^+$. This requires finding the values and ranges of the unobserved preferences $\epsilon$ and $\varepsilon$ implied by the subset’s consumption data, using one good within that subset as a reference good. Second, we derive the probability of zero consumption in subsets $s \in S^0$. Finally, note that, given data on prices, quantities and budget constraints, spending on the outside good can be calculated directly as $q_0 = y - \sum_{s=1}^{S} \sum_{n \in N_s} p_{ns} q_{ns}$. Therefore, we only need to determine the probability of observing the quantities of the inside goods to assess the likelihood of the data.

For any subset $s \in S^+$, let $N^+_s$ denote the set of goods with positive consumption in the subset and let its complement be $N^0_s \equiv N_s \setminus N^+_s$. Define $m_s$ as the smallest index in $N^+_s$. For example, if the agent purchases 0 units of good 1, 6 units of good 2 and 4 units of good 3 in a three-good subset $s$, then $N_s = \{1, 2, 3\}$, $N^+_s = \{2, 3\}$, $N^0_s = \{1\}$ and $m_s = 2$.
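The bookkeeping above (the partition into $N^+_s$ and $N^0_s$, the reference good $m_s$, and the outside-good spending $q_0$) can be sketched as follows. The helper names and the budget and prices in the usage lines are hypothetical; indices are 1-based to match the text.

```python
import numpy as np

def partition_subset(q_s):
    """Split one subset's goods into N_s^+ and N_s^0 (1-based indices, as in
    the text) and return the reference good m_s = min N_s^+."""
    q_s = np.asarray(q_s, dtype=float)
    idx = np.arange(1, len(q_s) + 1)
    n_plus = idx[q_s > 0].tolist()
    n_zero = idx[q_s == 0].tolist()
    m_s = n_plus[0] if n_plus else None   # undefined for subsets in S^0
    return n_plus, n_zero, m_s

def outside_spending(y, p, q):
    """q_0 = y - sum_s sum_{n in N_s} p_ns q_ns, for lists of per-subset arrays."""
    return y - sum(float(np.dot(p_s, q_s)) for p_s, q_s in zip(p, q))

print(partition_subset([0, 6, 4]))                            # -> ([2, 3], [1], 2)
print(outside_spending(100.0, [[1.0, 2.0, 3.0]], [[0, 6, 4]]))  # -> 76.0
```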

First, we find the range of feasible values of $\epsilon_{ns}$ for each good with zero consumption in subset $s$. For any $n \in N^0_s$, SRMUC implies that the ratio of the Kuhn-Tucker conditions for goods $m_s$ and $n$ (equation 3) can be written as:

$$
\frac{\delta_{ns}\,(1 + q_{ns})^{\rho - 1}}{\delta_{m_s s}\,(1 + q_{m_s s})^{\rho - 1}} < \frac{p_{ns}}{p_{m_s s}}. \qquad (25)
$$

Taking logs and substituting for $\delta_{ns}$ and $\delta_{m_s s}$ yields

$$
\epsilon_{ns} < V_{m_s s} + \epsilon_{m_s s} - V_{ns}, \quad \forall\, n \in N^0_s, \qquad (26)
$$

where $V_{ns} \equiv \beta' x_{ns} + (\rho - 1)\ln(1 + q_{ns}) - \ln(p_{ns})$.

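Next, we find the values of $\epsilon_{ns}$ for the non-reference goods with positive consumption in subset $s$. Because the Kuhn-Tucker conditions of $n \in N^+_s$ and $m_s$ both hold with equality, their log ratio pins down $\epsilon_{ns}$ exactly:

$$
\epsilon_{ns} = V_{m_s s} + \epsilon_{m_s s} - V_{ns}, \quad \forall\, n \in N^+_s,\ n \neq m_s. \qquad (27)
$$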
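Equations (26) and (27) reduce to one vector of differences $V_{m_s s} + \epsilon_{m_s s} - V_{ns}$, read as an upper bound for zero-demand goods and as an exact value for positive-demand goods. The sketch below computes both for one subset and checks that the implied shocks equalize marginal utility per dollar across purchased goods. It is an illustration under assumed inputs: the function names, characteristics, prices, $\beta$, $\rho$ and the reference shock are hypothetical, and goods are 0-based in the code.

```python
import numpy as np

def v_index(x, q, p, beta, rho):
    """V_ns = beta'x_ns + (rho - 1) ln(1 + q_ns) - ln(p_ns) for every good in a subset."""
    return x @ beta + (rho - 1.0) * np.log1p(q) - np.log(p)

def within_subset_shocks(x, q, p, beta, rho, eps_ref):
    """Given the reference good's shock, return the exact shocks implied by
    equation (27) and the upper bounds implied by equation (26).
    Goods are 0-based here; the reference good is the first one with q > 0."""
    q = np.asarray(q, dtype=float)
    v = v_index(x, q, p, beta, rho)
    pos = np.flatnonzero(q > 0)
    m = pos[0]
    rhs = v[m] + eps_ref - v                  # V_{m_s s} + eps_{m_s s} - V_ns
    exact = {int(n): float(rhs[n]) for n in pos if n != m}
    bounds = {int(n): float(rhs[n]) for n in np.flatnonzero(q == 0)}
    return exact, bounds

# Hypothetical three-good subset mirroring the earlier example (q = 0, 6, 4)
x = np.array([[1.0, 0.2], [1.0, -0.1], [1.0, 0.5]])
beta, rho = np.array([0.3, 1.0]), 0.6
q, p = np.array([0.0, 6.0, 4.0]), np.array([2.0, 1.5, 1.0])
exact, bounds = within_subset_shocks(x, q, p, beta, rho, eps_ref=0.25)
print(exact, bounds)
```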

Finally, $\varepsilon_s$ is obtained from the log ratio of the Kuhn-Tucker conditions for good $m_s$ and the outside good:

$$
\varepsilon_s = -\nu' w_s - (\gamma - 1)\ln\Big(1 + \sum_{n \in N_s} u_{ns}(q_{ns})\Big) - V_{m_s s} - \epsilon_{m_s s} + (\rho\gamma - 1)\ln(q_0) + \epsilon_0. \qquad (28)
$$
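Equation (28) can be checked numerically: plugging the implied $\varepsilon_s$ back in should make the reference good’s marginal utility per dollar, $\kappa_s A_s^{\gamma-1}\delta_{m_s s}(1+q_{m_s s})^{\rho-1}/p_{m_s s}$, equal the outside good’s, $\delta_0 q_0^{\rho\gamma-1}$ (outside-good price normalized to 1, as in the definition of $q_0$). This sketch assumes $u_{ns}(q) = \delta_{ns}((1+q)^\rho - 1)/\rho$, consistent with equation (24); all inputs and names are hypothetical, and the non-reference shock is set arbitrarily rather than taken from equation (27).

```python
import numpy as np

def subset_shock(x, w, q, p, q0, beta, nu, rho, gamma, eps, eps0):
    """Equation (28): the subset shock eps_s that rationalizes spending in a
    subset with positive demand. `eps` holds the subset's good-level shocks;
    zero-demand goods do not affect A_s because u_ns(0) = 0."""
    q = np.asarray(q, dtype=float)
    delta = np.exp(x @ beta + eps)
    A = 1.0 + np.sum(delta * ((1.0 + q) ** rho - 1.0) / rho)    # A_s(q_s)
    m = np.flatnonzero(q > 0)[0]                                  # reference good
    v_m = x[m] @ beta + (rho - 1.0) * np.log1p(q[m]) - np.log(p[m])
    return (-(w @ nu) - (gamma - 1.0) * np.log(A) - v_m - eps[m]
            + (rho * gamma - 1.0) * np.log(q0) + eps0)

# Hypothetical inputs
x = np.array([[1.0, 0.2], [1.0, -0.1], [1.0, 0.5]])
w, nu = np.array([1.0, 0.0]), np.array([0.4, -0.2])
q, p = np.array([0.0, 6.0, 4.0]), np.array([2.0, 1.5, 1.0])
beta, rho, gamma, q0 = np.array([0.3, 1.0]), 0.6, 0.4, 20.0
eps, eps0 = np.array([0.0, 0.25, -0.1]), 0.3
eps_s = subset_shock(x, w, q, p, q0, beta, nu, rho, gamma, eps, eps0)
print(eps_s)
```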

Accordingly, the likelihood of the consumption observed in the subsets $s \in S^+$ is

$$
\begin{aligned}
L^+\left(\{\vec q_s\}_{s \in S^+} \,\middle|\, \{\epsilon_{m_s s}\}_{s \in S^+}, \epsilon_0\right) = {} & \prod_{s \in S^+} \prod_{n \in N^0_s} F\left(\epsilon_{ns} < V_{m_s s} + \epsilon_{m_s s} - V_{ns}\right) \\
& \times \prod_{s \in S^+} \prod_{n \in N^+_s,\ n > m_s} f\left(\epsilon_{ns} = V_{m_s s} + \epsilon_{m_s s} - V_{ns}\right) \\
& \times \left[\prod_{s \in S^+} f\left(\varepsilon_s = -\nu' w_s - (\gamma - 1)\ln A_s(\vec q_s) - V_{m_s s} - \epsilon_{m_s s} + (\rho\gamma - 1)\ln(q_0) + \epsilon_0\right)\right] |J|,
\end{aligned}
\qquad (29)
$$

where $f(\cdot)$ and $F(\cdot)$ denote the p.d.f. and c.d.f., respectively, of a random variable; $A_s(\vec q_s) \equiv 1 + \sum_{n \in N_s} u_{ns}(q_{ns})$; $J$ denotes the Jacobian of the transformation of all positive quantities $\{q_{ns} : s \in S^+, n \in N^+_s\}$ into the unobserved good and subset characteristics $\{\epsilon_{ns} : s \in S^+, n \in N^+_s, n > m_s\}$ and $\{\varepsilon_s : s \in S^+\}$; and $|J|$ denotes the absolute value of the determinant of the Jacobian. The determinant of the Jacobian enters through the standard change-of-variables formula, because the quantity vector $\{\vec q_s\}_{s \in S^+}$ is a function of these error terms. The derivation of this Jacobian is presented in the Appendix.
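The non-Jacobian factors of equation (29) can be sketched in log form using the stated distributions: extreme value with mode 0 and scale $\sigma$ for $\epsilon$ (so $F(z) = \exp(-e^{-z/\sigma})$) and normal with standard deviation $\sigma_\varepsilon$ for $\varepsilon$. This is an illustration, not the authors’ implementation; the function names and input layout are hypothetical, and $\log|J|$ is taken as a given input because its derivation is in the Appendix (and it is a single determinant over all of $S^+$).

```python
import math
import numpy as np

def gumbel_logcdf(z, sigma):
    """log F(z) for an extreme value (Gumbel) variable with mode 0 and scale sigma."""
    return -np.exp(-np.asarray(z) / sigma)

def gumbel_logpdf(z, sigma):
    """log f(z) for the same distribution."""
    z = np.asarray(z) / sigma
    return -math.log(sigma) - z - np.exp(-z)

def normal_logpdf(z, sd):
    return -0.5 * math.log(2.0 * math.pi * sd ** 2) - z ** 2 / (2.0 * sd ** 2)

def log_lik_positive(subsets, sigma, sigma_eps, log_abs_jac):
    """Log of equation (29).

    subsets: list of (v, q, eps_ref, eps_s) tuples, one per s in S^+, where v
    holds V_ns for the subset's goods and eps_s is the value from equation (28).
    log_abs_jac: log|J|, supplied externally (derived in the Appendix).
    """
    ll = 0.0
    for v, q, eps_ref, eps_s in subsets:
        v, q = np.asarray(v, dtype=float), np.asarray(q, dtype=float)
        pos = np.flatnonzero(q > 0)
        rhs = v[pos[0]] + eps_ref - v                       # eq (26)/(27) right-hand side
        ll += np.sum(gumbel_logcdf(rhs[q == 0], sigma))     # zero-demand goods
        ll += np.sum(gumbel_logpdf(rhs[pos[1:]], sigma))    # other positive goods
        ll += normal_logpdf(eps_s, sigma_eps)               # subset shock, eq (28)
    return float(ll + log_abs_jac)

print(log_lik_positive([([0.0, 0.0, 0.0], [0, 2, 1], 0.0, 0.0)], 1.0, 1.0, 0.5))
```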

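Now consider a subset $s \in S^0$, i.e., one in which the agent did not consume any goods. For such a subset $A_s = 1$, so the $(\gamma - 1)\ln A_s$ term vanishes, and the log ratio between the Kuhn-Tucker conditions for each good $n \in N_s$ and the outside good implies

$$
\epsilon_{ns} < (\rho\gamma - 1)\ln(q_0) + \epsilon_0 - \nu' w_s - \varepsilon_s - V_{ns} \iff q_{ns} = 0. \qquad (30)
$$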

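Conditioning on $\{\varepsilon_s : s \in S^0\}$ and $\epsilon_0$, the likelihood of observing no spending in these subsets is given by:

$$
L^0\left(\{\vec q_s\}_{s \in S^0} \,\middle|\, \{\varepsilon_s\}_{s \in S^0}, \epsilon_0\right) = \prod_{s \in S^0} \prod_{n \in N_s} \Pr\left(\epsilon_{ns} < (\rho\gamma - 1)\ln(q_0) + \epsilon_0 - \nu' w_s - \varepsilon_s - V_{ns}\right). \qquad (31)
$$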
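Because the $\epsilon_{ns}$ are independent extreme value draws, each factor in equation (31) is a closed-form Gumbel c.d.f. The sketch below evaluates one zero-demand subset’s log-likelihood and, as a sanity check, compares it with a simulation of the inequalities in equation (30). All inputs and the function name are hypothetical; it relies on NumPy’s `Generator.gumbel`, whose `loc` parameter is the mode.

```python
import numpy as np

def log_lik_zero_subset(x, p, w, beta, nu, rho, gamma, q0, eps0, eps_s, sigma):
    """Log of one subset's factor in equation (31): every good n in N_s must
    satisfy the inequality in equation (30)."""
    v = x @ beta - np.log(p)                 # V_ns at q_ns = 0, since ln(1 + 0) = 0
    bound = (rho * gamma - 1.0) * np.log(q0) + eps0 - w @ nu - eps_s - v
    return float(np.sum(-np.exp(-bound / sigma)))   # sum of Gumbel log c.d.f.s

# Hypothetical inputs
x, p = np.ones((3, 1)), np.array([1.0, 2.0, 0.5])
w, beta, nu = np.array([1.0]), np.array([-0.5]), np.array([0.2])
ll = log_lik_zero_subset(x, p, w, beta, nu, rho=0.5, gamma=0.5,
                         q0=1.0, eps0=0.0, eps_s=0.0, sigma=1.0)

# Simulation check: with q0 = 1 and eps0 = eps_s = 0 the bound is -nu'w - V_ns
rng = np.random.default_rng(0)
bound = -(w @ nu) - (x @ beta - np.log(p))
draws = rng.gumbel(loc=0.0, scale=1.0, size=(200_000, 3))
sim = np.mean(np.all(draws < bound, axis=1))
print(np.exp(ll), sim)
```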

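Finally, the full likelihood contribution of one agent on one choice occasion is

$$
L\left(\vec q \,\middle|\, \{\epsilon_{m_s s}\}_{s \in S^+}, \{\varepsilon_s\}_{s \in S^0}, \epsilon_0\right) = L^+\left(\{\vec q_s\}_{s \in S^+} \,\middle|\, \{\epsilon_{m_s s}\}_{s \in S^+}, \epsilon_0\right) L^0\left(\{\vec q_s\}_{s \in S^0} \,\middle|\, \{\varepsilon_s\}_{s \in S^0}, \epsilon_0\right). \qquad (32)
$$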

Note that equation (32) depends on the values of the unobserved good and subset characteristics $(\{\epsilon_{m_s s}\}_{s \in S^+}, \{\varepsilon_s\}_{s \in S^0}, \epsilon_0)$, so the unconditional likelihood is obtained by integrating over their distributions. Next, we briefly show how to use Bayesian MCMC methods to accomplish this goal.

4.3 Heterogeneity and Estimation

Until now we have suppressed agent and choice occasion indices. Here we introduce random-coefficient specifications on the model parameters to account for observed and unobserved agent heterogeneity. Then we derive the posterior distribution of the parameters based on equation (32).

Considering $i = 1, \ldots, I$ agents, we denote by $z_i$ a vector of observed agent characteristics and let $\phi_i$ denote a vector of agent parameters given by $(\beta_i', \tilde\rho_i, \tilde\gamma_i)'$, where $\tilde\rho_i$ and $\tilde\gamma_i$ are the logit transformations of $\rho_i$ and $\gamma_i$ (i.e., $\tilde\rho_i = \ln(\rho_i) - \ln(1 - \rho_i)$ and $\tilde\gamma_i = \ln(\gamma_i) - \ln(1 - \gamma_i)$). We then assume that each vector $\phi_i$ is normally distributed with mean $\vartheta' z_i$ and a diagonal variance matrix $\lambda$, which lets each agent’s observed and unobserved characteristics influence its utility parameters.
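The random-coefficient layer can be sketched as a draw of $\phi_i \sim N(\vartheta' z_i, \lambda)$ followed by an inverse-logit map that keeps $\rho_i$ and $\gamma_i$ in $(0, 1)$. The shape convention for $\vartheta$ (rows for agent characteristics, columns for elements of $\phi_i$), the contents of $z_i$, and all numbers below are assumptions for illustration only.

```python
import numpy as np

def logit(u):
    return np.log(u) - np.log1p(-u)        # ln(u) - ln(1 - u)

def inv_logit(t):
    return 1.0 / (1.0 + np.exp(-t))

def draw_agent_params(theta, z_i, lam_diag, rng):
    """Draw phi_i = (beta_i', rho_tilde_i, gamma_tilde_i)' ~ N(theta' z_i, diag(lam_diag))
    and map the last two elements back to rho_i, gamma_i in (0, 1)."""
    mean = theta.T @ z_i
    phi = mean + np.sqrt(lam_diag) * rng.standard_normal(mean.shape)
    return phi[:-2], inv_logit(phi[-2]), inv_logit(phi[-1])

rng = np.random.default_rng(1)
z_i = np.array([1.0, 0.0, 1.0])            # hypothetical: intercept plus two type dummies
theta = rng.normal(size=(3, 4))             # 2 program characteristics + rho_tilde + gamma_tilde
beta_i, rho_i, gamma_i = draw_agent_params(theta, z_i, np.full(4, 0.5), rng)
print(beta_i, rho_i, gamma_i)
```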

We next assign prior distributions to each of the hyperparameters of the model: $\sigma^2_\varepsilon$ follows a scaled inverse-$\chi^2$ distribution with $df_{\sigma^2_\varepsilon}$ degrees of freedom and scale $o_{\sigma^2_\varepsilon}$; each element of $\vartheta$ and $\nu$ follows a zero-mean normal distribution with variance $\sigma^2_\vartheta$ and $\sigma^2_\nu$, respectively; each diagonal element $\lambda_k$ of $\lambda$ follows a scaled inverse-$\chi^2$ distribution with $df_\lambda$ degrees of freedom and scale $o_\lambda$; and $\sigma$ is assigned a flat prior on the interval $(0, \infty)$. Given these definitions, the posterior probability of the model parameters given the data is proportional to:

$$
\begin{aligned}
f(\ast \mid \text{data}) \propto {} & \left[\prod_{t=1}^{T} \prod_{i=1}^{I} L\left(\vec q_{it} \,\middle|\, \{\epsilon_{i m_{st} st}\}_{s \in S^+_{it}}, \{\varepsilon_{ist}\}_{s \in S^0_{it}}, \epsilon_{i0t};\ \phi_i, \nu, \vec x_t, \vec w_t, \vec z_i\right)\right. \\
& \left. \times\, p\left(\{\epsilon_{i m_{st} st}\}_{s \in S^+_{it}}, \epsilon_{i0t} \,\middle|\, \sigma\right) \times p\left(\{\varepsilon_{ist}\}_{s \in S^0_{it}} \,\middle|\, \sigma^2_\varepsilon\right)\right] \\
& \times \prod_{i=1}^{I} p\left(\phi_i \mid \vartheta' \vec z_i, \lambda\right) \times \pi\left(\vartheta, \lambda, \nu, \sigma^2_\varepsilon, \sigma\right),
\end{aligned}
\qquad (33)
$$

where $\ast$ denotes the model parameters to be estimated, $\{\vartheta, \lambda, \nu, \sigma^2_\varepsilon, \sigma, \{\phi_i, \{\{\epsilon_{i m_{st} st}\}_{s \in S^+_{it}}, \{\varepsilon_{ist}\}_{s \in S^0_{it}}, \epsilon_{i0t}\}_{t=1}^{T}\}_{i=1}^{I}\}$; $L(\vec q_{it} \mid \cdots)$ is the likelihood of the demand data for agent $i$ on choice occasion $t$, which can be computed using equation (32); and $\pi(\vartheta, \lambda, \nu, \sigma^2_\varepsilon, \sigma)$ denotes the prior distribution of the corresponding hyperparameters. Given this formulation of the posterior distribution, we use a Markov chain Monte Carlo (MCMC) approach in which each parameter is sampled conditional on the data and all other parameters (Gibbs sampling). For $\beta_i$, $\rho_i$, $\gamma_i$, $\sigma$, $\{\varepsilon_{ist}\}_{s \in S^0_{it}}$, and $\{\epsilon_{i m_{st} st}\}_{s \in S^+_{it}}, \epsilon_{i0t}$, we use random walk Metropolis-Hastings steps. For $\vartheta$, $\lambda$, $\nu$, and $\sigma^2_\varepsilon$, we draw the parameters directly from their full-conditional distributions using standard results for normal likelihood functions with conjugate priors.
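The two kinds of updates just described can be sketched generically: a random-walk Metropolis-Hastings step for a scalar parameter with a non-standard full conditional, and a conjugate scaled inverse-$\chi^2$ draw for a normal variance such as $\sigma^2_\varepsilon$. This is a textbook sketch under assumed settings, not the authors’ sampler: the step size, prior values and toy targets are hypothetical, since the paper does not report its tuning.

```python
import numpy as np

def rw_metropolis_step(current, log_post, step, rng):
    """One random-walk Metropolis-Hastings update of a scalar parameter.
    The Gaussian proposal is symmetric, so the acceptance ratio is the ratio
    of (unnormalized) full-conditional posteriors."""
    proposal = current + step * rng.standard_normal()
    if np.log(rng.uniform()) < log_post(proposal) - log_post(current):
        return proposal, True
    return current, False

def draw_scaled_inv_chi2_variance(resid, df0, s2_0, rng):
    """Conjugate draw of a normal variance given zero-mean residuals and a
    scaled inverse-chi^2(df0, s2_0) prior."""
    df_n = df0 + resid.size
    s2_n = (df0 * s2_0 + np.sum(resid ** 2)) / df_n
    return df_n * s2_n / rng.chisquare(df_n)

rng = np.random.default_rng(2)
# RW-MH on a toy standard normal target, discarding the first half as burn-in
x, chain, accepted = 0.0, [], 0
for _ in range(20_000):
    x, acc = rw_metropolis_step(x, lambda t: -0.5 * t * t, 2.4, rng)
    chain.append(x)
    accepted += acc
chain = np.array(chain[10_000:])
print(chain.mean(), chain.std(), accepted / 20_000)

# Conjugate variance draws given toy residuals with true variance 1.5**2
resid = rng.normal(0.0, 1.5, size=5_000)
draws = [draw_scaled_inv_chi2_variance(resid, 3.0, 1.0, rng) for _ in range(1_000)]
print(np.mean(draws))
```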

We note that the likelihood function in equation (32) implicitly assumes that the distributions of the shocks, $p(\{\epsilon_{i m_{st} st}\}_{s \in S^+_{it}}, \epsilon_{i0t} \mid \sigma)$ and $p(\{\varepsilon_{ist}\}_{s \in S^0_{it}} \mid \sigma^2_\varepsilon)$, are independent of the prices. If prices are set with knowledge of agents’ distributions of preferences, then this independence assumption would bias the estimates of agents’ price responsiveness (Chintagunta et al. 2005). In this case, one may use an instrumental variables approach or a supply-side theory to derive estimating equations relating the shocks and market prices (e.g., Rossi et al. 1996). We return to how this point applies to the application of the model in Subsection 5.1.

7 Note that $\{\varepsilon_{ist}\}_{s \in S^+_{it}}$ and $\{\epsilon_{inst}\}_{s \in S^+_{it},\, n > m_{st}}$ do not need to be sampled, since they are deterministic functions of the remaining model parameters (equations 27 and 28). Similarly, the shocks $\epsilon_{inst}$ of goods with zero consumption do not need to be sampled because, given the values of all other model parameters, they are integrated out in the likelihood (see equations 29 and 31).

Finally, a simulation experiment was run to verify that the estimation code was able to recover known parameters from synthetic data. This completes the specification of the model and estimation.

4.4 Identification

The model relies on variation in quantities, prices and budgets to identify the elements of $\delta$, $\kappa$, $\rho$, and $\gamma$. Bhat (2008) discusses identification of all of these quantities when $\gamma = 1$. There are two related identification issues here that were not present in Bhat (2008): how to identify $\gamma$ and how to identify $\gamma$ separately from $\rho$.

$\gamma$ is identified by the agent’s tendency to spread or concentrate spending across subsets of goods. $\rho$ is identified by the agent’s tendency to spread or concentrate spending across goods in a subset, holding spending across subsets constant. In other words, the degree to which each advertiser’s consumption conforms to the two-dimensional strategies listed in Table 2 determines that particular advertiser’s combination of $\gamma$ and $\rho$.

The next section describes the application of the model, including the data, institutional context and parameter estimates.

5 Application: Chilean Television Advertisements

A natural application of the model is to estimate the utility parameters of advertisers. Advertisers face complex menus of program audiences and regularly choose positive quantities of many of the available goods while choosing zero quantities of many others. Audiences may be grouped into subsets with clear utility interdependencies among them. This section describes the data, the classification of available goods into subsets, the parameter estimates, and a counterfactual experiment based on the results.

5.1 Data and Institutional Details

Complete price, advertising and audience data were available for the five major Chilean television networks for 139 weeks between 2006 and 2008. For each advertisement that aired on each television network, the data record the identity of the advertising firm, the advertisement’s duration and estimated price, and audience demographic and socioeconomic characteristics. The data were collected by an independent ratings company and supplied to us by one of the leading television networks. These are the same data that underpin the decisions made by all major networks and advertisers in the marketplace.

As in most major television markets, television viewership and advertising revenues are far greater in the evening than at other times of the day. Further, programs typically change on the hour and advertisers typically make consumption decisions using weekly advertising budgets. Therefore, we take each week as a choice occasion. Within each week, each alternative was defined as a unique combination of a television network, day of the week and prime-time hour, where prime time is defined expansively as lasting from 7 p.m. until midnight. We refer to each combination of day of the week and hour as a “time block.”
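The choice set implied by this definition can be enumerated directly. In the sketch below the day and hour labels are hypothetical, while the five channel numbers, 107 advertisers and 139 weeks come from this section. The resulting count of advertiser-week-airing cells, 107 × 139 × 175 = 2,602,775, is consistent with the largest observation count reported in Table 3.

```python
from itertools import product

days = ["Mon", "Tue", "Wed", "Thu", "Fri", "Sat", "Sun"]
hours = ["7-8pm", "8-9pm", "9-10pm", "10-11pm", "11pm-12am"]   # prime time, 7 p.m. to midnight
networks = [4, 7, 9, 11, 13]                                     # the five networks in the model

blocks = list(product(days, hours))                    # the S = 35 subsets (time blocks)
airings = [(n, s) for s in blocks for n in networks]   # goods (n, s) within one week

n_advertisers, n_weeks = 107, 139
n_obs = n_advertisers * n_weeks * len(airings)         # advertiser x week x airing cells
print(len(blocks), len(airings), n_obs)                # -> 35 175 2602775
```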

The goods were partitioned into subsets in a way to approximate the reach of an advertisement.

That is, assuming that the rate of switching among available programs is not too high, two competing networks’ audiences in the same time block should typically exhibit less audience overlap

than the same two networks’ audiences in any two different time blocks.

Therefore, each agent i

8 The goal here is to facilitate television network decision-making by estimating parameters that predict advertiser behavior. The goal is not to solve individual advertisers’ optimal advertising budget allocation problem or estimate total audience reached.

9 This is justified by the observation that the distribution of advertiser purchases across weeks in the sample has a large mass at zero with almost no mass in the region slightly above zero. That is, within each advertiser, it is commonly observed that the advertiser either spends nothing on the 35 blocks available that week or purchases multiple positive network-block combinations within the week.

10

An important limitation of the data is that they do not report the degree of overlap between any two television programs. This is probably because of the limited number of households in the sample; a relatively small sample size limits one’s ability to reliably estimate the degree of overlap among any two program audiences. One would ideally model advertiser preferences based on reach and frequency, however, those data on those variables are not typically

24

allocates its budget among 35 subsets of products, and each subset contains five networks.

Several options are available to any advertiser, such as:

(a) Program airing concentration: The advertiser may concentrate its spending on the program with the largest audience within a week (e.g., the Super Bowl). This would help the advertiser to reach a large number of viewers. This advertiser would be characterized by high ρ and high γ .

(b) Time block concentration: The advertiser may cover all (or most) networks during the same time block. This would ensure that a large number of unique viewers watching television in that time block are exposed to the advertising efforts, regardless of the network they choose to watch. This advertiser would be characterized by low ρ and high γ .

(c) Network and time block spreading: In an effort to get consumers exposed to an advertisement multiple times, the advertiser may choose to spread its budget across multiple networks and time blocks so that it might be watched by the same target audience. This advertiser would be characterized by low ρ and low γ .

More formally, each advertiser i chooses how much of the budget to invest in each program airing

( n, s, t ) , which is defined as a combination of network (e.g., Channel 13), time block s (e.g.,

Monday 7-8pm) and week t (e.g., first week of February 2006). We consider N = 5 major broadcast networks (channels 4, 7, 9, 11 and 13), while the remaining one (channel 5) is considered in the outside option (outside media) in our model due to data limitations. Each of the program airings belongs to one of S = 35 time blocks that correspond to each of the 5 primetime hours (7 p.m. to midnight) within each of the 7 days of a week.

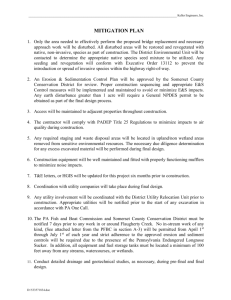

To illustrate some of the main features of the data, Figure 1 depicts how often advertisers

invest in multiple program airings within a time block. To facilitate interpretation, for each week and advertiser, we only consider time blocks with positive demand. This histogram shows that a very common investment approach is to choose just one network within a time block, which available to advertisers or networks.

25

happens with a 57% probability, conditional on spending on at least one program airing. This finding could be due to at least two different reasons. On the one hand, there could be substantial heterogeneity in the value to the advertiser of program airings from different networks within a time block, leading the advertiser to choose only the best of these options within a time block.

On the other hand, it might be the case that the benefits of adding an extra dollar to a program airing do not decrease much, leading the advertiser to select the most attractive program airing and concentrating a large fraction of the budget on that single option.

Figure 1: Histogram of the number of chosen networks in time blocks with positive demand. (Horizontal axis: number of chosen networks, 1 to 5.)

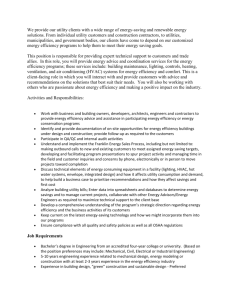

In addition, Figure 2 shows how often advertisers select multiple time blocks in a given week. This histogram shows that, conditional on advertising in at least one time block (which happens with 60.6% probability), an advertiser chooses 14.8 time blocks on average, while the corresponding median is 13 blocks. This suggests that advertisers prefer to spread their budgets across many (hour of the day, day of the week) combinations. As before, this could have at least two explanations. On the one hand, for a given advertiser there might be several time blocks that are equally (and sufficiently) attractive, leading the advertiser to select many of them.

On the other hand, it might be the case that once an advertiser invests in a time block, the value of allocating additional resources to the same time block diminishes. For example, if the advertiser wishes to reach a potential consumer multiple times, airing an advertisement simultaneously (i.e., during the same time block) on multiple networks does little to reach the same viewer more than once, since the viewer will most likely be watching just one network. As we will show later, our model allows us to examine which of these two explanations is more consistent with the data.

Figure 2: Histogram of the number of chosen time blocks in weeks with positive demand. (Horizontal axis: number of chosen blocks, 1 to 35.)

The benefits that the advertiser derives from allocating its budget to the different advertising options arise, for example, from improvements in consumer awareness or changes in perceptions about the products or services offered by the advertiser. $q_{inst}$ is the number of advertising seconds purchased by advertiser $i$ in the program airing on network $n$ in time block $s$ in week $t$.

The advertiser benefits from exposing the audience of a program airing, $a_{nst}$, to an advertisement for $q_{inst}$ seconds. In addition, the utility of advertising to that audience is also a function of the audience’s sociodemographic characteristics $x_{nst}$ and the block characteristics $w_{st}$ (described below). Therefore,

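$$
U(\vec q_t, q_{0t}) = \sum_{s=1}^{S} \frac{e^{\nu' w_{st} + \varepsilon_{st}}}{\gamma}\left\{-1 + \left[1 + \sum_{n=1}^{N} e^{\beta' x_{nst} + \epsilon_{nst}}\, \frac{-1 + (1 + a_{nst} q_{nst})^{\rho}}{\rho}\right]^{\gamma}\right\} + \frac{\delta_{0t}}{\rho\gamma}\, q_{0t}^{\rho\gamma}. \qquad (34)
$$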

The only difference between this formulation and that in equation (24) is that advertiser benefits are a function not only of the number of advertising seconds purchased in a program airing, $q_{nst}$, but also of the size of the corresponding audience, $a_{nst}$, through the product of these two terms, $a_{nst} q_{nst}$. Note that the larger the audience of a program airing, $a_{nst}$, the larger the returns the advertiser obtains from allocating a portion of the budget to program airing $(n, s, t)$. Advertiser utility is also increasing in the length of the audience’s exposure to its commercial message. Finally, advertiser utility depends on the characteristics of the audience and the time block.
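The two monotonicity claims can be verified on a single airing’s term inside the bracket of equation (34). Its derivative with respect to seconds purchased is $\delta a (1 + a q)^{\rho-1}$, which is positive and, because its derivative in $a$ equals $\delta(1+aq)^{\rho-2}(1 + \rho a q) > 0$, increasing in audience size. The sketch below checks this with hypothetical values of $\delta$, $\rho$ and audience sizes, and compares the analytic derivative with a finite difference.

```python
import numpy as np

def airing_term(q, a, delta, rho):
    """One program airing's contribution inside the subset bracket of equation (34)."""
    return delta * ((1.0 + a * q) ** rho - 1.0) / rho

def airing_marginal(q, a, delta, rho):
    """Analytic derivative of airing_term with respect to seconds purchased q."""
    return delta * a * (1.0 + a * q) ** (rho - 1.0)

delta, rho, q, h = 1.0, 0.5, 30.0, 1e-6        # hypothetical values
for a in (0.1, 0.3, 0.6):                      # audience sizes in millions
    fd = (airing_term(q + h, a, delta, rho) - airing_term(q - h, a, delta, rho)) / (2 * h)
    print(a, airing_term(q, a, delta, rho), airing_marginal(q, a, delta, rho), fd)
```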

We now describe advertisers, program characteristics, advertiser characteristics, block characteristics, advertising prices and outside media spending:

• The model is estimated at the level of the individual advertiser for 107 advertisers. This set of advertisers accounted for 78% of the total prime time spending observed during the sample period for the networks under consideration.

• Program characteristics ($x_{nst}$): for each program we determine the percentage of viewers that belong to each of six discrete demographic segments and four discrete socioeconomic segments. The demographic segments correspond to those commonly used to determine advertisement prices in Chile: men aged 18-35, women aged 18-35, men aged 35-49, women aged 35-49, men of all other ages and women of all other ages. The socioeconomic segments are classifications according to household income and education labeled, in descending order, ABC1, C2, C3 and D. For identification purposes, we exclude the variables corresponding to the percentage of women of all other ages and C2. When used in the model, each of these variables was mean-centered to facilitate interpretation of the estimated coefficients.

• Advertiser characteristics ($z_i$): advertisers were classified into the following segments: retail, consumer product goods (CPG), telecommunications, financial services and durable goods. These variables are used to account for observed heterogeneity among advertisers in their rates of diminishing returns ($\rho_i$ and $\gamma_i$) and their valuation of program characteristics ($\beta_i$).

• Program airing audience size ($a_{nst}$): the estimated number of viewers (in millions) during commercial breaks.

• Subset (block) characteristics ($w_{st}$): to account for differences across days of the week and times of the day, we include fixed effects for time blocks.

• Advertising prices per second ($p_{nst}$): the data record actual prices paid for ads sold by Canal 13 and estimated ad prices for competing networks provided by a third-party research company (comparable to TNS or Kantar Media in the US).

• Outside media spending ($q_{i0t}$): computed as the total spending in non-prime-time hours plus all spending on a sixth network (channel 5), whose audiences were too small to be reported by the independent ratings company.

11 The television network that provided the data suggested that we exclude the D segment from the analysis, as its relatively low commercial value leads advertisers to ignore that component of the audience.

12 We received from Canal 13’s marketing department a complete description of its advertising price-setting process. We cannot publish the details, but the document describes the list of variables that systematically influence the network’s advertising prices and quantities. That list did not contain any items that could be interpreted as determinants of unobserved program characteristics. Therefore we use the likelihood in equation (35) for estimation rather than adjusting it to allow the distributions of ϵ and ε to depend on prices. We cannot say conclusively that advertising prices were never adjusted to reflect information about specific advertisers’ demand for unobserved program characteristics, but it does appear to us that this type of price adjustment was not part of the network’s regular pricing policy. If price endogeneity were thought to be a first-order concern, it would be feasible to extend the Bayesian estimation methodology in this paper to include an endogeneity correction as in Rossi et al. (1996).

Table 3: Summary statistics of the advertising spending data.

| Variable | M | SD | Observations |
|---|---|---|---|
| *Program characteristics* | | | |
| m1834 | 0.06 | 0.04 | 24,325 |
| f1834 | 0.08 | 0.05 | 24,325 |
| m3549 | 0.06 | 0.04 | 24,325 |
| f3549 | 0.08 | 0.04 | 24,325 |
| aom | 0.12 | 0.06 | 24,325 |
| abc1 | 0.07 | 0.04 | 24,325 |
| c2 | 0.18 | 0.08 | 24,325 |
| c3 | 0.27 | 0.10 | 24,325 |
| d | 0.42 | 0.15 | 24,325 |
| audience | 0.25 | 0.15 | 24,325 |
| *Advertiser characteristics* | | | |
| retail | 0.13 | 0.34 | 107 |
| cpg | 0.35 | 0.48 | 107 |
| telecommunications | 0.08 | 0.28 | 107 |
| financial services | 0.14 | 0.35 | 107 |
| durable goods | 0.10 | 0.31 | 107 |
| *Budget allocation* | | | |
| advertising quantity (seconds per advertiser per block) | 3.36 | 13.68 | 2,602,775 |
| price (US$/second) | 211.46 | 176.37 | 23,242 |
| total spending (thousand US$) | 353.04 | 418.65 | 9,704 |

Note: 1 million Chilean pesos was equivalent to US$2,135 in May 2011.

5.2 Results

Following the methodology described in Section 4, we estimated the model parameters by running the Gibbs sampler for 40,000 iterations and using the last 20,000 for inference. We also estimated a restricted version of the same model that sets $\gamma_i = 1$ for all agents, which yields an additively separable formulation. The data provide very strong support for the model based on weak separability, highlighting the importance of allowing for interdependence among program airings from the same time block in their contribution to the advertiser’s benefits (2 ln(Bayes factor) > 10; see Kass and Raftery 1995). As we will show below, for most advertisers $\gamma_i$ takes a very small value, suggesting that advertisers generally tend to spread their expenditures across blocks. Therefore, additive separability would be an exceedingly poor representation of these data.
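The evidence scale used here can be made concrete. The sketch below maps $2\ln B_{10}$ to the verbal categories suggested by Kass and Raftery (1995). How the two models’ marginal likelihoods were computed is not described in this section, so the log marginal likelihood values in the usage line are purely hypothetical.

```python
def two_log_bayes_factor(log_ml_1, log_ml_0):
    """2 ln B_10 from two log marginal likelihoods (model 1 vs. model 0)."""
    return 2.0 * (log_ml_1 - log_ml_0)

def kass_raftery_label(two_log_bf):
    """Evidence categories for 2 ln B_10 suggested by Kass and Raftery (1995)."""
    if two_log_bf < 0:
        return "supports model 0"
    if two_log_bf < 2:
        return "not worth more than a bare mention"
    if two_log_bf < 6:
        return "positive"
    if two_log_bf < 10:
        return "strong"
    return "very strong"

# Hypothetical log marginal likelihoods: weakly separable (1) vs. additive (0)
print(kass_raftery_label(two_log_bayes_factor(-1000.0, -1006.0)))   # -> very strong
```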

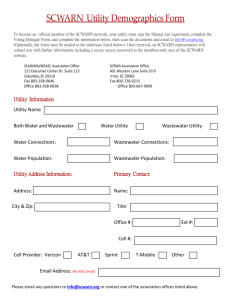

More specifically, Table 4 shows the parameters that determine the mean and standard deviation of the distribution of $(\beta_i', \tilde\rho_i, \tilde\gamma_i)$, as well as the significant interactions between these parameters and advertiser characteristics. Recall that $\beta_i$ is agent $i$’s vector of coefficients that determine how utility is affected by program characteristics, while $\tilde\rho_i$ and $\tilde\gamma_i$ are the logit transformations of the program and block diminishing returns (satiation) parameters. From these results we observe that higher audience shares of 18-34 females and lower shares of males and of the c3 socioeconomic segment make program airings more attractive on average, although there is substantial heterogeneity in preferences across advertisers for some of these program characteristics (e.g., abc1). We also notice a few significant interactions between advertiser and program characteristics. In particular, retailers appear to derive stronger benefits from advertising, since the interaction between the intercept and retail is significantly positive. The opposite is observed for producers of durable goods, while telecommunication companies are strongly attracted to 35-49 male audiences.

In terms of diminishing returns, comparing the estimated distributions of $\tilde\rho_i$ and $\tilde\gamma_i$ shows that, on average, returns within a program airing diminish more slowly than returns within a time block. Therefore, advertisers are more willing to concentrate spending on particular program airings than on particular time blocks. Interestingly, when estimating a restricted model that constrains $\gamma_i$ to 1 for all advertisers, within-program diminishing returns are much stronger: the logit transformation $\tilde\rho_i$ has an estimated population mean of -3.02, versus 0.10 under the unrestricted model. This implies that the restricted model fits the advertising spending data by overestimating the within-program rate of diminishing returns. In addition, regarding interactions between these rates and advertiser characteristics, we note that CPG advertisers experience more rapidly diminishing returns to advertising in a given program airing, while telecommunication advertisers obtain greater benefits from concentrating spending in a time block, suggesting that “reach” is a more important goal for telecommunication firms than for other types of advertisers.
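Mapping these logit-scale estimates back to the $(0, 1)$ scale makes the contrast easier to read. The sketch below applies the inverse logit to the posterior means of the population means reported above. This is only a reading aid: the inverse logit of a mean on the logit scale is not the average of $\rho_i$ or $\gamma_i$ across advertisers.

```python
import math

def inv_logit(t):
    """Map a logit-scale value back to (0, 1)."""
    return 1.0 / (1.0 + math.exp(-t))

# Posterior means of the population means on the logit scale (Table 4 and text)
estimates = {
    "rho, unrestricted": 0.10,
    "gamma, unrestricted": -5.93,
    "rho, restricted (gamma = 1)": -3.02,
}
implied = {name: inv_logit(t) for name, t in estimates.items()}
for name, value in implied.items():
    print(f"{name}: {value:.4f}")
```

The implied $\gamma$ of roughly 0.003 against a $\rho$ of roughly 0.5 is what drives the strong preference for spreading spending across time blocks.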

The overall price elasticity implied by the model is -3.5. Finally, we also report our estimates (i.e., posterior means) of the scale of program airing shocks ($\epsilon_{nst}$) and the standard deviation of block shocks ($\varepsilon_{st}$), with posterior standard deviations in parentheses: 1.77 (0.01) and 1.15 (0.01), respectively. These estimates show that the benefits from advertising in a particular program airing are more difficult for the researcher to predict than those specific to a time block.
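The two reported numbers are on different footings: 1.77 is an extreme value scale, while 1.15 is a normal standard deviation. Converting the scale to a standard deviation with the standard Gumbel result $\mathrm{SD} = \pi\sigma/\sqrt{6}$ puts them on a common footing, as in this short sketch using the posterior means above.

```python
import math

scale_program = 1.77   # posterior mean of the extreme value scale of program-airing shocks
sd_block = 1.15        # posterior mean of the standard deviation of block shocks

# A Gumbel (extreme value type I) variable with scale sigma has SD = pi * sigma / sqrt(6)
sd_program = math.pi * scale_program / math.sqrt(6.0)
print(round(sd_program, 2), sd_block)   # -> 2.27 1.15
```

On the standard-deviation scale the program-airing shocks are about twice as dispersed as the block shocks, which reinforces the comparison in the text.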

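Table 4: Model Results: Estimated distributions of $\beta_i'$, $\tilde\rho_i$, $\tilde\gamma_i$.

| | Population mean: post.M | Population mean: post.SD | Unobs. heterogeneity (SD): post.M | Unobs. heterogeneity (SD): post.SD |
|---|---|---|---|---|
| *Program characteristics preferences ($\beta_i$)* | | | | |
| intercept | -7.58 | 0.21 | 0.95 | 0.07 |
| 18-34 male | -1.79 | 1.60 | 7.74 | 0.56 |
| 18-34 female | 2.78 | 1.18 | 5.30 | 0.40 |
| 35-49 male | -4.71 | 2.07 | 10.68 | 0.79 |
| 35-49 female | 0.58 | 1.49 | 6.88 | 0.56 |
| All other males | -4.88 | 1.24 | 5.63 | 0.43 |
| abc1 | 0.65 | 2.03 | 10.47 | 0.77 |
| c3 | -1.75 | 0.68 | 3.10 | 0.25 |
| *Diminishing returns* | | | | |
| Within program ($\tilde\rho$) | 0.10 | 0.40 | 1.83 | 0.14 |
| Within block ($\tilde\gamma$) | -5.93 | 0.60 | 2.59 | 0.21 |

| Obs. heterogeneity (significant interactions) | post.M | post.SD |
|---|---|---|
| retail × intercept | 0.46 | 0.21 |
| durable × intercept | -0.58 | 0.36 |
| telecom × 35-49 male | 9.72 | 3.84 |
| cpg × $\tilde\rho$ | -1.34 | 0.50 |
| telecom × $\tilde\gamma$ | 2.36 | 1.12 |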

5.3 Managerial implications

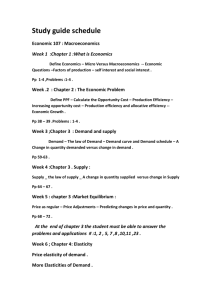

One way to apply the estimated model is to help a seller of multiple related goods consider the market response to a variety of pricing policies. To illustrate this application, we adopt the perspective of one of the networks (Canal 13) and consider the revenue implications of several different pricing strategies, with and without competitive reactions. That is, given a change of -10%, -5%, 5% or 10% in all of Canal 13’s advertisement prices, what are the revenue implications during a particular period (week 2) given (a) no reaction by its competitors or (b) immediate, identical reactions from all four of its competitors?

Table 5 displays the model’s revenue predictions for Canal 13 and its competitors under each scenario. The first number within each cell is Canal 13’s payoff and the second number is its four major competitors’ aggregate payoff.

Table 5: Revenues under different pricing scenarios (thousands of US dollars).

                           Competition's price change
Canal 13's price change    -10%              -5%               0%                5%                10%
-10%                       299.2 / 1,703.8                     354.1 / 1,622.2
-5%                                          299.4 / 1,686.4   339.8 / 1,632.1
0%                                                             299.8 / 1,668.1
5%                                                             278.5 / 1,905.0   298.5 / 1,662.6
10%                                                            272.1 / 1,910.6                     297.3 / 1,657.6

Under the assumption of unilateral action (that is, no competitive reactions), Canal 13's predicted revenues increase as its prices fall and decrease as its prices rise. However, when we assume that the competition matches any price change, price discounts are no longer predicted to raise Canal 13's revenues. Similarly, a price increase that is immediately matched by the competition is not predicted to raise Canal 13's revenues. This could be interpreted as (at least weak) evidence of optimal price setting by Canal 13, because the model predicts that the network cannot improve its revenues in a symmetric equilibrium in which its competitors match its change in pricing policy.

13 These predictions assume that audience sizes do not change as a result of the pricing policy.

14 Two of the 107 advertisers (the 7th and 37th largest) were excluded from this calculation because their advertising quantities failed to converge in one of the scenarios.
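The comparison in Table 5 can be made explicit by expressing each scenario as a percentage change in Canal 13's revenue relative to the no-change baseline. The sketch below assumes our reading of the table's layout: the 0% row doubles as the no-change baseline, the 0% competition column holds the unilateral scenarios, and the diagonal holds the matched ones. The dictionary and function names are hypothetical.

```python
# Table 5 values (thousands of US dollars) as (Canal 13 revenue, competitors' aggregate revenue).
BASELINE = (299.8, 1668.1)  # nobody changes prices
# Canal 13 changes its prices; competitors do not react (0% column).
UNILATERAL = {-10: (354.1, 1622.2), -5: (339.8, 1632.1), 5: (278.5, 1905.0), 10: (272.1, 1910.6)}
# Competitors immediately match Canal 13's price change (diagonal cells).
MATCHED = {-10: (299.2, 1703.8), -5: (299.4, 1686.4), 5: (298.5, 1662.6), 10: (297.3, 1657.6)}

def pct_change(new: float, base: float) -> float:
    """Percentage change from `base` to `new`."""
    return 100.0 * (new - base) / base

def canal13_changes(scenario: dict) -> dict:
    """Percentage change in Canal 13's revenue vs. the baseline, keyed by its price change (%)."""
    return {dp: round(pct_change(rev[0], BASELINE[0]), 1) for dp, rev in scenario.items()}

if __name__ == "__main__":
    print("unilateral:", canal13_changes(UNILATERAL))
    print("matched:   ", canal13_changes(MATCHED))
```

Under this reading, unilateral changes move Canal 13's revenue by roughly -9% to +18%, while every matched change leaves it slightly below the baseline. That second pattern is what the text interprets as weak evidence of optimal pricing.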

The model could also be used to predict the outcomes of a variety of targeted pricing experiments. For example, the network could use it to determine which advertisers to target with individual pricing discounts. Suppose a network were going to replace the program in a particular block with a new program that carried a greater advertising capacity. The network could then identify its current advertisers with low γ as the ones most likely to respond to a targeted price reduction. These advertisers' tendency to spread their spending across blocks would make them more likely to respond than advertisers with a greater tendency to concentrate spending within a block. It would also be possible to use the model to determine optimal discount rates, or to predict responses to quantity discounts or other forms of bundling.
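A minimal sketch of the targeting step, assuming the network has posterior (MCMC) draws of each advertiser's γ: rank advertisers by posterior mean γ and keep the k lowest. The function names and the draws in the example are hypothetical and are not estimates from this paper; `prob_below` is an optional alternative that accounts for posterior uncertainty when choosing targets.

```python
from statistics import mean
from typing import Dict, List, Sequence

def posterior_means(gamma_draws: Dict[str, Sequence[float]]) -> Dict[str, float]:
    """Posterior mean of gamma for each advertiser, computed from its MCMC draws."""
    return {adv: mean(draws) for adv, draws in gamma_draws.items()}

def rank_targets(gamma_draws: Dict[str, Sequence[float]], k: int) -> List[str]:
    """Return the k advertisers with the lowest posterior-mean gamma."""
    means = posterior_means(gamma_draws)
    return sorted(means, key=means.get)[:k]

def prob_below(draws: Sequence[float], threshold: float) -> float:
    """Posterior probability that gamma lies below `threshold`."""
    return sum(d < threshold for d in draws) / len(draws)

if __name__ == "__main__":
    # Hypothetical draws for three advertisers (illustration only).
    draws = {"A": [0.2, 0.3, 0.25], "B": [1.1, 0.9, 1.0], "C": [0.5, 0.6, 0.4]}
    print(rank_targets(draws, 2))
    print(prob_below(draws["B"], 1.0))
```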

6 Conclusions

This paper proposed a parsimonious, theory-driven approach that allows a researcher to estimate individual demand for multiple related goods. It did this by relaxing additive separability to allow for direct utility interdependencies. These interdependencies may be crucial in settings where agents purchase large numbers of related goods. To the best of our knowledge, this is the first approach to estimate direct utility interdependencies that does not exhibit a curse of dimensionality in the size of the consumer's choice set. Further, we showed how to generalize the model to allow for non-negative purchase quantities, how to use corner solutions explicitly in estimation, and how to estimate the model with a likelihood-based Bayesian approach.

The empirical application of the model showed that these direct utility interdependencies are important in the market for television advertising, since advertisers follow different strategies to spread or concentrate their budgets across networks within time blocks and across time blocks within weeks. For most advertisers we find strong empirical evidence of diminishing returns to allocating spending to multiple simultaneous program airings. Moreover, in our application a model that assumes additive separability substantially overestimates the rate at which an agent's marginal utility of a particular good decreases with additional consumption.
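To make the distinction concrete, the sketch below compares the marginal utility of one good under an additively separable utility and under a simple weakly separable alternative in which goods in the same block enter a shared sub-utility. Both functional forms are hypothetical illustrations chosen for simplicity, not the specification estimated in this paper. Under additive separability the marginal utility of good 0 does not depend on the quantity of good 1. Under the nested form it falls as good 1's quantity rises.

```python
import math
from typing import Callable, List, Sequence

Utility = Callable[[Sequence[float]], float]

def additive_utility(x: Sequence[float]) -> float:
    """Additively separable (hypothetical): each good contributes log(1 + x_j) independently."""
    return sum(math.log1p(q) for q in x)

def make_nested_utility(blocks: List[List[int]]) -> Utility:
    """Weakly separable (hypothetical): goods in the same block share one sub-utility."""
    def u(x: Sequence[float]) -> float:
        return sum(math.log1p(sum(x[j] for j in block)) for block in blocks)
    return u

def marginal_utility(u: Utility, x: Sequence[float], j: int, h: float = 1e-6) -> float:
    """Forward-difference approximation of dU/dx_j at x."""
    bumped = list(x)
    bumped[j] += h
    return (u(bumped) - u(x)) / h

if __name__ == "__main__":
    nested = make_nested_utility([[0, 1]])  # goods 0 and 1 belong to the same block
    for x in ([1.0, 0.0], [1.0, 3.0]):
        print(x,
              round(marginal_utility(additive_utility, x, 0), 3),  # 0.5 in both cases
              round(marginal_utility(nested, x, 0), 3))            # 0.5, then 0.2
```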

The approach proposed here has a number of limitations which suggest opportunities for future research. For example, our agents’ decision making is inherently static; one could potentially generalize this model to allow for satiation across choice occasions as well as within and across blocks. Our results are based on particular functional forms and heterogeneity distributions; better alternatives may remain undiscovered. Finally, it might be possible to estimate the utility tree jointly with the parameters.

We hope this paper will motivate empirical researchers in marketing, economics, transportation and other fields to explicitly consider direct utility interdependencies among goods when modeling demand. As more and larger choice datasets become available, we will need further methodological work to formulate more flexible yet tractable theory driven specifications that capture how one good’s consumption may impact another’s contribution to utility.

References

Barten, Anton P. 1977. The systems of consumer demand functions approach: a review. Econometrica 45 (1) 23–50.

Ben-Akiva, M. E., S. R. Lerman. 1985. Discrete Choice Analysis: Theory and Application to Travel Demand. MIT Press, Cambridge, MA.

Bhat, C. 2008. The multiple discrete-continuous extreme value (MDCEV) model: Role of utility function parameters, identification considerations, and model extensions. Transportation Research Part B 42 274–303.

Bhat, C. R., A. R. Pinjari. 2010. The generalized multiple discrete-continuous extreme value (GMDCEV) model: Allowing for non-additively separable and flexible utility forms. Technical paper, Department of Civil, Architectural & Environmental Engineering, The University of Texas at Austin.

Brown, M., D. Heien. 1972. The S-branch utility tree: A generalization of the linear expenditure system. Econometrica 40 (4) 737–747.

Chintagunta, Pradeep, Harikesh Nair. 2011. Discrete choice models of consumer demand in marketing. Marketing Science 30 (6) 977–996.

Chintagunta, Pradeep K., Jean-Pierre Dubé, Khim Yong Goh. 2005. Beyond the endogeneity bias: The effect of unmeasured brand characteristics on household-level brand choice models. Management Science 51 (5) 832–849.

Deaton, Angus, John Muellbauer. 1980. Economics and Consumer Behavior. Cambridge University Press, Cambridge, UK.

Dubé, J.-P. 2004. Multiple discreteness and product differentiation: the demand for carbonated soft drinks. Marketing Science 23 (1) 66–81.

Gentzkow, M. 2007. Valuing new goods in a model with complementarities: Online newspapers. American Economic Review 97 (3) 713–744.

Gorman, W. M. 1959. Separable utility and aggregation. Econometrica 27 (3) 469–484.

Hanemann, W. M. 1984. Discrete/continuous models of consumer demand. Econometrica 52 (3) 541–561.

Hausman, Jerry, Gregory Leonard, J. Douglas Zona. 1994. Competitive analysis with differentiated products. Annals of Economics and Statistics 34 159–180.

Hendel, I. 1999. Estimating multiple-discrete choice models: An application to computerization returns. Review of Economic Studies 66 423–446.

Kass, Robert E., Adrian E. Raftery. 1995. Bayes factors. Journal of the American Statistical Association 90 773–795.

Kim, Jaehwan, Greg M. Allenby, Peter E. Rossi. 2002. Modeling consumer demand for variety. Marketing Science 21 (3) 229–250.

Lancaster, Kelvin. 1971. Consumer Demand: A New Approach. Columbia University Press, New York.

Manski, C. F., L. Sherman. 1980. An empirical analysis of household choice among motor vehicles. Transportation Research A 14 349–366.

Rossi, Peter E., Robert McCulloch, Greg M. Allenby. 1996. The value of purchase history data in target marketing. Marketing Science 15 321–340.

Sato, K. 1967. A two-level constant-elasticity-of-substitution production function. The Review of Economic Studies 34 201–218.

Satomura, Takuya, Jaehwan Kim, Greg M. Allenby. 2011. Multiple-constraint choice models with corner and interior solutions. Marketing Science 30 (6) 977–996.

Song, Inseong, Pradeep K. Chintagunta. 2007. A discrete-continuous model for multicategory purchase behavior of households. Journal of Marketing Research 44 (4) 595–612.

Train, Kenneth E., Daniel L. McFadden, Moshe Ben-Akiva. 1987. The demand for local telephone service: A fully discrete model of residential calling patterns and service choices. RAND Journal of Economics 18 (1) 109–123.

Vásquez, Felipe, Michael Hanemann. 2008. Functional forms in discrete/continuous choice models with general corner solution. Working Paper, Universidad de Concepción.


A Jacobian derivation

The elements of the Jacobian matrix J are given by the derivatives of each of the elements of { ns : n ∈ N_s^+, n > m_s, s ∈ S^+ } and { ε s