Attribute Based Consideration Sets

advertisement

Attribute Based Consideration Sets

by

Timothy J. Gilbride1 and Greg M. Allenby

Fisher College of Business

2100 Neil Avenue

Ohio State University

Columbus, OH 43210

Phone: (614) 688-8163

Fax: (614) 292-0879

gilbride.1@osu.edu

allenby.1@osu.edu

October, 2002

Acknowledgements

The authors thank Mike Lotti and Eastman Kodak Company for providing the data used in this

study.

1

Corresponding author.

Attribute Based Consideration Sets

Abstract

Consumers choose offerings with attributes that provide benefits. In many product categories

there are numerous offerings, each with multiple attributes, resulting in a complex consumer

decision problem. Consideration sets have been proposed as one mechanism for simplifying the

choice process. However, the simplification that consideration sets provide to consumers leads

to complexities in modeling choice, whenever the consideration set is not revealed. We propose

a consideration set choice model in which consumers evaluate products and attributes using a

conjunctive, disjunctive, or compensatory screening process. Bayesian data augmentation and

Markov chain Monte Carlo methods are used to navigate over the unobserved consideration sets

in a discrete choice model. The method is illustrated using a conjoint study of cameras. The

results indicate that 92% of respondents form consideration sets by screening alternatives on one

or more attributes.

Keywords: Conjoint Analysis, Decision Process, Hierarchical Bayes, Revealed Choice

1

Attribute Based Consideration Sets

1. Introduction

As consumers pursue the tasks and interests of their lives, they consider the attributes and

benefits of marketplace offerings, judging if they are worth purchasing. Consider, for example,

an individual purchasing a single-use (i.e., disposable) camera on his/her way to a family

reunion. Attributes of the camera may include: an automatic film advance/rewind; a flip-up flash

to reduce red-eye; look like a real camera; produce pictures that have a date stamp; develop

pictures instantly; let you know that the film has advanced properly; good for group shots. The

evaluation of all single-use cameras on every attribute is an involved process. The individual

may therefore form a subset of offerings with one or more of these attributes that are critical to

them, which are then evaluated in more detail.

Consideration sets have been proposed as a mechanism for simplifying the choice

process. However, the simplification that consideration sets provide to the consumer results in

complexities in studying consumer choice whenever the set is not revealed. For a product

category with J brands, there are 2J-1 different possible sets, among which the choice probability

for an offering varies. If the consideration set is not revealed to the researcher, it must be

integrated out of the expression for the choice probability when specifying the likelihood

function and predicting consumer choice. The difficulty in executing this operation is greatly

reduced when the number of possible sets is small, and, as a result, most empirical studies have

been limited to choice among a small number of choice alternatives.

In this paper we propose a new approach to modeling and estimating consideration sets

that can accommodate alternative models of set formation. Our approach employs the Bayesian

technique of data augmentation, and uses Markov chain Monte Carlo methods to integrate over

2

the unobserved consideration sets. The approach can handle a large number of potential sets,

and facilitates various set formation rules that include overall utility as well as the levels of

specific attributes, employing both conjunctive and disjunctive screening mechanisms.

The organization of the paper is as follows. In section 2 we describe our modeling

approach and describe how it deals with the complexities of estimating consideration set choice

models.

Alternative models of set formation are then discussed in section 3.

Section 4

introduces an empirical example in the context of a choice-based conjoint study for a new

camera type.

Results are presented in section 5 where we find that 92% of the survey

respondents use some form of non-compensatory screening when choosing among the choice

options. Implications and directions for future research are discussed in Section 6.

2. Estimating Consideration Set Models

Consumers use various decision rules or heuristics to simplify complicated decision

tasks. Theoretical and empirical support for the use of consideration sets by consumers can be

found in Payne and Easton (1978) who elicit decision protocols of consumers in supermarkets,

Bettman (1979) who argues that a phased decision process reduces the cognitive demands of the

decision maker, and Bettman and Park (1980) who provide evidence that the formation of the

consideration set is linked to specific attributes and that the final selection is more holistic.

Consideration set models have been applied in a variety of domains, including the analysis of

survey data (Gensch 1987) scanner panel data (Manrai and Andrews 1998), and data collected in

laboratory settings (Johnson and Meyer 1984). Johnson, Meyer, and Ghose (1989) and Andrews

and Manrai (1998) demonstrate that parameter bias and incorrect inferences result when the

decision process is not correctly modeled.

3

Consideration sets induce a complicated structure in the likelihood function. Consider,

for example, a simple discrete choice model without consideration sets. The choice probability

is:

Pr(i) = Pr(Vi + εi > Vj + εj for all j)

= Pr( εj < Vi – Vj + εi for all j)

+∞

=

∫

−∞

Vi − V j + ε i Vi − Vm + ε i

∫ L ∫ f (ε j ) L f (ε m ) dε j L dε m f (ε i ) dε i

−∞

−∞

(1)

where Vi denotes the deterministic portion of utility of choice alternative i, εi is the stochastic

portion, and it is assumed that the stochastic terms are distributed independently. The probit

model is obtained by assuming that the stochastic terms are distributed normal, and the logit

model is obtained when the stochastic terms are distributed extreme value. The discrete choice

probabilities are derived by partitioning the domain of the error space corresponding to a choice

outcome, and integrating the error densities over the corresponding region. The regions for a

simple discrete choice model are indicated it the upper and lower limits of the integrals.

The likelihood for an observed sequence of choices is the product of the choice

probabilities:

l(V | data ) ≡ π (data | V ) = ∏ Pr(i )

(2)

i

The likelihood surface for simple discrete choice models is continuous and globally concave

(Maddala 1983). Small changes in the deterministic portion of utility (V) results in small

4

changes in the limits of integration, and small changes in the resulting choice probabilities.

Moreover, unique values of the deterministic portion exist that maximize the probability of the

observed choices. The regularity of the likelihood surface allows use of gradient-based methods

(e.g., Newton-Raphson) to solve for values of the deterministic portion (V) that maximize the

likelihood.

The presence of consideration sets destroys the regularity of the likelihood function. As

choice alternatives enter and leave the consideration set, the choice probabilities are obtained by

integrating over an error space of changing support.

Adding an alternative (k) to the

consideration set results in an additional factor in equation (1), with an additional stochastic error

(εk) and appropriate limits of integration. The likelihood surface for a consideration set model is

therefore discontinuous, and not necessarily globally concave.

Researchers have historically dealt with the non-regularity of the likelihood surface by

writing the choice probability as the product of a (conditional) second stage choice probability

and a marginal consideration set probability:

Pr(i,C) = Pr(i|C) × Pr(C)

(3)

where C denotes the consideration set that reduces the number of choice alternatives in equation

(1).

This approach, coupled with the assumption that that the values of the deterministic

components {Vi} are constant across the consideration set, results in a smooth and globally

concave likelihood surface.

Andrews and Srinivasan (1995) use this approach to study

consideration sets in consumer choice using scanner panel data, where the probability that an

5

alternative is considered is modeled with a binary probit model. Ben-Akiva and Boccara (1995)

employ a logit model for the consideration set probability.

The estimation of consideration set models using equation (3) has historically been

approached sequentially, sometimes with independent data. Fader and McAlister (1990) use

display and feature data to identify sets of promoted offerings and assume the consideration set is

either the set of previously purchased brands currently on promotion or all previously purchased

brands. Jedidi, Kohli and DeSarbo (1996) and Roberts and Lattin (1991) have respondents

indicate the acceptability of alternatives in a survey. When independent data are available to

identify the acceptability of an offering, the evaluation of consideration set models can employ

standard maximum likelihood techniques.

If independent data are not available to identify the consideration set, it becomes a latent

construct that must be simultaneously estimated with the observed choices. Complications arise

in the evaluation of the choice probabilities because the partition of the error space described in

equation (1) must also be partitioned with respect to the consideration set (see Mehta, Rafiv and

Srinivasan, 2002).

Only one realization of the error vector, ε, is associated with each

observation, and it is not appropriate to estimate the two components in equation (3) by assuming

that each are associated with a different realization. Such an assumption leads to a non-identified

model specification unless additional assumptions are made to avoid associating each

observation with two errors. The complexity of the evaluation is aggravated by the 2J-1 different

sets that are possible when J offerings are studied. Researchers have therefore tended to focus on

a small number of choice alternatives (J < 4) where the effects of the consideration sets are not as

pronounced.

6

An alternative to partitioning the error space is to directly estimate the probability of all

consideration sets. Chiang, Chib and Narasimhan (1999) consider equation (3) as a hierarchical

Bayes model, where the 2J-1 sets are assigned a prior distribution and posterior estimates of set

composition are obtained.

While this approach avoids the complications involved with

identifying suitable partitions of the error space to derive choice probabilities, the consideration

sets are not linked to the characteristics of the offerings other than through the fact that the

chosen brand is an element of the set. Consideration sets with high posterior probabilities

comprise offerings that have been chosen and exclude offerings that have not been chosen.

Our model structure allows for consideration set formation based on the attributes of the

choice alternatives and/or the overall value of the offering, and can employ either conjunctive

(i.e., and) or disjunctive (i.e., or) rules for combining these components. We navigate the

complicated partitioning of the error space by employing data augmentation and Markov chain

Monte Carlo methods. We next describe the use of data augmentation to evaluate the standard

probit model in equation (1) above. We then show how this method can be used to estimate

choice models with consideration sets.

Data Augmentation

Direct evaluation of the probability in equation (1) can be avoided in a Bayesian

approach to estimation if the parameters {V} are augmented with a vector of latent variables, z

(see Tanner and Wong 1987), where

zi = Vi + εi ,

εi ~ Normal(0,1)

(4)

7

and the model is written hierarchically:

y |z

(5a)

z|V

(5b)

where y denotes the observed choice outcomes. Equation (5a) reflects the censoring of the

continuous variables z, where only the offering with the maximum value of z is revealed to the

researcher by the choice data. Equation (5b) reflects the assumption that the latent variables are

normally distributed, with the mean of each element equal to Vi and variance one. In marketing

applications of the probit model, the mean is typically parameterized Vi = xi'β. Markov chain

Monte Carlo estimation of the probit model proceeds by drawing iteratively from the two

conditional distributions:

π(z|y,V) = Truncated Normal (V,I)

(6a)

π(V|z)

(6b)

= Normal( z , I/n)

where "I" is the identity matrix, and the truncation in equation (6a) corresponds to the alternative

selected being the maximum draw. If Vi is parameterized as xi'β then the mean and variance in

equation (6b) would correspond to the OLS regression estimate and covariance matrix. Detailed

treatments of estimating the probit model with data augmentation can be found in Albert and

Chib (1993), McCulloch and Rossi (1994), Allenby and Rossi (1999) and Edwards and Allenby

(2002).

8

The upper limits of the integral in equation (1) that complicate the evaluation of the

choice probability are replaced with a much simpler procedure that involves drawing from a

truncated normal distribution. The truncation points correspond to the limits of integration, and

the Markov chain is used to navigate the domain of the error space consistent with the observed

data to arrive at the posterior distribution of model parameters.

Thus, data augmentation

provides a clever way of avoiding the estimation of the integral.

Application to Consideration Set Models

The consideration set restricts the set of alternatives that are evaluated for final selection.

The considered choice alternatives are identified with an indicator function, I(x,γ), that equals

one if the decision rule is satisfied and equals zero otherwise. The choice model can then be

written in general form as:

Pr(i) = Pr(Vi + εi > Vj + εj for all j such that I(xj,γ) = 1)

(7)

where xj denotes a generic argument of a function that reflects the decision rule applied to the jth

offering. For example, if price is used to screen the choice alternatives, so that only offerings

above a lower threshold are considered, then I(xj,γ) = I(pricej > γ). If an upper threshold for price

is present, then negative price can be used as an argument, I(xj,γ) = I(-pricej > γ).

This

formulation allows for dynamic consideration sets when an attribute (e.g., price) changes over

the choice history. Moreover, as described below, the indicator function can be specified with

multiple attributes and alternative decision rules.

9

If an alternative is in the consideration set, then its choice probability is determined

relative to the other offerings in the set. If an alternative is not in the consideration set, then its

choice probability is zero. The choice model can be written hierarchically as:

y|z,I(x,γ)

(8a)

z|V

(8b)

where zi = Vi + εi, and estimation proceeds by drawing iteratively from the conditional

distributions:

z|y,V,I(x,γ)

(9a)

γ|y,z,x

(9b)

V|z

(9c)

The conditional distribution in equation (9a) is a truncated normal distribution for those

items in the consideration set. That is, the element of z corresponding to the chosen alternative is

greater than the elements of z that correspond to the alternatives not chosen, given that they are

in the consideration set. If an alternative is not in the consideration set, which is determined by

the indicator function I(x,γ), then z is drawn from a non-truncated distribution. Here we assume

I(x,γ) does not involve the deterministic component of utility, V; changes to the hierarchy to

accommodate this decision rule are discussed below.

10

The conditional distribution for the cutoff parameters, γ, in equation (9b) is dependent on

the observed data, y, and the augmented parameter, z. The conditional distribution takes the

form of an indicator function because equation (8a) is a deterministic function that censors the

continuous latent variables z, identifying the considered offering with the highest value.

Variation in the value of the cutoff parameter changes the set of considered alternatives.

Permissible values of the cutoff parameters are those that lead to a consideration set where the

maximum of the augmented variable, z, corresponds to the observed choice. If a value of the

cutoff leads to a consideration set where the alternative with the maximum z is not the alternative

chosen, then the cutoff parameter is not permissible. This can happen because some values of

the augmented parameter in equation (9a) are drawn from a truncated distribution while others

are not. Hence, variation in z results in different consideration sets by defining the permissible

values of the cutoffs.

Finally, the conditional distribution in equation (9c) is identical to equation (6b).

Standard normal distribution theory can be used to generate the draw of V. If V is parameterized

as a regression function, V=X'β, then draws of β are generated from a normal distribution with

mean and covariance matrix corresponding to the standard OLS model. Details of the estimation

routine for our empirical study, reported below, are provided in the appendix.

The Markov chain defined by equations (9a) – (9c) generates draws from the full

conditional distribution of model parameters despite the irregularity of the likelihood surface.

Given the cutoffs, γ, the model (equation 7) becomes a standard discrete choice model. Given

the set of augmented variables, {z}, some values of the cutoff are acceptable and others are not.

Across multiple draws of the augmented variables, however, the acceptable range of γ varies, and

the Markov chain converges in distribution to the full conditional distribution of all model

11

parameters. The augmented variable z reflects a single error term that gives rise to the joint

probability of the consideration set and the resulting choice.

3. Alternative Decision Rules

Alternative models of consideration set formation can be investigated with the indicator

function relationship, I(x,γ) = 1. Flexibility exists in the specification of this relationship because

its complexity does not increase the complexity of estimating the consideration set choice model.

For each of the non-compensatory models described below, the conditional distributions

described in equations (9a) – (9c) are the same, except for the screening rules leading to different

alternatives for consideration in equation (9a). Changes required by the use of a compensatory

model are discussed below.

Consideration sets formed by a conjunctive decision rule requires that an alternative be

acceptable on all relevant attributes for it to be included in the consideration set. A conjunctive

rule is formed by multiplying indicator functions across the attributes (m) of an offering:

Conjunctive Rule:

Πm I(xjm > γm) = 1

(10)

where xjm is the level of attribute m for choice alternative j. The cutoff value, γm, is the smallest

level of the attribute that needs to be present for the decision maker to consider the offering. If

the cutoff value is smaller than all levels of the attribute, then the attribute is not used to screen.

For nominally scaled attributes (i.e., 0,1 attributes), a cutoff value greater than zero indicates that

the attribute is required.

Use of the multiplicative function, Πm, results in a conjunctive

12

combination of the factors where all components must be satisfied for the combination to be

satisfied.

A disjunctive decision rule is one where at least one of the attribute-levels is acceptable:

Disjunctive Rule:

Σm I(xjm > γm) ≥ 1

(11)

and a compensatory decision rule is one where the deterministic portion of the utility of the

offering must exceed a threshold value to be acceptable.

Compensatory Rule: I(Vj > γ) = 1

(12)

The compensatory decision rule requires a change in the hierarchical model. Since the

deterministic portion of utility, V, is used to determine both the consideration set and the final

choice, the hierarchy is:

z|y,V,I(V,γ)

(9a')

γ|y,z,V

(9b')

V|z ,y

(9c')

The exact form of these conditional distributions and the estimation algorithm is contained in the

appendix.

In addition to the form of the decision rule, researchers can specify that the cutoff

parameters (γ) take on a limited range of values. If an attribute, x, is continuous (e.g., price),

13

then γ can also be continuous. However, if the attribute takes on a restricted number of values,

then the cutoff is not well identified over an unrestricted range. In a conjoint analysis, for

example, there are rarely more than four or five levels of an attribute. In these cases one can

assume that the cutoff parameter takes on a small number of finite values by a prior assumption

that γ is distributed multinomial over a grid of values, and estimating the posterior distribution of

the multinomial mass probabilities. We explain this approach in the context of our empirical

application next.

4. Data and Models

The consideration set model is illustrated in the context of a discrete choice conjoint

study of consumer preferences for the features of a new camera format, the Advanced Photo

System (APS). This photographic system offers several potential advantages over standard

35mm film systems, including mid-roll film change, camera operation feedback, and a magnetic

layer on the film that offers the ability to annotate the picture with titles and dates. A more

complete description of the data is available in Allenby et. al. (2002).

Respondents were screened and recruited by telephone, and interviewed at field locations

in nine metropolitan areas. A total of 302 respondents participated. The screen was designed to

exclude individuals who had little or no interest in photography, and retained approximately 55%

of the population contacted. This compares to approximately 67% of the population that owns

still cameras (Simmons, 1997). Individuals participating in the study were therefore assumed to

be good prospects for cameras in the price range of the new APS offerings, and representative of

the APS camera market.

14

Respondents were asked to evaluate 14 buying scenarios. Each scenario contained full

profile descriptions of three compact 35mm cameras, three new APS cameras, and a seventh no

buy option. Table 1 lists the attributes that describe each of the cameras, and identifies the

attributes that are new and specific to APS cameras. Table 1 also displays restrictions on the

availability of certain attributes. For example, the 4× zoom lens was available only on the "high"

body style. Prices ranged from $41.00 to $499.00, the average price for APS cameras was $247,

and the average price of the compact 35mm cameras was $185.

== Table 1 ==

Each buying scenario requires the respondent to engage in a complex decision. With six

offerings described on eight attributes, plus the no-buy option, we consider this to be a good

dataset to investigate the formation of consideration sets. The no buy option was selected 14.7%

of the time or an average of 2.1 times in the 14 choice tasks. One of the APS camera offerings

was chosen 39.9% of the time, and one of the 35mm cameras was selected 45.4% of the time. In

the analysis that follows, 12 choice tasks per respondent were used to calibrate the model and the

final two retained for predictive testing. This results in 3,624 observations for model estimation

and 604 for hold-out validation.

Five models are fit to the data. In each model we make the assumption that the no buy

option is always in the consideration set. The first model is a heterogeneous probit model that

assumes all choice options are considered by the respondent. For identification, effects-level

coding is used for the non-price attributes, with the lowest level of each attribute set to zero,

except for body style. For the no buy option, all attributes are set to zero. Price is parameterized

as a natural logarithm. The effects-level coding results in 18 parameters, 17 corresponding to

binary indicator variables and the 18th corresponding to ln(price). In order to fix the scale in the

15

probit model, the error term is assumed to be independent and identically distributed across

choice tasks with unit variance. The latent continuous variable in our model setup is therefore:

Pr(j)hi = Pr(zhij > zhik for all k)

(13)

zhi = Xhiβh+ εhi,

(14)

εhi ~ N(0,I)

βh ~ Normal( β , Σβ)

(15)

where h indexes the respondent, i is the index for the buying scenario, and j and k indicate the

choice alternatives within the buying scenario.

The second model assumes that the consideration set comprises choice alternatives with

the deterministic value of utility (Vhi = Xhiβh) greater than a threshold value, γh. That is, the

selected alternative has greatest utility of the options for which Vhik > γh:

Pr(j) hi = Pr(zhij > zhik for all k such that I(Vhik > γh) = 1)

(16)

We allow for heterogeneity in the cutoff value, γh through the normal distribution:

γh ~ Normal( γ , σ γ2 )

(17)

The third model assumes that the consideration set is formed using a conjunctive decision

rule over the m attributes for each alternative:

Pr(j) hi = Pr(zhij > zhik for all k such that Πm I(xhijm > γhm) = 1)

16

(18)

where xhijm is the level of the attribute for respondent 'h' in buying scenario 'i' for alternative 'j'

and attribute 'm'. γhm is a respondent-level parameter representing the threshold, or acceptable

level, of attribute 'm' for respondent 'h'. We employ two alternative heterogeneity distributions

for the threshold parameters. When attribute m is continuously distributed (e.g., price), then we

employ a normal distribution to allow for heterogeneity:

γhm ~ Normal( γ m , σ m2 )

(19)

When an attribute takes on a small number of discrete levels we assume that the threshold

parameter is distributed multinomial. For example, consider the zoom lens attribute in Table 1

taking on values of none, 2x and 4x. We recode the attribute levels (e.g., 0,1,2) and specify a grid

of possible cutoffs (e.g., -0.5, 0.5, 1.5, 2.5) where the lowest cutoff value indicates that all

attribute-levels are acceptable, and the highest level indicates that none of the levels are

acceptable. For nominally scaled attribute-levels, where a prior ordering is not obvious (e.g.,

color), the grid has three points (-0.5, 0.5, 1.5). The full set of attributes, levels, and cutoffs is

contained in Table 5. The distribution of heterogeneity of the cutoff parameters with discrete

levels is:

γhm ~ Multinomial(θm)

(20)

17

where θm is the vector of multinomial probabilities associated with the grid for attribute m.

The fourth model assumes that the consideration set is formed using a disjunctive

decision rule:

Pr(j) hi = Pr(zhij > zhik for all k such that Σm I(xhijm > γhm) ≥ 1)

(21)

The upper and lower grid points specify the role of the attribute in determining the

consideration set, depending on the decision rule. An attribute determines membership in the

consideration set only if it changes the value of the alternative indicator function. For the

conjunctive model, if the threshold for attribute k is equal to –0.5, then I(xhijk > γhk) always

equals one and the value of Πm I(xhijm > γhm) does not change. Since different levels of the

attribute do not affect consideration sets, we conclude that attribute k is not being used to screen

alternatives. For the disjunctive model, if γk = 2.5, then I(xhijk > γhk) always equals zero and the

value of Σm I(xhijm > γhm) does not change across different levels of attribute k.

For the

disjunctive model, the upper value of the threshold implies no screening based on that attribute.

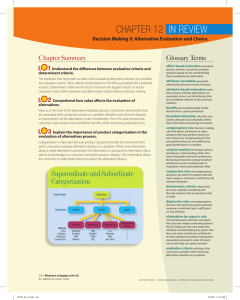

Figure 1 illustrates the procedure and the interpretation.

== Figure 1 ==

The fifth model assumes that consumers are either using a conjunctive (equation 18) or a

disjunctive (equation 21) decision rule.

That is, we allow for the presence of structural

heterogeneity (Yang and Allenby, 2000):

Pr(j) hi = φPr(zhij > zhik for all k such that Πm I(xhijm > γhm) = 1) +

(1-φ)Pr(zhij > zhik for all k such that Σm I(xhijm > γhm) ≥ 1)

18

(22)

where φ is the portion of the sample using the conjunctive decision rule and (1-φ) is the portion

using the disjunctive decision rule.

Inference is conducted via Bayesian MCMC methods. The chain converged quickly in

all models. Convergence was assessed by inspecting time series plot from multiple starting

points. The chain was run for 10,000 iterations with the final 5,000 iterations used to estimate the

moments of the posterior distributions. Details of the estimation algorithms for all models are

provided in the appendix.

5. Results

The results indicate that the conjunctive model fits the data best.

In sample and

predictive fits of the five models are presented in table 2. The log marginal density is computed

using the GHK estimator (Keane, 1994, Hajivassilious, McFadden and Rudd, 1996) and the

importance sampling method of Newton and Raftery (1994). Hit probability is defined as the

average probability associated with the observed choices. Hit frequency is the number of times

the predicted choice matches the actual choice.

The parameters reported below for the

conjunctive model result in 30% of the alternatives screened out of the final consideration set,

which averaged five (out of seven) alternatives including the no buy option.

Fit results for the other models are as follows. The fit for the disjunctive model is slightly

worse than the standard probit model, with parameters that lead to only 2% of the alternatives

screened from final consideration. The slight decrease in the log marginal density for the

disjunctive model is from the increase in the dimension of the model due to the cutoff

parameters. Although the compensatory model screened out 23% of the alternatives to form the

19

consideration set and has a slightly better in-sample fit than the probit model, its predictive

accuracy is nearly identical.

The structural heterogeneity model assigned nearly all the

respondents (99%) to the conjunctive consideration set model as opposed to the disjunctive

model, so the fit statistics closely match those of the conjunctive model. The results provide

evidence that the conjunctive model is superior to both the standard probit and non-conjunctive

models.

Tables 3 and 4 report part-worth estimates for the probit and conjunctive models. Table 3

displays the mean and covariance matrix of the part-worths for the probit model, and table 4

reports the same statistics for the conjunctive model. Substantive differences exist in the partworth estimates for body style, zoom, camera setting feedback and price. The conjunctive model

estimates are much smaller for these attributes, with the average price coefficient moving to zero.

The reason for this difference is discussed below.

== Tables 3 and 4 ==

The cutoff parameters are reported in table 5. For discrete attributes, the cutoffs are

reported in terms of multinomial point mass probabilities associated with the grid described in

figure 1. The probabilities indicate the fraction of respondents with a cutoff parameter (γ) that

screens out choice alternatives. For the conjunctive model, θ1 = .598 indicates that 59.8% of the

respondents are not screening alternatives based on "Body Style," θ2 = 0.324 implies that 32.4%

of the respondents screen out offerings with the low body style, θ3 =0.059 implies that 5.9% of

the respondents screen out offerings with low and medium body style, and θ4=0.015 implies that

1.5% of the respondents screen on all alternative levels. Estimates of θ equal to approximately

2% reflect the influence of the prior distribution and can be disregarded for practical purposes.

== Table 5 ==

20

The use of screening rules to form consideration sets is pervasive among the respondents

in this research. Analysis of the individual-level cutoff values indicates that 92% of respondents

form consideration sets using the conjunctive screening rule; 58% are forming consideration sets

based on a single attribute, 33% are using two attributes, and 2% are using 3 attributes. A

strength of the Bayesian approach outlined in this paper is the ability to make individual level

inferences and estimate not only what attributes are important in forming consideration sets but

also who is forming consideration sets.

Consider, for example, an aggregate analysis of the effect of the price threshold,

estimated to be equal to –6.269. This corresponds to an average price threshold of $527. Since

the maximum price of an APS camera is $499, this implies that, on average, respondents are not

screening on price. However, such analysis masks the tail behavior of the distribution of cutoff

values. As displayed in figure 2, 10% of the respondents will only consider cameras under $276,

20% will only consider cameras under $356, etc. In all, 41% of the respondents are screening

alternatives based on price.

== Figure 2 ==

Figure 3 displays the proportion of respondents screening on each of the attributes. Body

style, zoom, camera settings feedback and price are the attributes most often used to form the

consideration set. These attributes are available on both 35mm and APS cameras, and as a result

respondents are more fully aware of their benefits. In contrast, none of the new APS attributes

(e.g., mid-roll film change) are being used to form consideration sets. In addition, the attributes

used to form consideration sets have substantively different part-worths in tables 3 and 4. Our

results indicate that once the consideration set is formed, the price and body style do not play a

role in the final decision. The role of the zoom and camera feedback features however, continue

21

to play a role in the final selection of a camera beyond just forming consideration sets. Previous

authors have hypothesized that an attribute plays either a role in the screening phase or the

choice phase (Bronnenberg and VanHonacker, 1996) or that once an attribute is deemed

acceptable it will play no role in the final selection process (see Bettman, 1979); these results

provide evidence on this issue.

== Figure 3 ==

The participants in the survey were recruited and screened so that they were a prospect

for cameras in the price range of the APS offering. Despite this pre-qualification, some 41% of

respondents used price to form consideration sets and excluded those cameras above a particular

threshold. Exploratory analysis revealed no relationship between the posterior estimate of the

price threshold value and demographic variables including income. However, replies to the

question "Typically, when you attend informal social gatherings away from your home, how

often do you take a camera along with you?" were associated with the price threshold (p-value =

.0362). Those respondents that indicated they "almost always" take their camera (44% of

respondents) have an average price threshold $68 higher than respondents who take their camera

less frequently. Although exploratory in nature, this analysis shows that other variables correlate

with the price threshold and may be used in conjunction with the model to better identify

prospects in the market place.

The empirical results support the conjunctive consideration set model. Better in-sample

and out of sample fit despite the large increase in the number of parameters argue for the use of

the consideration set model over the more parsimonious probit. Consumers tend to screen

alternatives using attributes they are already familiar with and an exploratory analysis was

conducted to demonstrate how the price threshold can be associated with other variables in a

22

posterior analysis. After controlling for their effect in forming consideration sets, the importance

of the screening attributes in the final choice among acceptable alternatives is smaller than that

indicated by a standard probit model.

6. Discussion

In this paper we introduce a model of attribute based consideration sets and estimate it

with data from a discrete choice conjoint study.

We find support for the formation of

consideration sets and for the conjunctive model in particular. The use of consideration sets is

pervasive with 92% of respondents using this heuristic to manage the complexity of the choice

problem. The empirical results also show that consumers screen alternatives using attributes that

are well known as opposed to the new and novel attributes also included in this study. Some

attributes appear to be only used in forming consideration sets, while others are used in both

stages of the model: screening and final product choice.

Although previous research has

documented the improved fit of consideration set models and statistical biases that result from

ignoring them, our method can apply a variety of screening rules and handle realistic sized

problems. Our ability to capture a non-compensatory screening process and make attribute level

inferences is due to the unique model formulation and estimation technique.

A key element in our model formulation is accurately navigating the likelihood of the

data where only the final choice is observed. Past researchers have attempted to isolate the

consideration set probability Pr(C) in equation (3) to create a smooth likelihood surface. This

approach requires a complex partition of the error space corresponding to the 2J-1 consideration

sets and the choice probability within each set (see Mehta, Rajiv and Srinivasan 2002).

Alternatively, the consideration set probability can be estimated by treating each of the 2J-1

23

partitions as a model parameter (see Chiang, Chib and Narasimhan 1999). Our approach is to

not marginalize the consideration set probability, but to specify the joint event of consideration

set formation and choice, Pr(i,C). The advantage of this approach is that it allows us to model

consideration set formation based on various compensatory and non-compensatory decision

rules.

This research highlights the benefits of and the need for more research on extended

models of choice (Ben-Akiva, et. al. 1999). In this research we have demonstrated that the data

is consistent with a two-stage decision process utilizing decision heuristics to form consideration

sets. In an exploratory analysis we show that the level of the price threshold was related to a

camera usage question thought to encompass varying concerns and interests related to pursuing

photography. It may very well be that the use of any decision heuristic, which decision heuristic

(conjunctive or disjunctive), and/or which attributes to screen are also related to individual

concerns and interests that may vary within an individual across usage occasions or choice

contexts. For example, choosing a camera as a gift for a loved one may result in more effortful

decision making and less reliance on decision heuristics; choosing a single use camera to take to

the beach may be entirely characterized by a lexographic decision process (e.g. the cheapest).

The model proposed in this paper permits testing of various decision-making processes using

revealed choice data.

The prevalence and improved fit of the conjunctive consideration set model illustrates the

important role that individual attributes play in consumer choice processes. This paper and

previous research demonstrate the importance of two-stage decision making and consideration

sets in consumer choice processes (see Shocker, Ben-Akiva, Boccara, and Nedungandi, 1991).

Our study extends this research and examines competing theories of compensatory, conjunctive,

24

and disjunctive consideration set formation within a single dataset and provides evidence in

favor of the conjunctive model.

The conjunctive model eliminates an alternative that is

unacceptable on any single attribute regardless of the other elements in the marketing mix. The

behavioral decision making literature hypothesizes that consumers make either brand based

decisions or attribute based decisions (Bettman, Luce, and Payne, 1998).

Brand based or

alternative based decisions involve an evaluation of all the attributes of an alternative to

determine its acceptability. Attribute based decisions permit the elimination of an alternative

based on a single attribute. The support for the conjunctive consideration set model indicates

that, at the consideration stage, consumers are making screening decisions based on the levels of

the product attributes.

Several improvements on the existing model are possible. First, attribute level thresholds

or the propensity to use one decision process over another in the structural heterogeneity model

can be parameterized as functions of explanatory variables. The Bayesian hierarchical model can

then be expanded by another level. In this data, there was very little evidence that consumers

used a disjunctive decision rule to form consideration sets. It may be that more than twelve

observations per individual are necessary to identify the parameters in this very flexible model.

The methodology presented in this paper can be extended to other choice contexts. The

conjoint exercise used in this paper involved six alternatives described on eight attributes plus a

"none" option. Conjoint researchers may be interested to know if the number of alternatives or

attributes influences respondents' use of consideration sets; this question can be studied using the

proposed model and an experimental design that varies the number of attributes and alternatives.

The model can also be applied to scanner panel datasets or any other panel dataset that captures

25

repeated purchase behaivor. Such a study would reveal the importance of individual attributes

and/or the use of consideration sets in an actual market setting.

Managers frequently define markets based on particular attributes (e.g. body style) or

price points, recognizing that certain consumers will only consider offerings with specific

attribute-levels, regardless of other elements of the product offering. Past methods of identifying

these attributes and levels have tended to rely on compensatory models that may not be a good

representation of the decision process. While compensatory models can often predict as well as

non-linear models, inferences for strategic decisions are often biased. Our model overcomes the

statistical and practical problems of estimating models of attribute based consideration sets.

26

Appendix: Estimation Algorithm

Equations (9a) – (9c) list the conditional distributions for estimating the choice model in (8a) –

(8b). The estimation for the conjunctive screening process, equation (10) is described first.

Extension to the disjunctive process, equation (11) is straightforward. Changes necessary to

estimate the compensatory process, equations (12) and (9a') – (9c') are then described. The

standard hierarchical Bayes probit model (Rossi, et. al. 1996) and structural heterogeneity

models (Yang and Allenby , 2000) have been described elsewhere. The augmented data problem

for the conjunctive model is:

yhij=1 if zhij > zhjk for all k such that I(xhik,γh)=1

(A1)

zhij = x 'hij βh+ εhij

(A2)

ε ~ N(0,σε=1)

I(xhij,γh)=1 if ∏m[I(xhijm > γhm)] = 1

(A3)

Where 'h' indexes the respondent, 'i' the choice set, 'j' the alternative in the choice set, and 'm' the

attributes in each alternative. Heterogeneity on the individual household parameters is introduced

hierarchically with the following distributions:

βh ~ MVN( β , Σβ)

γhm ~ Multinomial(H, θm1, …..,θmn) for discrete attributes γ1, … γ10

γh11 ~ N( γ , σ γ2 ) for γ11 a continuously scaled attribute

The hierarchy is completed by specifying the prior distributions on the hyper-parameters:

β ~ MVN(0,100I)

Σβ ~ IW(ν,∆)

θm ~ Dirichlet(α)

for m = 1,…,10 corresponding to discrete attributes

γ ~ N(0,100)

σ 2γ ~ IG(a,b)

for γ11, continuous attribute

where IW is the inverted Wishart distribution, ν = k+8, and ∆ = νI. θm is a vector of dimension

'n' where 'n' indexes the possible values for γm, and α is a conforming vector with each element

set equal to 6. IG is the inverted gamma distribution and a=10 and b=.1.

A Gibbs type sampler is constructed to draw from the conditional posteriors described in (9a) –

(9c) as well as the distributions for heterogeneity.

27

1. Generate zhi|yhi, Xhi, I(Xhi,γh), βh, σε=1 for i=1, …. I and h=1,…,H

Start with j=1,

if yhij = 1 then zhij ~ TN(xhij'βh, σε=1, zhij > zhjk for all k such that I(xhik,γh)=1)

if yhij=0 and I(xhij,γh)=1 then zhij ~ TN(xhij'βh, σε=1, zhij < zhim, where yhim=1)

else zhij ~ N(xhij'βh, σε=1)

increment j and return to top.

2. Generate γhm|zh, yh, Xh for m = 1, ….10 (discrete attributes) and h=1,…,H

a

Conditioned on the current values for zh and the observed data, the set of allowable γ hmn

p

must be identified from the set of possible γ hmn

. Here, 'n' indexes the number of possible

a

values of γ for each attribute as listed in table 5 (e.g. n=4 for Body Style). Let I( γ hmn

)=1

indicate that γhmn is a member of the set of allowable γhm.

Start with n = 1, test each j=1,…J, i=1,….I

a

If yhij = 1 and ∏m[I(xhijm > γhm*)] = 0, then I( γ hmn

)=0

If yhij = 0 and ∏m[I(xhijm > γhm*)] = 1, then

a

)=0

If zhij > zhik where yhik=1, then I( γ hmn

a

)=1

Else, I( γ hmn

increment n and return to the top.

p

a

Here γhm* includes γ hmn

for the appropriate attribute. Now choose γhm from γ hmn

using a

Metropolis-Hastings type step:

a

γhm = γ hmn

with probability =

a

I(γ hmn

)θ mn

a

∑ I(γ hmn )θ mn

n

This is an example of the "Griddy Gibbs" as described by Tanner (1993).

28

3.

Generate γh11|zh, yh, Xh for (continuous attribute) h=1,…,H

This is a more traditional random walk Metropolis-Hastings step, but the candidate γ (n)

h11 ,

must be checked to make sure it is a member of the allowable set.

(o)

Let γ (n)

h11 = γ h11 + e where e ~ N(0, σe=.5). The value σe is chosen to ensure the acceptance

rate is approximately 50%. γ (o)

h11 is the value of γh11 from the previous iteration of the

Gibbs sampler.

(n)a

(n)

(o)

Determine I( γ (n)a

h11 ) following the steps outlined in 2; if I( γ h11 ) = 0, then γ h11 = γ h11 . If

(n)

I( γ (n)a

h11 )=1, then accept γ h11 with probability:

min:

2

exp[-(2σ 2γ ) −1 ( γ − γ (n)

h11 ) ]

,1

2

exp[-(2σ 2γ ) −1 ( γ − γ (o)

h11 ) ]

4. Generate βh | Xh, zh for h=1, …, H

βh ~ MVN(b, (Xh ‘Xh + Σβ-1)-1)

b = (Xh ‘Xh + Σβ-1)-1 (Xh‘zh + Σβ-1 β )

5. Generate β | {βh}, Σβ

β ~ MVN( b , ((Σβ/H)-1 + (100I)-1)-1)

H

b = ((Σβ/H)-1 + (100I)-1)-1)( Σβ-1 ∑ β h + (100I)-1(0))

h =1

6. Generate Σβ | {βh}, β

Σβ ~ IW(ν + H ,∆ +

H

∑ (β

h =1

h

− β )′( β h − β ) )

29

7. Generate θm| {γhm} for m=1,…,10 (discrete attributes)

Define shmn = 1 if γhm = γhmn or shmn = 0, otherwise. Then,

θm ~ Dirichlet(

H

∑s

h =1

H

hm1 +α1, …., ∑ s hmn +αn)

h =1

See Allenby, Arora, and Ginter (1998) for additional details on drawing θm.

8. Generate γ | {γh11}, σ 2γ

γ ~ N(c, d)

H

γ h11

2

∑

σγ

100

h =1

(0) + 2

c = 2

σ + 100

σ γ + 100 H

γ

d=

100σ 2γ

σ 2γ + 100

9. Generate σ 2γ |{γh11}, γ

H

σ ~ IG(H/2 + a, {b + ½ ∑ (γ h11 − γ ) 2 }-1 )

2

γ

h =1

We now describe the changes necessary to estimate the compensatory consideration set model.

In our application of the model, the deterministic component of utility Vhij = xhij'βh and (A3)

from above equals:

I(xhij,γh)=1 if I(xhij'βh > γh) = 1

(A3')

where γh is a continuous scalar for each household with heterogeneity and prior distributions:

γh ~ N( γ , σ γ2 )

γ ~ N(0,100)

σ 2γ ~ IG(a,b)

Steps 3 and 7 from the previous procedure are no longer needed. Only steps 2 and 4 need to be

altered:

30

2a.

Generate γh|zh, yh, Xh, βh for h=1,…,H

Again, conditioned on the current values of zh and βh and the observed data, the key is to

draw γh from the set of allowable values. The allowable values of γh define consideration

sets such that the chosen alternative always has the maximum value of z.

If yhij = 1 then xhij'βh > γh; let B* = min(xhij'βh for all i and j where yhij = 1)

If yhij = 0 and zhij > zhik where yhik = 1, then xhij'βh < γh;

let A* = max(xhij'βh for all i and j with yhij = 0 and zhij > zhik where yhik = 1)

Then A* < γh < B* defines the allowable range for γh. A* requires that all chosen

alternatives be part of the consideration set. B* requires that any alternative that is not

chosen but has a value of z greater than the chosen alternative, be excluded from the

consideration set.

Following Chib and Greenberg (1995) we perform a Metropolis-Hastings step by

drawing γh directly from the allowable range of the prior; in our case the prior is the

heterogeneity distribution and we draw from the truncated normal distribution:

γh ~ TN( γ , σ γ2 , A*<γh<B*)

4a. Generate βh | yh, Xh, zh for h=1, …, H

Generate a candidate βh:

β hc ~ MVN(b, (Xh ‘Xh + Σβ-1)-1)

b = (Xh ‘Xh + Σβ-1)-1 (Xh‘zh + Σβ-1 β )

Define A* and B* as in step 2a with β hc . If A* > B* reject β hc , draw a new candidate. If

A*<B* then βh = β hc .

This is an example of acceptance/rejection sampling.

31

References

Albert, James H. and Siddhartha Chib (1993) "Bayesian Analysis of Binary and Polychotomous

Response Data," Journal of the American Statistical Association, 88, 669-679.

Allenby, Greg, Neeraj Arora, Chris Diener, Jaehwan Kim, Mike Lotti, Paul Markowitz (2002)

"Distinguishing Likelihoods, Loss Functions, and Heterogeneity in the Evaluation of

Marketing Models," Canadian Journal of Marketing Research, 20, 44-59.

Allenby, Greg M., Neeraj Arora, and James L. Ginter (1998), "On the Heterogeneity of

Demand," Journal of Marketing Research, 35(August), 384-389.

Allenby, Greg M. and Peter E. Rossi (1999) "Marketing Models of Consumer Heterogeneity,"

Journal of Econometrics, 89, 57-78.

Andrews, Rick L. and Ajay K. Manrai (1998) "Simulation Experiments in Choice Simplification:

The Effects of Task and Context on Forecasting Performance," Journal of Marketing

Research, 35 (May), 198-209.

Andrews, Rick L. and T. C. Srinivasan (1995) "Studying Consideration Effects in Empirical

Choice Models Using Scanner Panel Data," Journal of Marketing Research, 32

(February), 30-41.

Ben-Akiva, Moshe and Bruno Boccara (1995) "Discrete Choice Models with Latent Choice

Sets," International Journal of Research in Marketing, 12, 9-24.

Ben-Akiva, Moshe, Daniel McFadden, Tommy Garling, Dinesh Gopinath, Joan Walker, Denis

Bolduc, Axel Borsch-Supan, Philippe Delquie, Oleg Larichev, Taka Morikawa, Amalia

Polydoropoulou, and Vithala Rao (1999) "Extended Framework for Modeling Choice

Behavior," Marketing Letters, 10, 187-203.

Bettman, James (1979), An Information Processing Theory of Consumer Choice. Reading, MA:

Addison-Wesley.

Bettman, James R., Mary Frances Luce, and John W. Payne (1998) "Constructive Consumer

Choice Processes," Journal of Consumer Research, 25, 187-217.

Bettman, James and C. Whan Park (1980), "Effects of Prior Knowledge and Experience and

Phase of the Choice Process on Consumer Decision Analysis: A Protocol Analysis,"

Journal of Consumer Behaivor, 7, 234-248.

Bronnenberg, Bart J. and Wilfried R. VanHonacker (1996) "Limited Choice Sets, Local Price

Response, and Implied Measures of Price Competition," Journal of Marketing Research,

33 (May) 163-173.

32

Chiang, Jeongwen, Siddhartha Chib, and Chakravarthi Narasimhan (1999) "Markov Chain

Monte Carlo and Models of Consideration Set and Parameter Heterogeneity," Journal of

Econometrics, 89, 223-248.

Chib, Sid and Edward Greenberg (1995) "Understanding the Metropolis-Hastings Algorithm,"

The American Statistician, 49, 4, 327-335.

Edwards, Yancy D. and Greg M. Allenby (forthcoming) "Multivariate Analysis of Multiple

Response Data," Journal of Marketing Research.

Fader, Peter S. and Leigh McAlister (1990) "An Elimination by Aspects Model of Consumer

Response to Promotion Calibrated on UPC Scanner Data," Journal of Marketing

Research, 27 (August), 322-332.

Gensch, Dennis H. (1987) "A Two-Stage Disaggregate Attribute Choice Model," Marketing

Science, 6 (3), 223-239.

Hajivassiliou, V., D. McFadden, and P. Rudd (1996), "Simulation of Multivariate Normal

Rectangle Probabilities and their Derivatives," Journal of Econometrics 72, 85-134.

Jedidi, Kamel, Rajeev Kohli, and Wayne S. DeSarbo (1996) "Consideration Sets in Conjoint

Analysis," Journal of Marketing Research, 33 (August), 364-372.

Johnson, Eric J. and Robert J. Meyer (1984), "Compensatory Choice Models of

Noncompensatory Processes: The Effect of Varying Context," Journal of Consumer

Research, 11, 528-541.

Johnson, Eric J., Robert J. Meyer, and Sanjoy Ghose (1989), "When Choice Models Fail:

Compensatory Models in Negatively Correlated Environments," Journal of Marketing

Research, 26 (August), 255-270.

Keane, M. (1994), "A Computationally Practical Simulation Estimator for Panel Data,"

Econometrica 62, 95-116.

Maddala, G.S. (1983), Limited Dependent and Qualitative Variables in Econometrics. New

York: Cambridge University Press.

Manrai, Ajay K. and Rick L. Andrews (1998), "Two-stage Choice Models for Scanner Panel

Data: An Assessment of Process and Assumptions," European Journal of Operational

Research, 111, 193-215.

McCulloch, Robert and Peter E. Rossi (1994) "An Exact Likelihood Analysis of the Multinomial

Probit Model," Journal of Econometrics, 64, 207-240.

33

Mehta, Nitin, Surendra Rajiv, and Kannan Srinivasan (forthcoming), "Price Uncertainty and

Consumer Search: A Structural Model of Consideration Set Formation," Marketing

Science.

Newton, Michael A. and Adrian E. Raftery (1994) "Approximating Bayesian Inference with the

Weighted Likelihood Bootstrap," Journal of the Royal Statistical Society (B), 56, 3-48.

Payne, John W. and Ragsdale E. K. Easton (1978), "Verbal Protocols and Direct Observation of

Supermarket Shopping Behavior: Some Findings and a Discussion of Methods," in

Advances in Consumer Research, Vol. 5, ed. H. Keith Hunt, Chicago: Association for

Consumer Research, 571-577.

Roberts, John H. and James M. Lattin (1991) "Development and Testing of a Model of

Consideration Set Compostion," Journal of Marketing Research, 28 (November), 429440.

Shocker, Allan D., Moshe Ben-Akiva, Bruno Boccara, and Prakash Nedungandi (1991),

"Consideration Set Influences on Consumer Decision Making and Choice: Issues,

Models, and Suggestions," Marketing Letters, 2 (3), 181-197.

Simmons Study of Media and Markets 1997: Choices II Version 5.28.98. Tampa, FL: Simmons

Market Research Bureau.

Tanner, Martin A. (1993), Tools for Statistical Inference. New York: Springer-Verlag.

Tanner, M. A. and W. Wong (1987) "The Calculation of Posterior Distributions by Data

Augmentation," Journal of the American Statistical Association, 82, 528-549.

Yang, Sha and Greg M. Allenby (2000) "A Model for Observation, Structural, and Household

Heterogeneity in Panel Data," Marketing Letters, 11, 137-149.

34

Table 1

Camera Attributes and Levels

Attribute/Level

A Basic body style and standard

features

A1 Low

A2 Medium

A3 High

B Mid-roll Change1

B1 None

B2 Manual

B3 Automatic

C Annotation1

C1 None

C2 Pre-Set List

C3 Customized List

C4 Custom Input method 1

C5 Custom Input method 2

C6 Custom Input method 3

D Camera Operation Feedback1

D1 No

D2 Yes

E Zoom

E1 None

E2 2X

E3 4X

F Viewfinder

F1 Regular

F2 Large

G Camera Settings Feedback

G1 None

G2 LCD

G3 Viewfinder

G4 Both LCD & Viewfinder

Available in

"Low" Body

Style

Available in

"Medium"

Body Style

Available in

"High" Body

Style

×

×

×

×

×

×

×

×

×

×

×

×

×

×

×

×

×

×

×

×

×

×

×

×

×

×

×

×

×

×

×

×

×

×

×

×

×

×

×

1

×

×

×

×

×

×

Unique to APS camera at time of the study; all other features available on both APS and 35mm

cameras at the time of the study.

35

Figure 1

Example for Specifying Cut-offs for Conjunctive & Disjunctive Model

Discrete Attributes

Attribute

Zoom Lens

Levels

Recoded

Values (x)

If γ = -0.5:

Probability

of Each

Cut-off

None

0

γ(1)= -0.5

θ(1)

2x

1

γ(2)= 0.5

θ(2)

4x

2

γ(3)= 1.5

θ(3)

γ(4)= 2.5

θ(4)

Scenario #1:

Screening

rule:

Possible

Cut-offs

Scenario #2:

Screening

rule:

I(x > γ) = 1

I(0 > -0.5) = 1

I(1 > -0.5) = 1

I(2 > -0.5) = 1

If γ = 0.5:

All attribute levels

are acceptable

I(x > γ) = 1

I(0 > 0.5) = 0

I(1 > 0.5) = 1

I(2 > 0.5) = 1

Camera must have

2x zoom lens to be considered

For the conjunctive model, if γ = -0.5 then Zoom Lens is not

being used to screen alternatives.

Scenario #3:

Screening

rule:

If γ = 1.5:

Scenario #4:

Screening

rule:

I(x > γ) = 1

I(0 > 1.5) = 0

I(1 > 1.5) = 0

I(2 > 1.5) = 1

If γ = 2.5:

Camera must have 4x

zoom lens to be considered

I(x > γ) = 1

I(0 > 2.5) = 0

I(1 > 2.5) = 0

I(2 > 2.5) = 0

None of the attribute levels

are acceptable

For the disjunctive model, if γ = 2.5 then Zoom Lens is not

being used to screen alternatives.

37

Table 2

Model Results

In Sample

Model

HB Probit

Compensatory

Conjunctive

Disjunctive

Structural Heterogeneity

1

LMD

-3468.8

-3449.6

-2990.8

-3586.2

-3012.9

Hit 2

Probability

0.525

0.527

0.583

0.528

0.583

Predictive

Hit

Probability

0.391

0.393

0.418

0.39

0.417

Hit3

Frequency

266

267

276

267

277

1

Log marginal density of the data calculated using the importance sampling method of

Newton and Raftery (1991, p. 21).

2

Posterior mean of predicted probability for selected alternative. Calculated from a

sample of 300 posterior point estimates.

3

Posterior mean of correct predictions for two observations for each respondent; a total of

604 hold-out choice sets. Calculated from a sample of 300 posterior point estimates.

38

Table 3

Part-worth Estimates for the Probit Model

Attribute

Level

Body Style

Low Body

Medium Body

High Body

Mid-Roll Change Manual Change

Automatic Change

Pre-set List

Annotation

Customized List

Input Method 1

Input Method 2

Input Method 3

Beta

Posterior

Mean

1

2

3

4

5

6

7

8

9

10

0.325

2.843

2.224

0.059

0.178

0.362

0.678

-1.036

0.767

-0.985

Attribute

Level

Camera Operation

Feedback

Operation Feedback

2x Zoom

Zoom

4x Zoom

Large Viewfinder

Viewfinder

Settings Feedback LCD

Viewfinder

LCD & Viewfinder

ln(price)

Price

Beta

Posterior

Mean

11

12

13

14

15

16

17

18

0.357

1.122

1.477

-0.158

0.759

0.757

0.902

-0.71

Covariance Matrix - Σβ

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

1

19.605

17.952

15.303

-0.548

-2.077

-0.575

-0.584

-8.252

-1.921

-5.553

-0.808

-0.207

-0.811

-1.394

-0.434

-0.289

-0.472

-2.789

2

20.258

17.674

-0.906

-2.232

-0.309

-0.366

-7.975

-1.52

-5.628

-0.67

0.651

0.54

-1.054

0.06

0.035

-0.042

-3.459

3

17.845

-1.218

-2.153

-0.333

-0.356

-6.735

-1.285

-4.753

-0.665

0.895

1.08

-0.859

0.324

0.161

0.12

-3.278

4

1.803

0.507

0.097

0.147

0.371

0.139

0.432

0.061

-0.009

-0.225

-0.011

-0.075

0.011

0.019

0.144

5

1.4

0.171

0.231

1.134

0.395

0.854

0.173

0.022

-0.08

0.149

0.04

0.044

0.057

0.287

6

0.629

0.255

0.3

0.237

0.238

0.019

0.106

0.225

0.051

0.106

0.083

0.109

-0.045

7

0.685

0.387

0.26

0.224

0.033

0.121

0.173

-0.021

0.12

0.067

0.118

-0.054

8

9

10

11

12

13

14

15

16

17

18

5.392

0.974

2.734

0.494

0.134

0.418

0.592

0.167

0.129

0.255

1.22

1.096

0.726

0.225

0.167

0.251

0.231

0.181

0.179

0.173

0.12

3.315

0.347

-0.056

-0.021

0.487

0.04

0.083

0.084

0.923

1.055

0.115

-0.008

0.064

0.092

0.123

0.106

0.046

1.42

1.07

0.122

0.343

0.298

0.321

-0.412

2.21

0.13

0.454

0.336

0.427

-0.52

0.947

0.141

0.119

0.074

0.142

0.966

0.525

0.526

-0.216

0.976

0.538

-0.206

0.937

-0.192

1.079

Estimates in bold have more than 95% of the posterior mass away from 0.

39

Table 4

Part-worth Estimates for the Conjunctive Model

Attribute

Level

Body Style

Low Body

Medium Body

High Body

Mid-Roll Change Manual Change

Automatic Change

Pre-set List

Annotation

Customized List

Input Method 1

Input Method 2

Input Method 3

Beta

Posterior

Mean

1

2

3

4

5

6

7

8

9

10

-0.34

0.577

0.024

0.257

0.169

0.289

0.604

-0.369

0.655

-0.619

Attribute

Level

Camera Operation

Feedback

Operation Feedback

2x Zoom

Zoom

4x Zoom

Large Viewfinder

Viewfinder

Settings Feedback LCD

Viewfinder

LCD & Viewfinder

ln(price)

Price

Beta

Posterior

Mean

11

12

13

14

15

16

17

18

0.413

0.841

1.231

-0.139

0.374

0.373

0.52

0.056

Covariance Matrix - Σβ

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

1

16.183

15.035

14.839

-1.35

-1.903

-0.637

-0.667

-6.744

-2.246

-5.42

-0.641

0.443

0.106

-1.292

-0.648

-0.65

-0.662

-2.338

2

15.918

15.176

-1.218

-1.821

-0.527

-0.531

-6.458

-2.069

-5.306

-0.479

0.499

0.427

-1.256

-0.611

-0.745

-0.717

-2.463

3

16.419

-1.398

-1.854

-0.591

-0.604

-6.301

-2.022

-5.148

-0.589

0.491

0.387

-1.289

-0.494

-0.736

-0.704

-2.464

4

1.88

0.625

0.117

0.198

0.653

0.319

0.662

0.1

0.035

-0.101

0.057

0.005

0.125

0.15

0.137

5

1.523

0.148

0.194

0.956

0.481

0.876

0.169

-0.051

-0.179

0.154

0.07

0.104

0.112

0.233

6

0.632

0.241

0.349

0.229

0.318

0.007

-0.005

0.112

0.065

0.045

0.043

0.088

0.048

7

0.742

0.399

0.26

0.305

0.009

0.006

0.08

-0.047

0.079

0.059

0.113

0.042

8

4.828

1.191

2.752

0.451

-0.119

0.084

0.616

0.28

0.341

0.381

0.957

9

1.269

1.073

0.205

-0.048

0.014

0.281

0.125

0.143

0.141

0.258

Estimates in bold have more than 95% of the posterior mass away from 0.

40

10

11

12

13

14

15

16

17

18

3.892

0.287

-0.161

-0.234

0.695

0.186

0.302

0.285

0.813

1.122

0.126

-0.015

0.022

0.087

0.065

0.077

0.057

1.056

0.557

-0.049

0.097

0.105

0.122

-0.184

1.653

-0.112

0.139

0.056

0.161

-0.25

1.213

0.102

0.065

0.014

0.209

0.864

0.365

0.38

0.007

0.903

0.422

0.011

0.878

0.026

0.774

Table 5

Threshold Estimates for the Conjunctive Model

Posterior Means

Attri bute

Body Style

Levels

Recoded

Values

Low

0

Medium

1

High

2

Possible

Cut-offs

Probability of

Each Cut-off

γ 1(1)= -0.5

θ1 =.598

γ 1(2)= 0.5

θ2 =.324

γ 1(3)= 1.5

θ3 =.059

γ 1(4)= 2.5

θ4 =.015

γ 2(1)= -0.5

Mid Roll -

None

0

Change

Manual

1

Auto

2

Annotation1

Annotation2

Annotation3

None

0

Pre-set

1

Custom

2

None

0

Input #2

1

None

0

Input #3

1

Attri bute

Annotation4

θ5 =.926

γ 2(2)= 0.5

θ6 =.027

γ 2(3)= 1.5

θ7 =.028

γ 2(4)= 2.5

θ8 =.020

γ 3(1)= -0.5

θ9 =.940

γ 3(2)= 0.5

θ10 =.022

γ 3(3)= 1.5

θ11 =.018

γ 3(4)= 2.5

θ12 =.020

γ 4(1)= -0.5

θ13 =.960

γ 4(2)= 0.5

θ14 =.020

γ 4(3)= 1.5

θ15 =.020

γ 5(1)= -0.5

θ16 =.961

γ 5(2)= 0.5

θ17 =.019

γ 5(3)= 1.5

θ18 =.020

Levels

Recoded

Values

None

0

Input #4

1

Operation

None

0

Feedback

Feedback

1

Zoom Lens

Viewfinder

None

0

2x

1

4x

2

Regular

0

Large

1

41

Probability of

Each Cut-off

γ 6(1)= -0.5

θ19 =.961

γ 6(2)= 0.5

θ20 =.019

γ 6(3)= 1.5

θ21 =.020

γ 7(1)= -0.5

θ22 =.947

γ 7(2)= 0.5

θ23 =.034

γ 7(3)= 1.5

θ24 =.020

γ 8(1)= -0.5

θ25 =.808

γ 8(2)= 0.5

θ26 =.140

γ 8(3)= 1.5

θ27 =.032

γ 8(4)= 2.5

θ28 =.019

γ 9(1)= -0.5

θ29 =.961

γ 9(2)= 0.5

θ30 =.019

γ 9(3)= 1.5

θ31 =.019

γ 10(1)= -0.5

θ32 =.783

γ 10(2)= 0.5

θ33 =.157

Settings

None

0

Feedback

LCD

1

Viewfinder

2

γ 10(3)= 1.5

θ34 =.021

Both

3

γ 10(4)= 2.5

θ35 =.020

γ 10(4)= 3.5

θ36 =.019

Continuous Attribute:

-ln(price)

All estimates have more than 95% of the posterior mass away from 0.

Possible

Cut-offs

γ11 = -6.269

Figure 2

Distribution of Price Threshold

Conjunctive Model

70%

59%

60%

50%

41%

40%

30%

30%

20%

20%

10%

10%

0%

<$276

<$356

<$425

42

<$499

>$499

Figure 3

Proportion of Respondents

Screening on Each Attribute

Conjunctive Model

45%

41%

40%

40%

35%

30%

25%

22%

19%

20%

15%

10%

8%

6%

4%

5%

4%

5%

4%

4%

*Indicates attributes only available on APS cameras at the time of the study.

43

Pr

ic

e

Se

tti

ng

s

fin

de

r

Vi

ew

om

O

p.

Fe

Zo

ck

*

ed

ba

n4

*

An

no

ta

tio

n3

*

An

no

ta

tio

n2

*

An

no

ta

tio

n1

*

ta

tio

An

no

M

id

-R

ol

lC

Bo

dy

ha

n

St

yl

e

ge

*

0%