AI Homework: Information Theory & Decision Trees


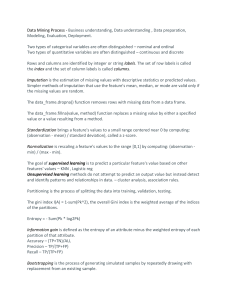

CPS 4801 Artificial Intelligence, Spring 2012
Homework 4
Due: Tuesday, May 1

1. Consider the following data set, consisting of three binary input attributes (A1, A2, and A3) and one binary output y:

| Example  | x1 | x2 | x3 | x4 | x5 |
|----------|----|----|----|----|----|
| A1       | T  | T  | F  | T  | T  |
| A2       | F  | F  | T  | T  | T  |
| A3       | F  | T  | F  | T  | F  |
| Output y | F  | F  | F  | T  | T  |

Using the information theory learned in class, answer the following questions.

(1) What is the entropy of these five examples before applying any attribute?

(2) What is the remaining entropy of the five examples after applying attribute A1? What is the information gain?

(3) What is the remaining entropy of the five examples after applying attribute A2? What is the information gain?

(4) What is the remaining entropy of the five examples after applying attribute A3? What is the information gain?

(5) Which attribute would you choose to best split the examples?

2. (Optional) Use the Decision Tree Learning algorithm to draw a decision tree for these data. Show the computations made to determine the attribute to split at each node.
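For checking hand computations in Problems 1 and 2, here is a minimal Python sketch. It assumes the standard textbook definitions. Entropy is H(S) = sum over labels of p * log2(1/p). The remainder is remainder(A) = sum over values v of (|S_v|/|S|) * H(S_v). Gain is gain(A) = H(S) - remainder(A). The names `EXAMPLES`, `entropy`, `remainder`, `gain`, `plurality`, and `learn_tree` are hypothetical helpers, not part of the assignment. `learn_tree` is a simplified ID3-style recursion that breaks ties by taking the first attribute in list order. The version covered in class may differ in such details.

```python
from collections import Counter
from math import log2

# Hypothetical encoding of the Problem 1 data set: T/F become True/False.
# Each example is (attribute values, output y).
EXAMPLES = [
    ({"A1": True, "A2": False, "A3": False}, False),  # x1
    ({"A1": True, "A2": False, "A3": True}, False),   # x2
    ({"A1": False, "A2": True, "A3": False}, False),  # x3
    ({"A1": True, "A2": True, "A3": True}, True),     # x4
    ({"A1": True, "A2": True, "A3": False}, True),    # x5
]


def entropy(examples):
    """Entropy of the output labels: sum over labels of p * log2(1/p)."""
    n = len(examples)
    counts = Counter(label for _, label in examples)
    return sum((c / n) * log2(n / c) for c in counts.values())


def remainder(examples, attr):
    """Expected entropy left after splitting on attr, weighted by subset size."""
    n = len(examples)
    total = 0.0
    for value in {x[attr] for x, _ in examples}:
        subset = [(x, y) for x, y in examples if x[attr] == value]
        total += len(subset) / n * entropy(subset)
    return total


def gain(examples, attr):
    """Information gain: entropy before the split minus the remainder."""
    return entropy(examples) - remainder(examples, attr)


def plurality(examples):
    """Most common output label (ties broken by first occurrence)."""
    return Counter(y for _, y in examples).most_common(1)[0][0]


def learn_tree(examples, attrs, parent_examples=()):
    """ID3-style sketch: returns a leaf label or (attr, {value: subtree})."""
    if not examples:
        return plurality(parent_examples)
    labels = {y for _, y in examples}
    if len(labels) == 1:
        return labels.pop()
    if not attrs:
        return plurality(examples)
    # Split on the attribute with the highest information gain.
    best = max(attrs, key=lambda a: gain(examples, a))
    rest = [a for a in attrs if a != best]
    return (best, {
        value: learn_tree([(x, y) for x, y in examples if x[best] == value],
                          rest, examples)
        for value in (True, False)
    })


if __name__ == "__main__":
    print(f"H(y) = {entropy(EXAMPLES):.3f}")
    for attr in ("A1", "A2", "A3"):
        print(f"{attr}: remainder = {remainder(EXAMPLES, attr):.3f}, "
              f"gain = {gain(EXAMPLES, attr):.3f}")
    print(learn_tree(EXAMPLES, ["A1", "A2", "A3"]))
```

Running the script prints the initial entropy, then the remainder and gain for each attribute. It finishes with the learned tree as nested `(attribute, {value: subtree})` tuples with boolean leaves. Each internal node is chosen by the same gain comparison asked for in Problem 2.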