Presentation Slides Only

Search with a Little Help from Your Friends

Making Web Search more Collaborative

Barry Smyth

CLARITY: Centre for Sensor Web Technologies

University College Dublin

Tuesday 3 May 2011

The Web’s Killer App?

lycos.com (circa 1996)

Excite.com (circa 1996)

webcrawler.com (circa 1997)

“the verb” (Beta, circa 1998)

Google.com (circa 1999)

ask.com (circa 2000)

metacrawler.com (2000)

directhit.com (2000)

[Chart: Index Size, x (10^x), by Year (1994 to 2010); series labelled 1.0 (Terms) and 2.0 (Terms+Links).]

Approx. 1 billion queries per day!

Web Search 101

[Diagram: Query (Q), Search Engine (S), Index (I), Result List (R).]

Overview

The State of Web Search - Key Challenges

Potential Solutions - Context in Web Search

Towards Social Web Search - HeyStaks

Challenges

Vague Queries

The Vocabulary Problem

One-Size-Fits-All

Content Farming & SEO

50% Search Failure Rate

25% of searches click to back button!

Average query size (2-3 terms) is insufficient to guarantee effective search engine retrieval.

[Chart: labels largely unrecoverable from the extraction; one legible label reads "Successful Search".]

* iProspect (2006), Jansen & Spink (2007), Morris et al (2008), Coyle et al (2007,2008)

The Vocabulary Gap

One Size Does Not Fit All

Content Farms, SEO, Gaming

Focused SEO to promote commissioned content.

(!1m items / month)

The DemandMedia Model

[Diagram labels: SE/ISP Logs; SE Ad Data; SE Logs; Similar Past Ads; Search Terms; Ad Rates; Content Titles; Projected Ad Revenue; $x PPC; "easter egg"; “how to paint an easter egg”; Life Time Value (LTV); Freelance Assignments; $15/article, $20/video.]

Black Hat SEO

JC Penney Content Farming

New York Times, Feb 2011

Large-Scale Link Farming

2000+ sites linking to JCP for terms like “black dresses”, “casual dresses”, etc.

Significant Benefits to JCP

Millions of inbound visitors during the holiday season!

The Google Response

Major Algorithmic Change

Reducing the ranking of low-quality sites, impacting 12% of queries.

Spam-blocking Extension

Google Chrome extension to allow users to block low-quality sites from result lists.

Major Impact on Many Sites

... including some surprises (SlideShare, Technorati).

Vague Queries

The Vocabulary Problem

One-Size-Fits-All

Content Farming & SEO

Web Search is changing...

Improving search by better understanding user needs and search context ...

Context in Search

User Context

Preferences, usage history, profiles

Document Context

Meta-data, content features

Task Context

Current activity, location etc.

Social Context

Leveraging the social graph.

[Diagram labels: C, Q, I, S, R.]

User Context

Personalizing Web Search

Adapting search responses according to the needs/interests of the searcher.

Modeling User Interests

ODP Categories, query histories, result selections, etc.

Adaptation Strategies

Re-ranking, query modification, etc.

See also Chirita et al (SIGIR 2005), Shen et al (CIKM 2005), Teevan et al (TOCHI, 2010)

PSearch (Teevan et al, SIGIR 2005)

Client-Side Profiling

Explicit, Content, Behaviour

Personalized Ranking

User Context

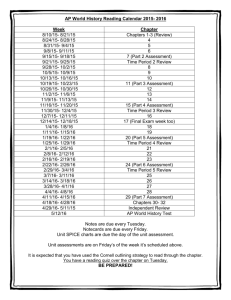

Potential for Personalization

Teevan et al (SIGIR 2008)

How well can a single result list satisfy a group of users?

Not every query benefits from personalization.

Predicting the PfP of search queries (click-based measures)

Potential for personalization (PfP) curves [from Teevan et al (2008)]

Google AdSense

Document Context

Task Context

Activity/Task Context

E.g. writing a talk, planning a trip, shopping, etc.

Location-Based Search

Google on the iPhone ...

Embedded Web Search

Watson (Budzik & Hammond, IUI 2000), Remembrance Agent (Rhodes, IEEE Trans. Comp. 2003)

IntelliZap

Context → Query Augmentation

Finkelstein et al., WWW 2001
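The "Context → Query Augmentation" idea can be illustrated with a minimal sketch. It is not IntelliZap's actual method: it simply assumes that the most frequent non-stopword terms in the text surrounding the query are useful additions. The function name `augment_query`, the stopword list and the parameter `k` are all hypothetical.

```python
import re
from collections import Counter

# Tiny illustrative stopword list (an assumption, not from the talk).
STOPWORDS = {"the", "a", "an", "of", "in", "to", "and", "is", "for", "on", "at", "was"}

def augment_query(query: str, context: str, k: int = 2) -> str:
    """Append the k most frequent non-stopword context terms to the query."""
    query_terms = query.lower().split()
    tokens = re.findall(r"[a-z]+", context.lower())
    counts = Counter(
        t for t in tokens if t not in STOPWORDS and t not in query_terms
    )
    # Sort by descending count, then alphabetically, so the result is deterministic.
    ordered = sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))
    extra = [term for term, _ in ordered[:k]]
    return " ".join(query_terms + extra)

augmented = augment_query(
    "jaguar",
    "The jaguar is a big cat. The cat hunts in the forest; the forest is home.",
)
```

Here the ambiguous query "jaguar" picks up "cat" and "forest" from its surrounding text, which is the kind of disambiguation that context is meant to supply.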

[Diagram labels: Task, User, Doc, Social.]

Web Search as a Solitary Activity

Recovery vs Discovery

                Repeat Click    New Click
Repeat Query        29%             4%
New Query           10%            57%

Teevan et al, 2007

Recovery: 43%

Discovery: 57%

[proportion not legible] of searches are for something the searcher has already found during a previous search session.

[proportion not legible] of searches are for something that a searcher's friends or colleagues have recently found.

Search should be more personal & collaborative!

* Morris et al (2008), Teevan et al (2007), Smyth et al (2004,2006,2008)

Web Search as a Social Activity

[Slide: Search Engines (Searching) compared with Social Networks (Sharing). Legible labels: Google, Bing, Yahoo, ...; Facebook, MySpace, Twitter, ...; users; Page Index; Social Graph; Billion Pages; Trillion Relationships; Queries/day; shares/day; Relevance; Reputation; Queries; Terms, Links, PageRank; Communities; Social Rank? The user counts and volumes are not legible in the extraction.]

[Diagram: axis labels Searching, Sharing, Queries, Communities; regions: Social News, Searching the RTW, Semantic Search, Social Indexing/Filtering, Social Bookmarking, Personalization, Social Q&A, Meta Search, People Search.]

Flavours of Social Search...

Search & the Real-Time Web

Live Twitter Feed

Exploiting the Social Graph

www.vark.com – Social Q&A

A Conversational Thread on Aardvark

Key Features

Social Indexing

People vs Documents: User-Topic / User-User Relationships

Question Classification

Scored topic list (classification-based approach)

Query Routing

An aspect model routes questions to potential answerers.

Answer Ranking

Ranked candidate answerers based on topic, expertise, availability.

Damon Horowitz, Sepandar D. Kamvar: The anatomy of a large-scale social search engine. WWW 2010: 431-440
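As a rough illustration of ranking candidate answerers by topic, expertise and availability, the sketch below sorts candidates so that available people come first and, within each group, higher topic-match times expertise wins. This is an assumed scoring rule for illustration only, not the model described by Horowitz and Kamvar; the `Candidate` fields and `rank_answerers` are hypothetical names.

```python
from dataclasses import dataclass

@dataclass
class Candidate:
    name: str
    topic_match: float  # fit between the candidate's topics and the question, in [0, 1]
    expertise: float    # estimated expertise on those topics, in [0, 1]
    available: bool     # whether the candidate can be contacted right now

def rank_answerers(candidates):
    """Available candidates first; within each group, by topic_match * expertise."""
    return sorted(
        candidates,
        key=lambda c: (c.available, c.topic_match * c.expertise),
        reverse=True,
    )

people = [
    Candidate("ann", 0.9, 0.5, True),
    Candidate("bob", 0.8, 0.9, False),
    Candidate("cat", 0.6, 0.9, True),
]
ranked = [c.name for c in rank_answerers(people)]
```

In this example "bob" has the best combined score but is unavailable, so the two available candidates are asked first.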

Searching Social Content

Harnessing the Social Graph

...

Smyth et al, 2006

Teevan et al, 2007

Morris et al, 2008

Collaboration in Search

90% of people have engaged in some form of collaboration during Web search.

87% of people have exhibited “back-seat searching.”

86% of people go on to share results with others.

25%-40% of the time we are re-searching for things we have previously found.

66% of the time we are looking for something that a friend or colleague has recently found.

Repetition & Regularity in Communities ...

Smyth et al, UMUAI 2004

Collaborative IR

              Synch                        Asynch
Remote        SearchTogether (UIST ’07)    HeyStaks (UMAP ’09)
Co-Located    CoSearch (CHI ’08)           ?

SearchTogether

Morris et al (2008)

HeyStaks

A Case-Study in Social Search

Motivating HeyStaks

Web Search. Shared!

Harness the collaborative nature of Web Search by providing integrated support for the sharing of search experiences.

User Control

Support the searcher by providing fine-grained control over collaboration features and facilities.

Integrate with Mainstream Search Engines

Users want to search as normal, using their favourite search engines, while, at the same time, benefiting from collaboration.

HeyStaks: A Search Utility

Create Staks

Users can easily create Search Staks (public/private) as a way to capture search activities.

Share Knowledge

Share Staks with friends and others to grow community/task-based search expertise.

Search & Promote

As users search within a Stak(s), relevant results are promoted and enhanced.

[Diagram labels: Sharing, Relevance.]

[Diagram: Staks 1 to 7 placed on two axes, Task (Single Task, Multi-Task) and Social (Individual, Groups).]

HeyStaks Architecture

Search Staks

[Diagram: a stak stores page URLs (P1, P2, ..., Pn), each with terms (query, tag, snippet) and votes (+/-); the example counts are omitted.]

$\mathrm{score}(q_T, P_i, S_j) = \mathrm{Rel}(q_T, P_i, S_j) \times \mathrm{TFIDF}(q_T, P_i, S_j)$
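The scoring rule above multiplies a relevance component by a TF-IDF component. The slides do not define either component, so the sketch below fills them in with assumptions: Rel is taken to be the share of the stak's past selections for the query terms that went to the page, and TFIDF is a sum of term frequency times a smoothed inverse document frequency computed within the stak. All function and variable names are hypothetical.

```python
import math
from collections import Counter

def tfidf(query_terms, page, stak_terms):
    """Sum of tf * idf over the query terms, computed within one stak (assumed form)."""
    n_pages = len(stak_terms)
    total = 0.0
    for term in query_terms:
        tf = stak_terms[page][term]
        df = sum(1 for counts in stak_terms.values() if counts[term] > 0)
        if tf and df:
            total += tf * math.log(1 + n_pages / df)
    return total

def rel(query_terms, page, selections):
    """Share of the stak's selections for these terms that went to this page (assumed form)."""
    page_hits = sum(selections[page][t] for t in query_terms)
    all_hits = sum(sel[t] for sel in selections.values() for t in query_terms)
    return page_hits / all_hits if all_hits else 0.0

def score(query_terms, page, stak_terms, selections):
    """score = Rel * TFIDF, as on the slide."""
    return rel(query_terms, page, selections) * tfidf(query_terms, page, stak_terms)

# Toy stak: terms indexed per page, and past selections per page and term.
stak_terms = {
    "p1": Counter({"jaguar": 3, "car": 1}),
    "p2": Counter({"jaguar": 1, "cat": 2}),
}
selections = {
    "p1": Counter({"jaguar": 3}),
    "p2": Counter({"jaguar": 1}),
}
s1 = score(["jaguar"], "p1", stak_terms, selections)
s2 = score(["jaguar"], "p2", stak_terms, selections)
```

With these toy counts, p1 outscores p2 for the query "jaguar" because it both attracted more past selections and is indexed more strongly under that term.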

The Social Life of Search

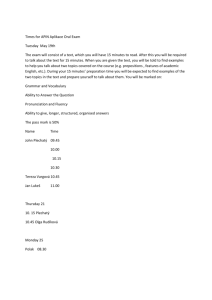

Initial Evaluation

HeyStaks Beta Trial

Focus on 95 early, active HeyStaks-Beta users who registered with HeyStaks during the period October 2008 - January 2009.

Stak Creation/Sharing

Do users take the time to create and share search staks (and search experiences)?

Collaboration Effects

Do searchers benefit from the effects of search collaboration in general, and stak promotions in particular?

Stak Creation & Sharing

[Charts: (a) Avg Created/Joined/Buddies Per User; (b) Sociable vs Solitary Users. Legend entries: Buddies, Joined, Created; Sociable, Solitary. Values are not legible in the extraction.]

Producers & Consumers

[Diagram: producer P and consumer C.]

Basic Unit of Collaboration

Searcher C selects a promotion previously selected by P.

Search Collaboration

[Charts: (a) Producers & Consumers; (b) Number of Collaborators. Legend entries: Consumers, Producers. Values are not legible in the extraction.]

Search Leaders & Followers

Promotion Sources

[Chart: Total Peer vs Self Promotions; axes: Peer Promotions and Self Promotions, each from 0 to 200; legend entries: Peer, Self.]

Users create & share staks.

Collaboration commonplace.

Users benefit from peers.

Reputation

Not all searchers are created equal! Staks are likely composed of a mixture of novice and expert searchers.

Can we identify the best searchers? Overall or at stak-level?

Can we use this reputation information to further influence recommendation?

Modeling Reputation

[Diagram labels: rep, P, C, pi.]

C confers reputation on P

Searcher C selects a promotion previously selected by P.
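The "C confers reputation on P" event can be sketched as a simple accumulation over a selection log. The slide does not say how much reputation one selection is worth or how it is divided when several producers selected the same result earlier; the sketch assumes one unit per selection, split equally among earlier selectors. The function name and the event format are hypothetical.

```python
from collections import defaultdict

def update_reputation(events):
    """events: iterable of (user, page) selections in time order.

    Returns a dict mapping each producer to the reputation conferred on them.
    """
    producers = defaultdict(list)   # page -> users who selected it earlier
    reputation = defaultdict(float)
    for user, page in events:
        earlier = [p for p in producers[page] if p != user]
        # Assumption: each selection confers one unit, shared equally
        # among the earlier selectors of the same page.
        for p in earlier:
            reputation[p] += 1.0 / len(earlier)
        if user not in producers[page]:
            producers[page].append(user)
    return dict(reputation)

rep = update_reputation([("P", "page1"), ("C", "page1"), ("D", "page1")])
```

In this log, C's selection gives P one full unit; D's later selection is split between P and C, so P ends with 1.5 and C with 0.5.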

User Reputation

McNally, O’Mahony, Smyth (IUI, 2010)

Reputation Model

Producer Reputation

Result Reputation

Reputation Ranking

Initial Evaluation

HeyStaks Reputation Trial

64 undergraduate students participated in a general-knowledge quiz using HeyStaks to guide their searches.

Multiple Stak Sizes

Users were segregated into different stak sizes (1, 5, 9, 19, 25) to analyse the relationship between stak size and performance.

Ground-Truth Based Performance Analysis

Fixed Q&A facilitated a definitive analysis of the relevance of organic and promoted results.

Query Coverage

Percentage of queries receiving promotions.

Organic vs Promoted

Relative relevance of organic and promoted results.

Reputation Analysis

Reputation x Time

Final User Reputation

Relative Benefit

www.heystaks.com

Conclusions

The conservative world of Web Search is changing!

Context in Web search

Adaptation.

Collaboration in Web Search

Harnessing the Social Graph.

From relevance to reputation

Improved click-thru rates.

Lessons Learned

Mainstream Web Search Integration

There is little value in developing competing Web search offerings; users want to search as normal using their favourite search engine (Google, Yahoo, Bing, ...)

Personalization vs User Experience

An improved user experience can translate into much greater user take-up than incremental improvements in personalization.