DSW Report.indb

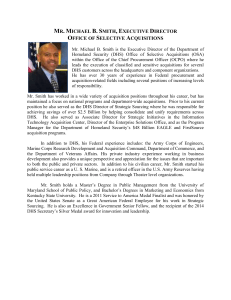

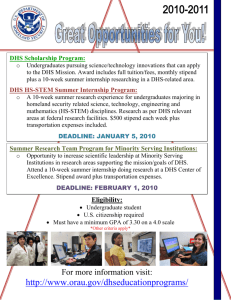

advertisement