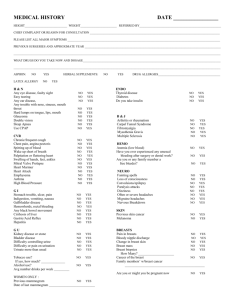

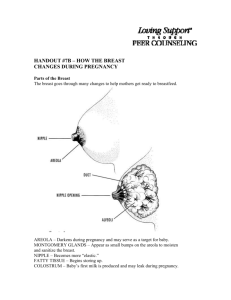

ASSESSMENT OF THE QUALITY OF CANCER CARE
