Rong Chen

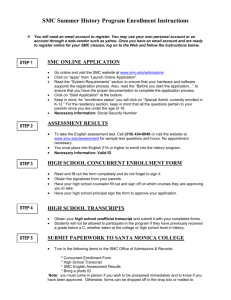

Discussion: Population SMC

SMC or MCMC – is it still a question?

Rong Chen
Department of Statistics
Rutgers University

SMC and MCMC

Misconceptions:
• SMC is used only for state-space models, HMMs, and other types of dynamic systems
  – A sequence of target distributions with increasing dimension
• MCMC is (the only solution) for fixed-dimensional problems
  – A single distribution

We claim:
• SMC is a valid alternative to MCMC
• SMC can be a (possibly more) powerful tool for solving fixed-dimensional problems
• MCMC is a special case of SMC

Change

The dynamic system framework for SMC (Liu and Chen 1998)
• A sequence of distributions $\pi_1(\mathbf{x}_1), \ldots, \pi_t(\mathbf{x}_t), \ldots$
• Increasing dimension: $\mathbf{x}_t = (\mathbf{x}_{t-1}, x_t)$
• Sequential importance sampling:
  – generate $x_t^{(j)}$ conditioned on $\mathbf{x}_{t-1}^{(j)}$
  – append: $\mathbf{x}_t^{(j)} = (\mathbf{x}_{t-1}^{(j)}, x_t^{(j)})$
  – update weight

What do we do with one single target distribution $\pi(\mathbf{x})$?

Approach (I): The Growth Principle

Decompose a complex problem into a sequence of simpler problems.
• Target distribution $\pi(\mathbf{x})$ where $\mathbf{x} = (x_1, \ldots, x_N)$
• Let $\mathbf{x}_t = (x_1, \ldots, x_t) = (\mathbf{x}_{t-1}, x_t)$
• Define a sequence of intermediate distributions $\pi_t(\mathbf{x}_t)$.
• Moving from $\pi_{t-1}(\mathbf{x}_{t-1})$ to $\pi_t(\mathbf{x}_t)$ is simple.
• Moving from $\pi_{t-1}(\mathbf{x}_{t-1})$ to $\pi_t(\mathbf{x}_t)$ is smooth:
  $\int \pi_t(\mathbf{x}_{t-1}, x_t)\, dx_t \approx \pi_{t-1}(\mathbf{x}_{t-1})$
• $\pi_N(\mathbf{x}_N) = \pi(\mathbf{x})$

Approach (II): Fixed dimension with augmentation

• Target distribution $\pi(x)$, for $x \in \Omega$
• Let $\mathbf{x}_t = (x_1, \ldots, x_t) \in \Omega^t$ where $x_i \in \Omega$.
• Construct a sequence of intermediate (joint) distributions $\pi_t(x_1, \ldots, x_t)$ on $\Omega^t$, often through a transition rule $\pi_{t-1} \to \pi_t$.
• The low-dimensional marginal distributions of the giant joint distribution $\pi_n(x_1, \ldots, x_n)$ are in some way related to the target distribution $\pi(x)$.
  – e.g. $\pi_n(x_1) = \pi(x)$ or $\pi_n(x_n) = \pi(x)$

Arnaud’s approach:
• start with a relatively trivial $\pi_1(x_1)$, where $x_1 \in \Omega$
• augment: $\pi_t(x_1, \ldots, x_t)$, $t = 1, \ldots, n$
• eventually, at $t = n$, the marginal distribution of the last component, $\pi_n(x_n)$, is the target distribution $\pi(x)$
• inference uses the samples of the last component, $x_n^{(j)}$

Chris’ approach:
• start with the true target, $\pi_1(x_1) = \pi(x)$
• augment: $\pi_t(x_1, \ldots, x_t)$, so that the marginal distribution of the last component, $\pi_t(x_t)$, is the target distribution $\pi(x)$
• inference uses samples of all components

MCMC as a special case of SMC:

MCMC:
• Target distribution $\pi(x)$ where $x \in \Omega$.
• A Markov chain $x_1, \ldots, x_t, \ldots$, with a transition kernel $k$ and each $x_t \in \Omega$.
• $x_1 \sim \pi_1$
• $(x_1, \ldots, x_t) \sim \pi_t(x_1, \ldots, x_t)$ where $\pi_t(\mathbf{x}_t) = \pi_{t-1}(\mathbf{x}_{t-1})\, k(x_t \mid x_{t-1})$
• (hopefully) after some $N$, the marginal distribution of the last component $x_t$, $\pi_t(x_t)$, is close to $\pi(x)$ for $t > N$.

MCMC as a special case of SMC:

• A dynamic system through augmentation
• The sequence of intermediate distributions is built from a special type of kernel, chosen so that the marginal distribution of the $t$-th component converges to the target distribution
• The trial distribution is the same as the kernel (often through a rejection method, as in M-H)
• Does not use importance weights but uses burn-in

Open questions:

Caution: flexibility comes with more ways of making mistakes
• Design the sequence of intermediate distributions $\pi_t(\mathbf{x}_t)$
• Construct efficient trial distributions
• Take advantage of MCMC-like moves
• Avoid the problems often encountered with MCMC algorithms.
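
To make the fixed-dimension idea concrete, here is a minimal Python sketch in the spirit of Arnaud's approach: start from a relatively trivial $\pi_1$, pass through a sequence of intermediate distributions, and use only the last component for inference. Everything specific in it is an assumption for illustration and does not come from the slides: the bimodal target, the Gaussian starting distribution, the geometric bridge $\pi_t \propto \pi_1^{1-\beta_t}\pi^{\beta_t}$, the random-walk Metropolis move, and resampling when the effective sample size drops below half the particle count. The names `log_target`, `log_initial`, `log_bridge`, and `smc_sampler` are hypothetical.

```python
import numpy as np


def log_target(x):
    # Hypothetical target pi(x): equal mixture of N(-3, 0.5^2) and N(3, 0.5^2),
    # known only up to a normalizing constant.
    return np.logaddexp(-0.5 * ((x + 3.0) / 0.5) ** 2,
                        -0.5 * ((x - 3.0) / 0.5) ** 2)


def log_initial(x):
    # Assumed "relatively trivial" starting distribution pi_1: N(0, 5^2).
    return -0.5 * (x / 5.0) ** 2


def log_bridge(x, beta):
    # Assumed intermediate distribution: geometric bridge between pi_1 and pi.
    return (1.0 - beta) * log_initial(x) + beta * log_target(x)


def smc_sampler(n_particles=2000, n_steps=50, step_size=1.0, seed=0):
    rng = np.random.default_rng(seed)
    betas = np.linspace(0.0, 1.0, n_steps + 1)
    x = rng.normal(0.0, 5.0, size=n_particles)  # exact draws from pi_1
    log_w = np.zeros(n_particles)

    for beta_prev, beta in zip(betas[:-1], betas[1:]):
        # Update weight: ratio pi_t / pi_{t-1} at the current particles.
        log_w += log_bridge(x, beta) - log_bridge(x, beta_prev)
        w = np.exp(log_w - log_w.max())
        w /= w.sum()

        # Multinomial resampling when the effective sample size is low.
        if 1.0 / np.sum(w ** 2) < 0.5 * n_particles:
            idx = rng.choice(n_particles, size=n_particles, p=w)
            x = x[idx]
            log_w = np.zeros(n_particles)

        # MCMC-like move: one random-walk Metropolis step that leaves
        # the current intermediate distribution invariant.
        proposal = x + step_size * rng.normal(size=n_particles)
        log_ratio = log_bridge(proposal, beta) - log_bridge(x, beta)
        accept = np.log(rng.uniform(size=n_particles)) < log_ratio
        x = np.where(accept, proposal, x)

    w = np.exp(log_w - log_w.max())
    return x, w / w.sum()


if __name__ == "__main__":
    particles, weights = smc_sampler()
    print("weighted mean of |x|:", np.sum(weights * np.abs(particles)))
    print("weight on the right-hand mode:", np.sum(weights * (particles > 0)))
```

Unlike a single Markov chain, the particle population here carries importance weights instead of relying on burn-in, and the Metropolis step plays the role of the "MCMC-like moves" listed under the open questions. The choice of bridge and of move kernel is exactly the design freedom the last slide cautions about.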