Superfluous Neuroscience Information Makes Explanations of

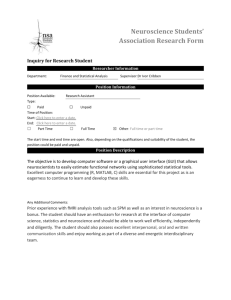
