D1.2: Artifact Speech and Manipulation Production/Perception setup

advertisement

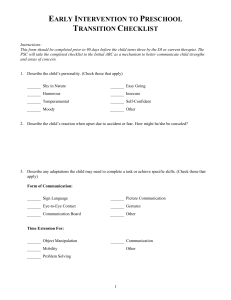

NEST Contract No. 5010 CONTACT Learning and Development of Contextual Action Instrument: Thematic Priority: Specific Targeted Research Project (STREP) New and Emerging Science and Technology (NEST), Adventure Activities Artifact Speech and Manipulation Production-Perception Setup Due date: 01/01/2006 Submission Date: 15/10/2006 Start date of project: 01/09/2005 Duration: 36 months Organisation name of lead contractor for this deliverable: University of Genova (UGDIST) Revision: 1 Project co-funded by the European Commission within the Sixth Framework Programme (2002-2006) Dissemination Level PU Public PU PP Restricted to other programme participants (including the Commission Services) RE Restricted to a group specified by the consortium (including the Commission Services) CO Confidential, only for members of the consortium (including the Commission Services) Contents 1 Introduction 2 2 Embodied artifact 2.1 Artifact for manipulation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2.2 Artifact for speech . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2.3 Integration plan . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2 2 3 3 3 Articulatory synthesis 4 4 The “linguometer” 4.1 Articulograph (Carstens AG500) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4.2 Ultrasound System (Toshiba Aplio Ultrasound machine) . . . . . . . . . . . . . . . . . 4.3 Integration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5 6 6 7 1 1 Introduction For CONTACT, we are taking a modular approach to the “embodied artifact” that will be used for this project. We expect to integrate all our algorithmic work on a single platform capable of manipulation and speech. This platform will be a humanoid robot. The modules we have identified are as follows: . Embodied artifact: UGDIST is developing a robot with a mechanically sophisticated arm/hand and setting it up for CONTACT. IST (in collaboration with US) is developing a robot head with a sophisticated ear. . Articulatory synthesis: The US, IST, and UGDIST groups are working on adapting speech generation systems that explicitly model the process of articulation. This is a key technology for our artifact to be able to treat speech production as a motor act, without being constrained to a specific language and a pre-selected set of phonemes. . The “linguometer”: We are interested in measuring speech-related phonoarticulatory activity, but tools for this are relatively poorly developed, and the tongue is relatively inaccessible, compared with human arm/hand movement. UNIFE and UGDIST have begun an activity to develop a “linguometer”, a set of instrumentation for measuring articulator activity. This is key to training algorithms for machine perception/production of speech. 2 Embodied artifact In the technical annex, the “BabyBot” robot is described, and is still available for experimentation if needed, but by joint work with other projects we now have access to other robots (see Section 2). In particular, we are collaborating with the RobotCub project (IST-004370). The goal of the RobotCub project is (among other things) to generate a fully open source robot platform. Cooperation on software and hardware is in the interests of both projects: CONTACT can give the RobotCub robot iCub a voice and models for exploring sensorimotor space, and iCub will be an excellent embodiment for the CONTACT project. 2.1 Artifact for manipulation UGDIST has been working on developing a robot platform called “James” with better dexterity, both in terms of mechanical degrees-of-freedom and sensors (see Figure 1). Figure 1: Left: James, a 23-degree-of-freedom robot with a dextrous hand. 2 Figure 2: Left: the robot head. Center: the current design for an artificial pinna for the robot head, for better sound localization. Right: the pinna helps with the locating a sound source. Determining the angle along the horizontal is relatively straightforward ; the pinna helps create a cue that in turn helps identify the elevation of the sound source. 2.2 Artifact for speech IST is investigating sound localization and tracking on a robot head, collaborating with US on ear design (see Figure 2). Without ears, a pair of microphones can only localize sounds in the horizontal plane; with correctly designed ears this limit no longer holds. IST has developed an “artificial pinna” for sound source localization. The form of the pinna is important for sound localization since it gives spectral cues on the elevation of the sound source. The human pinna can be simulated by a spiral. The pinna gives a notch when the distance to the microphone is equal to a quarter of the wavelength of the sound source (and any multiple of half the wavelength). Cues used for localization are ITD (Interaural Time Difference), ILD (Interaural Level Difference) and ISD (Interaural Spectral Difference). IST, with US, have carried out a first experiment where the system is trained with white noise, and tested with speech in an echo-free chamber. A second experiment is learning the audio-motor maps using vision in an office environment. 2.3 Integration plan UGDIST has been examining how to bring speech and manipulation systems into alignment. These systems have important practical differences: . Can the motor space be explored safely through random actions? For speech, yes – the worst that can happen is minor irritation to nearby humans. But for manipulation, the answer is no; certain motor states will break a robot, through self-collision, ripping cables, or a host of similar woes. . How stable is the mapping from motor to perceptual space? Both hearing speech and viewing manipulation are subject to all sorts of environmental distortions. The visual appearance of manipulation is perhaps subject to more radical transformations than the auditory “appearance” of speech, since vision is subject to harsher geometric effects than sound (hence the difficulty of sound localization). . How direct is the effect of motor action on the world? Speech (apart from its own sound) has primarily social-mediated effects, while manipulation (apart from its own appearance) has direct physical effects. These are all significant differences to abstract across. The latter two points are questions of degree, but the first point, safety, is critical. We could conceivably explore the motor space of an artificial articulator automatically from tabula rasa, but this is not true of the motor space of today’s robot hand/arms. Given a motor space that is potentially dangerous to explore, we need to perform some degree of calibration and the analogue of protective “reflexes” and built-in limits. We take motor spaces augmented with 3 such measures as our starting point, to give an “explorable” motor space that is now safe. We consider taking as our basic abstractions the following: . An explorable motor space (a motor space augmented with whatever measures are required to make it safe for exploration). . A “proximal” sensor space, comprising a set of sensors that relate closely to motor action, for example motor encoders, strain gauges, articulator tube resonance model setpoints, etc. . A “distal” sensor space, comprising a set of sensors that relate to motor action indirectly, for example via microphones or cameras. The mapping from “proximal” sensor space to motor space, in the case of manipulation, needs to be at least partially determined manually for our current manipulator platform (the James robot), since it is needed to achieve safety. For speech, this mapping is a candidate for automated learning. So, for now, this mapping is not a point of contact between speech and manipulation, at least for the embodied artifact. The mapping from “distal” sensor space to motor space, on the other hand, is a clear point of contact between speech and manipulation. This is so both for the embodied artifact and for human studies, so this level of abstraction seems appropriate for integration. 3 Articulatory synthesis Current approaches to speech production and speech perception by machine are highly divergent both from each other and also from any notion of being a “motor system”. We are investigating the most reasonable approach to take to speech production for our embodied artifact in order to meet our goal of integrating perception and production for speech and manipulation. The dominant model of automatic speech generation by computer takes text, converts it into a symbolic phonetic representation aligned with prosody, then converts that into actual sounds. This process is very much divorced from the perception of speech or indeed the mechanical process of articulation. At the opposite extreme, there are a small number of robots that produce speech-sounds by physical manipulation of a tube; but the technical challenges are huge. A simulated approach seems more reasonable, and sufficent for our goals. And in fact there is a family of articulatory synthesizers, an approach to speech generation that involves specific modeling of the mechanics of articulation to a greater or lesser degree of abstraction. With such systems, we can throw away all language-specific parameters and work with a continuous control-space of physically meaningful parameters of a model of the human articulatory system, without any specific language bias built in. And we can treat speech as just another motor system, like an arm or hand, because the control input is continuous in time. So far, we have taken an open-source system (GNUSpeech) for articulatory synthesis, and converted it into a continuously running real-time system with motor inputs analogous to a hand/arm (see Figure 3). One of our partners has received access to the source code for a more advanced system created by Shinji Maeda,which deals better (among other things) with extra frication sound sources created with tube constriction. We expect that this is the system we will in the end use, if it can be configured in real-time. IST has been using VTDemo, an implementation of Shinji Maeda’s articulatory synthesizer by Mark Huckvale, University College London (see Figure 4). Via US, we now also have access to Maeda’s own synthesizer. IST is considering the following questions: . Data acquisition: which is more appropriate, articulatory measurements, or speech synthesis? . Motor information: which is better, articulatory or orosensory parameters? . Other modalities: how can visual information help the segmentation process? As shown in Figure 4, IST is working on the adapting the DIVA model as a starting point for exploring the space of articulation. 4 Figure 3: Tube resonant model of GNUSpeech (left). The articulator is approximated as a tube, whose width can be controlled dynamically along its length. We have successfully converted GNUSpeech into a realtime server, receiving numerical inputs that immediately change its configuration, just as any other robotic actuator. A simple interface for testing is shown on the right. Phonemes for English are shown, but correspond only to particular sets of parameter settings, rather than discrete symbols. Figure 4: Left: the VTDemo articulatory synthesizer, adapted at IST. Right: the proposed architecture for exploring speech sounds. 4 The “linguometer” Figure 5 shows some of the instrumentation. We are interested in measuring speech-related phonoarticulatory activity, but tools for this are relatively poorly developed, and the tongue is relatively inaccessible, compared with human arm/hand movement. UNIFE and UGDIST have begun an activity to develop a “linguometer”, a set of instrumentation for measuring articulator activity. We are interested in determining whether knowledge of motor activity during speech can aid learning to perceive speech. In a previous successful project involving a subset of the partners (the MIRROR project, IST-2000-28159) an analogous result was demonstrated for grasp5 Figure 5: Left: the tongue, viewed in real-time via an ultrasound machine. Right: an articulograph, which recovers the 3D pose of sensors placed on the tongue and face. Figure 6: Initial studies at CRIL show that some tongues are easier to sense than others (compare the left image, where tongue profile is visible as a clear white line, to the right image, where profile is much less distinct). For good subjects, the real-time imaging of the tongue is of good quality, although unfortunately only for a 2D slice rather than a 3D volume. ing. To carry out this experiment for speech, we need a way to measure speech-related phonoarticulatory activity. We informally call this the “linguometer”, but in practice this will be a constellation of instruments and software. We have begun a collaboration with CRIL in Lecce to work towards integrating a ”linguometer”. The future integration of the linguometer will rely on two main instruments: 4.1 Articulograph (Carstens AG500) The AG500 articulograph locates the 3D position and orientation of 12 coils within a ”cube” generating a high frequency electromagnetic field. Its accuracy is high, and the output is very easy to interpret since it is geometric in nature. It is limited by the physical dimensions of the cube, having a long and cumbersome setup procedure (including gluing coils to the tongue), and somewhat unstable software. Also, interaction with other devices containing metallic components requires careful handling. 4.2 Ultrasound System (Toshiba Aplio Ultrasound machine) The ultrasound machine gives high frame rate (around 30 frames per second) sensing for 2D slices. Direct real-time access to data from the sensor appears difficult, and we may need to make do with output that has passed through some rescaling and other filters that ideally we would bypass. RAW data is available, but will not be used since the software running on the Toshiba Aplio system has some 6 Figure 7: The current proposed architecture for the “linguometer”, with synchronization via shared audio. constraints that limit the extraction of such data. 2D images provided by the machine give a good view of the tongue profile, although quality varies from subject to subject. For our purposes, at least in the early stages, it is sufficient to find “good” subjects whose tongue can be clearly imaged - we do not need to be able to image arbitrary subjects. An open question is how well, or whether, infants can be imaged in practice. 4.3 Integration The main idea is to record data from the Articulograph and the Ultra Sound System at the same time. This task is quite complicated since the Articulograph detects the position of each sensor using an electromagnetic field and the Ultrasound System probe interferes with it. We are actually testing the result of the synchronous recordings and building physical devices that would allow the speaker to speak naturally and us to record data with the proper accuracy. While testing the possibility of having the two different instruments to cooperate, we are developing the software that will allow us to process, store and share the recorded data. As soon as the constellation of hardware and software will be ready, we will run few test experiments for validating the setup before following the definitive protocol. Either device individually will provide excellent data for CONTACT. Integrating both will be even better, data-wise, but has a high cost in terms of time and complexity. We are evaluating this further. Our general approach for synchronizing these devices, and other devices not mentioned here, is to relate all data to the sound signal. Most devices support sound recording (especially devices designed for speech research!). 7