Statistical Tests for Medical Research Questions


BIOMETRICS I

Description, Visualisation and Simple Statistical Tests Applied to Medical Data

Lecture notes

Harald Heinzl and Georg Heinze

Core Unit for Medical Statistics and Informatics

Medical University of Vienna

Version 2010-07

Contents

Chapter 1 Data collection

1.1. Introduction
1.2. Data collection
1.3. Simple analyses
1.4. Aggregated data
1.5. Exercises

Chapter 2 Statistics and graphs

2.1. Overview
2.2. Graphs
2.3. Describing the distribution of nominal variables
2.4. Describing the distribution of ordinal variables
2.5. Describing the distribution of scale variables
2.6. Outliers
2.7. Missing values
2.8. Further graphs
2.9. Exercises

Chapter 3 Probability

3.1. Introduction
3.2. Probability theory
3.3. Exercises

Chapter 4 Statistical Tests I

4.1. Principle of statistical tests
4.2. t-test
4.3. Wilcoxon rank-sum test
4.4. Exercises

Chapter 5 Statistical Tests II

5.1. More about independent samples t-test
5.2. Chi-Square Test
5.3. Paired Tests
5.4. Confidence intervals
5.5. One-sided versus two-sided tests
5.6. Exercises

Appendices

A. Opening and importing data files
B. Data management with SPSS
C. Restructuring a longitudinal data set with SPSS
D. Measuring agreement
E. Reference values
F. SPSS-Syntax
G. Exact tests
H. Equivalence trials
I. Describing statistical methods for medical publications
J. Dictionary: English-German

References


Preface

These lecture notes are intended for the PGMW course “Biometrie I”. This manuscript is the first part of the lecture notes of the “Medical Biostatistics 1” course for PhD students of the Medical University of Vienna.

The lecture notes are based on material previously used in the seminars “Biometrische Software I: Beschreibung und Visualisierung von medizinischen Daten” (Biometric Software I: Description and Visualisation of Medical Data) and “Biometrische Software II: Statistische Tests bei medizinischen Fragestellungen” (Biometric Software II: Statistical Tests for Medical Research Questions). Statistical computations are based on SPSS 17.0 for Windows, Version 17.0.1 (1st Dec 2008, Copyright © SPSS Inc., 1993-2007).

The data sets used in the lecture notes can be downloaded at

http://www.muw.ac.at/msi/biometrie/lehre

Chapters 1 and 2, and Appendices A-E have been written by Georg

Heinze. Harald Heinzl is the author of chapters 3-6 and Appendices F-I.

Martina Mittlböck translated chapters 4 and 5 and Appendices G-I.

Andreas Gleiß translated Appendices F, J and K. Georg Heinze translated

chapters 1-3, 6 and Appendices A-E. Sincere thanks are given to the

translators, particularly to Andreas Gleiß who assembled all pieces into

one document.

Version 2009-03 contains fewer typing errors, mistranslations and wrong

citations than previous versions due to the efforts of Andreas Gleiß. The

contents of the lecture notes have been revised by Harald Heinzl (Version

2008-10).

Version 2009-03 was updated to SPSS version 17 by Daniela Dunkler, Martina Mittlböck and Andreas Gleiß. Screenshots and SPSS output that have changed only in minor aspects have not been replaced. Note that older versions of SPSS save output files with the .SPO extension, while SPSS 17 uses the .SPV extension. Old output files cannot be viewed in SPSS 17; for this purpose, the SPSS Smart Viewer 15, which is delivered together with SPSS 17, has to be used. Further note that the language of the SPSS 17 user interface and that of the SPSS 17 output can be changed independently of each other in the options menu.

If you find any errors or inconsistencies, or if you come across statistical terms that are worth including in the English-German dictionary (Appendix J), please notify us by e-mail:

harald.heinzl@meduniwien.ac.at

georg.heinze@meduniwien.ac.at


Chapter 1

Data collection

1.1. Introduction

Statistics¹ can be classified into descriptive and inferential statistics. Descriptive statistics is a toolbox for characterizing the properties of the members of a sample. The tools of this toolbox comprise

• Statistics¹ (mean, standard deviation, median, etc.) and
• Graphs (boxplot, scatterplot, pie chart, etc.)

By contrast, inferential statistics provides mathematical techniques that help us draw conclusions about the properties of a population. These conclusions are usually based on a subset of the population of interest, called the sample.

The motivation of any medical study should be a meaningful scientific question. The purpose of a study is to answer that question, not merely to search a data base for any significant associations. The scientific question always relates to a particular population. A sample is a randomly drawn subset of that population. An important requirement often imposed on a sample is that it be representative of the population. This requirement is fulfilled if

• each individual of the population has the same probability of being selected, i.e., the selection process is independent of the scientific question, and
• individuals are selected independently of each other, i.e., the selection of individual a has no influence on the probability of selection of individual b.
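One sampling scheme that satisfies both conditions literally is Bernoulli sampling, sketched here in Python purely as an illustration (it is not a procedure prescribed in these notes): every individual is included independently with the same probability p. The more familiar simple random sample of fixed size n, drawn without replacement, also gives every individual the same selection probability, but it makes selections slightly dependent, because each selected individual occupies a place that another one could have taken. The function name `bernoulli_sample` and the population of patient IDs are hypothetical.

```python
import random

def bernoulli_sample(population, p, seed=None):
    """Include each individual independently with the same probability p."""
    rng = random.Random(seed)
    # One independent random draw per individual: equal inclusion probability,
    # and no individual's draw affects any other individual's draw.
    return [individual for individual in population if rng.random() < p]

population = list(range(1, 1001))  # hypothetical patient IDs 1 to 1000
sample = bernoulli_sample(population, p=0.1, seed=42)
print(len(sample))  # around 100; the sample size itself is random
```

A consequence of this design is that the sample size is not fixed in advance; in practice, studies usually fix n and accept the mild dependence of sampling without replacement.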

Example 1.1.1: Hip joint endoprosthesis study. Consider a study that should answer the question of how long hip joint endoprostheses can be used. The population related to this scientific question consists of all patients who will receive a hip joint endoprosthesis in the future. Clearly, this population comprises a potentially unlimited number of individuals. Consider all patients who received a hip joint endoprosthesis during the years 1990-1995 in the Vienna General Hospital. These patients will be followed on a regular basis for 15 years or until their death. They constitute a representative sample of the population. An example of a sample that is not suitable for drawing conclusions about the properties of the population is the set of all patients who were scheduled for a follow-up examination in the year 2000. This sample is not representative because it misses all patients who died or underwent a revision up to that year, so the results would be over-optimistic.

¹ Note that the word STATISTICS has different meanings. In this section it is used to denote both the entire scientific field and rather simple computational formulas. Besides these, there can be other meanings as well.

A sample always consists of

• observations on individuals (e.g., patients) and
• properties or variables which were observed (e.g., systolic blood pressure before and after five minutes of training, sex, body mass index, etc.) and which vary among individuals.

A sample can be represented in a table, which is often called a data matrix. In a data matrix, rows usually correspond to observations and columns to variables.

Example 1.1.2: Data matrix. Representation of the variables patient number, sex, age, weight and height of four patients:

Pat. No.   Sex   Age (years)   Weight (kg)   Height (cm)
1          M     35            80            185
2          F     36            55            167
3          F     37            61            173
4          M     30            72            180
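To make the row/column convention concrete, the data matrix of Example 1.1.2 can be sketched in Python as a list of rows, one per patient. This is only an illustration in which plain Python lists stand in for a data file; the names `columns`, `data_matrix` and `column` are hypothetical.

```python
# Data matrix of Example 1.1.2: one row per observation (patient),
# one column per variable.
columns = ["pat_no", "sex", "age_years", "weight_kg", "height_cm"]
data_matrix = [
    [1, "M", 35, 80, 185],
    [2, "F", 36, 55, 167],
    [3, "F", 37, 61, 173],
    [4, "M", 30, 72, 180],
]

def column(name):
    """Return all values of one variable, i.e. one column of the matrix."""
    j = columns.index(name)
    return [row[j] for row in data_matrix]

print(len(data_matrix), "observations,", len(columns), "variables")
print(column("weight_kg"))  # [80, 55, 61, 72]
```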


1.2. Data collection

Case report forms

Data are usually collected on paper using so-called case report forms (CRFs), on which all study-relevant data of a patient are recorded. Case report forms should be designed such that three principles are observed:

• Unambiguousness: the exact format of data values should be given, e.g., YYYYMMDD for date variables
• Clearness: the case report forms should be easy to use
• Parsimony: only required data should be recorded

The last principle is very important. If case report forms are too long, then

the motivation of the person recording the data will decrease and data

quality will be negatively affected.
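The unambiguousness principle pays off at data entry, because a fixed format such as YYYYMMDD can be checked mechanically. A minimal Python sketch, assuming dates arrive as text; the function name `is_valid_yyyymmdd` is hypothetical and not part of any data entry program mentioned here:

```python
from datetime import datetime

def is_valid_yyyymmdd(text):
    """Check that text is a real calendar date written as exactly 8 digits."""
    # strptime alone would also accept shorter strings such as "2010715",
    # so insist on exactly eight digits first.
    if len(text) != 8 or not text.isdigit():
        return False
    try:
        datetime.strptime(text, "%Y%m%d")
    except ValueError:  # e.g. month 13 or 30 February
        return False
    return True

for entry in ["20100715", "2010-07-15", "15.07.2010", "20100230"]:
    print(entry, is_valid_yyyymmdd(entry))
```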

Building a data base

After data collection on paper, the next step is data entry into a computer system. While small data sets can easily be typed directly into a data matrix on the screen, computer forms are useful for entering large amounts of data. These computer forms are the on-screen analogue of case report forms: data are typed into the various fields of the form. Commercial programs that allow the design and use of forms are, e.g., Microsoft Office Access or SAS. Epi Info™ is a freeware program (http://www.cdc.gov/epiinfo/, cf. Fig. 1) that uses the Access format to save data, but has a graphical user interface that is easier to handle than that of Access.


Fig. 1: Computer form prepared with Epi Info™ 3.5

After data entry using forms, the data values are saved in data matrices.

One row of the data matrix corresponds to one form, and each column of

a row corresponds to one field of a form.

Electronic data bases can usually be converted from one program to

another, e. g., from a data entry program to a statistical software system

like SPSS, which will be used throughout the lecture. SPSS also offers a

commercial program for the design of case report forms and data entry

(“SPSS Data Entry”).

When building a data base, no matter which program is used, the first

step is to decide which variables it should consist of. In a second step we

must define the properties of each variable.

The following rules apply:

• The first variable should contain a unique patient identification number, which is also recorded on the case report forms.
• Each property that may vary among individuals can be considered as a variable.
• With repeated measurements on individuals (e.g., before and after treatment) there are several alternatives:
  o Wide format: one row (form) per individual; repeated measurements on the same property are recorded in multiple columns (or fields on the form); e.g., PAT = patient identification number, VAS1, VAS2, VAS3 = repeated measurements on VAS
  o Long format: one row per individual and measurement; repeated measurements on the same property are recorded in multiple rows of the same column, using a separate column to define the time of measurement; e.g., PAT = patient identification number, TIME = time of measurement, VAS = value of VAS at the time of measurement

If the wide format would require so many columns (fields) that the computer forms become too complex, the long format is chosen instead. Note that data can always be restructured from the wide to the long format and vice versa.
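The restructuring between the two formats can be sketched in a few lines of Python. The VAS values below are hypothetical, and the helpers `wide_to_long` and `long_to_wide` are made up for this illustration; in SPSS itself the corresponding task is handled through the data restructuring facilities discussed in the appendices.

```python
# Hypothetical wide-format data: one row per individual,
# repeated VAS measurements in the columns VAS1, VAS2, VAS3.
wide = [
    {"PAT": 1, "VAS1": 7, "VAS2": 5, "VAS3": 3},
    {"PAT": 2, "VAS1": 6, "VAS2": 6, "VAS3": 4},
]

def wide_to_long(rows, prefix="VAS", times=(1, 2, 3)):
    """One output row per individual and time of measurement."""
    return [
        {"PAT": row["PAT"], "TIME": t, prefix: row[f"{prefix}{t}"]}
        for row in rows
        for t in times
    ]

def long_to_wide(rows, prefix="VAS"):
    """Collect all measurements of one individual back into a single row."""
    result = {}
    for row in rows:
        entry = result.setdefault(row["PAT"], {"PAT": row["PAT"]})
        entry[f"{prefix}{row['TIME']}"] = row[prefix]
    return list(result.values())

long_rows = wide_to_long(wide)
for row in long_rows:
    print(row)
```

Because no information is lost in either direction, converting to long and back reproduces the original wide rows.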

Building an SPSS data file

The statistical software package SPSS works with several windows:

The data editor is used to build data bases, to enter data by hand, to

import data from other programs, to edit data, and to perform interactive

data analyses.

The viewer collects results of data analyses.

The chart editor facilitates the modification of diagrams prepared by

SPSS and allows identifying individuals on a chart.

Using the syntax editor, commands can be entered and collected in

program scripts, which facilitates non-interactive automated data analysis.


Data files are represented in the data editor, which consists of two tables

(views). The data view shows the data matrix with rows and columns

corresponding to individuals and variables, respectively. The variable

view contains the properties of the variables. It can be used to define

new variables or to modify properties of existing variables. These

properties are:

•

Name: unique alphanumeric name of a variable, starting with an

alphabetic character. The name may consist of alphabetic and

numeric characters and the underscore (“_”). Neither spaces nor

special characters are allowed.

•

Width: the maximum number of places.

•

Decimals: the number of decimal places.

•

Label: a description of the variable. The label will be used to name

variables in all menus and in the output viewer.

•

Values: labels assigned to values of - nominal or ordinal - variables.

The value labels replace values in any category listings. Example:

value 1 corresponds to value label ‘male’, value 2 to label ‘female’.

Data are entered as 1 and 2, but SPSS displays ‘male’ and ‘female’.

Using the Value Labels button in the toolbar, one can switch between the display of the values and the value labels in the data view. Within the value label view, one can directly choose from the defined value labels when entering data.


•

Missing: particular values may be defined as missing values. These values are not used in any analyses. Usually, however, missing values are not coded at all: if a data value is missing for an individual, the corresponding field of the data matrix is simply left empty.

•

Columns: defines the width of the data matrix column of a variable as number of characters. This value is only relevant for display and changes if a column is widened using the mouse.

•

Align: defines the alignment of the data matrix column of a variable

(left/right/center).

•

Measure: nominal, ordinal or scale. Whether particular statistical operations (e.g., computing the mean) can be applied to a variable depends on its measure. The measure of a variable is called
  o nominal if each observation belongs to one of a set of categories,
  o ordinal if these categories have a natural order, and
  o ‘scale’ (in SPSS terminology) if observations are numerical values that represent different magnitudes of the variable.
Outside SPSS, scale variables are usually called ‘metric’, ‘continuous’ or ‘quantitative’, as opposed to the ‘qualitative’ and ‘semi-quantitative’ nature of nominal and ordinal variables, respectively. Examples of nominal variables are sex, type of operation, or type of treatment. Examples of ordinal variables are treatment dose, tumor stage, response rating, etc. Scale variables are, e.g., height, weight, blood pressure, age.

•

Type: the format in which data values are stored. The most

important are the numeric, string, and date formats.

o Nominal and ordinal variables: choose the numeric type.

Categories should be coded as 1, 2, 3, … or 0, 1, 2, … Value

labels should be used to paraphrase the codes.

o Scale variables: choose the numeric type, pay attention to the

correct number of decimal places, which applies to all

computed statistics (e. g., if a variable is defined with 2

decimal places, and you compute the mean of that variable,

then 2 decimal places will be shown). However, the

computational accuracy is not affected by this option.


o Date variables: choose the date type; otherwise, SPSS won’t

be able to compute the length of time passing between two

dates correctly.
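A short Python sketch with hypothetical dates shows why dates should not be stored as plain numbers: subtracting numbers in YYYYMMDD form ignores month lengths, whereas genuine date arithmetic returns the correct number of days. This only illustrates the principle and does not reproduce SPSS's own date functions.

```python
from datetime import date

# Hypothetical admission and discharge dates.
admission = date(2010, 2, 26)
discharge = date(2010, 3, 1)

# Correct: date arithmetic knows month lengths and leap years.
length_of_stay = (discharge - admission).days

# Wrong: the same dates stored as plain numbers in YYYYMMDD form.
naive_difference = 20100301 - 20100226

print(length_of_stay)    # 3
print(naive_difference)  # 75
```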

The string format should only be used for text that will not be analyzed statistically (e.g., addresses, remarks). For nominal or ordinal variables, the numeric type should always be used, as it requires an exact definition of the categories. The list of possible categories can still be extended while entering data, and category codes can be recoded after data entry.

Example 1.2.1: Consider the variable “location” with the possible outcome categories “pancreas” and “stomach”. How should this variable be defined?

Proper definition:

The variable is defined properly if these categories are given two numeric codes, say 1 and 2. Value labels paraphrase these codes:

In any output produced by SPSS, these value labels will be used instead of

the numeric codes. When entering data, the user may choose between

any of the predefined outcome categories:


Improper definition:

The variable is defined as a string of length 10. The user types in alphabetical characters instead of choosing from a list (or entering numbers). This may easily lead to various versions of the same category:

Every distinct entry in the column “location” will be treated as a separate category. Thus the program works with six different categories instead of two.
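The effect can be reproduced in a small Python sketch. The six spellings below are hypothetical and are not the entries of the screenshot; they only show that a program comparing strings sees every spelling variant as its own category, while numeric codes with value labels leave exactly two categories.

```python
from collections import Counter

# Hypothetical free-text entries in a string variable "location":
# only two real categories, but typed in inconsistently.
typed = ["pancreas", "Pancreas", "pancreas ", "stomach", "Stomach", "stomac"]
print(len(set(typed)))  # 6 distinct "categories"

# Numeric codes with value labels: the user can only pick 1 or 2.
value_labels = {1: "pancreas", 2: "stomach"}
coded = [1, 1, 1, 2, 2, 2]
counts = Counter(value_labels[code] for code in coded)
print(dict(counts))  # {'pancreas': 3, 'stomach': 3}
```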

Further remarks applying to data entry with any program:

•

Numerical variables should only contain numbers and no units of measurement (e.g., “kg”, “mm Hg”, “points”) or other alphabetical or special characters. This is of special importance if a spreadsheet program like Microsoft Excel is used for data entry. Unlike real data base programs, spreadsheet programs allow the user to enter any type of data in any cell, so the user alone is responsible for the entries.

•

“True” missing values should be left empty rather than coded with special values (e.g., -999, -998, -997). If special codes are used, they must be defined as missing value codes, and they should be defined as value labels as well. Special codes can be advantageous for “temporary” missing values (e.g., -999 = “ask patient”, -998 = “ask nurse”, -997 = “check CRF”).

•

An empty field means that the value is missing. By contrast, in Microsoft® Office Excel® an empty cell is sometimes interpreted as zero.

•

Imprecise values can be characterized by adding a column showing the degree of certainty associated with such values (e.g., 1 = exact value, 0 = imprecise value). This allows the analyst to run two analyses: one with exact values only, and one that also uses the imprecise values. Under no circumstances should imprecisely collected data values be tagged by a question mark! This will turn the column into a string format, and SPSS (or any other statistics program) will not be able to use it for analyses.

•

Enter numbers without thousands separators (e.g., enter 1000 as 1000, not as 1,000).
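Why undeclared special codes are dangerous can be seen in a small Python sketch. The blood pressure values and the set `MISSING_CODES` are hypothetical; the point is only that a code such as -999 distorts any statistic unless it is explicitly excluded, which is what declaring it as a missing value code in SPSS achieves.

```python
from statistics import mean

# Hypothetical systolic blood pressure entries (mm Hg);
# -999 was used as a special code meaning "ask patient".
MISSING_CODES = {-999, -998, -997}
entered = [120, 135, -999, 142, None]  # None = field left empty

# Wrong: the code -999 is treated as a real measurement.
naive = mean(v for v in entered if v is not None)

# Right: declared missing codes and empty fields are both excluded.
valid = [v for v in entered if v is not None and v not in MISSING_CODES]
print(naive, mean(valid), len(valid))
```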

If a variable is defined as numeric in a data base or statistics program, it is not possible to enter anything other than numbers. Therefore, programs that do not distinguish variable types (e.g., Excel) are error-prone.
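The following Python sketch, with hypothetical values, illustrates the remarks on imprecise values: an entry tagged with “?” can no longer be read as a number, whereas a separate certainty column keeps the variable numeric and allows both analyses. The helper `to_number` is made up for this illustration and only roughly mimics what happens when a program expecting numbers meets such an entry.

```python
from statistics import mean

def to_number(text):
    """Convert an entry to a number; return None if that is impossible."""
    try:
        return float(text)
    except ValueError:
        return None

# Imprecise value tagged with a question mark: the entry is no longer numeric.
tagged = ["140", "150?", "135"]
print([to_number(t) for t in tagged])  # [140.0, None, 135.0]

# Recommended: numeric values plus a certainty column
# (1 = exact value, 0 = imprecise value).
values = [140, 150, 135]
exact = [1, 0, 1]

mean_exact_only = mean(v for v, e in zip(values, exact) if e == 1)
mean_all = mean(values)
print(mean_exact_only, round(mean_all, 2))
```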

For a more detailed discussion of data management issues, the reader is referred to Appendices A and B.


1.3. Simple analyses

In small data sets, values can be checked by computing a frequency table for each variable. This can be done using the menu Analyze-Descriptive Statistics-Frequencies. Put all variables into the field Variables and press OK.

The SPSS Viewer window pops up and shows a frequency table for each

variable. Within each frequency table, values are sorted in ascending

order. This enables the user to quickly check the minimum and maximum

values and discover implausible values.

lie_3
                 Frequency   Percent   Valid Percent   Cumulative Percent
Valid   120          1          6.7          6.7               6.7
        125          1          6.7          6.7              13.3
        127          1          6.7          6.7              20.0
        136          1          6.7          6.7              26.7
        143          1          6.7          6.7              33.3
        144          1          6.7          6.7              40.0
        145          2         13.3         13.3              53.3
        150          3         20.0         20.0              73.3
        152          1          6.7          6.7              80.0
        155          1          6.7          6.7              86.7
        165          1          6.7          6.7              93.3
        203          1          6.7          6.7             100.0
        Total       15        100.0        100.0

The columns have the following meanings:

• Frequency: the absolute frequency of observations having the value shown in the first column
• Percent: percentage of observations with values equal to the value in the first column, relative to the total sample including observations with missing values
• Valid Percent: percentage of observations with values equal to the value in the first column, relative to the total sample excluding observations with missing values
• Cumulative Percent: percentage of observations with values up to the value shown in the first column. E.g., in the row for 145, a cumulative percentage of 53.3 means that 53.3% of the probands have blood pressure values less than or equal to 145. Cumulative percents refer to valid percents, that is, they exclude missing values.
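The four columns can be recomputed with a short Python sketch, which makes the different denominators explicit. The function `frequency_table` is hypothetical, not an SPSS API; `None` stands for a missing value. The 15 values are reconstructed from the frequencies in the lie_3 table above, since the table lists every distinct value with its count.

```python
from collections import Counter

def frequency_table(values):
    """Rows of (value, frequency, percent, valid percent, cumulative percent).

    None stands for a missing value: it counts towards Percent,
    but not towards Valid Percent or Cumulative Percent.
    """
    n_total = len(values)
    valid = [v for v in values if v is not None]
    n_valid = len(valid)
    counts = Counter(valid)
    rows, cumulative = [], 0
    for value in sorted(counts):  # ascending order, as in the table above
        freq = counts[value]
        cumulative += freq
        rows.append((value, freq,
                     100 * freq / n_total,
                     100 * freq / n_valid,
                     100 * cumulative / n_valid))
    return rows

lie_3 = [120, 125, 127, 136, 143, 144, 145, 145,
         150, 150, 150, 152, 155, 165, 203]

for value, freq, pct, valid_pct, cum_pct in frequency_table(lie_3):
    print(f"{value:>5}{freq:>4}{pct:8.1f}{valid_pct:8.1f}{cum_pct:8.1f}")
```

Appending a single `None` to `lie_3` would lower Percent for 150 to 18.75 while Valid Percent stays at 20.0.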


Frequency tables are particularly useful for describing the distribution of nominal or ordinal variables:

lie_3c
                    Frequency   Percent   Valid Percent   Cumulative Percent
Valid   Normal          11        73.3         73.3               73.3
        High             3        20.0         20.0               93.3
        Very high        1         6.7          6.7              100.0
        Total           15       100.0        100.0

Obviously, variable “lie_3c” is ordinal. From the table we learn that 93.3%

of the probands have normal or high blood pressure.


1.4. Aggregated data

SPSS is able to handle data that are already aggregated, i.e., data sets that have already been compiled into a frequency table. In the data set shown below, each observation corresponds to a category constituted by a unique combination of variable values. The variable “frequency” shows how many observations fall into each category:

As we see, “frequency” is not a variable describing some property of the patients; rather, it acts as a counter. Therefore, we must inform SPSS about the special meaning of “frequency”. This is done by choosing Data-Weight cases from the menu and putting Number of patients (frequency) into the field Frequency Variable:.
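What case weighting does can be sketched in Python with a small, entirely hypothetical aggregated data set (it is not the data set shown above): without weighting every row counts once, with weighting every row counts as many times as its frequency value says.

```python
from collections import Counter

# Hypothetical aggregated data: each row is one combination of
# variable values; "frequency" says how many patients share it.
aggregated = [
    {"ageclass": "<40",   "sex": "male",   "frequency": 3},
    {"ageclass": "<40",   "sex": "female", "frequency": 5},
    {"ageclass": "40-60", "sex": "male",   "frequency": 2},
    {"ageclass": "40-60", "sex": "female", "frequency": 4},
    {"ageclass": ">60",   "sex": "female", "frequency": 6},
]

# Without weighting: every row counts once.
unweighted = Counter(row["ageclass"] for row in aggregated)

# With weighting: every row counts "frequency" times.
weighted = Counter()
for row in aggregated:
    weighted[row["ageclass"]] += row["frequency"]

print(dict(unweighted))  # {'<40': 2, '40-60': 2, '>60': 1}
print(dict(weighted))    # {'<40': 8, '40-60': 6, '>60': 6}
```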


Producing a frequency table for the variable “ageclass” we obtain:

Age class
                 Frequency   Percent   Valid Percent   Cumulative Percent
Valid   <40         111        45.5         45.5               45.5
        40-60        23         9.4          9.4               54.9
        >60         110        45.1         45.1              100.0
        Total       244       100.0        100.0

If the weighting had not been performed, the table would erroneously

read as follows:

Age class
                 Frequency   Percent   Valid Percent   Cumulative Percent
Valid   <40           3        25.0         25.0               25.0
        40-60         4        33.3         33.3               58.3
        >60           5        41.7         41.7              100.0
        Total        12       100.0        100.0

This table just counts the number of rows with the corresponding ageclass

values!


1.5. Exercises

1.5.1. Cornea:

Source: Guggenmoos-Holzmann and Wernecke (1995). In an ophthalmology department the effect of age on corneal temperature was investigated. Forty-three patients in four age groups were measured:

Age group   Measurements
12-29       35.0 34.1 33.4 35.2 35.3 34.2 34.6 35.7 34.9
30-42       34.5 34.4 35.5 34.7 34.6 34.9 34.6 34.9 33.0 34.1 33.9 34.5
43-55       35.0 33.1 33.6 33.6 34.2 34.5 34.3 32.5 33.2 33.2
56-73       34.5 34.7 35.0 34.1 33.8 34.0 34.3 34.9 34.5 34.5 33.4 34.2

Create an SPSS data file. Save the file as “cornea.sav”.

1.5.2. Psychosis and type of constitution:

Source: Lorenz (1988). 8099 patients suffering from endogenous psychosis or epilepsy were classified into five groups according to their type of constitution (asthenic, pyknic, athletic, dysplastic, and atypical) and into three classes according to type of psychosis. Each patient falls into one of the 15 resulting categories. The frequency of each category is depicted below:

              schizophrenia   manic-depressive disorder   epilepsy
asthenic          2632                  261                  378
pyknic             717                  879                   83
athletic           884                   91                  435
dysplastic         550                   15                  444
atypical           450                  115                  165

Which are the variables of this data set?

Enter the data set into SPSS and save it as “psychosis.sav”.


Chapter 2

Statistics and graphs

2.1. Overview

Typically, a descriptive statistical analysis of a sample takes two steps:

Step 1: The data are explored graphically and by means of statistical measures. The purpose of this step is to obtain an overview of distributions and associations in the data set. At this stage, we do not restrict ourselves to the main scientific question.

Step 2: For describing the sample (e.g., for a talk or a paper), only those graphs and measures are used that allow the most concise conclusions about the data distribution. Unnecessary and redundant measures are omitted. The statistical measures used for description are usually summarized in a table (e.g., a “patient characteristics” table).

When choosing appropriate statistical measures (“statistics”) and graphs,

one has to consider the measurement type (in SPSS denoted by

“measure”) of the variables (nominal, ordinal, or scale). The following

statistics and graphs are available:

•

Nominal variables (e. g. sex or type of operation)

o Statistics: frequency, percentage

o Graphs: bar chart, pie chart

•

Ordinal variables (e. g. tumor stage, school grades, categorized

scales)

o Statistics: frequency, percentage, median, quartiles,

percentiles, minimum, maximum

o Graphs: bar chart, pie chart, and – with reservations - box

plot

•

Scale variables (e. g. height, weight, age, leukocyte count)

o Statistics: median, quartiles, percentiles, minimum,

maximum, mean, standard deviation, if data is categorized:

frequency and percentage

o Graphs: box plot, histogram, error bar plot, dot plot, bar

chart and pie chart if data is categorized
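The list above can be read as a small decision rule. The following Python sketch, a hypothetical helper named `describe` that is not part of SPSS, returns the statistics suggested for each measure using the standard library `statistics` module (`statistics.quantiles` requires Python 3.8 or later). Quartile definitions differ between programs, so the quartiles may deviate slightly from SPSS output for the same data.

```python
from collections import Counter
import statistics

def describe(values, measure):
    """Summary statistics suggested for the measure of a variable."""
    if measure == "nominal":
        # Frequencies and percentages only.
        n = len(values)
        return {k: (c, 100 * c / n) for k, c in Counter(values).items()}
    if measure not in ("ordinal", "scale"):
        raise ValueError(f"unknown measure: {measure!r}")
    ordered = sorted(values)  # ordinal values must be numeric codes
    result = {
        "min": ordered[0],
        "max": ordered[-1],
        "median": statistics.median(ordered),
        "quartiles": statistics.quantiles(ordered, n=4),  # default method
    }
    if measure == "scale":
        # Mean and standard deviation only make sense for scale variables.
        result["mean"] = statistics.mean(ordered)
        result["sd"] = statistics.stdev(ordered)
    return result

print(describe(["m", "f", "f", "m", "f"], "nominal"))
print(describe([1, 2, 3, 4, 5], "scale"))
```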


The statistical description of distributions is demonstrated using a data set called “cholesterol.sav”. This data set contains the variables age, sex, height, weight, cholesterol level, type of occupation, sports, abdominal girth and hip dimension of 83 healthy probands. First, the total sample is described; then the description is broken down by sex.

2.2. Graphs

SPSS distinguishes between graphs created with the Chart Builder... and older versions of (interactive and non-interactive) graphs created with Legacy Dialogs. Although the capabilities of these two types of graphs overlap, some diagrams can only be produced in one way or the other. Most diagrams needed in the course can be created using the Chart Builder. It is important to note that the chart preview window within the Chart Builder dialogue window does not represent the data but only gives a sketch of a typical chart of the selected type. Further note that graphs constructed using the menu Graphs-Legacy Dialogs-Interactive cannot be changed interactively in the same manner as in SPSS version 14; instead, they are also edited using the Chart Editor.


2.3. Describing the distribution of nominal variables

Sex and type of occupation are the nominal variables in our data set. A

pie chart showing the distribution of type of occupation is created by

choosing Graphs-Chart Builder... from the menu and dragging

Pie/Polar from the Gallery tab to the Chart Preview field:

Drag Type of occupation [occupation] into the field Slice by? and

press OK. The pie chart is shown in the SPSS Viewer window:


Double-clicking the chart opens the Chart Editor, which allows the user to change colors, styles, labels, etc. E.g., the counts and percentages shown in the chart above can be added by selecting Show Data Labels in the Elements menu of the Chart Editor. In the Properties window that pops up, Count and Percentages are moved to the Labels Displayed field using the green arrow button. For the Label Position, we select labels to be shown outside the pie, and finally press Apply.


Statistics can be summarized in a table using the menu Analyze-Tables-Custom Tables.... Drag Type of occupation to the Rows part in the preview field and press Summary Statistics:


Here we select Column N % and move it to the display field using the

arrow button. After clicking Apply to Selection and OK we arrive at the

following table:

Type of occupation    Count    Col %
mostly sitting          24     28.9%
mostly standing         37     44.6%
mostly in motion        16     19.3%
retired                  6      7.2%

The table may be sorted by cell count by pressing the Categories and

Totals... button in the custom tables window, which is sometimes useful

for nominal variables. With ordinal or scale variables, however, the order

of the value labels is crucial and has to be maintained.
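The difference between the two orderings can be sketched in Python. The occupation counts are taken from the table above; the tumor stage counts are hypothetical and serve only as an example of an ordinal variable, whose categories are listed in their natural order regardless of their counts.

```python
from collections import Counter

# Counts of "Type of occupation" from the table above.
occupation = Counter({"mostly sitting": 24, "mostly standing": 37,
                      "mostly in motion": 16, "retired": 6})
n = sum(occupation.values())

# Nominal variable: listing categories by decreasing count is legitimate.
for category, count in occupation.most_common():
    print(f"{category:<18}{count:>4}{100 * count / n:7.1f}%")

# Ordinal variable (hypothetical counts): keep the natural order
# of the categories instead of sorting by count.
tumor_stage_order = ["I", "II", "III", "IV"]
tumor_stage = Counter({"I": 5, "II": 12, "III": 9, "IV": 3})
for stage in tumor_stage_order:
    print(f"{stage:<5}{tumor_stage[stage]:>4}")
```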


We can repeat the analyses for subgroups defined by sex. In the Chart Builder, check Columns panel variable in the Groups/Point ID tab and then drag Sex into the field Panel? that appears:


The same information is provided by a stacked bar chart. It is produced by pressing Reset in the Chart Builder, selecting Bar in the Gallery tab and dragging the third bar chart variant (Stacked Bar) to the preview field:


Drag Type of Occupation to the X-Axis? field and Sex to the Stack: set color field:


Sometimes it is useful to compare percentages within groups instead of absolute counts. Select Percentage in the Statistics field of the Element Properties window beside the Chart Builder window, and then press Set Parameters... in order to select Total for Each X-Axis Category. Do not forget to confirm your selections by pressing Continue and Apply, respectively. After closing the Chart Builder with OK, the bars of the four occupational types all have the same height (100%), so the proportions of males and females can be compared more easily between occupational types. The scaling to 100% within each category can also be done afterwards in the Options menu of the Chart Editor.


The order of appearance can be changed (e.g., to bring female on top) by double-clicking on any bar within the Chart Editor and selecting the Categories tab:

The sorting order can also be changed for the type of occupation by double-clicking on any label on the X-axis within the Chart Editor and selecting the Categories tab again.


To create a table containing frequencies and percentages of type of

occupation broken down by sex, choose Analyze-Tables-Custom Tables

and, in addition to the selections shown above, drag the variable Sex to

the Columns field, and request Row N % in the Summary Statistics

dialogue.

                      Sex
                      male                        female
Type of occupation    Count   Col %    Row %      Count   Col %    Row %
mostly sitting        10      25.0%    41.7%      14      32.6%    58.3%
mostly standing       22      55.0%    59.5%      15      34.9%    40.5%
mostly in motion      7       17.5%    43.8%      9       20.9%    56.3%
retired               1       2.5%     16.7%      5       11.6%    83.3%

Row % values sum to 100% within each row, and Col % values sum to 100% within each column. Therefore, we compare the proportion of males between types of occupation by looking at Row %, and we compare the distribution of types of occupation between the two sexes by looking at Col %.
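The two kinds of percentages can be reproduced from the counts in the table above. The following Python sketch is only an illustration (the function names `row_percent` and `col_percent` are our own, not SPSS features): it divides each count by its row total or by its column total, respectively. Row percentages are also what the 100% scaling within each X-axis category shows in the stacked bar chart.

```python
# Counts of type of occupation by sex, taken from the table above.
counts = {
    "mostly sitting":   {"male": 10, "female": 14},
    "mostly standing":  {"male": 22, "female": 15},
    "mostly in motion": {"male": 7,  "female": 9},
    "retired":          {"male": 1,  "female": 5},
}

def row_percent(table):
    """Percentages within each row (type of occupation); each row sums to 100."""
    result = {}
    for row, cells in table.items():
        total = sum(cells.values())
        result[row] = {col: 100 * n / total for col, n in cells.items()}
    return result

def col_percent(table):
    """Percentages within each column (sex); each column sums to 100."""
    col_totals = {}
    for cells in table.values():
        for col, n in cells.items():
            col_totals[col] = col_totals.get(col, 0) + n
    return {row: {col: 100 * n / col_totals[col] for col, n in cells.items()}
            for row, cells in table.items()}

rows = row_percent(counts)
cols = col_percent(counts)
for occupation in counts:
    print(f"{occupation:17s} male Row% {rows[occupation]['male']:5.1f}  "
          f"male Col% {cols[occupation]['male']:5.1f}")
```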


2.4. Describing the distribution of ordinal variables

All methods for nominal variables also apply to ordinal variables. Additionally, one may use the so-called nonparametric or distribution-free statistics, which make specific use of the ordinal information contained in the variable. In our data set, the only ordinal variable is “sports”, characterizing the intensity of the participants' leisure sports. Although nonparametric statistics could be calculated here, with such a crude classification the methods for nominal variables are more informative. However, attention must be paid to the correct order of categories: with ordinal variables, categories may not be interchanged. The frequency table and bar chart for sports, grouped by sex, look as follows:

               Sex
               male                        female
Sports         Count   Col %    Row %      Count   Col %    Row %
never          7       17.5%    50.0%      7       16.3%    50.0%
seldom         22      55.0%    52.4%      20      46.5%    47.6%
sometimes      11      27.5%    45.8%      13      30.2%    54.2%
often          0       0.0%     0.0%       3       7.0%     100.0%


2.5. Describing the distribution of scale variables

Histogram

The histogram is a graphical tool that shows the distribution of a scale variable. It is mostly used in the exploratory step of data analysis. The histogram depicts the frequencies of a categorized scale variable, similarly to a bar chart. However, in a histogram there is no space between the bars (because consecutive categories border on each other), and the bars may not be reordered. In the following example, 143 students of the University of Connecticut were arranged according to their height (source: Schilling et al, 2002). This resulted in a “living histogram”:

Scale variables must be categorized before they can be characterized using frequencies (e. g., age: 0-40 = young, >40 = old). When a histogram is requested, SPSS categorizes the variable automatically before computing the category counts. The category borders can later be edited “by hand”. The histogram is created by choosing the first variant (Simple Histogram) from the histograms offered in the Gallery tab of the Chart Builder. To create a histogram for abdominal girth, drag the variable Abdominal girth (cm) [waist] into the field X-Axis? and press OK.


Suppose we want abdominal girth to be categorized into intervals of 10 cm each. Double-click the graph and then double-click any bar of the histogram. In the Properties window that pops up, select the Binning tab and check Custom and Interval width in the X-Axis field. Entering the value 10 and confirming your choice by clicking Apply updates the histogram to the new settings:


From the shape of the histogram we learn that the distribution is not symmetric: the tail on the right-hand side is longer than the tail on the left-hand side. Distributions of that shape are called “right-skewed”. They often arise from a natural lower limit of the variable. The number of intervals to be displayed is a matter of taste and depends on the total sample size. It should be chosen such that the frequency of each interval is not too small; otherwise the histogram contains artificial “holes”.
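To make the binning step concrete, here is a minimal Python sketch that counts observations in intervals of a chosen width, as done above with 10 cm intervals. The girth values are hypothetical, and the rule that each interval includes its lower border but not its upper border is an assumption of this sketch; SPSS may treat values lying exactly on a border differently. Empty intervals, i.e., the “holes” mentioned above, are listed with a count of 0.

```python
import math
from collections import Counter

def histogram_counts(values, width, start):
    """Count values in intervals [start + k*width, start + (k+1)*width)."""
    # Index of the interval each value falls into (lower border included).
    index = Counter(math.floor((v - start) / width) for v in values)
    first, last = min(index), max(index)
    # Report every interval between the first and last occupied one,
    # including empty intervals ("holes") with a count of 0.
    return [(start + k * width, start + (k + 1) * width, index.get(k, 0))
            for k in range(first, last + 1)]

# Hypothetical abdominal girth values (cm), right-skewed.
girth = [64, 71, 78, 82, 85, 86, 88, 91, 92, 95, 101, 108, 117, 135]
for lower, upper, count in histogram_counts(girth, width=10, start=60):
    print(f"{lower:3d}-{upper:3d} cm: {'#' * count}")
```

With these values the interval from 120 to 130 cm stays empty, which shows up as a gap in the text histogram.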


Histograms can also be compared between subgroups. For this purpose, perform the same steps within the Chart Builder as in the case of pie charts:

The histograms use the same scaling on both axes, so that they can be compared easily. Note that comparing the abdominal girth distributions between the two sexes requires relative frequencies (select Histogram Percent in the Element Properties window beside the Chart Builder), because the two groups differ in size.


Dot plot

The dot plot serves to compare the individual values of a variable between

groups, e. g., the abdominal girth between males and females. In the

Chart Builder select the first variant (Simple Scatter) from the

Scatter/Dot plots in the Gallery tab and drag Abdominal girth and Sex

into the vertical and horizontal axis fields, respectively:


The dot plot may suffer from ties in the data, i. e., two or more dots

superimposed, which may obscure the true distribution. Later a variant of

the dot plot is introduced that overcomes that problem by a parallel

depiction of superimposed dots.

Using proper statistical measures

Descriptive statistics should give a concise description of the distribution

of variables. There are measures describing the position and measures

describing the spread of a distribution.

We distinguish between parametric and nonparametric (distribution-free) statistics. Parametric statistics assume that the data follow a particular theoretical distribution, usually the normal distribution. If this assumption holds, parametric measures allow a more concise description of the distribution than nonparametric measures (because fewer numbers are needed). If the assumption does not hold, nonparametric measures must be used; otherwise the description misrepresents the distribution and may mislead the target audience.


Nonparametric statistics

Nonparametric measures make sense for ordinal or scale variables, as they are based on the sorted sample. They are roughly defined as follows (for a more stringent definition see below):

• Median: the midpoint of the observations when they are ordered from the smallest to the largest value (50% fall below and 50% fall above this point)

• 25th percentile (first or lower quartile): the value such that 25 percent of the observations fall below or at that value

• 75th percentile (third or upper quartile): the value such that 75 percent of the observations fall below or at that value

• Interquartile range (IQR): the difference between the 75th percentile and the 25th percentile

• Minimum: the smallest value

• Maximum: the largest value

• Range: the difference between maximum and minimum

While median, percentiles, minimum and maximum characterize the position of a distribution, interquartile range and range describe its spread, i. e., the variation of a feature.

IQR and range can be used for scale but not for ordinal variables, as differences between two values usually make no sense for the latter.

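The exact definition of the median (and analogously of the quartiles) is as follows: the median is a value m such that at least 50% of the observations are smaller than or equal to m and at least 50% of the observations are greater than or equal to m. For an even number of observations, any value between the two middle observations satisfies this definition; by convention, their midpoint is usually reported.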
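A minimal Python sketch of the measures listed above, using the standard library module `statistics` and hypothetical abdominal girth values. Percentiles can be computed by several conventions; the `method="exclusive"` option used here places the p-th percentile at position (N + 1)p of the sorted data and interpolates, and other programs may use a different rule and give slightly different quartiles. The helper `satisfies_median_definition` (a name of our own) checks the exact definition given above.

```python
import statistics

def describe_nonparametric(values):
    """Median, quartiles, IQR, minimum, maximum and range of a sample."""
    data = sorted(values)
    # Three cut points Q1, median, Q3. method="exclusive" places the p-th
    # quantile at position (N + 1) * p of the sorted data and interpolates.
    q1, median, q3 = statistics.quantiles(data, n=4, method="exclusive")
    return {"median": median, "q1": q1, "q3": q3, "iqr": q3 - q1,
            "min": data[0], "max": data[-1], "range": data[-1] - data[0]}

def satisfies_median_definition(values, m):
    """Exact definition: at least 50% of values <= m and at least 50% >= m."""
    n = len(values)
    at_most = sum(v <= m for v in values)
    at_least = sum(v >= m for v in values)
    return 2 * at_most >= n and 2 * at_least >= n

girth = [78, 82, 85, 86, 88, 91, 92, 95, 101, 130]  # hypothetical values (cm)
print(describe_nonparametric(girth))
```

With N = 10, every value from 88 to 91 satisfies the median definition, and the reported median is their midpoint, 89.5.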

The box plot depicts all of these nonparametric statistics in one graph. It is certainly one of the most important graphical tools in statistics. It allows groups to be compared at a glance without much loss of the information contained in the data and without assuming any theoretical shape of the distribution. Box plots help us to decide whether a distribution is symmetric, right-skewed or left-skewed, and whether there are very large or very small values (outliers). The only drawback of the box plot compared to the histogram is that it cannot depict multimodal distributions (histograms with two or more peaks).

The box contains the central 50% of the data. The line in the box marks the median. The whiskers extend from the box to the smallest and largest observations that lie within 1.5 IQR of the quartiles. Observations that lie farther away from the quartiles are marked by a circle (1.5 to 3 IQR) or an asterisk (more than 3 IQR from the quartiles).
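The whisker and outlier rule can be written down in a few lines. This Python sketch is an illustration under assumptions: the quartiles use the same (N + 1)p convention as in a plain `statistics.quantiles` call, which need not coincide with the quartiles SPSS uses for its box plots, so observations close to a limit may be classified differently there. The two hypothetical samples are chosen to produce one circle and one asterisk, respectively.

```python
import statistics

def boxplot_summary(values):
    """Box, whiskers and outlier classes following the 1.5/3 IQR rule."""
    data = sorted(values)
    q1, median, q3 = statistics.quantiles(data, n=4, method="exclusive")
    iqr = q3 - q1
    inner = (q1 - 1.5 * iqr, q3 + 1.5 * iqr)   # whisker limits
    outer = (q1 - 3 * iqr, q3 + 3 * iqr)       # beyond these: asterisks
    inside = [v for v in data if inner[0] <= v <= inner[1]]
    circles = [v for v in data
               if outer[0] <= v < inner[0] or inner[1] < v <= outer[1]]
    asterisks = [v for v in data if v < outer[0] or v > outer[1]]
    return {"box": (q1, median, q3),
            "whiskers": (inside[0], inside[-1]),
            "circles": circles,
            "asterisks": asterisks}

print(boxplot_summary([78, 82, 85, 86, 88, 91, 92, 95, 101, 130]))
print(boxplot_summary([10, 11, 12, 13, 14, 15, 16, 17, 18, 60]))
```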


The use of the box plot for ordinal variables is limited as the outlier

definition refers to IQR.

Box plots are obtained in the Chart Builder by dragging the first variant of

the Boxplots offered in the Gallery tab to the preview field.


Check Point ID Label in the Groups/Point ID tab and drag the Pat.No.[id] variable to the Point Label Variable? field that appears in the preview field. Any extreme values or outliers shown in the plot are then labelled by that variable instead of by their row number in the data view.

As before, checking Columns Panel Variable in the same tab allows another variable to be specified, producing one chart for each of its values.

The box plot does well in depicting the distribution actually observed, as

shown by the comparison with the original values (dot plot):


Parametric statistics and the normal distribution

When creating a histogram of body height we can check the Display

normal curve option in the Element Properties window beside the

Chart Builder window:

The bars of the histogram more or less follow the normal curve. This curve is given by a mathematical formula characterized by two parameters, the mean µ and the standard deviation σ. Knowing these two parameters, we can specify ranges where, e. g., the smallest 5% of the data are located or where the middle 2/3 can be expected. These proportions are obtained from the distribution function of the normal distribution:

$$P(y \le x) = \int_{-\infty}^{x} \frac{1}{\sigma\sqrt{2\pi}} \exp\left(-\frac{1}{2}\left(\frac{t-\mu}{\sigma}\right)^{2}\right) dt$$

P(y ≤ x) denotes the probability that a value y, drawn randomly from a normal distribution with mean µ and standard deviation σ, is equal to or less than x. The usually unknown parameters µ and σ are estimated by their sample values µ̂ (the sample mean) and σ̂ (the sample standard deviation, SD):

$$\text{Mean} = \hat{\mu} = \frac{1}{N}\sum_{i=1}^{N} x_i$$

$$\text{SD} = \hat{\sigma} = \sqrt{\frac{1}{N-1}\sum_{i=1}^{N}\left(x_i - \hat{\mu}\right)^{2}}$$
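The two estimators translate directly into code. In this Python sketch the heights are hypothetical; `statistics.mean` and `statistics.stdev` from the standard library compute the same quantities (the latter also with divisor N − 1).

```python
import math
import statistics

def mean_and_sd(values):
    """Sample mean and sample SD with divisor N - 1, as in the formulas."""
    n = len(values)
    mean = sum(values) / n
    sd = math.sqrt(sum((x - mean) ** 2 for x in values) / (n - 1))
    return mean, sd

heights = [170, 175, 180, 185, 190]  # hypothetical heights (cm)
mean, sd = mean_and_sd(heights)
# The standard library gives the same results.
print(mean, statistics.mean(heights), sd, statistics.stdev(heights))
```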

Assuming a normal distribution and using the above formula, we obtain the following ranges:

• Mean: 50% of the observations fall below the mean and 50% fall above it

• Mean − SD to Mean + SD: 68% (roughly two thirds) of the observations fall into that range

• Mean − 2SD to Mean + 2SD: 95% of the observations fall into that range

• Mean − 3SD to Mean + 3SD: 99.7% of the observations fall into that range

Note: Although mean and standard deviation can be computed from any

variable, the above interpretation is only valid for normally distributed

variables.
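The proportions listed above can be checked with the normal distribution function. This Python sketch uses `statistics.NormalDist` from the standard library (Python 3.8 or later). Plugging in the male height estimates reported in this chapter (mean 179.38 cm, SD 6.83 cm) is purely illustrative and presumes that height is normally distributed.

```python
from statistics import NormalDist

standard = NormalDist(mu=0.0, sigma=1.0)

def proportion_within(k):
    """Proportion of a normal distribution within mean ± k SD."""
    return standard.cdf(k) - standard.cdf(-k)

for k in (1, 2, 3):
    print(f"mean ± {k} SD: {proportion_within(k):.4f}")

# Plug-in estimates for male height (cm), used for illustration only.
male_height = NormalDist(mu=179.38, sigma=6.83)
p_below_170 = male_height.cdf(170.0)           # P(y <= 170)
fifth_percentile = male_height.inv_cdf(0.05)   # smallest 5% lie below this
print(f"P(height <= 170) = {p_below_170:.3f}, 5th percentile = {fifth_percentile:.1f}")
```

The exact proportion within two SDs is about 95.4%; the familiar 95% corresponds to 1.96 SDs.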

Displaying mean and SD: how not to do it

Mean and SD are often depicted in bar charts like the one shown below. However, experienced researchers discourage the use of such charts. Their reasons become clear from a comparison with the dot plot:


Some statistics programs offer bar charts with whiskers showing the standard deviation to depict mean and standard deviation of a variable. The bar suggests that the data range begins at the origin of the bar, which is 150 in our example. The dot plot shows, however, that the minimum for males is much higher, about 162 cm. Furthermore, the mean, which is a single value, is represented by a bar, which covers a range of values. On the other hand, the standard deviation, a measure of the spread of a distribution, is plotted only above the mean. This suggests that the variable spreads in only one direction from the mean. The standard deviation should always be plotted in both directions from the mean.

Furthermore, the origin of 150 used here is not a natural one; therefore, the length of the bars is completely arbitrary. The ratios between the bar lengths give a wrong idea of the relationship between the means, which should always be seen relative to the spread of the distributions. By changing the y-axis scale, the impression of a difference can easily be exaggerated or attenuated, as the following comparison shows:


Displaying mean and SD: how to do it

Mean and SD are correctly depicted in an error bar chart. Select the third

variant in the second row (Simple Error Bar) of the Bar Charts offered

in the Gallery tab:

Put the variable of interest (height in our example) into the vertical axis

field, and the grouping variable (sex) into the horizontal axis field.


In the field Error Bars Represent of the Element Properties window, check Standard Deviation.

The multiplier should be set to 1.0 to have one SD plotted above and below the mean. If the multiplier is set to 2.0, the error bars cover the range of mean plus/minus two standard deviations; assuming normal distributions, about 95% of the observations fall into that range. Usually, one standard deviation is shown in an error bar plot, corresponding to about 2/3 of the observations.

We obtain a chart showing two error bars, corresponding to males and

females:


The menu also offers the standard error of the mean (SEM) instead of the SD. This statistic measures the precision of the sample mean as an estimate of the population mean. It is defined as the standard deviation divided by the square root of N. Therefore, the SEM decreases with the square root of the number of observations: quadrupling N halves the SEM. However, the SEM does not show the spread of the data. Therefore, it should not be used to describe samples.
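The following Python sketch contrasts SEM and SD. The function names are our own, and the SD of 6.83 cm is borrowed from the male height example only for illustration: quadrupling N from 40 to 160 halves the SEM, while the spread of the data, described by the SD, is not affected by N.

```python
import math
import statistics

def standard_error_of_mean(values):
    """SEM = sample SD / sqrt(N)."""
    return statistics.stdev(values) / math.sqrt(len(values))

def sem_from_sd(sd, n):
    """SEM for a given SD and sample size."""
    return sd / math.sqrt(n)

# Same SD, four times as many observations: the SEM is halved.
ratio = sem_from_sd(6.83, 40) / sem_from_sd(6.83, 160)
print(f"SEM(N=40) / SEM(N=160) = {ratio:.3f}")
print(standard_error_of_mean([170, 175, 180, 185, 190]))  # hypothetical data
```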

Verifying the normal assumption

In small samples it can be very difficult to verify the assumption of a normal distribution. The following illustration shows how much the observed distributions of normally distributed and right-skewed data sets vary. Values were sampled from a normal distribution and from a right-skewed distribution and then collected into data sets of size 10, 30 and 100. As can be seen, the histograms of the samples of size N=10 often look asymmetric and do not reflect a normal distribution. Conversely, some of the histograms of the right-skewed variable are close to a normal distribution. This effect is caused by random variation, which is considerable in small samples. Therefore, it is very difficult to reach a clear decision about the normal assumption in small samples.
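The illustration can be imitated by simulation. The following Python sketch is hypothetical in every detail (a normal distribution with mean 100 and SD 15, a lognormal distribution as the right-skewed example, six replicates per sample size, seed 2010). It summarizes each simulated sample by its moment-based skewness, which is near 0 for symmetric samples and positive for right-skewed ones; one would expect these values to scatter widely at N = 10 and to separate the two distributions more clearly at N = 100.

```python
import random
import statistics

def sample_skewness(values):
    """Moment-based sample skewness (0 for a perfectly symmetric sample)."""
    mean = statistics.fmean(values)
    sd = statistics.pstdev(values)
    return statistics.fmean([((x - mean) / sd) ** 3 for x in values])

def simulate(sizes=(10, 30, 100), replicates=6, seed=2010):
    """Skewness of repeated normal and right-skewed samples per sample size."""
    rng = random.Random(seed)
    results = {}
    for n in sizes:
        normal = [sample_skewness([rng.gauss(100, 15) for _ in range(n)])
                  for _ in range(replicates)]
        skewed = [sample_skewness([rng.lognormvariate(1.5, 0.5) for _ in range(n)])
                  for _ in range(replicates)]
        results[n] = (normal, skewed)
    return results

for n, (normal, skewed) in simulate().items():
    print(n, "normal:", [round(s, 2) for s in normal],
          "right-skewed:", [round(s, 2) for s in skewed])
```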


Histograms of normally distributed data of sample size 10:

[Figure: histograms of several simulated samples; x-axis: Normal (50 to 125), y-axis: Count]

Histograms of normally distributed data of sample size 30:

[Figure: histograms of several simulated samples; x-axis: Normal (50 to 125), y-axis: Count]

Histograms of normally distributed data of sample size 100:

[Figure: histograms of several simulated samples; x-axis: Normal (50 to 125), y-axis: Count]


Histograms of right-skewed data of sample size 10:

[Figure: histograms of several simulated samples; x-axis: rightskewed (2.5 to 12.5), y-axis: Count]

Histograms of right-skewed data of sample size 30:

[Figure: histograms of several simulated samples; x-axis: rightskewed (2.5 to 12.5), y-axis: Count]

Histograms of right-skewed data of sample size 100:

[Figure: histograms of several simulated samples; x-axis: rightskewed (2.5 to 12.5), y-axis: Count]


Comparing an error bar plot, with bars extending two SDs from the mean, to a dot plot may help in deciding whether the normal assumption can be adopted. Both charts must use the same scaling on the vertical axis. If the error bar plot reflects the range of the data as shown by the dot plot, then it is meaningful to describe the distribution using the mean and the SD. If the error bar plot gives the impression that the distribution is shifted up or down compared to the dot plot, then the distribution should be described using nonparametric statistics.
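The visual comparison can be supported by a few numbers. This Python sketch (hypothetical function name and data) computes mean ± 2 SD together with the observed minimum and maximum. For the right-skewed sample, mean − 2 SD lies far below the smallest observation while the largest observation lies above mean + 2 SD, which is the downward shift described above. This is a rough aid, not a formal check of normality.

```python
import statistics

def two_sd_check(values):
    """Compare the range mean ± 2 SD with the observed minimum and maximum."""
    mean = statistics.fmean(values)
    sd = statistics.stdev(values)
    return {"lower": mean - 2 * sd, "upper": mean + 2 * sd,
            "min": min(values), "max": max(values)}

# Hypothetical right-skewed sample (cm)
skewed = [70, 72, 74, 75, 77, 80, 84, 90, 105, 130]
print(two_sd_check(skewed))
```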

As an example, let us first consider the variable “height”. A comparison of

the error bar plot and the dot plot shows good agreement:

[Figure: error bar plot (mean ± 2 SD) and dot plot of Height (cm) by Sex, both with the same vertical axis (160 to 200 cm); male n = 40, female n = 43]

The situation is different for the variable “Abdominal girth” (waist). Both error bars seem to be shifted downwards, which results from the right-skewed distribution of abdominal girth. Therefore, nonparametric statistics should be used for its description.


[Figure: error bar plot (mean ± 2 SD) and dot plot of Abdominal girth (cm) by Sex, both with the same vertical axis (50 to 125 cm); male n = 40, female n = 43]

In practice, box plots are used more frequently than error bar plots to show distributions in subgroups. With SPSS, the mean can also be marked in a box plot (see later). However, error bar plots are useful for illustrating repeated measurements of a variable, as they need less space than box plots.

Computing the statistics

Statistical measures describing the position or spread of a distribution can

be computed using the menu Analyze-Tables-Custom Tables.... To

compute measures for the variable “height”, in separate rows according to

the groups defined by sex, choose that menu and first drag the variable

sex to the Rows area in the preview field. Then drag the height variable to

the produced two-row table such that the appearing red frame covers the

right-hand part of the male and the female cells:


We have seen from the considerations above that height can be assumed

to be normally distributed. Therefore, the most concise description uses

mean and standard deviation. Additionally, the frequency of each group

should be included in a summary table. Press Summary Statistics...

and select Count, Mean and Std. Deviation:

After pressing Apply to Selection and OK, the output table reads as follows:


Sex       Variable       Count   Mean     Std Deviation
male      Height (cm)    40      179.38   6.83
female    Height (cm)    43      167.16   6.50

The variable “Abdominal girth” is best described using nonparametric

measures. These statistics have to be selected in the Summary

Statistics... submenu:

Sex       Variable               Count   Median   Percentile 25   Percentile 75   Range
male      Abdominal girth (cm)   40      91.50    86.00           101.75          51.00
female    Abdominal girth (cm)   43      86.00    75.00           103.00          86.00
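Outside SPSS, tables like the two above can be produced by grouping the observations. This Python sketch is only an illustration with hypothetical records; it computes both the parametric statistics (count, mean, SD) and the nonparametric ones (median, 25th and 75th percentiles, range) per group, and its percentile convention (`method="exclusive"`) may differ from the one SPSS uses.

```python
import statistics
from collections import defaultdict

def summarize_by_group(records):
    """Count, mean, SD, median, quartiles and range per group."""
    groups = defaultdict(list)
    for group, value in records:
        groups[group].append(value)
    table = {}
    for group, values in groups.items():
        p25, median, p75 = statistics.quantiles(values, n=4, method="exclusive")
        table[group] = {"count": len(values),
                        "mean": statistics.fmean(values),
                        "sd": statistics.stdev(values),
                        "median": median, "p25": p25, "p75": p75,
                        "range": max(values) - min(values)}
    return table

# Hypothetical (sex, height in cm) records
records = [("male", 170), ("male", 180), ("male", 190),
           ("female", 160), ("female", 165), ("female", 170)]
for sex, summary in summarize_by_group(records).items():
    print(sex, summary)
```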


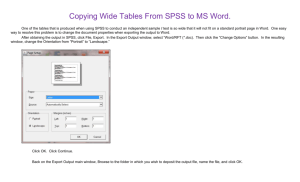

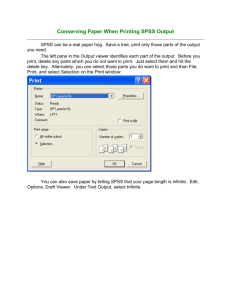

Transferring output to other programs

After exploratory data analysis, some of the results will be used to present the study results. Parts of the output collected in the SPSS Viewer can be copied and pasted into other programs, e. g., MS PowerPoint or MS Word 2007.

• Charts can simply be selected and copied (Ctrl-C; Strg-C on German keyboards). In the target program (Word or PowerPoint 2007), choose Edit-Paste (Ctrl-V; Strg-V) to paste the chart as an editable graph.

• Tables are transferred as follows: select the table in the SPSS Viewer, choose Edit-Copy (Ctrl-C; Strg-C) to copy it, and in the target program choose Edit-Paste (Ctrl-V; Strg-V) to paste it as an editable table.


Summary

To decide which measure and chart to use for describing the distribution of a scale variable, first inspect the distribution using histograms (by subgroup) or box plots.

Check histogram or box plot:

[Figure: example histograms, error bar plots and box plots of Height (cm) and Abdominal girth (cm) by Sex]

Normally distributed?

• Measure of center: Mean

• Measure of spread: Standard Deviation

• Chart: Error bar plot, or box plot (including means)

Not normally distributed?

• Measure of center: Median

• Measure of spread: Large N: 1st and 3rd quartiles (additionally, minimum and maximum may be used); Small N: minimum and maximum

• Chart: Box plot

The section “Further graphs” shows how to include means into box plots.


2.6. Outliers

Some extreme values in a sample may affect results disproportionately. Such values are called outliers. The decision on how to proceed with outliers depends on their origin:

• The outliers were produced by measurement errors or data entry errors, or may be the result of atypical circumstances at the time of measurement. If the correct values are not available (e. g., because of missing case report forms, or because the measurement cannot be repeated), then these values should be excluded from further analysis.

• If an error or an atypical concomitant circumstance can be ruled out, then the outlying observation must be regarded as part of the distribution and should not be excluded from further analysis.

An example, taken from the data file “Waist-Hip.sav”:

There appears to be an observation with a height of less than 100 cm, which points towards a data entry error. Such implausible values are easily detected using box plots. The outlier is identified by its Pat.No., which has been set as Point ID variable. Alternatively, we could identify the outlier by sorting the data set by height (Data-Sort Cases); the outlying observation then appears in the first row of data. To exclude the observation from further analyses, choose Data-Select Cases... and check the option If condition is satisfied.

Press If... and specify height > 100. Press Continue and OK. Now the implausible observation is filtered out before analysis, and a new variable “filter_$” is created in the data set. If the box plot request is repeated, however, the vertical axis still covers the same range as before.
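The sorting and filtering steps correspond to a few lines of code. In this Python sketch the patient numbers and heights are hypothetical; the list `filter_flag` plays the role of the variable “filter_$”, marking which cases enter further analyses instead of deleting any case.

```python
# Hypothetical (patient number, height in cm); 71.0 mimics a data entry error.
records = [(101, 178.0), (102, 165.5), (103, 71.0), (104, 182.0), (105, 170.0)]

# Like Data-Sort Cases: after sorting by height the implausible value comes first.
by_height = sorted(records, key=lambda r: r[1])
print("lowest height:", by_height[0])

# Like Data-Select Cases with the condition height > 100: a 0/1 filter flag
# marks which cases enter further analyses; no case is deleted.
filter_flag = [1 if height > 100 else 0 for _, height in records]
selected = [r for r, keep in zip(records, filter_flag) if keep]
print("cases used:", [pid for pid, _ in selected])
```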


The scaling of the vertical axis must be corrected by hand, by double-clicking the chart and subsequently double-clicking the vertical axis:


In the Scale tab uncheck the Auto option at Minimum and insert a value of

150, say. Finally, after rescaling the vertical axis we obtain a correct

picture:


2.7. Missing values

Missing values (sometimes called “not-available values” or NA’s) are a problem common to all kinds of medical data. In the worst case, they can lead to biased results. There are several reasons for the occurrence of missing data:

• Breakdown of measurement instruments

• Retrospective data collection may lead to values that are no longer available

• Imprecise or fragmentary collection of data (e. g., questionnaires with some questions the respondent refused to answer)

• Missing values by design: some values may only be available for a subset of patients

• Drop-out of patients from studies

o Patients refusing further participation

o Patients lost to follow-up

o Patients who have died

Reports on studies following patients over a long time should always include information about the frequency and timing of drop-outs. This is best accomplished by a flow chart, which is, e. g., mandatory for any study published in the British Medical Journal. Such a flow chart may resemble the following, taken from Kendrick et al (2001) (http://bmj.bmjjournals.com/cgi/content/abstract/322/7283/400, a comparison of referral to radiography of the lumbar spine and conventional treatment for patients with low back pain):


Furthermore, values may be only partly available (censored). This is quite typical for many medical research questions. Censored means that we do not know the exact value of the variable; all we know is an interval that contains the exact value:

• Right censoring: quite common when studying survival times of patients. Assume, e. g., that we are interested in the survival of patients after colon cancer surgery. If a patient is still alive at the time of statistical analysis, then all we know is a minimum survival time of, say, 7.5 years for this patient. His true but unknown survival time lies between 7.5 years and infinity. The upper limit of infinity is a mathematical convenience; in our example, an upper limit of, say, 150 years would suffice to cover all possible values of human survival times.

• Left censoring: here a maximum for the unknown value is known. This happens quite often in laboratory settings, where values smaller than a certain limit of detection (LOD) cannot be observed. All we know is that the true value falls within the interval between 0 and the LOD.

• Interval censoring: both a lower and an upper limit for the unknown value are known. For instance, suppose we are interested in the age at which a person becomes HIV-positive. The person is medically examined at ages 22.1 years and 27.8 years. If the HIV test is negative at the first examination but positive at the second, then we know that the HIV infection happened between ages 22.1 and 27.8 years, but we do not know the exact age at which the infection occurred.

According to their impact on results, one roughly distinguishes between

• Values missing at random: the reason for missing values may be known, but is independent of the variable of interest

• Nonignorable missing values (also called missing not at random): the reason for missing values depends on the magnitude of the (unobserved) true value; e. g., if patients in good condition refuse to show up at follow-up examinations


Strategies for data analysis in the presence of missing values include:

1. Complete case analysis: patients with missing values are excluded

from analysis

2. Reconstructing missing values (imputation):

o In longitudinal studies with missing measurements, often the

last available value is carried forward (LVCF) or a missing

value is replaced by interpolating the neighboring observations

o Missing values are replaced by values from similar individuals

(nearest neighbor imputation)

o Missing values are replaced by a subgroup mean (mean

imputation)

3. Application of specialized techniques to account for missing or

censored data (e. g., survival analysis)
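The first two strategies can be sketched in Python as follows, with missing values represented by `None` (an assumption of this sketch); the function names are hypothetical. For imputation by a subgroup mean, `mean_imputation` would be applied separately within each subgroup. Note that a value missing before the first observation cannot be carried forward and stays missing.

```python
import statistics

def complete_cases(rows):
    """Complete case analysis: keep only rows without a missing value (None)."""
    return [row for row in rows if None not in row]

def last_value_carried_forward(series):
    """LVCF: replace each missing value by the last observed value, if any."""
    filled, last = [], None
    for value in series:
        if value is not None:
            last = value
        filled.append(last if value is None else value)
    return filled

def mean_imputation(values):
    """Replace missing values by the mean of the observed values."""
    observed = [v for v in values if v is not None]
    mean = statistics.fmean(observed)
    return [mean if v is None else v for v in values]

print(complete_cases([(1, 2), (None, 3), (4, 5)]))
print(last_value_carried_forward([5, None, 7, None, None]))
print(mean_imputation([1, None, 3]))
```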

Any method attempting to reconstruct missing values (second strategy, see above) needs to assume that the values are missing at random. Unlike, e. g., the normality assumption, this assumption cannot be checked directly from the observed data. Therefore, the first option (complete case analysis) is often preferred unless special techniques are available (e. g., for survival data in the presence of right censoring). The better alternative is an improved study design that tries to avoid missing values.

Especially in cases where nonignorable missing values are plausible, a careful sensitivity analysis should be performed.


Example 2.7.1: Quality of life. Consider a variable measuring quality of

life (a score consisting of 10 items) one year after an operation. Suppose that

all questionnaires have been filled in completely. Then the histogram of scores

looks like the following:

[Figure: histogram of Quality of life (x-axis 0 to 120, y-axis Frequency); Mean = 50.8947, Std. Dev. = 20.01785, N = 114]

Now suppose that some patients could not answer some items, so that the score is missing for those patients (assuming the values are missing at random). The histogram of quality-of-life scores does not change much:

[Figure: histogram of Quality of life (x-axis 0 to 120, y-axis Frequency); Mean = 49.7727, Std. Dev. = 18.91584, N = 66]

Now consider a situation of nonignorable missing values: suppose that patients suffering from heavy side effects are not able to fill in the questionnaire. If the histogram is based on the data of the remaining patients, we obtain:

[Figure: histogram of Quality of life (x-axis 0 to 120, y-axis Frequency); Mean = 61.8429, Std. Dev. = 15.88421, N = 70]

The third histogram shows a clear shift towards the right, and its mean is more than 10 units higher than in the other two histograms. This reflects the bias that occurs if the missing-at-random precondition is not fulfilled.
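The effect in Example 2.7.1 can be imitated with simulated data. In this Python sketch everything is hypothetical: 114 scores drawn from a normal distribution with mean 50 and SD 20 (truncated at 0), every second patient missing for the missing-at-random case, and all scores below 35 missing for the nonignorable case. Because only low scores are removed in the second case, the mean of the remaining scores cannot be lower than the mean of all scores, mirroring the shift of the third histogram.

```python
import random
import statistics

rng = random.Random(1)
# Hypothetical quality-of-life scores for 114 patients (scores kept >= 0).
scores = [max(0.0, rng.gauss(50, 20)) for _ in range(114)]

# Missing at random: every second patient lacks a score, unrelated to its value.
mar = scores[::2]
# Nonignorable: patients with low scores (heavy side effects) do not respond.
mnar = [s for s in scores if s >= 35]

for label, sample in (("all", scores), ("MAR", mar), ("MNAR", mnar)):
    print(f"{label:4s} N={len(sample):3d} mean={statistics.fmean(sample):5.1f}")
```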


2.8. Further graphs

Dot plots with heavily tied data

With larger sample sizes and scale variables of moderate precision, data values are often tied, i. e., multiple observations assume the same value. A simple dot plot as shown above superimposes those observations, so that the density of the distribution at such values is obscured. To overcome that problem, a dot plot that puts tied values side by side can be created. First create a Simple Scatter plot as shown above for Abdominal girth by Sex in the “cholesterol.sav” data set. Then check the option Stack identical values in the Element Properties window:
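The idea behind stacking identical values can be sketched as follows: each group of tied values is spread out horizontally around its category position. This Python sketch computes such offsets; the function name and the step width of 0.1 are arbitrary assumptions, and the sketch does not reproduce SPSS's actual layout algorithm.

```python
from collections import Counter

def stack_tied_values(values, step=0.1):
    """Horizontal offsets that place tied values side by side in a dot plot."""
    counts = Counter(values)
    seen = Counter()
    points = []
    for v in values:
        k = seen[v]          # how many copies of v were placed already
        seen[v] += 1
        # centre the row of tied dots around offset 0
        points.append(((k - (counts[v] - 1) / 2) * step, v))
    return points

print(stack_tied_values([90, 90, 90, 85]))
```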


After rescaling the vertical axis to a minimum of 50 and a maximum of 150, the resulting chart looks as follows:

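The idea behind stacking identical values can be sketched in a few lines of Python. This is only an illustration of one way to spread out ties, not the algorithm SPSS uses internally: each value that occurs m times receives m horizontal offsets centred on the position of its group. The function name stack_ties, the step width and the girth values are hypothetical.

```python
from collections import Counter


def stack_ties(values, step=1.0):
    """Return (offset, value) pairs; tied values are spread side by side around 0."""
    totals = Counter(values)   # how often each value occurs in total
    seen = Counter()           # how many copies of each value were placed so far
    points = []
    for v in values:
        # centre the m copies of a value at offsets -(m-1)/2, ..., (m-1)/2 times step
        offset = (seen[v] - (totals[v] - 1) / 2) * step
        seen[v] += 1
        points.append((offset, v))
    return points


# Hypothetical abdominal girth values by sex (not taken from cholesterol.sav).
girth = {"female": [80, 85, 85, 90], "male": [95, 100, 100, 100, 105]}
for group_index, (sex, values) in enumerate(girth.items()):
    for offset, v in stack_ties(values, step=0.1):
        # horizontal position = group index plus a small offset for tied values
        print(sex, round(group_index + offset, 2), v)
```

Passing the resulting horizontal positions and values to a scatter-plot routine (for example matplotlib's `scatter`) would give a dot plot in which ties no longer hide each other; a small step keeps wide stacks from running into the neighbouring group.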


2.9. Exercises

The following exercises are useful for practising the concepts of descriptive statistics. All data files can be found in the folder “Chapter 2”. For each exercise, create a chart and a table of summary statistics.
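As an aid for the summary tables, the following Python sketch computes parametric (mean, standard deviation) and nonparametric (median, quartiles) statistics side by side, as asked for in Exercise 2.9.1. It is a hypothetical helper working on made-up numbers, not on the course data files. Quartiles use the default 'exclusive' method of Python's statistics.quantiles; other programs may define quartiles slightly differently, so small discrepancies are possible.

```python
import statistics


def summarize(values):
    """Parametric (mean, SD) and nonparametric (median, quartiles) summary of one group."""
    q1, median, q3 = statistics.quantiles(values, n=4)  # default 'exclusive' method
    return {
        "N": len(values),
        "mean": statistics.mean(values),
        "SD": statistics.stdev(values),
        "median": median,
        "q1": q1,
        "q3": q3,
    }


# Hypothetical treadmill times for two groups (the real data are in coronary.sav).
groups = {
    "healthy": [900, 1020, 1080, 1110, 1200, 1260],
    "diseased": [540, 600, 690, 720, 780, 1020],
}
print(f"{'group':<10}{'N':>4}{'mean':>9}{'SD':>9}{'median':>9}{'Q1':>9}{'Q3':>9}")
for name, values in groups.items():
    s = summarize(values)
    print(f"{name:<10}{s['N']:>4}{s['mean']:>9.1f}{s['SD']:>9.1f}"
          f"{s['median']:>9.1f}{s['q1']:>9.1f}{s['q3']:>9.1f}")
```

If mean and median (or SD and quartile distance) tell different stories for a group, this usually points to skewness or outliers, which the accompanying chart should make visible.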

2.9.1. Coronary arteries

Open the data set “coronary.sav”. Compare the achieved treadmill time between healthy and diseased individuals! Use parametric and nonparametric statistics and compare the results.

2.9.2. Cornea (cont.)

Open the data set “cornea.sav”. Compare the distribution of cornea temperature measurements between the age groups by creating a chart and a table of summary statistics.

2.9.3. Hemoglobin (cont.)

Open the data set “hemoglobin.sav”. Compare the level of hemoglobin between pre-menopausal and post-menopausal patients using adequate charts and statistics. Repeat the analysis for hematocrit (PCV).

2.9.4. Body-mass-index and waist-hip-ratio (cont.)

Open the data set “bmi.sav”. Compare body-mass-indices of the patients in the four categories defined by sex and disease. Use adequate charts and statistics. Repeat the analysis for waist-hip-ratio. Pay attention to outliers!

2.9.5. Cardiac fibrillation (cont.)

Open the data set “fibrillation.sav”. Compare body-mass-index between patients that were successfully treated and patients that were not (variable “success”). Repeat the analysis with subgroups defined by treatment. Repeat the analysis comparing potassium level and magnesium level between these subgroups.

2.9.6. Psychosis and type of constitution (cont.)

Open the data file “psychosis.sav”. Find an adequate way to depict graphically the distribution of constitutional type, grouped by type of psychosis. Define “frequency” as the frequency variable! Create a table of suitable statistics.

2.9.7. Down’s syndrome (cont.)

Open the data file “down.sav”.

Depict the distribution of mother’s age. Is it possible to compute the median?

Depict the proportion of children suffering from Down’s syndrome in subgroups defined by mother’s age. Is there a difference when compared to absolute counts?

2.9.8. Flow chart

Use the data file “patientflow.sav” and fill in the following chart showing the flow of patients through a clinical trial.


Registered patients N=
    Refused to participate N=
Randomized N=

                                     Active group N=      Placebo group N=
    Lost to follow up                N=                   N=
    Refused to continue              N=                   N=
    Died                             N=                   N=
3 weeks: Treated per protocol        N=                   N=
    Lost to follow up                N=                   N=
    Refused to continue              N=                   N=
    Died                             N=                   N=
6 weeks: Treatment completed
         per protocol                N=                   N=

2.9.9. Box plot including means

Use the data set “waist-hip.sav”. Generate box plots which include the means for the variables body-mass-index and waist-hip-ratio, grouped by sex.

2.9.10. Dot plot

Use the data set “waist-hip.sav”. Generate dot plots, grouped by sex, for the variables body-mass-index and waist-hip-ratio as described in section 2.5. Afterwards, generate dot plots which place tied values side by side as described in section 2.8.


Chapter 3

Probability

3.1. Introduction

In the first two chapters, various methods for presenting medical data have been introduced. These methods comprise graphical tools (box plot, histogram, scatter plot, bar chart, etc.) and statistical measures (mean, standard deviation, median, quartiles, quantiles, percentages, etc.).

These statistical tools have one particular purpose in the field of medicine: