Communication Studies 783 Notes

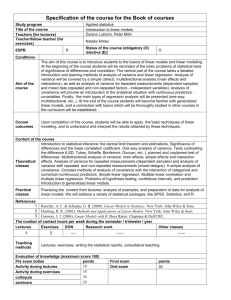
