Assignment 5 Solution


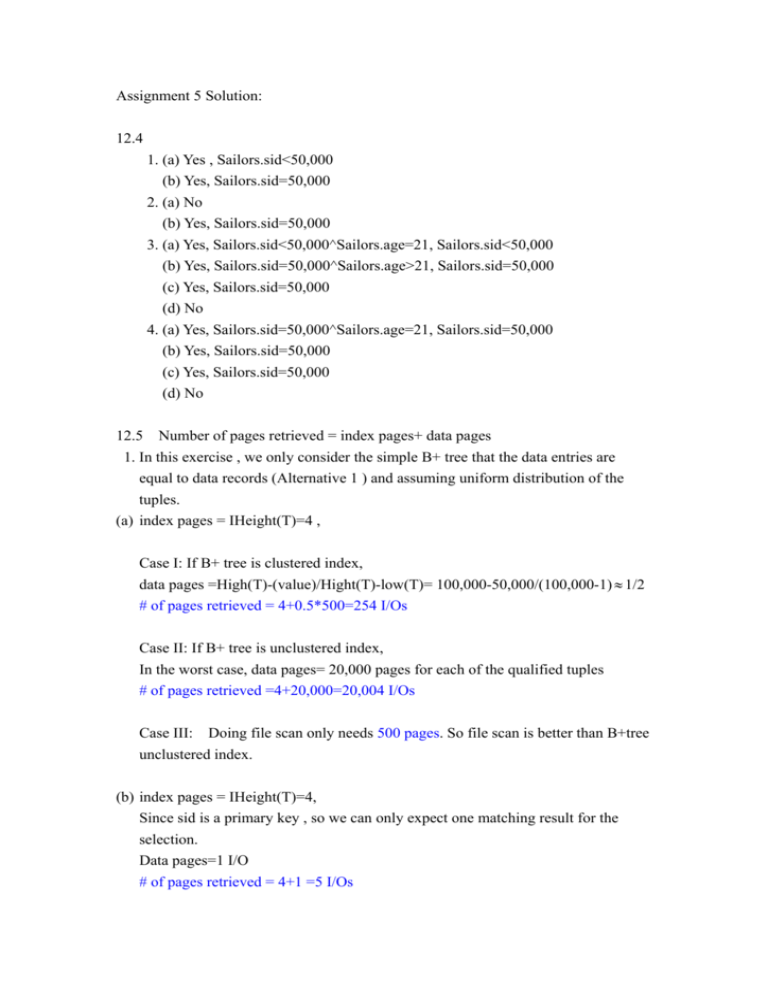

12.4

1. (a) Yes, Sailors.sid<50,000
   (b) Yes, Sailors.sid=50,000
2. (a) No
   (b) Yes, Sailors.sid=50,000
3. (a) Yes, Sailors.sid<50,000 ∧ Sailors.age=21, Sailors.sid<50,000
   (b) Yes, Sailors.sid=50,000 ∧ Sailors.age>21, Sailors.sid=50,000
   (c) Yes, Sailors.sid=50,000
   (d) No
4. (a) Yes, Sailors.sid=50,000 ∧ Sailors.age=21, Sailors.sid=50,000
   (b) Yes, Sailors.sid=50,000
   (c) Yes, Sailors.sid=50,000
   (d) No

12.5

Number of pages retrieved = index pages + data pages

1. In this exercise we consider only a simple B+ tree whose data entries are the data records themselves (Alternative 1), and we assume the tuples are uniformly distributed.

   (a) Index pages = IHeight(T) = 4.

   Case I: If the B+ tree is a clustered index, the reduction factor is
   $\frac{High(T) - value}{High(T) - Low(T)} = \frac{100{,}000 - 50{,}000}{100{,}000 - 1} \approx \frac{1}{2}$,
   so about half of the 500 data pages are read.
   Number of pages retrieved = 4 + 0.5 × 500 = 254 I/Os.

   Case II: If the B+ tree is an unclustered index, then in the worst case each of the 20,000 qualifying tuples costs one data page I/O, so data pages = 20,000.
   Number of pages retrieved = 4 + 20,000 = 20,004 I/Os.

   Case III: A file scan reads only 500 pages, so a file scan is better than the unclustered B+ tree index.

   (b) Index pages = IHeight(T) = 4. Since sid is a primary key, we expect exactly one matching tuple for the selection, so data pages = 1 I/O.
   Number of pages retrieved = 4 + 1 = 5 I/Os.

2. (a) A hash index cannot support a range selection, so it is not useful in this case and we must scan the file.
   Number of pages retrieved = 500 I/Os.

   (b) Index pages = IHeight(T) = 2, data pages = 1 I/O.
   Number of pages retrieved = 2 + 1 = 3 I/Os.

13.3

Assumption: the external merge-sort algorithm is used.

Total number of records = 4500
Record size = 48 bytes
Page size = 512 bytes
Control information per page = 12 bytes
Buffer pages = 4

(1) Space left for records per page = 512 - 12 = 500 bytes
Records per page = $\lfloor 500 / 48 \rfloor = 10$
Total pages = 4500 / 10 = 450
Number of runs = $\lceil 450 / 4 \rceil = 113$

(2) Number of passes = $\lceil \log_3 113 \rceil + 1 = 6$

(3) Total I/O cost = 2 × 450 × 6 = 5400 I/Os

(4) N is the number of pages in the file.
To sort in two passes with 4 buffer pages, we need $\lceil \log_3 \lceil N / 4 \rceil \rceil = 1$, so the maximum is N = 12 pages and the largest file holds 12 × 10 = 120 records.
With 257 buffer pages, N = 257 × 256 = 65,792 pages, so the largest file holds 65,792 × 10 = 657,920 records.

(5) Case I: index using Alternative 1.
Assuming 67% occupancy in the B+ tree leaf nodes, the number of leaf pages = 450 / 0.67 ≈ 672.
Total cost = cost of traversing from the root to the leftmost leaf + 672 pages.

Case II: index using Alternative 2, unclustered.
In the worst case, we scan the leaf pages of data entries and then retrieve one data page per record.
A data entry is a <key, rid> pair of size 4 + 8 = 12 bytes.
Assuming 67% occupancy in the leaf pages of data entries, the number of leaf pages = (4500 × 12) / (512 × 0.67) ≈ 158.
Total cost = 4500 + 158 = 4658 pages.

Case III: the largest file that can be sorted in two passes with 257 buffer pages, for the clustered and unclustered cases.
(i) B+ tree index using Alternative 1, assuming 67% occupancy:
Total cost = 65,792 / 0.67 ≈ 98,197 pages + cost of traversing from the root to the leftmost leaf.
(ii) B+ tree index using Alternative 2, unclustered, assuming 67% occupancy in the data entries:
Number of leaf pages = (657,920 × 12) / (512 × 0.67) ≈ 23,015.
Total cost = 657,920 + 23,015 = 680,935 pages.

14.4

R has 1000 pages and S has 200 pages.

1. Let the outer relation be S and the inner relation be R.
Total cost = 200 + 200 × 1000 = 200,200 pages.
The minimum number of buffer pages required is 3 (one input page each for R and S, plus one output page).

2. Total cost = $200 + \lceil 200 / (52 - 2) \rceil \times 1000 = 4{,}200$ pages.
The minimum number of buffer pages required is 52. If the block of buffer pages holding S has fewer than 50 pages (say 49), the number of scans of the inner relation rises to 5.

3. Since the number of buffer pages satisfies $52 > \sqrt{1000} > \sqrt{200}$, we can use the refinement of sort-merge join discussed on page 462 of the textbook.
Total cost = 3 × (1000 + 200) = 3600 pages.
With B = 25 buffer pages, the sorting pass splits R into 20 runs of size 50 (2B) and S into 4 runs of size 50 (2B), approximately. These 24 runs can be merged in the next pass, with one buffer page used for output. If B < 25, the merge cannot be completed in one pass.
So the minimum number of buffer pages required is 25.

4. S is the smaller relation (200 pages). Since $B = 52 > \sqrt{200}$, and assuming the hash function partitions the tuples uniformly, each partition of S fits in memory.
Total cost = 3(M + N) = 3 × (1000 + 200) = 3600 pages.
The minimum number of buffer pages for hash join is approximately $B > \sqrt{f \times 200}$, where f is the textbook's fudge factor for the in-memory hash table.

5. The optimal cost is achieved if each relation is read only once: store the entire smaller relation in memory and read each page of the larger relation once.
Total cost = 1000 + 200 = 1,200 I/Os.
Buffer pages required = pages of the smaller relation + one input page for the larger relation + one output page = 200 + 1 + 1 = 202 pages.

6. S.b is the primary key, so any tuple in R can match at most one tuple in S. The maximum number of result tuples therefore equals the number of tuples in R. R and S each hold 10 tuples per page, so R has 1000 × 10 = 10,000 tuples. A result tuple that joins R and S is twice the size of an input tuple, so only 5 result tuples fit on a page.
Total pages = 10,000 / 5 = 1000 × 2 = 2000 pages.

7. The foreign key constraint tells us that for every R tuple there is exactly one matching S tuple.
In page-oriented nested loops, we let the outer relation be R, because for each tuple of R we only have to scan S until a match is found. On average this scans 50% of S.
Total cost = 1000 + (200 / 2) × 1000 = 101,000 pages.
In block nested loops, we also let the outer relation be R.
Total cost = $1000 + (200 / 2) \times \lceil 1000 / 50 \rceil = 3{,}000$ pages.
Sort-merge join and hash join are not affected, and the minimum number of buffer pages they require remains the same.
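
The join cost formulas used in 14.4 can be checked with a few lines of Python. This is only a minimal sketch and not part of the original solution: the function names and the `inner_fraction` parameter are hypothetical, and the code models just the page I/O formulas quoted above (the cost of writing the result is ignored, as in the solution).

```python
import math

def page_nested_loops(outer_pages, inner_pages, inner_fraction=1.0):
    """Page-oriented nested loops: read the outer once, and scan
    `inner_fraction` of the inner once per outer page."""
    return outer_pages + round(outer_pages * inner_pages * inner_fraction)

def block_nested_loops(outer_pages, inner_pages, buffer_pages, inner_fraction=1.0):
    """Block nested loops: buffer_pages - 2 pages hold a block of the outer
    (one page is kept for the inner input and one for output)."""
    block = buffer_pages - 2
    outer_blocks = math.ceil(outer_pages / block)
    return outer_pages + round(outer_blocks * inner_pages * inner_fraction)

def three_pass_join(m_pages, n_pages):
    """Cost 3(M + N), the figure used for both the refined sort-merge join
    and hash join when enough buffer pages are available."""
    return 3 * (m_pages + n_pages)

R_PAGES, S_PAGES, BUFFERS = 1000, 200, 52

print(page_nested_loops(S_PAGES, R_PAGES))             # part 1: 200200
print(block_nested_loops(S_PAGES, R_PAGES, BUFFERS))   # part 2: 4200
print(three_pass_join(R_PAGES, S_PAGES))               # parts 3 and 4: 3600

# Part 7: R is the outer relation and, on average, half of S is scanned.
print(page_nested_loops(R_PAGES, S_PAGES, inner_fraction=0.5))            # 101000
print(block_nested_loops(R_PAGES, S_PAGES, BUFFERS, inner_fraction=0.5))  # 3000
```

Calling `block_nested_loops(S_PAGES, R_PAGES, 51)` shrinks the block for S to 49 pages and raises the number of inner scans from 4 to 5, which matches the remark in part 2.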
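
The external merge-sort arithmetic in 13.3 can be reproduced in the same way. The sketch below is an illustration under the assumptions stated in that section (runs of B pages in pass 0, then (B - 1)-way merges); the helper names are hypothetical. It counts merge passes by repeated division rather than a floating-point logarithm, which avoids rounding surprises.

```python
import math

def merge_passes(runs, fan_in):
    """Number of merge passes needed to reduce `runs` sorted runs to one."""
    passes = 0
    while runs > 1:
        runs = math.ceil(runs / fan_in)
        passes += 1
    return passes

def external_sort_stats(num_records, record_bytes, page_bytes, control_bytes, buffers):
    """Pages, initial runs, passes and total I/O for external merge sort."""
    records_per_page = (page_bytes - control_bytes) // record_bytes
    pages = math.ceil(num_records / records_per_page)
    runs = math.ceil(pages / buffers)                 # pass 0 makes runs of B pages
    passes = 1 + merge_passes(runs, buffers - 1)      # pass 0 plus the merge passes
    return {
        "records_per_page": records_per_page,
        "pages": pages,
        "runs": runs,
        "passes": passes,
        "total_io": 2 * pages * passes,               # each pass reads and writes every page
    }

def max_pages_two_passes(buffers):
    """Largest file (in pages) sortable in two passes: B runs-worth per
    (B - 1)-way merge, i.e. B * (B - 1) pages."""
    return buffers * (buffers - 1)

stats = external_sort_stats(4500, 48, 512, 12, 4)
print(stats)
print(max_pages_two_passes(4), max_pages_two_passes(257))  # 12 65792
```

With the figures from 13.3 the dictionary reports 10 records per page, 450 pages, 113 runs, 6 passes and 5400 I/Os.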