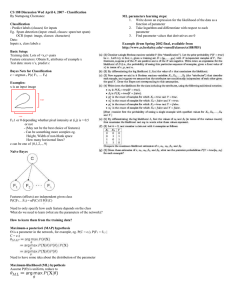

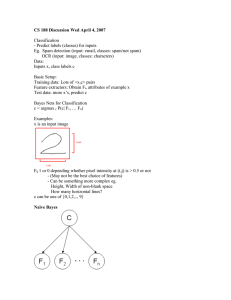

What you should be able to explain, what you should be able to do

Concept learning
explain
o type of learning task
o instances
o concepts
o version space
o more-general ordering
o sets S and G
do
o develop a simple example
o let the version space algorithm run on a very simple example

Decision tree learning
explain
o type of learning task
o instances
o decision tree
o attribute selection
o Shannon entropy
o how to prevent too fine-grained trees
o pruning
do
o develop a simple example
o construct a decision tree (estimating the information gain is sufficient; you need not compute the entropy formulas)

Perceptron learning
explain
o type of learning task
o instances
o model of a perceptron, bias weight instead of threshold
o linear separability, hyperplane, halfspaces
o example of a non-linearly separable problem
o how does the perceptron algorithm work
o what happens in the case of a linearly separable problem, and why
o what happens in the case of a non-linearly separable problem
do
o execute a few steps of the perceptron algorithm

Perceptron networks
explain
o false negatives etc.
o explain what the upstart algorithm does
o explain what the tower algorithm does
o explain the architecture of a Rosenblatt perceptron
o show examples of tasks a Rosenblatt perceptron cannot solve
o what is the conclusion for the architecture of multi-layer perceptrons

Multi-layer perceptron
explain
o explain architecture
o what sort of activation functions, derivatives
o forward pass, formulas
o error function
o gradient descent, partial derivatives
o problem of local minima, oscillation, flat plateaus, deep valleys
o heuristics to solve these problems: momentum, Super-SAB, RProp
o backward pass, chain rule, formulas
o explain applications

Generalization and learnability
explain
o overview of regularization techniques
o explain preference for small initial weights, weight decay
o optimal brain damage
o skeletonization
o learnability framework: unknown rule, unknown data distribution, hypothesis space
o training set and training error
o generalization error
o which parameters play a role: error rate, confidence, training set size
o learning a rectangle
o explain the definition of VC-dimension, give an example
o how are error rate and confidence related to training set size (no exact formula required, but explain the rough quality of the estimates)
do
o explain the recipe for an MLP project

Hopfield networks
explain
o explain architecture
o asynchronous and synchronous dynamics
o stable states
o energy function
o Hebb training
o storage capacity
o correlation-based learning
do
o let a small Hopfield network do a few asynchronous steps
o determine some (not all) stable states

Boltzmann machines
explain
o explain architecture
o stochastic dynamics
o influence of temperature
o Markov chains
o limit distribution, stable distribution
o being irreducible
o being aperiodic
o what is correlation training
o definition of Kullback-Leibler divergence
o correlation training is gradient descent w.r.t. Kullback-Leibler divergence
do
o compute the development of distributions over time for a very simple Markov chain

Support vector machines
explain
o explain architecture
o what is the margin, how is it computed
o normalize problem, final form of minimization problem
o minimization under equality constraints
o Lagrange multipliers
o convexity
o minimization under inequality constraints
o Karush-Kuhn-Tucker conditions
o primal-dual
o dual function for margin maximization problem
o what are support vectors, why are they particularly interesting
o kernel functions and kernel trick
o give some examples of kernel functions
o what is meant by soft margin
do
o solve a very simple minimization problem under equality constraints

Bayes learning and hidden Markov models
explain
o basic probabilities that occur in Bayes learning (5 different probabilities)
o Bayes rule
o ML and MAP learning
o relation to concept learning
o multi-layer perceptrons that learn the probability of classification
o what is meant by optimal Bayes classifier
o what is meant by naive Bayes classifier: explain an example
o what are hidden Markov models, give an example
o Viterbi algorithm
o present an example of a Bayes network

Principal Component Analysis networks
explain
o what is the meaning of the first, second, ... principal component
o what is the relation to the Hebb rule for a linear neuron
o does the Hebb rule lead to convergence of the weight vector
o normed Hebb rule: disadvantage
o explain Oja's rule
o explain Sanger's rule

Winner Take All networks
explain
o architecture
o what role does the winner neuron play
o relation to clustering
o what is k-means clustering
o how difficult is the general clustering problem
o explain main approaches to vector quantization (basics, improved approaches such as neural gas, learning vector quantization)
o what are self-organizing maps (SOMs)
o what sort of neighbourhoods are used in SOMs
o how are SOMs trained
o explain usage of SOMs with an example
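
As a way to practise the perceptron learning task listed above (execute a few steps of the perceptron algorithm, and see what happens for a linearly separable versus a non-linearly separable problem), the following is a minimal sketch and not taken from the course material. It assumes outputs in {0, 1}, a firing condition of activation > 0, a learning rate of 1, and a bias weight stored as `weights[0]` with constant input 1 in place of a threshold. The function names `predict`, `perceptron_step` and `train` are hypothetical.

```python
def predict(weights, x):
    """Return 1 if the weighted sum (including the bias weight) is positive, else 0."""
    # weights[0] is the bias weight; its input is the constant 1.
    activation = weights[0] + sum(w * xi for w, xi in zip(weights[1:], x))
    return 1 if activation > 0 else 0


def perceptron_step(weights, x, target, rate=1.0):
    """Apply one perceptron update for a single example and return the new weights."""
    error = target - predict(weights, x)  # +1, 0 or -1
    extended = [1.0] + list(x)            # prepend the constant bias input
    return [w + rate * error * xi for w, xi in zip(weights, extended)]


def train(samples, weights, rate=1.0, max_epochs=100):
    """Cycle through the samples until one epoch has no mistakes.

    Returns (weights, epoch_index) on success, or (weights, None) if
    max_epochs is reached without an error-free epoch.
    """
    for epoch in range(max_epochs):
        mistakes = 0
        for x, target in samples:
            if predict(weights, x) != target:
                mistakes += 1
                weights = perceptron_step(weights, x, target, rate)
        if mistakes == 0:
            return weights, epoch
    return weights, None


# Assumed toy data: logical AND is linearly separable, XOR is not.
AND_SAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR_SAMPLES = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

if __name__ == "__main__":
    print(train(AND_SAMPLES, [0.0, 0.0, 0.0]))  # reaches an error-free epoch
    print(train(XOR_SAMPLES, [0.0, 0.0, 0.0]))  # epoch index stays None
```

For AND the loop stops after finitely many updates, as the perceptron convergence theorem predicts for linearly separable data. For XOR no weight vector classifies all four points, so every epoch contains at least one mistake and the weights keep changing until `max_epochs` is reached.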