

CS855 Pattern Recognition and Machine Learning

Perceptron Learning In-class Exercise

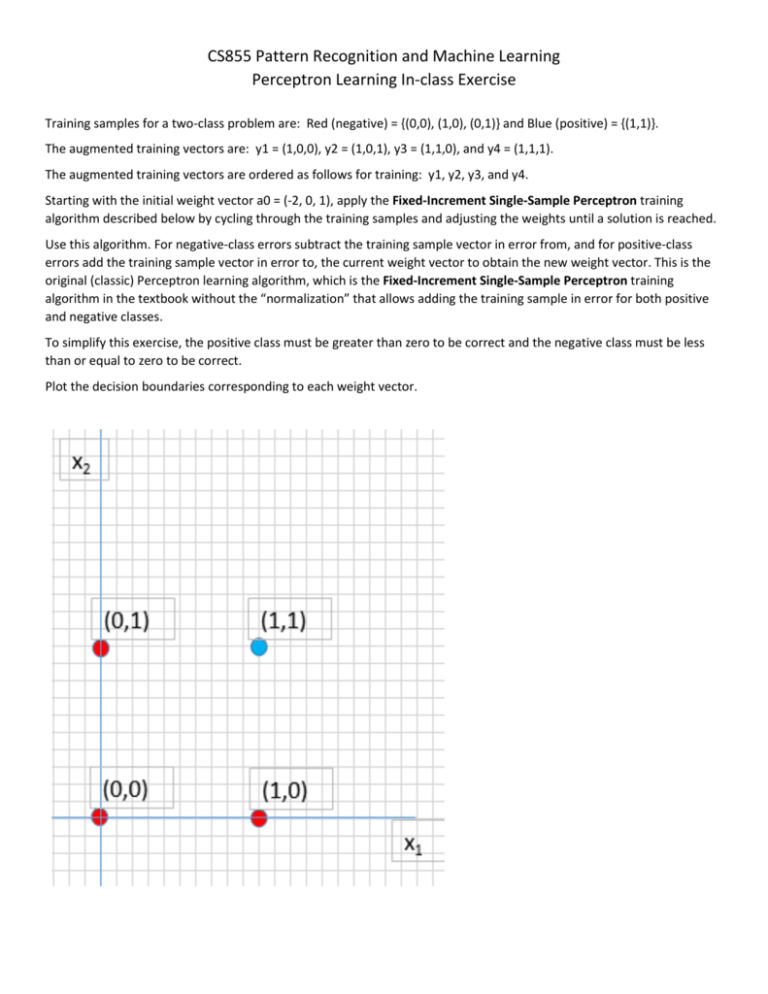

Training samples for a two-class problem are: Red (negative) = {(0,0), (1,0), (0,1)} and Blue (positive) = {(1,1)}.

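The augmented training vectors, formed by prepending a 1 to each sample, are: y1 = (1,0,0), y2 = (1,0,1), y3 = (1,1,0), and y4 = (1,1,1). Thus y1, y2, and y3 are the red (negative) samples (0,0), (0,1), and (1,0), and y4 is the blue (positive) sample (1,1).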
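A minimal Python sketch of the augmentation step, assuming each sample is stored as an (x1, x2) tuple. The helper name `augment` is hypothetical. Prepending a constant 1 lets the first weight component act as the bias term.

```python
def augment(sample):
    """Prepend a constant 1 to an (x1, x2) sample, giving (1, x1, x2)."""
    x1, x2 = sample
    return (1, x1, x2)

# Samples listed in the training order y1, y2, y3, y4.
RAW_IN_TRAINING_ORDER = [(0, 0), (0, 1), (1, 0), (1, 1)]
Y = [augment(s) for s in RAW_IN_TRAINING_ORDER]
print(Y)  # [(1, 0, 0), (1, 0, 1), (1, 1, 0), (1, 1, 1)]
```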

Present the augmented vectors to the algorithm in the order y1, y2, y3, y4, repeating this order on each pass.

Starting with the initial weight vector a0 = (-2, 0, 1), apply the Fixed-Increment Single-Sample Perceptron training algorithm described below, cycling through the training samples and adjusting the weights until a solution is reached, that is, until a complete pass through all four samples produces no errors.

Use the following update rule. When a negative-class sample is misclassified, subtract its augmented vector from the current weight vector; when a positive-class sample is misclassified, add its augmented vector to the current weight vector. The result is the new weight vector. This is the original (classic) Perceptron learning algorithm: the textbook's Fixed-Increment Single-Sample Perceptron without the “normalization” step, which negates the negative-class samples so that the sample in error is added for both the positive and negative classes.
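Written as an equation, the update rule reads as follows. The notation a(k) for the weight vector after k updates and y^k for the sample being examined is introduced here for illustration.

```latex
\mathbf{a}(k+1) =
\begin{cases}
\mathbf{a}(k) + \mathbf{y}^{k}, & \mathbf{y}^{k} \text{ is a misclassified positive-class sample},\\
\mathbf{a}(k) - \mathbf{y}^{k}, & \mathbf{y}^{k} \text{ is a misclassified negative-class sample},\\
\mathbf{a}(k), & \mathbf{y}^{k} \text{ is classified correctly.}
\end{cases}
```

With the textbook's normalization, each negative-class sample would be replaced by its negative before training, so the first two cases would merge into a single "add the sample in error" rule; this exercise deliberately skips that step.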

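To simplify this exercise, a positive-class sample is classified correctly only if a·y > 0, and a negative-class sample is classified correctly only if a·y ≤ 0, where a is the current weight vector and y is the augmented sample. In particular, a positive sample with a·y = 0 counts as an error.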
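The procedure above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not a reference implementation. Class labels are encoded as +1 (blue) and -1 (red), so adding `label * y` performs both the "add" and the "subtract" update. The function names `dot`, `is_correct`, and `train_perceptron` and the `max_passes` safeguard are hypothetical. Training stops after the first full pass with no errors.

```python
# Augmented training vectors y1..y4 in presentation order, with class labels
# (+1 = blue/positive, -1 = red/negative; this encoding is an assumption).
SAMPLES = [
    ((1, 0, 0), -1),  # y1 = augmented (0,0)
    ((1, 0, 1), -1),  # y2 = augmented (0,1)
    ((1, 1, 0), -1),  # y3 = augmented (1,0)
    ((1, 1, 1), +1),  # y4 = augmented (1,1)
]

def dot(a, y):
    """Dot product of weight vector a and augmented sample y."""
    return sum(ai * yi for ai, yi in zip(a, y))

def is_correct(a, y, label):
    """Positive samples need a.y > 0; negative samples need a.y <= 0."""
    g = dot(a, y)
    return g > 0 if label > 0 else g <= 0

def train_perceptron(a0, samples, max_passes=100):
    """Classic fixed-increment single-sample perceptron (no normalization).

    Returns every weight vector produced, starting with a0.
    """
    a = tuple(a0)
    history = [a]
    for _ in range(max_passes):
        errors = 0
        for y, label in samples:
            if not is_correct(a, y, label):
                # Add a misclassified positive sample, subtract a negative one.
                a = tuple(ai + label * yi for ai, yi in zip(a, y))
                history.append(a)
                errors += 1
        if errors == 0:  # a full pass with no errors: solution reached
            return history
    raise RuntimeError("no solution found within max_passes")

weights = train_perceptron((-2, 0, 1), SAMPLES)
for k, a in enumerate(weights):
    print(k, a)
# 0 (-2, 0, 1)
# 1 (-1, 1, 2)
# 2 (-2, 1, 1)
# 3 (-1, 2, 2)
# 4 (-2, 2, 1)
```

The `max_passes` limit only guards against data that is not linearly separable; for these four samples the loop stops on its own.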

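Plot the decision boundary corresponding to each weight vector, from a0 through the final solution. Each boundary is the line in the (x1, x2) plane on which a·y = 0.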
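Writing a weight vector as (b, w1, w2), its boundary b + w1·x1 + w2·x2 = 0 is the line x2 = -(b + w1·x1)/w2 whenever w2 ≠ 0, and the vertical line x1 = -b/w1 otherwise. The sketch below assumes matplotlib is available for drawing; the names `boundary_line` and `plot_boundaries` and the axis limits are hypothetical choices, and matplotlib is imported only inside the plotting function so `boundary_line` works without it.

```python
RED = [(0, 0), (1, 0), (0, 1)]   # negative-class samples
BLUE = [(1, 1)]                  # positive-class samples

def boundary_line(a):
    """Return (slope, intercept) of b + w1*x1 + w2*x2 = 0 solved for x2,
    or None when the line is vertical (w2 == 0)."""
    b, w1, w2 = a
    if w2 == 0:
        return None
    return (-w1 / w2, -b / w2)

def plot_boundaries(weights, limits=(-0.5, 2.5)):
    """Draw each boundary a.y = 0 over the training samples (needs matplotlib)."""
    import matplotlib.pyplot as plt  # lazy import: only needed for plotting
    fig, ax = plt.subplots()
    ax.scatter(*zip(*RED), color="red", label="negative")
    ax.scatter(*zip(*BLUE), color="blue", label="positive")
    for k, a in enumerate(weights):
        line = boundary_line(a)
        if line is None:
            # Vertical boundary; assumes w1 != 0 (a is not (b, 0, 0)).
            ax.axvline(-a[0] / a[1], label=f"a{k} = {a}")
        else:
            slope, intercept = line
            xs = list(limits)
            ax.plot(xs, [slope * x + intercept for x in xs], label=f"a{k} = {a}")
    ax.set_xlabel("x1")
    ax.set_ylabel("x2")
    ax.set_xlim(*limits)
    ax.set_ylim(*limits)
    ax.legend()
    return fig
```

For example, passing the list of weight vectors produced during training to `plot_boundaries` and then calling `fig.savefig("boundaries.png")` on the returned figure writes all boundaries to one image; the initial vector a0 = (-2, 0, 1) gives the horizontal line x2 = 2.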