Week 11 - Naive Bayes, Perceptron

advertisement

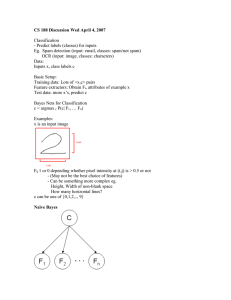

CS 188 Discussion Wed April 4, 2007 - Classification

By Nuttapong Chentanez

Classification

- Predict labels (classes) for inputs

Eg. Spam detection (input: email, classes: spam/not spam)

OCR (input: image, classes: characters)

Data:

Inputs x, class labels c

Basic Setup:

Training data: Lots of <x,c> pairs

Feature extractors: Obtain Fi, attributes of example x

Test data: more x’s, predict c

Bayes Nets for Classification

c = argmax c P(c| F1, … Fn)

Examples:

x is an input image

Fij 1 or 0 depending whether pixel intensity at (i,j) is > 0.5

or not

- (May not be the best choice of features)

- Can be something more complex eg.

Height, Width of non-blank space

How many horizontal lines?

c can be one of {0,1,2,.., 9}

Naïve Bayes

Features (effects) are independent given class

P(C|F1…Fn) = αP(C) Π P(Fi|C)

i

Need to only specify how each feature depends on the class

What do we need to learn (what are the parameters of the network)?

How to learn them from the training data?

Maximum-a posteriori (MAP) hypothesis

Ө is a parameter in the network, for example, eg. P(C = c1), P(F1 = f1,1 |

C = c1)

Need to have some idea about the distribution of the parameter

Maximum-likelihood (ML) hypothesis

Assume P(Ө) is uniform, reduce to

ML parameters learning steps:

1. Write down an expression for the likelihood of the data as a

function of parameter

2. Take logarithm and differentiate with respect to each

parameter

3. Find parameter values that derivatives are 0

Example (from Spring 2002 final, available from

http://www.cs.berkeley.edu/~russell/classes/cs188/f05/)

Generalization and Over fitting

ML is easier but can have problem of over fitting.

Eg. If I flip a coin once, and it’s heads, what is P(heads)?

How about 10 times, with 8 heads?

How about 10M times with 8M heads?

Idea: Small data-> trust prior, Large Data -> trust data

Multiclass perceptron

If we have > 2 classes, have weight vector for each class, calculate an

activation for each class, highest activation wins

Laplace Smoothing,

assume seeing every outcome 1 extra times.

eg. for example (a) above, add p and n by 1

for example (b) above. append entries for all possible values of

X1,X2,Y (not so

useful in this case)

Generative vs. Discriminative

Generative: eg. Naïve Bayes: Build causal model of the variables, then

query the model for causes given evident

Discriminative: eg. Perceptron: No causal model , no Bayes rule, no

prob (mostly).

Try to predict output directly. (Mistake driven rather than model

driven)

Perceptron Update Rules

Start with 0 weight

Try to classify

If correct, no change.

If wrong, lower score of wrong answer, raise score of

right answer (c* is the correct answer)

Binary Perceptron

This is not the ideal update rules: Better update rule will result in one of

the State of the art classifier (SVM), when used with the idea presented

below (beyond the scope of this class)

Non-Linear Seperators

Original feature space can always be mapped into higher dimension

where it’s linearly seperable

If activation is positive, output 1, negative, output 0

Eg.

i1 i2 y0

0 0 0

0 1 0

1 0 0

1 1 1

How to represent AND?

Geometric Interpretation

Treat input as points in high dimensional space, find a hyper plane that

separate points into two group. Will work perfectly if data is “linearly

separable”

Note: Summation Dot product