Distributed Observation-Interpretation Networks for the Human Genome Project and Beyond

Christopher J. Lee∗

April 26, 2002

∗ Department of Chemistry and Biochemistry, University of California, Los Angeles, California 90095, leec@mbi.ucla.edu. Work supported by NSF grant IIS 0082964. Project home page: www.bioinformatics.ucla.edu/leelab/db

Abstract

Multi-agent networks are an attractive solution for many problems. A key challenge is finding ways to break large, coupled problems into subtasks that can be completed in parallel but which still ensure a valid global solution. This creates a combined problem of algorithmic decoupling and inferential decoupling. I argue that a general key for solving this problem is the concept of inferential integrity, meaning that a parallel algorithm guarantees adherence to rigorous statistical inference rules globally, invariant to the grouping or ordering of how observations are processed by independent agents. I describe a series of properties of Hidden Markov Model computations which enable large, coupled computations to be split up while guaranteeing inferential integrity. We have used this approach extensively for analyses of the human genome and its internal complexity.

1 Introduction

The construction of autonomous intelligent networks is a large problem requiring solutions to at least three very different kinds of problems:

• A Coordination problem: How should the activities of many independent agents be coordinated [1]? How should work be distributed among them? I will refer to this category as the question of what agent model is preferred for a given problem.

• A Computational Complexity problem: a network is typically designed to solve some problem, and its design is in effect an algorithm for solving that problem. This algorithm must break the computational complexity of the problem down to an order that the network can perform in an acceptable amount of time for the range of problem sizes it will encounter [2]. Moreover, the algorithm must be a parallel algorithm [3]. In other words, it must break the problem down into quasi-independent computations by the individual agents. This allows the network to be scalable for larger problems simply by adding more agents. I will refer to this as the algorithmic decoupling problem.

• An Inference problem: the network must be able to make accurate inferences about entities and events in its environment [4]. Moreover, to achieve significant autonomy, individual agents in the network also need this ability to some extent. How can we attain this, and also combine the inferences of independent agents in a way that ensures an optimal overall inference by the network (even when individual agents may disagree)? I will refer to this as the inference decoupling problem.

In this paper, I will present a simple model of “Distributed Interpretation-Observation Networks” that we have used extensively for large-scale scientific computations such as analysis of the human genome [5, 6, 7]. This model attempts to address all three of these aspects in a single consistent way, as I will illustrate with an example application (DECON) that uses this network model to analyze the vast amount of raw experimental data for candidate human genes and their complex structures in the human genome. The problem of finding and understanding human genes has turned out to be surprisingly difficult [8], and has driven the development of our agent model, because such a distributed model is required to solve it in a scalable way.

2

1-t

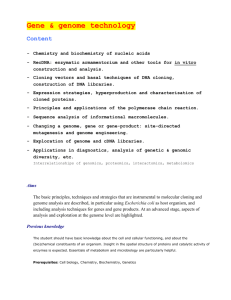

Figure 1: Modeling genes as a graph of directed

edges, whose weights τ reflect the transition probabilities within the graph.

2.1 Algorithmic Decoupling

Let’s consider a simple problem of interpreting

event sequences. For example, the Human Genome

Project had to infer from many short sequencing observations the complete sequence and gene

structure of the human genome [5]. Assume

there exists a hidden state graph G consisting of

nodes representing states σ connected by directed,

weighted edges representing state transitions τ .

Assume that each state σ emits observable events

e with some probability p(e|σ), drawn from an “alphabet” α of possible events e ∈ α. Both the states

σ, the emission probabilities p(e|σ) and transition probabilities τ1→2 ≡ p(σ2 |σ1 ) are hidden (unknown, and not directly observable). These transition probabilities are normalized. In other words,

for all the edges that go from a given node i to any

other node j,

X

τi→j = 1

Autonomy Challenges

Building networks of agents with increasing autonomy can be considered to be a gradual transition from supervised learning algorithms to unsupervised learning algorithms. In the first case, a

training set of fully and correctly classified data is

analyzed by a supervised learning algorithm (such

as a Hidden Markov Model [9]) to produce a set of

optimal parameters for a model that will best predict the correct classifications of new data [10]. In

the second case, no classification of the training set

is provided initially, and the algorithm must itself

discover the classification in the data (for example by clustering). In both cases the goal of training is to produce a set of optimized model parameters, that are then applied to classify real-world

data. However, in the unsupervised case there is

no convenient separation between the problems of

training the model (from a given classification) and

of prediction (from a given model). Instead both

are joined as a coupled computation, producing a

potentially catastrophic explosion in the computational complexity of the problem.

j

Rather than assuming that there is one state sequence σ1 σ2 ... which we must infer from the set of

observed events, the τ transition probabilities represent a “hidden mixture” of many possible hidden

state sequences. Different event sequences may

have been emitted by entirely different state sequences within the graph. The complexity of this

2

hidden mixture is controlled by the τ parameters.

If they are all binary (τ = 0 or 1), the graph consists of a single unbranched path (state sequence),

whereas fractional τ values represent nodes with

multiple allowed outgoing edges–i.e. branch points

where more than one path is possible (figure 1).

This property has been essential for our detection

of the diversity that exists in the human genome

(i.e. that it is different in different people, and expressed differently in different cells) [6, 7].
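The normalization condition and the “hidden mixture” idea can be made concrete with a minimal sketch. The state names and τ values below are hypothetical and chosen purely for illustration; the sketch also assumes an acyclic graph so that paths can be enumerated directly, which the model itself does not require.

```python
from math import isclose

# Hypothetical toy graph: each state maps to {next_state: tau}.
# State names and tau values are illustrative assumptions, not from the paper.
TRANSITIONS = {
    "start": {"exon1": 1.0},
    "exon1": {"exon2": 0.7, "exon3": 0.3},  # branch point: fractional tau
    "exon2": {"exon3": 1.0},
    "exon3": {"end": 1.0},
    "end": {},
}

def is_normalized(transitions):
    """Check that outgoing tau values sum to 1 for every non-terminal state."""
    return all(
        isclose(sum(edges.values()), 1.0)
        for edges in transitions.values()
        if edges
    )

def enumerate_paths(transitions, state="start", end="end", prob=1.0, prefix=()):
    """Yield (path, probability) for every path from `state` to `end`.

    Assumes the graph is acyclic, so the recursion terminates.
    Edges with tau == 0 are treated as absent.
    """
    path = prefix + (state,)
    if state == end:
        yield path, prob
        return
    for nxt, tau in transitions[state].items():
        if tau > 0.0:
            yield from enumerate_paths(transitions, nxt, end, prob * tau, path)

paths = list(enumerate_paths(TRANSITIONS))
for path, prob in paths:
    print(" -> ".join(path), prob)
```

With the fractional τ values at `exon1`, two paths carry the probability mass between them. Setting those two values to 1 and 0 collapses the mixture to a single unbranched path, which is the binary case described above.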

Under this model, any traversal through G forms a Markov chain, and we can make inferences about G based on a large number of observed event sequences o = {e1 e2 ...} using the Hidden Markov Model approach [9]. This is a classic machine learning problem, applicable to many scenarios such as speech recognition (inferring the phonemes or words a person has pronounced, on the basis of the observed audio signal) [11]. Generally speaking, this involves calculating the posterior probability of the graph model G given a set of observations O via Bayes’ Law

$$p(G|O) = \frac{p(O|G)\,p(G)}{\sum_{\forall g} p(O|g)\,p(g)} \tag{1}$$

and finding the model G∗ that maximizes this.

If we wished to solve this problem with a network of agents performing unsupervised learning, what algorithmic challenges would we have to overcome? Let’s consider the ways in which different aspects of this problem are coupled:

• The model G and observations O are distributable: because the model obeys the Markov chain property, and the observations consist of many independent event sequences o, in principle the calculation of the total probability of the observations over the set of possible models p(O|G) can be broken down into many separate calculations. First, the independent observations O = {o1 o2 ...} simply factor

$$p(O|G) = \prod_{o \in O} p(o|G) \tag{2}$$

allowing the calculations for different observations o to be performed separately. I will refer to this as observation factoring. Second, because the model obeys the Markov chain property [12], the probability of a single observation can be broken down into separate contributions for disjoint paths π through G.

$$p(o|G) = \sum_{\pi \in G} p(o|\pi)\,p(\pi|G) \tag{3}$$

where the π are disjoint paths through G. For the speech recognition example, for one audio observation o, p(o|“Wachovia Bank”) and p(o|“watch over your bank”) can be calculated separately. This implies that the calculation could be distributed efficiently over a network of many agents. I will refer to this as Markov independence.

• Global observation coupling: unfortunately, while these likelihood components p(o|π) can be calculated separately, the total posterior p(G|O) tightly couples the set of all observations. For example, the probability of a single observation o being emitted from a particular path π within the model, p(o|π), depends on the transition probabilities τ that lie on the path π. However, there is no way to estimate these τ values on the basis of a single observation. The τ can be thought of as the “hidden mixture parameters” within the model, which show what fraction of events will take one path vs. another at a particular branch point in the model. As such they are inherently a property of the entire observation set, and can only be estimated from the total set of observations, via the calculation of p(G|O). This coupling is a real barrier to distributing the computation in parallel: in principle we cannot compute p(G|O) without having all the p(o|π), and we cannot compute any p(o|π) without already having p(G|O).

• Global computational complexity is $O(N^2)$ or worse: Can we solve the coupled global optimization problem that this presents? There are standard algorithms (such as the HMM forward-backward algorithm [9, 11]) for calculating the likelihood of crossing each edge in the graph model, and iterative optimization methods such as the Expectation Maximization (EM) algorithm for converging an initial “guess” graph model to an optimal solution [13]. How long will this take? For a single observation sequence consisting of n events, versus a graph model consisting of N states each with an average of ε incoming edges, the computational complexity of the forward-backward algorithm will be $O(\varepsilon n N)$. If we assume that the total number of events in all observation sequences is some multiple βN of the total number of model states N which we are trying to infer (and β > 1 is required to hope for fully determining these states with strong posterior probabilities), then the total computational complexity is $O(\beta \varepsilon N^2)$. Finally, if ε is not a constant, but increases with the size of the graph such that ε = γN, then the complexity increases to $O(\beta \gamma N^3)$.
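Observation factoring (equation 2) can be illustrated with a small sketch. For a fixed model G, each agent sums log p(o|G) over its own observations using the standard forward recursion, and the per-agent totals add up to the same global value however the observations are grouped or ordered. The two-state model, its probabilities, the event alphabet and the three-agent split are all hypothetical values chosen for illustration; the sketch does not address the τ coupling described above, because the model parameters are held fixed.

```python
import math
import random

# Hypothetical two-state model; all numbers are illustrative assumptions.
STATES = ("A", "B")
INITIAL = {"A": 0.6, "B": 0.4}
TAU = {"A": {"A": 0.7, "B": 0.3}, "B": {"A": 0.4, "B": 0.6}}
EMIT = {"A": {"x": 0.9, "y": 0.1}, "B": {"x": 0.2, "y": 0.8}}

def log_likelihood(obs):
    """log p(o|G) for one event sequence via the forward recursion."""
    alpha = {s: INITIAL[s] * EMIT[s][obs[0]] for s in STATES}
    for event in obs[1:]:
        alpha = {
            s: EMIT[s][event] * sum(alpha[r] * TAU[r][s] for r in STATES)
            for s in STATES
        }
    return math.log(sum(alpha.values()))

def agent_total(batch):
    """One agent's contribution: the sum of log p(o|G) over its own batch."""
    return sum(log_likelihood(o) for o in batch)

observations = ["xxy", "yyx", "xyxy", "yy", "xxxx", "yxy"]

# Centralized computation over the full observation set.
central = agent_total(observations)

# Same observations, shuffled and split across three hypothetical agents.
shuffled = observations[:]
random.Random(0).shuffle(shuffled)
batches = [shuffled[0:2], shuffled[2:4], shuffled[4:6]]
distributed = sum(agent_total(b) for b in batches)

print(math.isclose(central, distributed))
```

Working in log space turns the product in equation 2 into a sum, so the only communication needed is one number per agent. For long sequences the unscaled forward variables would underflow, so a practical version would rescale them at each step.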

For large problems such as human genome assembly (inferring the complete genome sequence from observations of short fragment sequences, as was actually done in the Human Genome Project [5]), N can be very large ($3 \times 10^9$ for the human genome). $O(N^2)$ and $O(N^3)$ algorithms are useless for such large problems. This is representative of a general challenge. To deliver the power of networks of computational agents to such problems, we must find an algorithmic decoupling for breaking down their computational complexity.

2.2 Interpretation Networks

A parallel can be drawn between the distinction of local algorithms (computing on a single observation o, or a single path π through the model) vs. global algorithms, and distributed vs. centralized interpretation paradigms. In the former case, the computations required to interpret the observations are distributed over many agents performing largely independent calculations. In the latter case, agents simply report their observations to a central “interpreter” that performs a global, coupled computation on the set of all data. The choice of algorithm type determines the agent model. Local, decoupled algorithms are easy to distribute over autonomous agents, whereas global, coupled algorithms are hard.

In the Human Genome Project, observations were generated by a network of multiple observers (different sequencing centers) [5, 14]. This distributed observation model is typically joined to one of two models for interpreting the data. In a centralized interpretation model, all of the raw data must be sent to a central location. This has the advantage of allowing a global analysis of the total observations, but is totally dependent on communication, and reduces the role of agents to being passive sensors. At the opposite extreme, interpretation is performed locally by the agents making the observations. Their interpretations may be communicated to others. This has the advantage of making the agents “smart” (able to interpret and act on their own observations immediately), but blocks a fully global analysis, since information tends to be lost during local interpretation.

Neither of these models is acceptable for difficult unsupervised learning problems like analysis of the human genome. These problems are too hard for simplistic local algorithms. On the other hand, the computational complexity of the global, coupled calculation is far too large for a centralized CPU. Instead, I will describe a distributed observation-interpretation network model, in which local interpretation is performed, but in a way that is consistent with rigorous global interpretation algorithms.

2.3 Inferential Decoupling

The key question is whether the global inference problem can be broken down into separate, local inference problems that can later be re-combined to produce the global solution. Let’s define this property as inferential integrity. Such an algorithm must be insensitive to different ways of grouping the observations, and different orders of recombining them; the total result should remain the same. This would solve both of the problems raised in the previous two sections. The true barrier to algorithmic decoupling is that overly simplistic algorithms may simply get the wrong answer. By contrast, to the extent we can find decouplings that guarantee (or strongly approximate) inferential integrity, we can take full advantage of them to accelerate the computation. Moreover, inferential integrity provides a bridge between purely local vs. purely global interpretation network models. A computation with this property provides a flexible mix of both strategies.

3 Inferential Integrity

What are the essential principles for obtaining this property? First, my model for the rigorous global solution is simply Bayesian statistical inference, maximizing the posterior probability of the model as given by equation 1. This approach is very general, and has as its key principle the distinction between things which are directly observable (e.g. O in equation 1), and those which are not (hidden parameters).

For example, to infer gene structure for the human genome, we must determine which edges τ in the graph representing the genomic sequence are actually followed by the cellular machinery that produces an active gene product (“expressed sequences” or “EST”s [14]). Human genes are “spliced”; that is, they consist of large regions in which each node is linked to the next node, with occasional “splices” where a large segment of nodes is skipped over (figure 2) [15]. On the basis of the observed expressed sequences (ESTs) we want to infer which edges are real splices (i.e. have a nonzero τ value).

[Figure 2 labels: EST Sequence; Genomic Sequence; High Probability Matches; Lower Probability Matches; Strong Gene Evidence; Pseudogenes?; Paralogous Gene?; Calculated Evidence-confidence: 0, 0, 6.8, 7.5]

Figure 2: DECON uses all possible matches of individual ESTs vs. the whole genome sequence to measure evidence for genes, exons, introns, polyA sites, etc., by calculating the probability drop due to excluding each feature.

Inferential integrity can be achieved by any factoring of equation 1 into separate calculation steps that does not change its value. Fortunately, the nature of equation 1 permits many powerful ways of subdividing its calculation:

• Observation factoring: As shown in equation 2, we can separate the calculation into factors for independent observations, which can be recombined to obtain the global solution by simply multiplying them.

• Markov independence: As shown in equation 3, we can go further and break the calculation for a single observation into separate contributions from different (disjoint) paths π through G.

• Diagonalization: knowledge of the general structure of the graph can provide additional ways to divide the calculation. For example, for the gene structure problem we know that splices are rare, so the comparison between observed EST and genomic sequence should show long regions of match (diagonals in Figure 2) separated by occasional splices. This allows us to focus the calculation on just those parts π of the model which could emit a given EST with non-negligible probability, by running a fast diagonal search algorithm such as BLAST [16].

• Graph reduction: a further consequence of diagonalization is that we can separately calculate the p(o|π) over the possible paths for a given observation o, and store it as a much reduced form of the original graph. Specifically, we eliminate all nodes and edges with negligible probability, and collapse all linear segments (i.e. without internal branching) to a single node, saving the probability on each segment.

• τ factoring: this graph reduction allows us to do the single observation p(o|π) calculation before we have τ, by using reasonable estimates for τ and simply factoring them back out from the probability factors stored on the reduced graph.

Using these separations, calculations for all the individual observations can be performed on a distributed network with little or no communication. Each agent simply performs the calculations for its own observations. Finally, the reduced probability graphs for the observations are combined. This also can be separated into many independent calculations, one for each individual τ. For each τ, only a tiny subset of observations will be found to cross that edge with high probability, and all of these will have been identified efficiently by the diagonalization and graph reduction calculations. Only these observations need be considered to determine this τ. Moreover, only a small amount of information (the reduced probability graphs for those observations) needs to be communicated between agents to allow this calculation to be performed independently by a single agent. Thus all stages of the computation can be distributed efficiently, and a globally optimal solution produced with minimal communication demands.

4 Conclusions

What general utility does this approach have for the problem of constructing autonomous intelligent networks? I suggest that the property of inferential integrity is a key for designing an agent network that can move flexibly across the spectrum of “independence” versus “cooperation”. Inferential integrity is valuable for decoupling a rigorous inference process both to reduce its computational complexity (the algorithmic decoupling problem emphasized in the Introduction), and also so that it can be distributed efficiently over a large number of compute agents (the agent model question mentioned in the Introduction).

For our work in the Human Genome Project we have used this to build distributed observation-interpretation networks, in which each agent is both an observer and also an interpreter. Such networks have many advantages over pure local interpretation or pure global interpretation. They can combine the advantages of each of these extremes, while avoiding many of their respective disadvantages. They combine the scalability and robustness of a distributed approach with the rigorous statistical inference of a fully centralized, global calculation. They maximize local resource utilization by breaking down a large calculation into a very fine granularity that can be distributed well, while keeping communication bandwidth requirements as low as possible (through mechanisms such as graph reduction). Since the computational models we’re using are very general (statistical inference, Hidden Markov Models, graph reduction, etc.), it seems likely that these basic ideas could be applicable to seeking inferential integrity for many other problems.

5 Acknowledgements

This research was supported by NSF Grant IIS 0082964.

References

[1] M. Barbuceanu, M.S. Fox, “COOL: a language for describing coordination in multiagent systems,” Proceedings of the First International Conference on Multi-Agent Systems. 17–24, 1995.

[2] J.T. Schwartz, M. Sharir, J. Hopcroft, Planning, Geometry and Complexity of Robot Motion. Ablex Publishing Corp. 1987.

[3] T. Oates, M.V. Nagendra Prasad, V.R. Lesser, Cooperative Information Gathering: A Distributed Problem Solving Approach. UMass Computer Science Technical Report 94-66. 1994.

[4] J. Binder, D. Koller, S. Russell, K. Kanazawa, “Adaptive probabilistic networks with hidden variables,” Machine Learning 29, 213–244, 1997.

[5] International Human Genome Sequencing Consortium, “Initial sequencing and analysis of the human genome,” Nature 409, 860–921, 2001.

[6] K. Irizarry, et al., C. Lee, “Genome-wide analysis of single-nucleotide polymorphisms in human expressed sequences,” Nature Genet. 26, 233–236, 2000.

[7] B. Modrek, et al., C. Lee, “Genome-wide detection of alternative splicing in expressed sequences of human genes,” Nucleic Acids Res. 29, 2850–2859, 2001.

[8] S. Karlin, A. Bergman, A.J. Gentles, “Genomics: annotation of the Drosophila genome,” Nature 411, 259–260, 2001.

[9] L.R. Rabiner, B.H. Juang, “An introduction to hidden Markov models,” IEEE ASSP Magazine 3, 4–16, 1986.

[10] T. Mitchell, Machine Learning. McGraw Hill, 1997.

[11] L.R. Rabiner, “A tutorial on hidden Markov models and selected applications in speech recognition,” Proceedings of the IEEE 77, 257–286, 1989.

[12] D.R. Cox, H.D. Miller, The Theory of Stochastic Processes. Chapman Hall, 1965.

[13] A.P. Dempster, N.M. Laird, D.B. Rubin, “Maximum likelihood from incomplete data via the EM algorithm,” J. Royal Stat. Soc. B 39, 1–38, 1977.

[14] M.S. Boguski, G. Schuler, “ESTablishing a human transcript map,” Nature Genet. 10, 369–371, 1995.

[15] W. Gilbert, “Why genes in pieces?” Nature 271, 501, 1978.

[16] S.F. Altschul, W. Gish, W. Miller, E.W. Myers, D.J. Lipman, “Basic local alignment search tool,” J. Mol. Biol. 215, 403–410, 1990.