Inverse and Elementary Matrices

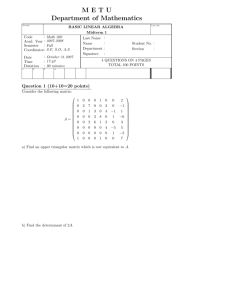

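1. Inverse matrices

Recall the economy problem:

$x_1$ = total production of coal
$x_2$ = total production of steel
$x_3$ = total production of electricity

Based on an economic model, we have a system of equations for the $x_i$:

$$
\begin{aligned}
x_1 - .2x_2 - .4x_3 &= 1 \\
-.1x_1 + .9x_2 - .2x_3 &= .7 \\
-.1x_1 - .2x_2 + .9x_3 &= 2.9
\end{aligned}
\tag{*}
$$

Recall: we defined

$$
X = \begin{bmatrix} x_1 \\ x_2 \\ x_3 \end{bmatrix}; \qquad
A = \begin{bmatrix} 1 & -.2 & -.4 \\ -.1 & .9 & -.2 \\ -.1 & -.2 & .9 \end{bmatrix} \text{ [known]}, \qquad
B = \begin{bmatrix} 1 \\ .7 \\ 2.9 \end{bmatrix} \text{ [known]}.
$$

Then the equation for the unknown matrix $X$ is

$$AX = B$$

[recall that any system of linear equations can be written this way]. How do we solve for $X$ in general?

Definition 1: An $n \times n$ matrix is called the identity matrix $I$ if it has 1's along the diagonal and zeroes elsewhere.

Example 1: The $4 \times 4$ identity matrix is

$$
I = \begin{bmatrix} 1 & 0 & 0 & 0 \\ 0 & 1 & 0 & 0 \\ 0 & 0 & 1 & 0 \\ 0 & 0 & 0 & 1 \end{bmatrix}
$$

[note: the size of the matrix is usually understood from context].

Note: $I_{23} = 0$; $I_{32} = 0$; $I_{33} = 1$. That is, $I_{mn} = 0$ unless $m = n$.

Note: for all $\mathbf{b} \in \mathbb{R}^4$:

$$
I\mathbf{b} = \begin{bmatrix} 1 & 0 & 0 & 0 \\ 0 & 1 & 0 & 0 \\ 0 & 0 & 1 & 0 \\ 0 & 0 & 0 & 1 \end{bmatrix}
\begin{bmatrix} b_1 \\ b_2 \\ b_3 \\ b_4 \end{bmatrix}
= \begin{bmatrix} b_1 \\ b_2 \\ b_3 \\ b_4 \end{bmatrix} = \mathbf{b}.
$$

Similarly, this holds for all sizes $n$ of the identity.

Proposition 1: If $I$ is an $n \times n$ identity matrix and $\mathbf{a}$ is an $n$-vector, then $I\mathbf{a} = \mathbf{a}$.

Proof:

$$
I\mathbf{a} = \begin{bmatrix} 1 & 0 & 0 & \cdots & 0 \\ 0 & 1 & 0 & \cdots & 0 \\ 0 & 0 & 1 & \cdots & 0 \\ \vdots & \vdots & \vdots & \ddots & \vdots \\ 0 & 0 & 0 & \cdots & 1 \end{bmatrix}
\begin{bmatrix} a_1 \\ a_2 \\ a_3 \\ \vdots \\ a_n \end{bmatrix}
= \begin{bmatrix} a_1 \\ a_2 \\ a_3 \\ \vdots \\ a_n \end{bmatrix},
$$

as seen directly by multiplication.

Proposition 2: For any matrix $A$ for which the product makes sense, $I_n A = A$.

Proof: Write $A = [\mathbf{a}_1 \ \cdots \ \mathbf{a}_m]$ in terms of its columns. By Proposition 1,

$$IA = I[\mathbf{a}_1 \ \cdots \ \mathbf{a}_m] = [I\mathbf{a}_1 \ \cdots \ I\mathbf{a}_m] = [\mathbf{a}_1 \ \cdots \ \mathbf{a}_m] = A.$$

Definition 2: Given a square matrix $A$, a matrix $B$ is called the inverse of $A$ if $AB = I$ and $BA = I$. A matrix for which an inverse exists is called invertible.

Example 2:

$$
A = \begin{bmatrix} 1 & 2 \\ 2 & 1 \end{bmatrix}; \qquad
A^{-1} = \frac{1}{3}\begin{bmatrix} -1 & 2 \\ 2 & -1 \end{bmatrix}.
$$

Theorem 2: If $A$ has an inverse matrix $B$, then $B$ is unique (i.e., there is no other inverse matrix).

Proof: If $B$ and $C$ are both inverses of $A$, then

$$B = BI = B(AC) = (BA)C = IC = C,$$

so that any two inverses are the same, i.e., there is only one inverse.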
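Definition 2 and Proposition 2 can be checked numerically on Example 2. The following is a minimal sketch, assuming $A = \begin{bmatrix} 1 & 2 \\ 2 & 1 \end{bmatrix}$ and the candidate inverse $\frac{1}{3}\begin{bmatrix} -1 & 2 \\ 2 & -1 \end{bmatrix}$; the helper names `matmul` and `identity` are illustrative only and are not part of the notes. Exact fractions are used so that the comparisons with $I$ are not affected by rounding.

```python
from fractions import Fraction as F

def matmul(p, q):
    """Multiply two matrices given as lists of rows."""
    return [[sum(p[i][k] * q[k][j] for k in range(len(q)))
             for j in range(len(q[0]))]
            for i in range(len(p))]

def identity(n):
    """n x n identity: 1's on the diagonal, zeroes elsewhere."""
    return [[F(int(i == j)) for j in range(n)] for i in range(n)]

A = [[F(1), F(2)], [F(2), F(1)]]
# Candidate inverse (1/3) * [[-1, 2], [2, -1]] (assumed reading of Example 2)
B = [[F(-1, 3), F(2, 3)], [F(2, 3), F(-1, 3)]]
I2 = identity(2)

print(matmul(A, B) == I2)   # checks AB = I
print(matmul(B, A) == I2)   # checks BA = I
print(matmul(I2, A) == A)   # checks Proposition 2: IA = A
```

Both products must equal $I$ for $B$ to qualify as the inverse under Definition 2, which is why the sketch checks both orders.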
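Theorem: Given square matrices $A$ and $B$, if $BA = I$, then $AB = I$, i.e., $B$ is automatically the inverse of $A$. (Proved later in this lecture, after the invertible matrix theorem.)

Now let's find the inverse of

$$A = \begin{bmatrix} a & b \\ c & d \end{bmatrix}.$$

Find $X = \begin{bmatrix} x & z \\ y & w \end{bmatrix}$ such that

$$
\begin{bmatrix} a & b \\ c & d \end{bmatrix}\begin{bmatrix} x & z \\ y & w \end{bmatrix} = \begin{bmatrix} 1 & 0 \\ 0 & 1 \end{bmatrix}.
$$

We get two pairs of equations:

$$
\begin{aligned} ax + by &= 1 \\ cx + dy &= 0 \end{aligned}
\qquad\qquad
\begin{aligned} az + bw &= 0 \\ cz + dw &= 1 \end{aligned}
$$

Augmented matrix for the first pair:

$$\left[\begin{array}{cc|c} a & b & 1 \\ c & d & 0 \end{array}\right]$$

Augmented matrix for the second pair:

$$\left[\begin{array}{cc|c} a & b & 0 \\ c & d & 1 \end{array}\right]$$

Solve in parallel:

$$\left[\begin{array}{cc|cc} a & b & 1 & 0 \\ c & d & 0 & 1 \end{array}\right]$$

Use row reduction (assuming $a \neq 0$ and $ad - bc \neq 0$):

$$
\left[\begin{array}{cc|cc} a & b & 1 & 0 \\ c & d & 0 & 1 \end{array}\right]
\to
\left[\begin{array}{cc|cc} 1 & b/a & 1/a & 0 \\ c & d & 0 & 1 \end{array}\right]
\to
\left[\begin{array}{cc|cc} 1 & b/a & 1/a & 0 \\ 0 & (ad-bc)/a & -c/a & 1 \end{array}\right]
$$

$$
\to
\left[\begin{array}{cc|cc} 1 & b/a & 1/a & 0 \\ 0 & 1 & -c/(ad-bc) & a/(ad-bc) \end{array}\right]
\to
\left[\begin{array}{cc|cc} 1 & 0 & 1/a + cb/(a(ad-bc)) & -b/(ad-bc) \\ 0 & 1 & -c/(ad-bc) & a/(ad-bc) \end{array}\right]
$$

$$
=
\left[\begin{array}{cc|cc} 1 & 0 & d/(ad-bc) & -b/(ad-bc) \\ 0 & 1 & -c/(ad-bc) & a/(ad-bc) \end{array}\right]
$$

But note the entries in the first column on the right should be the solution for $x$ and $y$:

$$\begin{bmatrix} x \\ y \end{bmatrix} = \begin{bmatrix} d/(ad-bc) \\ -c/(ad-bc) \end{bmatrix},$$

since the solution for $x$ and $y$ is obtained by manipulating the first column of the right-hand side. Similarly, the second column on the right should be

$$\begin{bmatrix} z \\ w \end{bmatrix} = \begin{bmatrix} -b/(ad-bc) \\ a/(ad-bc) \end{bmatrix}.$$

So the inverse is:

$$
A^{-1} = X = \begin{bmatrix} x & z \\ y & w \end{bmatrix}
= \begin{bmatrix} d/(ad-bc) & -b/(ad-bc) \\ -c/(ad-bc) & a/(ad-bc) \end{bmatrix}
= \frac{1}{ad-bc}\begin{bmatrix} d & -b \\ -c & a \end{bmatrix}.
$$

Moral: to invert $A = \begin{bmatrix} a & b \\ c & d \end{bmatrix}$, row reduce

$$
\left[\begin{array}{cc|cc} a & b & 1 & 0 \\ c & d & 0 & 1 \end{array}\right]
\to
\left[\begin{array}{cc|cc} 1 & 0 & x & z \\ 0 & 1 & y & w \end{array}\right]
= [\,I \mid A^{-1}\,] \quad \text{(after row reduction)};
$$

that is, after row reduction the identity will be in the left block, and the desired inverse matrix appears in the right block.

Thus $XA = I$; we can also check that $AX = I$. Check:

$$
\begin{bmatrix} a & b \\ c & d \end{bmatrix} \cdot \frac{1}{ad-cb}\begin{bmatrix} d & -b \\ -c & a \end{bmatrix} = \begin{bmatrix} 1 & 0 \\ 0 & 1 \end{bmatrix}.
$$

Thus we have a general formula for $2 \times 2$ matrices. Note that the row-reduction method itself is general: it applies to square matrices of any size.

Definition 3: A matrix which has an inverse is called nonsingular.

Theorem 3: If $A$ and $B$ are nonsingular, then $AB$ is also nonsingular and $(AB)^{-1} = B^{-1}A^{-1}$.

Pf: Note that $(B^{-1}A^{-1})(AB) = B^{-1}(A^{-1}A)B = B^{-1}B = I$, so that $(AB)^{-1} = B^{-1}A^{-1}$. The product in the other order, $(AB)(B^{-1}A^{-1}) = I$, can be checked in the same way.

[Note: this generalizes to larger products of matrices in the expected way; see corollary 1.1; you will do the proof in exercises.]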
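The $2 \times 2$ formula and Theorem 3 can be tried on concrete numbers. The sketch below is illustrative only: the function names `inverse_2x2` and `matmul` and the sample matrices `A` and `B` are assumptions chosen for the example, not taken from the notes. It applies $\frac{1}{ad-bc}\begin{bmatrix} d & -b \\ -c & a \end{bmatrix}$ and compares $(AB)^{-1}$ with $B^{-1}A^{-1}$ using exact fractions.

```python
from fractions import Fraction as F

def inverse_2x2(m):
    """Inverse of [[a, b], [c, d]] via (1/(ad - bc)) * [[d, -b], [-c, a]]."""
    (a, b), (c, d) = m
    det = F(a) * d - F(b) * c
    if det == 0:
        raise ValueError("ad - bc = 0, so the formula does not apply")
    return [[d / det, -b / det], [-c / det, a / det]]

def matmul(p, q):
    """Multiply two matrices given as lists of rows."""
    return [[sum(p[i][k] * q[k][j] for k in range(len(q)))
             for j in range(len(q[0]))]
            for i in range(len(p))]

I2 = [[1, 0], [0, 1]]
A = [[1, 2], [3, 4]]   # hypothetical sample matrix, ad - bc = -2
B = [[2, 1], [1, 1]]   # hypothetical sample matrix, ad - bc = 1

A_inv = inverse_2x2(A)
B_inv = inverse_2x2(B)

print(matmul(A, A_inv) == I2, matmul(A_inv, A) == I2)    # A A^-1 = A^-1 A = I
print(inverse_2x2(matmul(A, B)) == matmul(B_inv, A_inv))  # (AB)^-1 = B^-1 A^-1
```

Note the reversed order on the right-hand side of Theorem 3: for these sample matrices $A^{-1}B^{-1}$ gives a different matrix.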
[Note: there are some other theorems in the book along the same lines; their proofs are simple.]

2. Conclusion of the original matrix problem:

Recall: We had

$$
\begin{aligned}
x_1 - .2x_2 - .4x_3 &= 1 \\
-.1x_1 + .9x_2 - .2x_3 &= .7 \\
-.1x_1 - .2x_2 + .9x_3 &= 2.9
\end{aligned}
\tag{*}
$$

We showed that if we define

$$
X = \begin{bmatrix} x_1 \\ x_2 \\ x_3 \end{bmatrix}; \qquad
A = \begin{bmatrix} 1 & -.2 & -.4 \\ -.1 & .9 & -.2 \\ -.1 & -.2 & .9 \end{bmatrix} \text{ [known]}, \qquad
B = \begin{bmatrix} 1 \\ .7 \\ 2.9 \end{bmatrix} \text{ [known]},
$$

then to find $X$ we solve

$$AX = B$$

for the unknown matrix $X$. How to do that?

Suppose that the matrix $A$ has an inverse matrix $A^{-1}$. Then

$$AX = B \ \Rightarrow\ (A^{-1}A)X = A^{-1}B \ \Rightarrow\ X = A^{-1}B.$$

Thus we would have the unknown vector $X$ as a product of two known matrices.

How to find $A^{-1}$ for a $3 \times 3$ matrix? We'll find out later.

Example ($2 \times 2$; a previous example revisited): Consider a metro area with a constant population of 1 million. The area consists of a city and suburbs. We analyze the changing urban and suburban populations.

Let $C_1$ and $S_1$ denote the two populations in the first year of our study, and in general let $C_n$ and $S_n$ denote the populations in the $n$th year of the study.

Assume that each year 15% of the people in the city move to the suburbs, and 10% of the people in the suburbs move to the city. Thus next year's city population will be .85 times this year's city population plus .10 times this year's suburban population:

$$C_{n+1} = .85\,C_n + .1\,S_n$$

Similarly,

$$S_{n+1} = .15\,C_n + .90\,S_n.$$

A) If the populations in year 2 were .5 and .5 million, what were they in year 1?

$$
\begin{aligned}
.5 &= .85\,C_1 + .1\,S_1 \\
.5 &= .15\,C_1 + .90\,S_1
\end{aligned}
$$

This is a system of equations for $C_1$ and $S_1$. Write it in matrix form:

$$
\underbrace{\begin{bmatrix} .85 & .1 \\ .15 & .9 \end{bmatrix}}_{A}
\underbrace{\begin{bmatrix} C_1 \\ S_1 \end{bmatrix}}_{X}
= \underbrace{\begin{bmatrix} .5 \\ .5 \end{bmatrix}}_{B}
$$

$$
A^{-1} = \frac{1}{.9 \cdot .85 - .1 \cdot .15}\begin{bmatrix} .9 & -.1 \\ -.15 & .85 \end{bmatrix}
= \frac{1}{.75}\begin{bmatrix} .9 & -.1 \\ -.15 & .85 \end{bmatrix}
= \begin{bmatrix} 1.2 & -.1333 \\ -.2 & 1.1333 \end{bmatrix}
$$

Thus:

$$
X = A^{-1}B = \begin{bmatrix} 1.2 & -.1333 \\ -.2 & 1.1333 \end{bmatrix}\begin{bmatrix} .5 \\ .5 \end{bmatrix}
= \begin{bmatrix} .5333 \\ .4667 \end{bmatrix},
$$

which gives the original populations (in millions) of the city and suburbs.

3. Elementary Matrices:

Definition: An elementary matrix is a matrix obtained from the identity by a single elementary row operation.

Ex 1: Elementary matrix of type I [interchange rows]:

$$\begin{bmatrix} 0 & 0 & 1 \\ 0 & 1 & 0 \\ 1 & 0 & 0 \end{bmatrix}$$

Elementary matrix of type II [multiply row by a constant]:

$$\begin{bmatrix} 1 & 0 & 0 \\ 0 & 3 & 0 \\ 0 & 0 & 1 \end{bmatrix}$$

Elementary matrix of type III [add multiple of one row to another]:

$$\begin{bmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 3 & 0 & 1 \end{bmatrix}$$

4. Some theorems about elementary matrices:

Note: we will now state some theorems about elementary matrices. Most of them will be proved here; it will be stated explicitly when a proof is omitted. Together they tell a connected story about elementary matrices.

Theorem 1: Let $A$ be a matrix, and let $B$ be the result of applying an ERO (elementary row operation) to $A$. Let $E$ be the elementary matrix obtained from $I$ by performing the same ERO. Then $B = EA$.

Proof is an exercise.

Example 1: Start with

$$I = \begin{bmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{bmatrix},$$

and add $3 \cdot$ row 1 to row 3 to get the elementary matrix:

$$E = \begin{bmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 3 & 0 & 1 \end{bmatrix}.$$

Now note that multiplying any matrix $A$ by $E$ performs the same operation on $A$:

$$
EA = \begin{bmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 3 & 0 & 1 \end{bmatrix}
\begin{bmatrix} 2 & 1 & 0 \\ 1 & 1 & 2 \\ 2 & 1 & 3 \end{bmatrix}
= \begin{bmatrix} 2 & 1 & 0 \\ 1 & 1 & 2 \\ 8 & 4 & 3 \end{bmatrix}.
$$

Thus we conclude: if we have a matrix $A$ and apply a series of EROs to $A$,

$$A \to A_1 \to A_2 \to A_3 \to \cdots \to A_n,$$

then each ERO can be obtained by multiplying the previous matrix by an elementary matrix:

$$
\begin{aligned}
A_1 &= E_1 A \\
A_2 &= E_2 A_1 = E_2 E_1 A \\
A_3 &= E_3 A_2 = E_3 E_2 E_1 A \\
&\ \ \vdots \\
A_n &= E_n E_{n-1} \cdots E_2 E_1 A
\end{aligned}
\tag{*}
$$

Conclusion 1: If $A_n$ is the result of applying a series of row operations to $A$, then $A_n$ can be expressed in terms of $A$ as in (*) above.

Note that every elementary row operation can be reversed by an elementary row operation of the same type. Since EROs are equivalent to multiplying by elementary matrices, we have a parallel statement for elementary matrices:

Theorem 2: Every elementary matrix has an inverse, which is an elementary matrix of the same type.

Proof: See book.

5. More facts about matrices:

Assume henceforth that $A$ is a square $n \times n$ matrix.

Suppose we have the homogeneous system

$$
AX = 0 \qquad \left( A = [A_{ij}]; \quad X = \begin{bmatrix} x_1 \\ x_2 \\ \vdots \\ x_n \end{bmatrix} \right).
$$

Assume that the only solution is $X = 0$ (i.e., the zero matrix for $X$). What can we conclude about $A$?

Reduce $A$ to reduced row echelon form:

$$A \to B$$

Then $BX = 0$ has the same solutions.
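But $B$ must have all nonzero rows, since if a row is 0 then there will be nontrivial solutions for $X$ (fewer equations than unknowns; see results from class last time). A square matrix in reduced row echelon form with no zero rows has a leading 1 in every row, and hence in every column. Therefore, $B$ must be the identity.

Conversely, if $A$ is row equivalent to $I$, then $AX = 0$ is equivalent to $IX = 0$, so the only solution is $X = 0$.

We have just proved:

Conclusion 2: $A$ is row equivalent to $I$, the identity matrix, iff $AX = 0$ has no nontrivial solutions.

Example:

$$A = \begin{bmatrix} 1 & 2 \\ 2 & 4 \end{bmatrix} \to \begin{bmatrix} 1 & 2 \\ 0 & 0 \end{bmatrix}$$

System of equations: $AX = 0 \ \to$

$$
\begin{aligned}
x_1 + 2x_2 &= 0 \\
0 &= 0
\end{aligned}
$$

Lots of solutions:

$$x_2 = x_2, \qquad x_1 = -2x_2.$$

Thus

$$X = \begin{bmatrix} x_1 \\ x_2 \end{bmatrix} = x_2\begin{bmatrix} -2 \\ 1 \end{bmatrix}, \quad x_2 \text{ arbitrary (infinite number of solutions).}$$

Conclude: there exist nontrivial solutions. Thus $A$ is not row equivalent to $I$ [as can be checked directly].

Again we assume here that our matrices are square:

1. Nonsingular matrices are just products of elementary matrices

Notice that if a matrix $A$ is nonsingular (invertible) and $AX = 0$, then:

$$AX = 0 \ \Rightarrow\ A^{-1}AX = A^{-1}0 \ \Rightarrow\ IX = 0 \ \Rightarrow\ X = 0.$$

Thus $AX = 0$ has no nontrivial solutions. Thus, by Conclusion 2, $A$ is row equivalent to $I$.

Thus there exist elementary matrices $E_1, \ldots, E_k$ such that:

$$
E_k E_{k-1} E_{k-2} \cdots E_2 E_1 A = I
\ \Rightarrow\ A = (E_1^{-1} E_2^{-1} \cdots E_k^{-1}) I = E_1^{-1} E_2^{-1} \cdots E_k^{-1}.
$$

So $A$ is a product of elementary matrices (recall that inverses of elementary matrices are also elementary). Also, note that if $A$ is a product of elementary matrices, then $A$ is nonsingular, since the product of nonsingular matrices is nonsingular. Thus:

Conclusion 3: $A$ is a product of elementary matrices iff $A$ is nonsingular.

Now notice that if $A$ is nonsingular, then

$$A = E_1 E_2 \cdots E_k = E_1 E_2 \cdots E_k I = \text{a matrix row equivalent to } I.$$

We can reverse the argument: if $A$ is row equivalent to $I$, then $A = E_1 E_2 \cdots E_k I$, so $A$ is nonsingular.

Conclusion 4: $A$ is row equivalent to $I$ iff $A$ is nonsingular.

Example 2: Row reduce $A$, recording each operation together with the operation that reverses it (operation $\Leftrightarrow$ inverse operation):

$$
A = \begin{bmatrix} 2 & 1 \\ 1 & 2 \end{bmatrix}
\ \xrightarrow{\ \frac{1}{2}R_1 \ \Leftrightarrow\ 2R_1\ }\
\begin{bmatrix} 1 & 1/2 \\ 1 & 2 \end{bmatrix}
\ \xrightarrow{\ -R_1 + R_2 \ \Leftrightarrow\ R_1 + R_2\ }\
\begin{bmatrix} 1 & 1/2 \\ 0 & 3/2 \end{bmatrix}
$$

$$
\xrightarrow{\ \frac{2}{3}R_2 \ \Leftrightarrow\ \frac{3}{2}R_2\ }\
\begin{bmatrix} 1 & 1/2 \\ 0 & 1 \end{bmatrix}
\ \xrightarrow{\ -\frac{1}{2}R_2 + R_1 \ \Leftrightarrow\ \frac{1}{2}R_2 + R_1\ }\
\begin{bmatrix} 1 & 0 \\ 0 & 1 \end{bmatrix}.
$$

Thus $A$ is row equivalent to the identity, so by Conclusion 4, $A$ is nonsingular.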
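The reduction of $A = \begin{bmatrix} 2 & 1 \\ 1 & 2 \end{bmatrix}$ in Example 2 can be replayed by left-multiplying by elementary matrices, one per row operation, as Theorem 1 of the elementary-matrix section describes. This is a small sketch under that reading of the example; the list name `ops`, the labels, and the helper `matmul` are illustrative, not notation from the notes.

```python
from fractions import Fraction as F

def matmul(p, q):
    """Multiply two matrices given as lists of rows."""
    return [[sum(p[i][k] * q[k][j] for k in range(len(q)))
             for j in range(len(q[0]))]
            for i in range(len(p))]

A = [[F(2), F(1)], [F(1), F(2)]]

# Each elementary matrix is the 2 x 2 identity with one row operation applied.
ops = [
    ("(1/2) R1",      [[F(1, 2), F(0)], [F(0), F(1)]]),
    ("-R1 + R2",      [[F(1), F(0)], [F(-1), F(1)]]),
    ("(2/3) R2",      [[F(1), F(0)], [F(0), F(2, 3)]]),
    ("-(1/2)R2 + R1", [[F(1), F(-1, 2)], [F(0), F(1)]]),
]

steps = [A]
for label, E in ops:
    # Left-multiplying by E performs the row operation on the current matrix.
    steps.append(matmul(E, steps[-1]))
    print(label, [[str(v) for v in row] for row in steps[-1]])
```

The last entry of `steps` is the identity, which is the statement that $A$ is row equivalent to $I$.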
Thus $A$ must be a product of elementary matrices. Note that we can reverse the above process and go from $I$ to $A$ by applying the inverse operations in the opposite order. Thus

$$
A = \begin{bmatrix} 2 & 0 \\ 0 & 1 \end{bmatrix}
\begin{bmatrix} 1 & 0 \\ 1 & 1 \end{bmatrix}
\begin{bmatrix} 1 & 0 \\ 0 & 3/2 \end{bmatrix}
\begin{bmatrix} 1 & 1/2 \\ 0 & 1 \end{bmatrix}
\begin{bmatrix} 1 & 0 \\ 0 & 1 \end{bmatrix}
= E_1 E_2 E_3 E_4 I,
$$

where here $E_1, \ldots, E_4$ denote the elementary matrices of the reversed operations. Thus, since $A$ is nonsingular, it is indeed a product of elementary matrices.

2. Conclusions:

We have shown that the following are equivalent (if you follow through the arguments):

1. $A$ is nonsingular.
2. $AX = 0$ has only the trivial solution.
3. $A$ is row equivalent to $I$.
4. $AX = B$ has a unique solution for every given column matrix $B$.
5. $A$ is a product of elementary matrices.

[Note that other equivalences are shown in section 2.2; these are the important ones for now.]

Proof: We have shown that 2 and 3 are equivalent in Conclusion 2. 1 and 5 are equivalent by Conclusion 3. And 1 and 3 are equivalent by Conclusion 4.

To show that 4 is equivalent to 1, note that if 1 holds, then the equation $AX = B$ implies $A^{-1}AX = A^{-1}B$, which implies $X = A^{-1}B$. Conversely, $X = A^{-1}B$ does satisfy $AX = B$, so the solution exists and is unique, and 4 holds. Conversely, if 4 holds, then $AX = B$ has a unique solution for each $B$. Setting $B = 0$, we get that $AX = 0$ has only the trivial solution, so condition 2 holds, and hence $A$ is nonsingular.

We thus have $2 \Leftrightarrow 3 \Leftrightarrow 1 \Leftrightarrow 5$, and $1 \Leftrightarrow 4$. This shows that all the statements are equivalent.

3. A useful Corollary:

Corollary: Let $A$ be an $n \times n$ matrix. If the square matrix $B$ satisfies

$$BA = I, \tag{1}$$

then automatically also

$$AB = I, \tag{2}$$

i.e., $B = A^{-1}$. Similarly, (2) also implies (1).

Proof: Suppose (1) holds. Then

$$AX = 0 \ \Rightarrow\ BAX = 0 \ \Rightarrow\ X = 0 \ \Rightarrow\ \text{only trivial solution} \ \Rightarrow\ A^{-1} \text{ exists}.$$

Also, we have from (1):

$$BAA^{-1} = IA^{-1} \ \Rightarrow\ B = A^{-1}.$$

Now suppose (2) holds. Then, reversing the roles of $A$ and $B$, it follows that (1) holds.

4. Inverting a matrix:

If $A$ is invertible, then $A$ is row equivalent to $I$, so

$$E_k E_{k-1} \cdots E_1 A = I,$$

where the $E_i$ are the elementary matrices of the EROs that reduce $A$ to $I$. Multiplying both sides on the right by $A^{-1}$ gives

$$A^{-1} = E_k E_{k-1} \cdots E_1 I,$$

i.e., to get $A^{-1}$ we need to perform on $I$ the same elementary row operations that we perform on $A$ to turn it into the identity.
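The inversion recipe just described (apply to $I$ the same row operations that reduce $A$ to $I$) can be sketched as Gauss-Jordan elimination on the augmented block $[\,A \mid I\,]$. The function name `invert` and the pivoting choice (use the first nonzero entry in the column) are assumptions made for this illustration; exact fractions avoid rounding.

```python
from fractions import Fraction as F

def invert(a):
    """Row reduce [A | I] to [I | A^-1]; raise ValueError if A is singular."""
    n = len(a)
    # Build the augmented matrix [A | I].
    aug = [[F(v) for v in row] + [F(int(i == j)) for j in range(n)]
           for i, row in enumerate(a)]
    for col in range(n):
        # Type I operation: swap in a row with a nonzero entry in this column.
        pivot = next((r for r in range(col, n) if aug[r][col] != 0), None)
        if pivot is None:
            raise ValueError("matrix is not row equivalent to I (singular)")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        # Type II operation: scale the pivot row so the pivot becomes 1.
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        # Type III operations: clear the rest of the column.
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [x - factor * y for x, y in zip(aug[r], aug[col])]
    # The left block is now I; the right block is the inverse.
    return [row[n:] for row in aug]

A = [[2, 1], [1, 2]]
A_inv = invert(A)
print([[str(v) for v in row] for row in A_inv])
```

If some column has no usable pivot, the left block cannot be reduced to $I$, which matches the equivalence between being nonsingular and being row equivalent to $I$.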
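Example 1: Find the inverse of

$$A = \begin{bmatrix} 2 & 1 \\ 1 & 2 \end{bmatrix}$$

[note that we already know how to invert this in another way].

Inversion process:

$$
\left[\begin{array}{cc|cc} 2 & 1 & 1 & 0 \\ 1 & 2 & 0 & 1 \end{array}\right]
\to
\left[\begin{array}{cc|cc} 1 & 1/2 & 1/2 & 0 \\ 1 & 2 & 0 & 1 \end{array}\right]
\to
\left[\begin{array}{cc|cc} 1 & 1/2 & 1/2 & 0 \\ 0 & 3/2 & -1/2 & 1 \end{array}\right]
$$

$$
\to
\left[\begin{array}{cc|cc} 1 & 1/2 & 1/2 & 0 \\ 0 & 1 & -1/3 & 2/3 \end{array}\right]
\to
\left[\begin{array}{cc|cc} 1 & 0 & 2/3 & -1/3 \\ 0 & 1 & -1/3 & 2/3 \end{array}\right]
$$

So

$$A^{-1} = \begin{bmatrix} 2/3 & -1/3 \\ -1/3 & 2/3 \end{bmatrix}.$$

[Note that the material below completes our coverage of material related to the inverse matrix theorem.]

5. Inverses and linear transformations

Recall that if $A$ is a nonsingular square matrix and $\mathbf{x}$ is a vector, then we can define the function

$$T(\mathbf{x}) = A\mathbf{x}.$$

Recall the properties

$$T(\mathbf{x} + \mathbf{y}) = A(\mathbf{x} + \mathbf{y}) = A\mathbf{x} + A\mathbf{y} = T(\mathbf{x}) + T(\mathbf{y})$$

$$T(c\mathbf{x}) = Ac\mathbf{x} = cA\mathbf{x} = cT(\mathbf{x}).$$

Thus $T$ is a linear transformation. [Recall that $T$ can be viewed as a rule for taking the vector $\mathbf{x}$ to $A\mathbf{x}$.]

We define the inverse $T^{-1}$ of $T$ as the operation which takes $T\mathbf{x}$ back to $\mathbf{x}$. Thus

$$T^{-1}(T(\mathbf{x})) = \mathbf{x}$$

[note that this happens only if $T$ is 1-1 and onto].

Figure: $A$ viewed as a linear transformation: $A\mathbf{x} = T(\mathbf{x})$. Note that $T^{-1}$ inverts the operation.

Note that

$$A^{-1}(T\mathbf{x}) = A^{-1}(A\mathbf{x}) = \mathbf{x}.$$

Thus it must be that

$$T^{-1}(T\mathbf{x}) = A^{-1}(T\mathbf{x}),$$

so that $T^{-1}$ corresponds to multiplication by $A^{-1}$. [This is stated as a theorem in the text.]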
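As a quick illustration of $T^{-1}$ corresponding to multiplication by $A^{-1}$, the sketch below uses the matrix $A = \begin{bmatrix} 2 & 1 \\ 1 & 2 \end{bmatrix}$ and the inverse found in Example 1. The function names `apply`, `T`, and `T_inv` and the sample vectors are hypothetical choices for this example only.

```python
from fractions import Fraction as F

def apply(m, x):
    """Matrix-vector product m x, with m a list of rows and x a list."""
    return [sum(a * b for a, b in zip(row, x)) for row in m]

A = [[F(2), F(1)], [F(1), F(2)]]
A_inv = [[F(2, 3), F(-1, 3)], [F(-1, 3), F(2, 3)]]  # inverse from Example 1

def T(x):
    """The linear transformation x -> A x."""
    return apply(A, x)

def T_inv(y):
    """The inverse transformation y -> A^-1 y."""
    return apply(A_inv, y)

x = [F(3), F(-5)]   # sample vector (arbitrary choice)
y = [F(1), F(4)]    # second sample vector (arbitrary choice)

print(T_inv(T(x)) == x)                                   # T^-1 undoes T
print(T([a + b for a, b in zip(x, y)])
      == [a + b for a, b in zip(T(x), T(y))])             # T(x + y) = T(x) + T(y)
print(T([7 * a for a in x]) == [7 * a for a in T(x)])     # T(cx) = cT(x), c = 7
```

The same check with `T` and `T_inv` swapped confirms that $T(T^{-1}(\mathbf{y})) = \mathbf{y}$ as well, reflecting that $A A^{-1} = I$.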