Clementine Application Template

for Analytical CRM in

Telecommunications 7.0

®

For more information about SPSS® software products, please visit our Web site at

http://www.spss.com or contact

Marketing Department

SPSS Inc.

233 South Wacker Drive, 11th Floor

Chicago, IL 60606-6307

Tel: (312) 651-3000

Fax: (312) 651-3668

SPSS is a registered trademark and the other product names are the trademarks of SPSS Inc. for

its proprietary computer software. No material describing such software may be produced or

distributed without the written permission of the owners of the trademark and license rights in the

software and the copyrights in the published materials.

The SOFTWARE and documentation are provided with RESTRICTED RIGHTS. Use,

duplication, or disclosure by the Government is subject to restrictions as set forth in

subdivision (c)(1)(ii) of The Rights in Technical Data and Computer Software clause at

52.227-7013. Contractor/manufacturer is SPSS Inc., 233 South Wacker Drive, 11th Floor,

Chicago, IL 60606-6307.

General notice: Other product names mentioned herein are used for identification purposes only

and may be trademarks of their respective companies.

This product includes software developed by the Apache Software Foundation

(http://www.apache.org).

Windows is a registered trademark of Microsoft Corporation.

UNIX is a registered trademark of The Open Group.

DataDirect, INTERSOLV, SequeLink, and DataDirect Connect are registered trademarks of

MERANT Solutions Inc.

Clementine® Application Template for Analytical CRM in Telecommunications 7.0

Copyright © 2002 by Integral Solutions Limited

All rights reserved.

Printed in the United States of America.

No part of this publication may be reproduced, stored in a retrieval system, or transmitted, in any

form or by any means, electronic, mechanical, photocopying, recording, or otherwise, without the

prior written permission of the publisher.

Contents

1

Introduction to Clementine Application

Templates

6

What Is a Clementine Application Template? . . . . . . . . . . . . . . . . 6

2

Introduction to the Telco CAT

8

Overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 8

Introduction to Analytical CRM . . . . . . . . . . . . . . . . . . . . . . . . . 9

Life Cycle Model for CRM . . . . . . . . . . . . . . . . . . . . . . . . . . . . 9

Why Analytical CRM? . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 10

3

Getting Started

11

Overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 11

CAT Data . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 11

CAT Streams . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 12

CAT Modules . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 12

How to Use the CAT Streams . . . . . . . . . . . . . . . . . . . . . . . . . 14

Notes on Reusing CAT Streams . . . . . . . . . . . . . . . . . . . . . . . 15

3

4

Working with CAT Streams

16

Overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 16

Module 1--Churn Application . . . . . . . . . . . . . . . . . . . . . . . . . 16

P1_aggregate.str-Aggregate Call Data and Merge with Customer Record. . . .

P2_value.str--Customer Value and Tariff Appropriateness . .

E1_explore.str--Visualize Customer Information and Value . .

E2_ratios.str--Visualize Derived Usage Category Information

P3_split.str-Derive Usage Category Information and Train/test Split. . . .

M1_churnclust.str-Customer Clustering and Value/churn Analysis . . . . . . . .

M2_churnpredict.str--Model Propensity to Churn . . . . . . .

D1_churnscore.str--Score Propensity to Churn . . . . . . . .

.

.

.

.

.

.

.

.

.

.

.

.

19

21

23

26

. . . 29

. . . 31

. . . 34

. . . 36

Module 2--Cross-Sell Streams . . . . . . . . . . . . . . . . . . . . . . . . 37

P4_basket.str--Produce Customer Product Basket Records . . .

P5_custbasket.str--Merge Customer, Usage, and Basket Data .

E3_products.str--Product Association Discovery . . . . . . . . .

M3_prodassoc.str--Customer Clustering and Product Analysis .

E4_prodvalue.str--Product Groupings Based on Customer Value

M4_prodprofile.str--Propensity to Buy Grouped Products . . . .

D2_recommend.str-Product Recommendations from Association Rules . . . . . . . .

Appendix A

Telco CAT Data Files and Field Names

.

.

.

.

.

.

39

40

41

43

45

47

. 48

51

Raw Data Files for Modules 1 and 2 . . . . . . . . . . . . . . . . . . . . . 51

Intermediate Data Files for Module 1 . . . . . . . . . . . . . . . . . . . . 53

Intermediate Data Files for Module 2 . . . . . . . . . . . . . . . . . . . . 57

4

Appendix B

Using the Data Mapping Tool

60

Mapping New Data to a CAT Stream . . . . . . . . . . . . . . . . . . . . .60

Mapping Data to a Template .

Mapping between Streams. .

Specifying Essential Fields . .

Examining Mapped Fields. . .

.

.

.

.

Index

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.61

.63

.64

.65

67

5

Chapter

1

Introduction to Clementine

Application Templates

What Is a Clementine Application Template?

A Clementine Application Template (CAT) is a collection of materials for use with

the Clementine data mining system that illustrates the techniques and processes of

data mining for a specific application. These materials are centered around a library

of Clementine streams (or stream diagrams) that illustrate the data mining techniques

commonly used in the selected application. The purpose of these streams is for you to

study and reuse them in order to simplify the process of data mining for your own

similar business application.

Reusing an application template is simply a matter of fit. Although every data

mining application is different, there are many techniques commonly used throughout

data mining (such as propensity modeling, clustering, and profiling). This means that

certain data mining processes, such as clustering, will apply to almost all data mining

projects. However, if your industry is similar to the one used for a particular CAT, you

will likely be able to use even more of the illustrated techniques. For example, when

a Clementine stream is constructed to perform a particular task in an application, there

is often enough structural similarity to allow reuse of the stream in a similar

application. Reusing the stream can save you significant amounts of time and effort.

Clementine application templates are designed to make use of this similarity

between related data mining projects by providing sample projects to guide you.

Within a particular application type (such as customer relationship management, or

CRM, for the telecommunications industry), there are many standard tasks and

analyses that you can reuse if applicable to your data mining project.

6

7

Introduction to Clementine Application Templates

A Clementine application template consists of:

n A library of Clementine streams.

n Synthetic data that allow the streams to be executed for illustrative purposes

without modification. CAT data are supplied in flat files to avoid dependence on a

database system. The data used in the CATs may be classified into two types: raw

and intermediate. Raw data files are the starting point of each CAT. Intermediate

files can be generated by the preprocessing streams supplied.

n A user’s guide that explains the application, the approach and structure used in the

stream library, the purpose and use of each stream, and how to apply the streams

to new data.

Chapter

2

Introduction to the Telco CAT

Overview

The Telco CAT is a Clementine application template for analytical customer

relationship management (CRM) in the telecommunications industry. It illustrates the

data mining techniques applicable to churn management and cross-selling described

below:

n Preprocessing. This phase of analytical CRM, or data mining, handles the merging

and aggregation of customer and call data, the derivation of customer value and

tariff-related fields, and the preprocessing steps for producing "basket-style"

customer product data.

n Exploration. This phase uses a wide range of exploratory techniques, such as

histograms and distribution charts, to understand the overall properties of the data,

including the factors that influence customer churn and product purchase.

n Modeling and analysis. This phase illustrates the use of clustering and profiling to

understand customer churn and assist targeted cross-selling. Additionally,

predictive techniques are used in Module 1 to predict the occurrence of churn, and

association discovery is used in Module 2 for cross-selling.

n Deployment. The final phase illustrates the use of the Clementine Solution

Publisher to deploy churn prediction and cross-sell recommendation techniques.

The techniques used in these data mining phases help to answer the business questions

typically encountered in the telecommunications industry. The Telco CAT will help

you to see how this is accomplished.

8

9

Introduc tion to the Telco CAT

Introduction to Analytical CRM

In the modern business world, customer focus is increasingly important. The unit of

business activity has become the customer relationship as a whole, rather than the

individual sale. The concept of customer relationship management arises from the fact

that to be successful, businesses must manage not only the processes of production and

distribution but also the customer relationships themselves.

Customer relationship management (CRM) has two components--an operational

component and an analytical one.

n Operational CRM ensures that the operational aspects of the business treat the

customer relationship as a unit--for example, by making all of the information

about interactions with a particular customer available at every customer touchpoint.

n Analytical CRM provides a greater understanding of customers, both individually

and as a group, allowing the business to meet the needs of the customer at all levels,

from individual transactions to overall strategy.

Data mining is at the heart of analytical CRM because it is used to uncover the hidden

meaning in customer interactions, allowing businesses to understand their customers

and predict what they will do.

Life Cycle Model for CRM

To understand how CRM benefits a business, take a closer look at the nature of the

customer relationship. A customer relationship is like a story with a beginning, a

middle, and an end. At each point in the story, or "customer life cycle," CRM can focus

on a particular goal:

n At the beginning, to attract more and more-profitable customers.

n In the middle, to maximize the value of each customer to the business.

n At the end, to delay or reduce the loss of valuable customers.

These goals are summarized in the following graph:

10

Chapter 2

Figure 2-1

Customer Life Cycle, Value, and CRM

Why Analytical CRM?

Data mining for CRM, also known as analytical CRM, can assist a business in

achieving all of the benefits discussed in this guide--better customer acquisition, better

cross-selling, and better customer retention. Specifically, analytical CRM can help

your business in the following ways:

n Improved customer acquisition through an increased understanding of customer

segments and value. Specific segments, sometimes of a particularly high value, can

be targeted in campaigns.

n Improved cross-selling through a better understanding of customer segments and

their relationship to product purchase. Knowledge of customers allows you to

understand what they are likely to buy.

n Improved customer retention by understanding when and why customers are likely

to leave the customer base, enabling you to take remedial action where appropriate.

The Telco CAT illustrates analytical CRM to achieve all of these benefits through

specific applications of data mining.

Chapter

3

Getting Started

Overview

The Telco CAT is structured into two modules or "virtual applications" that explore

Clementine operations typical to the telecommunications industry.

n Module 1 is a churn application designed to increase customer retention.

n Module 2 is a cross-sell application that processes product information and merges

it with customer information from Module 1 for more targeted cross-selling.

Each application consists of a number of streams that work either from raw data files

or from intermediate files produced by the preprocessing streams.

CAT Data

The data provided with the CAT are based on a fictitious telecommunications

company; the data is entirely synthetic and bears no relation to any real company.

The raw data files are:

custinfo.dat

Basic customer information

cdr.dat

Call data aggregated by month

tariff.dat

Details of the tariff scheme in use

products.dat

Table of products or services purchased by each

customer

11

12

Chapter 3

The Telco CAT also contains six intermediate data files produced by stream operations.

In several cases, these intermediate data files are then used in other stream operations.

CAT Streams

The two modules of the Telco CAT consist of 15 streams. The streams are organized

according to the Cross-Industry Standard Process for Data Mining (CRISP-DM)

methodology and contain a prefix indicating the appropriate data mining phase. For

example, P1_aggregate.str is the first stream used in the preprocessing phase to

aggregate data. As illustrated in the table below, the prefix codes used for Telco CAT

streams are: P - preprocessing, E - exploration and understanding, M - modeling, and

D - deployment.

CRISP-DM Phase

Prefix

Code

Module 1

Streams

Module 2

Streams

Total

Streams

Data Preparation

P

3

2

5

Data Understanding

E

2

2

4

Modeling & Evaluation

M

2

2

4

Deployment

D

1

1

2

8

7

15

Totals

CAT Modules

The Telco CAT is grouped into two modules that illustrate the types of applications in

analytical CRM for telecommunications.

Module 1

Module 1 is a churn application specific to the telecommunications industry. It

consists of eight streams used to explore several data sets, prepare them for modeling,

and create and deploy churn prediction models. This module uses several steps to move

from the exploration of data to modeling and deployment:

Preprocessing streams produce a merged and augmented data file, cust_call_plus.dat,

which describes both customers and their behavior.

13

Getting Started

Several more streams perform a number of explorations and clustering exercises on

cust_call_plus.dat.

Additional preprocessing occurs and the data set is split into training and test data files

(train and test) used for predictive modeling.

Several clustering and association rule models are built in the modeling stream.

Finally, a deployment stream illustrates the deployment of a churn prediction model

that runs directly from the raw data. First, it performs all the required preprocessing

and then scores the customer base on propensity to churn.

For more information, see "Module 1--Churn Application" in the chapter Working with

CAT Streams.

Module 2

Module 2 is a cross-sell application specific to the telecommunications industry. It

uses seven streams to perform product analysis and cross-sell recommendations. This

module uses the sequence of explorations and operations listed below to produce a

recommendation model:

A preprocessing stream runs from the raw "till-roll" data (products.dat) that lists the

separate purchases made by each customer. This stream then produces a "basket" form

of the data with one record per customer (cust_prod.dat).

Further preprocessing merges the basket data with the customer/behavioral data from

Module 1 (churn application) to produce a final preprocessed file (cust_call_prod).

Various explorations and modeling exercises are performed on the preprocessed data.

Unlike those of the churn application, the cross-sell streams often make direct use of

the raw data.

As a final step, a deployment stream illustrates the techniques of product

recommendation using an association model.

For more information, see "Module 2--Cross-Sell Streams" in the chapter Working with

CAT Streams.

14

Chapter 3

How to Use the CAT Streams

There are two ways to use the streams that ship with the CAT:

n Use streams as examples or illustrations of techniques to study as you build your

own business-specific application. Simply load the streams into Clementine,

execute them on the data provided with the CAT, and examine their composition

using the stream information provided in this guide.

n Use the CAT streams as prepackaged components that you can attach to your

existing data. With the minor modifications detailed in this guide, you can use the

templates directly for your own data mining applications.

To determine which method is better for your business needs, address the

questions below.

How well does the CAT match your technical situation?

To determine a technical match, you should address issues such as:

n Data format

n Organization of the database

n Overlay between the attributes used in the CAT data and those available from

your data

It is not necessary that the match between the CAT data and your data be exact before

the streams can be reused. For example, additional customer information, such as

postal code, may be used without any need to change the stream. On the other hand,

the inclusion of new usage categories that are used in various preprocessing steps,

would require minor changes to some streams (for example, the addition, deletion, or

modification of Derive nodes). A completely different organization of data in the

database might require significant restructuring of the streams or the addition of new

preprocessing streams to bring the data organization into line with that of the CAT.

How well does CAT answer your business questions?

To answer the second question, you should determine whether the specific questions

addressed by the CAT match your business questions, such as What is the relationship

between churn and dropped calls? You may have business reasons for believing that

this relationship is not relevant to your specific situation, in which case, you could omit

15

Getting Started

this exploration as you use the streams. On the other hand, you may want to address

other business questions that are not considered in the Telco CAT. In this case, you

would want to supplement the CAT with additional streams of your own. As a general

rule, the greater the divergence of your business questions from those addressed in the

CAT, the greater the likelihood that streams will need to be modified or used for

illustration rather than reuse.

Notes on Reusing CAT Streams

In general, the CAT streams can be reused to perform the same function with broadly

similar data. The Data Mapping tool reduces the need for hand editing when reusing

streams; however, in some cases, hand editing is necessary.

For more information, see "Mapping New Data to a CAT Stream" in the chapter

Using the Data Mapping Tool.

When reusing streams, you should also consider the following:

n In Server mode, the file paths for Source nodes may not be valid by default. You

may need to specify a different path in the Source node dialog box.

n When publishing a stream, be sure to check that the output file path is not set to the

$CLEO directory.

Chapter

4

Working with CAT Streams

Overview

Now that you have had an introduction to data mining in the telecommunications

industry, you are ready to go into greater detail. In this chapter, you can examine indepth the streams of each module. You can see how the data are prepared and how the

models are built. Read on for a closer look at how the Telco CAT works.

Module 1--Churn Application

The streams in Module 1 illustrate a churn prediction data mining application. As with

any data mining application, there are data preparation and exploration phases.

However, the core propensity modeling takes three primary approaches:

n Cluster the customers and look for high-churn clusters.

n Build rules or profiles that describe those customers likely to churn.

n Build scoring models indicating the degree to which a customer is similar to those

who have churned.

The first approach is illustrated in M1_churnclust. The second and third approaches

are shown in M2_churnpredict. Any of these approaches produce models that can be

deployed in a churn prediction application. In this case, deployment is illustrated

using a neural net scoring model (in the stream D1_churnscore).

16

17

Working with CAT Streams

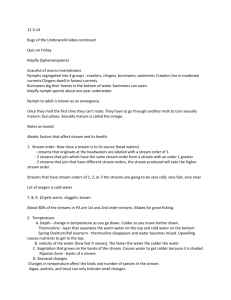

The following diagram illustrates how the streams fit together to comprise the churn

application.

Figure 4-1

Data files and streams in Module 1

Telco CAT Module 1 - Churn Application

E1_explore.str

P1_aggregate.str

Cust_calls.dat

P2_value.str

Cust_call_plus.dat

E2_ratios.str

P3_split.str

M1_churnclust.str

Cust_info.dat

Cdr.dat

Tariff.dat

D1_churnscore.str

Modeling

Train data

Test data

M2_churnpredict.str

Key:

Data

Stream

P1_aggregate. The first preprocessing stream takes two raw data files (custinfo and cdr)

and produces an intermediate file (cust_calls.dat). Three preprocessing steps are

performed:

n Aggregate the monthly call data into six monthly totals.

n Produce averages and various combined fields from these totals.

n Merge the customer information with this aggregated call data.

P2_value. The second preprocessing stream merges the intermediate file cust_calls and

the tariff details file to produce a new intermediate file cust_call_plus.dat. This new file

deals with the higher-level issues of customers’ total spending and the appropriateness

of the tariffs. The stream also compares what each customer spends with what they

would have spent on the "next higher" tariff and flags those who would be "better off"

on a higher tariff.

18

Chapter 4

E1_explore1. A number of visualizations are performed in this stream that examines the

churn indicator against a number of attributes considered likely to be relevant to churn

behavior. The goal is to get a picture of the "shape" of the data before more detailed

analyses are undertaken.

E2_ratios. This stream performs explorations that require preprocessing. These

explorations fall into five categories:

n Usage--How do usage bands, unused phones, and gender relate to churn?

n Ratios--How do the relations between different usage categories relate to churn?

n Handset--Do different types of handsets have different churn patterns?

n Dropped calls--How does the rate of dropped calls relate to churn?

n Tariff--Do tariff and tariff appropriateness have a relation to churn?

M1_churnclust. At this point in the module, business questions might focus on the

relationship between certain churn and spend groups. This stream attempts to answer

some of these questions as it produces clustering models and examines the relation of

the discovered clusters to churn and customer value (total spending). Then, it produces

rule-based profile models of the clusters. Characterizing the relevant clusters will allow

churn reduction campaigns to be targeted accurately. Profiling the high-churn groups

will also help you understand the reasons for churn. The derived fields from the

explorations in E2_ratios are included in this analysis via the SuperNode called

added_fields.

P3_split. This stream prepares the augmented customer data, cust_call_plus, for

predictive modeling. The fields from the explorations in the stream E2_ratios are

added by a SuperNode, and then the data is split randomly in half as training and test

data sets.

M2_churnpredict. This is the main predictive modeling stream for the churn

application. It builds a number of different predictive churn models using the training

data and then compares their performance on the test data.

D1_churnscore. This stream illustrates the deployment of scoring models using a neural

net scoring model as an example. It is important to note that, because this stream will

be deployed to run outside the context of Clementine, it must perform all of the

preprocessing from the raw data independent of any intermediate files.

19

Working with CAT Streams

P1_aggregate.str--Aggregate Call Data and Merge with Customer Record

This is the first data preparation step for churn analysis. This stream takes monthly call

data and aggregates it into six-month totals and then merges it with static customer

information.

Figure 4-2

Stream diagram for P1_aggregate.str

Stream Notes

Telco data is usually segmented into several different tables. For the purpose of data

mining, these tables need to be combined into a single one. In this example, there are

two types of data: CDR data (call data records) and customer information (length of

service, tariff, age, handset, etc.).

CDR data usually exists at several levels of aggregation:

n The lowest level is individual calls, which are usually too fine-grained for data

mining purposes.

n The next level is monthly aggregate calls by type (peak, off-peak, weekend, and

international). This type of data is often used for billing purposes.

The Telco CAT data set includes CDR data at the monthly level and contains call

minutes and the number of calls for each call type. This data is aggregated further to

give a six-month average that smooths out monthly fluctuations and is a more reliable

indicator of usage.

20

Chapter 4

The SuperNode Avgs & Counts derives additional fields for average call times

(AvePeak, AveOffPeak, AveWeekend, etc.). From this analysis, you can see that clients

who make longer calls and possess certain other attributes form a significant segment

of the customer base.

Figure 4-3

Detailed view of SuperNode Avgs & Counts

Also derived in the SuperNode are All_calls_mins (sum of all minutes used for all call

types) and a total and average length for national calls (all call types except

international). These will be used to derive usage ratios in the exploration streams.

Figure 4-4

Deriving All_calls_mins

21

Working with CAT Streams

P2_value.str--Customer Value and Tariff Appropriateness

During the second phase of data preparation, this stream adds tariff information to

allow calculation of total customer revenue and value. It also calculates a tariff

appropriateness flag.

Figure 4-5

Stream diagram for P2_value.str

Stream Notes

In this stream, the previously merged customer/call information is merged again with

the tariff details table so that each customer is tagged with the details of the type of

tariff they are on.

The SuperNode Tariff approp then calculates the several factors associated with

cost. Cost has two elements: the fixed cost of the tariff and the cost of the calls. All the

tariffs have some free minutes of call time, so customers pay only for calls over and

above their free minutes. International calls are not included in free minutes.

Call_cost_per_min is a calculation of the cost of all national calls before free minutes

divided by the total number of national minutes. The higher the proportion of off-peak

and weekend calls, the smaller this number will be.

22

Chapter 4

The SuperNode also calculates whether the user is on the correct tariff. In other words,

you could ask Would their cost be less on the tariff above? The answer is calculated

by comparing the difference between the tariff fixed charges with the amount spent on

(nonfree national) calls. The calculation assumes that the general type of tariff (Play

or CAT) is always correct but that the customer might be on the wrong tariff within

that type (for example, on CAT 50 when he or she should be on CAT 100). The

SuperNode also creates "usage bands" so that usage can be categorized for certain

types of analysis.

Figure 4-6

Customer usage bands for National mins

By examining the graphs, you can learn that customers flagged as high for their tariff

are more likely to churn.

23

Working with CAT Streams

Figure 4-7

Distribution of tariff appropriateness overlaid with churn

E1_explore.str--Visualize Customer Information and Value

Data exploration is the first step in a churn analysis. This stream shows typical

exploratory analyses on both raw and augmented data.

Figure 4-8

Stream diagram for E1_explore.str

24

Chapter 4

Stream Notes

The upper part of the stream shows analysis of attributes from the raw data. The lower

part looks at aggregated or derived fields.

Throughout the exploration phase, a range of indicator fields is examined. In each

graph, the fields are overlaid with the churn flag to determine if there is a simple

relationship between the variable and churn. Typically, what you look for here is an

increase or decrease in churn behavior that might indicate different customer segments.

For example, a histogram might reveal a trend or a specific band where churn is

stronger. Similar to distributions, these values might be associated with a higher churn

rate than others.

Many of the churn segments in this module are characterized by a combination of

several variables. Typically, single variable graphs like those used in this stream do not

show any obvious trends. In this particular data set, however, you can see some

patterns emerge.

The following list describes the conclusions drawn from several of the graphs in

this stream:

Age. In general, younger people tend to churn at a higher rate, and this is reflected in

the data set.

L_O_S (length of service). Churn often occurs just after a contract expires. The key

period in this data set is 12-15 months.

Handset. The cost to the customer of a handset is often subsidized. In general, service

providers want customers to keep their handset for at least a year to recoup this cost.

People with older handsets tend to churn because it is often a cheap way to upgrade

their handsets. People with high-tech handsets may want to upgrade to the latest

version as soon as it is available. For high-tech handsets, this period is normally less

than six months. In this data set, the handsets have different product codes. The hightech handsets are ASAD with the larger version number being the newest.

Dropped calls. This graph indicates service quality (although it can also be related to

handset problems). Clients with high dropped calls tend to churn.

25

Working with CAT Streams

Figure 4-9

Relation of dropped calls to churn

Tariff. Some tariffs may be more vulnerable to churn than others because competitors

offer a more attractive package for this usage segment. Low-cost users in the cheapest

tariffs tend to churn more as they find that even their current tariff is too expensive.

Total_Cost (the total spending of the customer). Low-spending customers tend to churn

more. Call cost without tariff (actual call cost) reveals this pattern even more strongly

(this excludes international calls). Usage also reveals this trend more clearly in the

usage bands than in the histogram (All_call_mins).

Usage fields. These fields are worth examining in separate graphs in order to check for

trends in usage segments related to churn. The presence of trends may depend on

particular tariff structures compared to competitors and type of call (for example,

international, peak, off-peak, weekend).

The fields examined in this stream are those one might examine in any churn

application. As data exploration shows, not all of the fields have identifiable patterns,

but some do. These relationships will help you determine the next step for your data

mining project.

26

Chapter 4

E2_ratios.str--Visualize Derived Usage Category Information

This stream performs deeper data exploration by adding high-level attributes to the

data set that enable the identification of market segments with a relation to churn.

Figure 4-10

Stream diagram for E2_ratios.str

Stream Notes

One way of characterizing churn is to partition the customer base into usage segments

and then analyze these for propensity to churn. Deriving higher-level attributes helps

this process and enables simpler rules that describe the segments and predict churn.

The upper right part of this stream derives a set of usage ratios that can be used to

describe customers in terms such as high offpeak calls when a large proportion of their

calls are off-peak.

Four ratios are derived:

Peak ratio

Peak minutes to national minutes

OffPeak ratio

Off-peak minutes to national minutes

Weekend ratio

Weekend minutes to national minutes

Nat-Internat ratio

International minutes to national minutes

27

Working with CAT Streams

The ratios show the proportion of all calls by those in a particular category (rather

than ratios between categories). This method avoids the distortion caused by very

low numbers when "pure" ratios are used. Examining the graphs for these ratios,

you can see:

n In general, the relation of these ratios to churn will depend on the tariff structure

and the competitive environment. Some tariffs favor peak or off-peak calls.

n In this data set, the OffPeak ratio is related to tariff and to high-churn

customer segments.

Figure 4-11

Graph of weekend ratio overlaid with churn

The upper left part of the stream, as viewed in the stream canvas, explores the

phenomenon of no usage. A no usage flag identifies those customers who have not

used their phones in the period covered by the data. This segment has a higher

propensity to churn, with the exception of people who use their phones for emergency

purposes only. The Select node called No Usage explores this segment in more detail.

The lower left part of the stream examines the relation of tariff and the tariff

appropriateness indicator to churn.

28

Chapter 4

The middle right part of the stream examines the churn properties of different

handsets. The distribution of handsets shows that some handsets are particularly

associated with churn. The churn score and aggregate branch of the stream calculates

a churn score for each handset (the average churn fraction) and ranks handsets in order

of score.

Figure 4-12

Distribution of churn with handset type

The lower right part of the stream examines dropped calls. You can explore the

Dropped_calls histogram and generate a Derive node to flag records with a high

number of dropped calls. The resulting distribution graph, high Dropped calls, shows

the increased propensity to churn in this group.

The derived fields in this stream will be included in the next preprocessing stage.

29

Working with CAT Streams

P3_split.str--Derive Usage Category Information and Train/test Split

This stream prepares the data for modeling. It adds the additional fields derived when

exploring the data and randomly splits the data into a test and training set.

Figure 4-13

Stream diagram for P3_split.str

Stream Notes

The SuperNode added fields adds the higher level attributes derived in e2_ratios.

Figure 4-14

SuperNode stream segment

The Derive node called Split generates a random number (either 1 or 2). This field is

used to partition the data set into training and test subsets that are written into separate

files. These files are used for modeling in M1_churnclust.str.

30

Chapter 4

Figure 4-15

Derive node generating a random number

The stream also contains a Type node that can be saved and reused in the predictive

modeling stream. This step is necessary to ensure that the Type node in use for

modeling has been instantiated with all the data (and not just the training subset).

31

Working with CAT Streams

M1_churnclust.str--Customer Clustering and Value/churn Analysis

This stream clusters the data-producing customer segments using two different

clustering techniques. The resulting clusters are analyzed for value and

propensity to churn.

Figure 4-16

Stream diagram for M1_churnclust.str

Stream Notes

Clustering is an alternative to predictive analysis and can give you insight into the

"natural" segments in the data. For example, if high-churn clusters can be identified,

business actions such as special offers might be tailored to that segment. Clustering can

also be used for value analyses such as identifying high spending clusters and crosssell opportunities.

The SuperNode added fields adds the attribute fields that were derived in

e2_ratios. Two clustering techniques are then used: Kohonen and K-means. The

32

Chapter 4

upper part of the stream analyzes the Kohonen clustering, and the lower part

analyzes the K-means clustering.

The Kohonen network produces a two-dimensional grid of clusters. The Derive

node Cluster No labels these fields, making it possible to analyze individual clusters.

The top branch of the stream adds a churn score (either 0 or 1) and then aggregates to

show the average churn per cluster. These clusters are then ranked and displayed in the

table called churn.

Figure 4-17

Table illustrating clusters ranked by churn score

A related branch of the Kohonen network (ending in the table called value) calculates

the average value per cluster and ranks the clusters in order to identify high-spending

clusters. The relation of the clusters to churn and value is also examined by

visualization. Another related branch uses C5.0 rule induction to create a ruleset that

profiles the clusters. This model is called Cluster No.

Comparison of the value, churn, and profile associated with particular clusters can

give you detailed insight into the customer base. For example, cluster 11 is a highchurn, medium-value cluster associated with high-usage males with certain tariffs

and handsets.

33

Working with CAT Streams

Figure 4-18

Clusters segmented by customer value

The lower part of the stream performs a similar analysis for a K-means cluster model.

The relationship of the clusters to value and churn are explored, the value and churn

cluster rankings are calculated, and the clusters are profiled using C5.0.

34

Chapter 4

M2_churnpredict.str--Model Propensity to Churn

This is the main stream for predictive churn modeling. It builds several models based

on the training data and evaluates them on the test data set.

Figure 4-19

Stream diagram for M2_churnpredict.str

Stream Notes

This stream builds four different models that predict churn:

n C5.0 ruleset

n C&RT decision tree

n Logistic regression model

n Neural network

The C5.0, C&RT, and logistic regression models are categorical in that they make a

yes/no prediction for churn. The neural network is a scoring model because the churn

flag is replaced by a number (0.0 or 1.0, calledchurn score) used as the prediction

35

Working with CAT Streams

target. The neural network thus predicts churn on a continuum between 0 and 1. The

C5.0 and C&RT predictions are also converted into scores between 0 and 1 using the

confidence values (visualized in the histograms Score C and Score R). Logistic

regression models produce probability fields that can be used directly for scoring.

When using multiple models, you might ask How do I know which model to

select for scoring?

To answer this question, you can compare the performance of the three categorical

models using the Analysis node in the lower stream and the nearby evaluation chart

that compares the gains curve of all three models. The performance of the neural

network is analyzed separately in the lower part of the stream, but the performance of

all the models can be compared in the lower evaluation chart.

Figure 4-20

Evaluation chart comparing all four models

A second issue to consider is the likely value of the clients that may churn. The plot of

Total_Cost v. $N-Churnscore is useful for devising a campaign matrix. The first clients

to contact would be those with high value and high score (most likely to churn)

followed by high value and medium score, and medium value and high score.

At this point, you have completed all the data preparation and modeling for Module 1

and should have enough information to facilitate decision making. However, if you

would like to deploy these streams independently of the Clementine application, read

on for more information.

36

Chapter 4

D1_churnscore.str--Score Propensity to Churn

This is an example model deployment stream. Starting from raw data, it performs all

the necessary preprocessing to score customers using the neural network model built

in stream M2_churnpredict. This stream can be "published" using the Clementine

Solution Publisher, producing a deployable scoring application.

Figure 4-21

Stream diagram for D1_churnscore.str

Stream Notes

The deployment stream runs independently of Clementine and therefore has to

combine all the operations needed to create the data in a single stream. Three input files

are required: cdr.dat (call data), custinfo.dat (customer information), and tariff.dat.

The lower left part of the stream duplicates the processing of p1_aggregate.str, the

upper left part duplicates p2_value.str, and the second SuperNode duplicates the fields

derived in p3_split.str. The data is then run through the neural net scoring model from

m2_churnpredict.str.

The final Publisher node is used to generate the stand-alone application.

37

Working with CAT Streams

Module 2--Cross-Sell Streams

Module 2 contains streams that illustrate the general structure of a cross-sell data

mining application. As with the Module 1 churn application, there are preprocessing

and exploration phases. The main product opportunity analysis has a slightly different

structure from that of the churn application because there is no one event or product on

which to focus the application. Instead, this module focuses on:

n Associations between products (illustrated in E3_products)

n Groupings of products (illustrated in E4_prodvalue and M4_prodprofile)

n Groupings of customers (illustrated in M3_prodassoc)

In all of these manipulations, you should focus on discoveries that will allow you to

predict purchasing patterns at a higher level than individual products. Again, any of

these approaches can be deployed to make purchase recommendations (that is, to

indicate likely purchases for individual customers). In this module, the deployment of

association rules for recommendations is illustrated in D2_recommend.str.

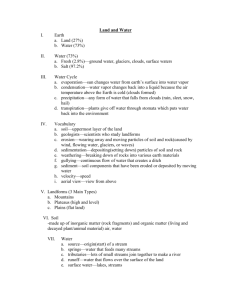

Figure 4-22

Data files and streams in Module 2

Telco CAT Module 2 - Cross-sell Application

M3_prodassoc.str

Products.dat

P4_basket.str

Cust_prod.dat

P5_custbasket.str

Cust_call_prod.dat

E4_prodvalue.str

Groupings

D2_recommend.str

Association rules

Cust_call_plus.dat

from Module 1

E3_products.str

M4_prodprofile.str

Key:

Data

Stream

38

Chapter 4

P4_basket. This stream performs a simple set-to-flag or basket transformation on the

raw till-roll style customer/product information. This process produces a basket data

format with one record per customer and one flag field per product. The stream uses

products.dat and produces cust_prod.dat.

E3_products. This stream explores the relationships between product purchases using a

web display and association rule modeling (Apriori).

D2_recommend. This stream illustrates how association rules can be deployed to

produce recommendations, or likely purchases for customers based on what they have

already purchased.

P5_custbasket. This stream combines the basket-style data produced by P4_basket.str

(cust_prod.dat) with the augmented customer/call information (cust_call_plus.dat) to

produce a new file (cust_call_prod.dat).

M3_prodassoc. This stream builds a Kohonen clustering model based on customer and

call information and then explores the relationships between the clusters and product

purchases. The goal is to discover groups of customers with propensities for certain

purchases. This stream is potentially useful for cross-selling recommendations.

E4_prodvalue. This stream explores the relationship between product purchase and total

customer spending (or value). Value-related product groups are discovered.

M4_prodprofile. This stream profiles the value-related product groups discovered in

E4_prodvalue in terms of customer and call information. The goal is to discover

profiles of customers likely to buy the products in each group. The stream illustrates

how customers predicted to buy the products in a group can be selected as targets for a

cross-selling campaign.

39

Working with CAT Streams

P4_basket.str--Produce Customer Product Basket Records

This is the initial stream of the Module 2 cross-sell application. It takes the raw product

information (one record for every product sold) and produces a single basket record for

each customer.

Figure 4-23

Stream diagram for P4_basket.str

Stream Notes

The raw product data is in the form of a till-roll, where each record links a customer

ID to one product purchased. The Set-to-Flag node takes the set of all products and

creates a flag field for each product and then aggregates by customer ID. The result is

a "basket" record for each customer, containing T in the fields for products they have

purchased and an F in the other (nonpurchased) product fields.

Executing this stream will produce a new Source node called cust_prod.dat. This

Source node is then merged with data from another source as shown in the next stream,

p5_custbasket.str.

40

Chapter 4

P5_custbasket.str--Merge Customer, Usage, and Basket Data

In this second phase of data preparation, you will merge the augmented customer call

data from Module 1 with the product data used in this module. This synthesis enables

more detailed analysis of product purchases and customer profiles.

Figure 4-24

Stream diagram for P5_custbasket.str

Stream Notes

The Merge node combines the basket data with the cust_call_plus data. This latter data

set includes both call data records and customer information, such as demographics.

The Derive node splits customer value into five bands to assist the exploration of

relationships between products and customer value or spending.

41

Working with CAT Streams

Figure 4-25

Splitting customer value into bands using a Derive node

E3_products.str--Product Association Discovery

Following the CRISP model, this stream moves from data preparation to the data

understanding and exploration phases. In E3_products.str, you can explore the

relationships within product purchases.

Figure 4-26

Stream diagram for E3_products.str

42

Chapter 4

Stream Notes

The stream explores the purchasing relationships (which products are purchased

together) in the basket data. The Web node called Products looks at pairwise

associations between products.

Figure 4-27

Web analysis of product associations

The Apriori node called Products performs a basket analysis and can confirm these

binary patterns while discovering more complex (multiproduct) purchasing patterns.

You can tune the Apriori node, changing the thresholds to control the number of

relationships found. The Web node can be used to estimate appropriate thresholds for

confidence and coverage.

43

Working with CAT Streams

M3_prodassoc.str--Customer Clustering and Product Analysis

During data mining, it is typical to build several models while exploring the data. This

stream builds a Kohonen clustering model based on call usage and customer

information and then analyzes the clusters in terms of product purchases. The purpose

is to identify cross-selling opportunities.

Figure 4-28

Stream diagram for M3_prodassoc.str

Stream Notes

In this stream, the Kohonen network is used to cluster customers based on call usage

data (behavioral data) and customer information. The rest of the stream analyzes the

characteristics of these clusters.

n The lower left part of the stream calculates the total and average customer value for

each cluster.

n The right side of the stream merges the clustered customer data with the individual

product list. This step helps you to characterize each cluster in terms of products

purchased. To identify cross-selling opportunities, you can select clusters that have

a high proportion of purchases of the product of interest and then try to sell the

product to remaining clients in that cluster who have not purchased the product.

44

Chapter 4

The Matrix node called cluster x Product breaks down the sales of each product by

cluster, thus highlighting the high-selling clusters for each product.

n The upper right branch sorts product purchases by cluster. Products are sorted in

terms of the number purchased in each cluster, showing the top-selling products for

each cluster.

The distribution graphs at the bottom of the stream canvas help to clarify the analysis:

n Cluster is a distribution of clusters overlaid with products, indicating the relative

importance of the different products in each cluster. For example, products 11 and

12 are relatively unimportant in cluster 02 but relatively important in cluster 32.

n ValueBand gives a distribution of value band overlaid by product. For

example, products 11 and 12 are relatively important in certain areas, such as

low-value bands.

n Finally, Product shows the converse relationship, the relative importance of the

different value bands to each product. In the distribution graph, the product

groupings are clearly visible. For example, products 1-4 are associated with highvalue customers.

Figure 4-29

ValueBand distribution showing products purchased in each value band

45

Working with CAT Streams

E4_prodvalue.str--Product Groupings Based on Customer Value

This stream explores the relationships between customer value bands and products

purchased. It also derives value-based product groupings.

Figure 4-30

Stream diagram for E4_prodvalue.str

Stream Notes

The upper part of the stream analyzes the distribution of products purchased in

different value bands. The Directed Web node called ValueBand x Products shows

these relationships. Four groups of product associations, revealed by this web and the

previous stream (e3_products), have been coded into the Derive nodes as flags for

groups 1-4. The relations between these groups and the value bands are then explored

in the directed web called ValueBand x Groups.

46

Chapter 4

Figure 4-31

Web map of product groups and value bands

The lower part of the stream uses the raw product records and merges them with the

total cost and value band information in order to count products per customer.

The upper branch of the stream calculates the average number of products per

customer in each of the five value bands and ranks the bands in terms of this average.

The lower branch calculates the total for each product purchased in each value band.

The aggregated data is then sorted to give a ranked list of value bands for each product.

This ranking helps answer questions such as For a given product, in which value bands

does it sell best?

47

Working with CAT Streams

M4_prodprofile.str--Propensity to Buy Grouped Products

This stream builds C5.0 rulesets showing the profiles for purchasers of the product

groups identified in e4_prodvalue.str.

Figure 4-32

Stream diagram for M4_prodprofile.str

Stream Notes

Previous streams (e3_products.str and e4_prodvalue.str) have been used to discover

product groupings or sets of products that customers purchase together. In this

stream, these groups are flagged by derived indicators in the SuperNode called

Groups. Such flagging is helpful for cross-selling, for example, when there is a threeproduct grouping and you have a number of customers with only two of the three

products. You could use this information to identify these customers and to offer

them the third product.

This stream builds profiles for three of the four identified product groupings using

customer behavioral and descriptive information. Some groups (in this case, group 1)

produce no useful profile. The three models built from this data have quite different

characters: the model for group 2 is very simple, the model for group 4 is moderately

complex, and the model for group 3 is very complex. This complexity appears to have

48

Chapter 4

an inverse relationship to the quality of the model. In other words, the simpler the

model, the more accurate (as illustrated by the Analysis node results) and better the

gains chart is (as shown by the evaluation chart).

Figure 4-33

Evaluation of different propensity models

The models, once built, can be used to predict which clients will buy a particular

product grouping. Similarly, those who have not purchased all products in their group

can be targeted for cross-selling. The lower right branch of the stream shows the

selection of targets for group 2 products.

D2_recommend.str--Product Recommendations from Association Rules

Following the CRISP-DM model, the final phase of most data mining projects is

deployment. This stream implements a recommendation engine for association rules

that can be deployed outside of Clementine. It compiles a basket of items for a client

and uses the association ruleset to recommend additional items to purchase sorted in

order of rule confidence.

49

Working with CAT Streams

Figure 4-34

Stream diagram for D2_recommend.str

Stream Notes

The upper part of the stream produces an association rule model and converts it into a

form where it can be used for product recommendations. The lower stream uses the

converted rules to recommend additional products for a user’s "basket" - the basket of

products already purchased can be provided using a User Input node. The format of the

input basket is a single record for each product purchased containing a user id and the

product. This input format allows you to make recommendations for multiple users

simply by substituting a file containing user id and product purchases (the file

products.dat provides such an example.)

There are actually three streams in this file. The top left stream generates the

association rules using Apriori. The unrefined association rule model will appear in the

Generated Models palette of the Managers window. To complete the first stage of rule

preparation, you should browse the model and select Show criteria, then export the

model as a text file (in this case assoc_rules.txt).

50

Chapter 4

The second stream converts the ruleset into a form that can be used for

recommendations. The association rules are saved in the form:

instances support confidence consequent antecedent1 ...

Components are separated by tabs. In this case, the rules are interpreted to mean that if

the basket contains the antecedents then purchasing the consequent is recommended

(provided they haven’t already purchased it). The antecedents may contains one or

more items. The stream assigns a rule number (Rule) to each rule and converts the rules

into a record for each condition. It also adds a variable (Conds) which is the total

number of conditions in each rule. The stream can process rulesets that have up to three

conditions. (Further branches would have to be added for rules with more than three

conditions.)

The stream determines whether the conditions consist of one, two or three items by

examining the antecedent fields. All rules have at least one condition (Cond1), so every

rule/record passes thround the upper branch; the second and third branches are used

only if there are second and third conditions (Cond2 and Cond3). The different

branches select these cases and produce a separate record for every condition; the rule

number (Rule) and the total number of conditions (Conds) are attached to each

condition. These condition records are appended together into the file conditions.txt,

which is used in the final stream for recommendations.

The lower stream is the recommendation engine. A user ID and basket is entered via

the User Input node. The basket items are entered in single quotes ’01’ ’02’ etc.

separated by spaces. This produces a record for each product purchased. The Derive

node Condition converts each product into the same form as it appears in the conditions

file from the association rules. The user basket is then merged with the conditions. All

conditions that appear in the user basket will be matched. The resultant "matched

conditions" data is then aggregated by user and rule number (Rule) and only those rules

where all the conditions are matched are retained (Select node matched).

The Derive node AllProducts accumulates the customer basket and selecting the last

record for a customer yields the total product basket. (Note: this will work for multiple

customers.) The total basket (AllProducts) is merged with the matched rules and those

rules which recommend products that are already in the basket are discarded. The

remaining rules are sorted on customer ID and rule confidence; the Distinct node then

discards any products that have been recommended for the same customer more then

once. The recommendations, already sorted in order of confidence, are displayed in the

table. The Clementine Solution Publisher node can be used to deploy this stream as a

standalone recommendation engine.

Appendix

A

Telco CAT Data Files and

Field Names

Raw Data Files for Modules 1 and 2

Data file: custinfo.dat

Field name

Explanation

Customer_ID

Unique customer key

Gender

Sex--male or female

Age

Age in years

Connect_Date

Date phone was "connected"--start of customer relationship

L_O_S

Length of service in months (since connect date)

Dropped_Calls

Number of dropped calls during 6-month period

Pay Method

Method of payment--either pre- or post-paid

tariff

Tariff type

Churn

Flag--churned or active

Handset

Name of handset type

51

52

Appendix A

Data file: cdr.dat

Field name

Explanation

Customer_ID

Unique customer key

Peak_calls

Number of peak-time calls in month indicated

Peak_mins

Number of peak-time call minutes in month indicated

OffPeak_calls

Number of off-peak calls in month indicated

OffPeak_mins_Sum

Number of off-peak minutes in month indicated

Weekend_calls

Number of weekend calls in month indicated

Weekend_mins

Number of weekend minutes in month indicated

International_mins

Number of international-call minutes in month indicated

Nat_call_cost_Sum

Cost of national calls (peak + off-peak + weekend) in month

indicated

month

The month described by the record--6 months supplied for each

customer

Data file: tariff.dat

Field name

Explanation

tariff

Tariff type

fixed_cost

Fixed monthly cost for this tariff type

Free_mins

Number of free (national) call minutes for this tariff type

peak_rate

Cost per minute for peak-time calls beyond free minutes for this

tariff type

OffPeak_rate

Cost per minute for off-peak calls beyond free minutes for this

tariff type

Weekend_rate

Cost per minute for weekend calls beyond free minutes for this

tariff type

International_rate

Cost per minute for international calls for this tariff type

Voicemail

Cost of voicemail service (not used)

SMS

Cost of SMS service (not used)

Data file: products.dat

Field name

Explanation

Customer_ID

Unique customer key

Product

One product bought by this customer (a customer may have

several rows)

53

Telco CAT Da ta Files and Field Names

Intermediate Data Files for Module 1

Data file: cust_calls.dat--new fields added by p1_aggregate.str

Field name

Explanation

Customer_ID

Inherited from custinfo.dat

Gender

Age

Connect_Date

L_O_S

Dropped_Calls

Pay Method

tariff

Churn

Handset

Peak_calls_Sum

Total number of peak-time calls in 6-month period

Peak_mins_Sum

Total number of peak-time call minutes in 6-month period

OffPeak_calls_Sum

Total number of off-peak calls in 6-month period

OffPeak_mins_Sum

Total number of off-peak minutes in 6-month period

Weekend_calls_Sum

Total number of weekend calls in 6-month period

Weekend_mins_Sum

Total number of weekend minutes in 6-month period

International_mins_Sum

Total number of international-call minutes in 6-month period

Nat_call_cost_Sum

Total cost of national calls (peak + off-peak + weekend)

AvePeak

Average duration of peak-time calls during 6-month period

AveOffPeak

Average duration of off-peak calls during 6-month period

AveWeekend

Average duration of weekend calls during 6-month period

National_calls

Total number of national calls in 6-month period

National mins

Total number of national minutes in 6-month period

AveNational

Average duration of national calls during 6-month period

All_calls_mins

Total number of call minutes in 6-month period (national +

international)

54

Appendix A

Data file: cust_call_plus.dat--new fields added by p2_value.str

Field name

Explanation

Customer_ID

Inherited from custinfo.dat

Gender

Age

Connect_Date

L_O_S

Dropped_Calls

Pay Method

tariff

Churn

Handset

Peak_calls_Sum

Inherited from cust_calls.dat

Peak_mins_Sum

OffPeak_calls_Sum

OffPeak_mins_Sum

Weekend_calls_Sum

Weekend_mins_Sum

International_mins_Sum

Nat_call_cost_Sum

AvePeak

AveOffPeak

AveWeekend

National_calls

National mins

AveNational

All_calls_mins

Usage_Band

A banding of national call minutes

Mins_charge

Number of chargeable national call minutes in 6-month

period (national minutes - free minutes)

Continued

55

Telco CAT Da ta Files and Field Names

Field name

Explanation

call_cost_per_min

Cost of national calls per minute ignoring free minutes

actual call cost

Cost of national calls after free minutes removed--indicates

call mix

Total_call_cost

actual call cost + cost of international calls

Total_Cost

Total call cost + fixed cost of tariff

Tariff_OK

Flag to indicate tariff appropriateness

average cost min

Total cost / all call minutes (average call cost per minute

including tariff cost and international calls)

Data files: train.dat and test.dat--new fields added by p3_split.str--also derived and

explored in e2_rations.str and m1_churnclust.str

Field name

Explanation

Customer_ID

Inherited from custinfo.dat

Gender

Age

Connect_Date

L_O_S

Dropped_Calls

Pay Method

tariff

Churn

Handset

Peak_calls_Sum

Inherited from cust_calls.dat

Peak_mins_Sum

OffPeak_calls_Sum

OffPeak_mins_Sum

Weekend_calls_Sum

Weekend_mins_Sum

International_mins_Sum

Nat_call_cost_Sum

AvePeak

Continued

56

Appendix A

Field name

Explanation

AveOffPeak

AveWeekend

National_calls

National mins

AveNational

All_calls_mins

Usage_Band

Inherited from cust_call_plus.dat

Mins_charge

call_cost_per_min

actual call cost

Total_call_cost

Total_Cost

Tariff_OK

average cost min

Peak ratio

Ratio of peak-time minutes / national minutes

Offpeak ratio

Ratio of off-peak minutes / national minutes

Weekend ratio

Ratio of weekend call minutes / national minutes

Nat-InterNat Ratio

Ratio of international call minutes / national minutes

High Dropped calls

Number of dropped calls above threshold

No usage

Client has made 0 calls in the 6-month period

57

Telco CAT Da ta Files and Field Names

Intermediate Data Files for Module 2

Data file: cust_prod.dat

Field name

Explanation

Customer_ID

Unique customer key

Product_01

For each product, a flag indicating whether the customer bought this

product (one record per customer)

Product_02

Product_03

Product_04

Product_05

Product_06

Product_07

Product_08

Product_09

Product_10

Product_11

Product_12

Data file: cust_call_prod.dat--created by p5_custbasket.str

Field name

Explanation

Customer_ID

Inherited from custinfo.dat

Gender

Age

Connect_Date

L_O_S

Dropped_Calls

Pay Method

tariff

Churn

Handset

Continued

58

Appendix A

Field name

Explanation

Peak_calls_Sum

Inherited from cust_calls.dat

Peak_mins_Sum

OffPeak_calls_Sum

OffPeak_mins_Sum

Weekend_calls_Sum

Weekend_mins_Sum

International_mins_Sum

Nat_call_cost_Sum

AvePeak

AveOffPeak

AveWeekend

National_calls

National mins

AveNational

All_calls_mins

Usage_Band

Inherited from cust_call_plus.dat

Mins_charge

call_cost_per_min

actual call cost

Total_call_cost

Total_Cost

Tariff_OK

average cost min

Product_01

Inherited from cust_prod.dat

Product_02

Product_03

Product_04

Product_05

Product_06

Continued

59

Telco CAT Da ta Files and Field Names

Field name

Product_07

Product_08

Product_09

Product_10

Product_11

Product_12

Explanation

Appendix

B

Using the Data Mapping Tool

Mapping New Data to a CAT Stream

Using the mapping tool, you can connect new data to a pre-existing stream. A

common use is to replace the source node defined in a Clementine Application

Template (CAT) with a source node that defines your own data set. The Mapping tool

will not only set up the connection but will also help you to specify how field names

in the new source will replace those in the existing template. In essence, mapping data

results simply in the creation of a new Filter node, which matches up the appropriate

fields by renaming them.

There are two equivalent ways to map data:

Select Replacement Node. This method starts with the node to be replaced. First, you

select the node to replace; then, using the Replacement option from the context menu,

select the node with which to replace it. This way is particularly suitable for mapping

data to a CAT.

Map to. This method starts with the node to be introduced to the stream. First, select

the node to introduce; then, using the Map option from the context menu, select next

the node to which it should join. This way is particularly useful for mapping to a

terminal node. Note: You cannot map to Merge or Append nodes. Instead, you should

simply connect the stream to the Merge node in the normal manner.

60

61

Using the Data Mapping Tool

Figure B-1

Selecting data mapping options

In contrast to earlier versions of Clementine, data mapping is now tightly integrated

into stream building, and if you try to connect to a node that already has a connection,

you will be offered the option of replacing the connection or mapping to that node.

Mapping Data to a Template

To replace the data source for a template stream with a new source node bringing your

own data into Clementine, you should use the Select Replacement Node option from

the Data Mapping context menu option. This option is available for all nodes except

Merge, Aggregate, and all terminal nodes. Using the data mapping tool to perform this

action helps ensure that fields are matched properly between the existing stream

operations and the new data source. The following steps provide an overview of the

data mapping process.

Step 1: Specify Essential Fields in the original source node. In order for stream operations

to execute properly, essential fields should be specified. In most cases, this step is

completed by the template author. For more information, see "Specifying Essential

Fields" below.

62

Appendix B

Step 2: Add new data source to the stream canvas. Using one of Clementine’s source

nodes, bring in the new replacement data.

Step 3: Replace the template source node. Using the Data Mapping options on the

context menu for the template source node, choose Select Replacement Node. Then

select the source node for the replacement data.

Figure B-2

Selecting a replacement source node

Step 4: Check mapped fields. In the dialog box that opens, check that the software is

mapping fields properly from the replacement data source to the stream. Any

unmapped essential fields are displayed in red. These fields are used in stream

operations and must be replaced with a similar field in the new data source in order for

downstream operations to function properly. For more information, see "Examining

MappedFields" below.

After using the dialog box to ensure that all essential fields are properly mapped, the

old data source is disconnected and the new data source is connected to the template

stream using a Filter node called Map. This Filter node directs the actual mapping of

fields in the stream. An Unmap Filter node is also included on the stream canvas. The

Unmap Filter node can be used to reverse field name mapping by adding it to the

stream. It will undo the mapped fields, but note that you will have to edit any

downstream terminal nodes to reselect the fields and overlays.

63

Using the Data Mapping Tool

Figure B-3

New data source successfully mapped to the template stream

Mapping between Streams

Similar to connecting nodes, this method of data mapping does not require you to set

essential fields beforehand. With this method, you simply connect from one stream to

another using the Data mapping context menu option, Map to. This type of data

mapping is useful for mapping to terminal nodes and copying and pasting between

streams. Note: Using the Map to option, you cannot map to Merge, Append, and all

types of source nodes.

Figure B-4

Mapping a stream from its Sort node to the Type node of another stream

64

Appendix B

To map data between streams:

Right-click the node that you want to use for connecting to the new stream.

From the context menu, select:

Data mapping

Map to

Use the cursor to select a destination node on the target stream.

In the dialog box that opens, ensure that fields are properly matched and click OK.

Specifying Essential Fields

When mapping to a Clementine Application Template, essential fields will typically be

specified by the template author. These essential fields indicate whether a particular

field is used in downstream operations. For example, the existing stream may build a

model that uses a field called Churn. In this stream, Churn is an essential field because

you could not build the model without it. Likewise, fields used in manipulation nodes,

such as a Derive node, are necessary to derive the new field. Explicitly setting such

fields as essential helps to ensure that the proper fields in the new source node are

mapped to them. If mandatory fields are not mapped, you will receive an error

message. If you decide that certain manipulations or output nodes are unneccessary,

you can delete the nodes from the stream and remove the appropriate fields from the

Essential Fields list.

Note: In general, template streams in the Solutions Template Library already have

essential fields specified.

To set essential fields:

Right-click on the source node of the template stream that will be replaced.

From the context menu, select Specify Essential Fields.

65

Using the Data Mapping Tool

Figure B-5

Specifying Essential Fields dialog box

Using the Field Chooser, you can add or remove fields from the list. To open the Field

Chooser, click the icon to the right of the fields list.

Examining Mapped Fields

Once you have selected the point at which one data stream or data source will be

mapped to another, a dialog box opens for you to select fields for mapping or to ensure

that the system default mapping is correct. If essential fields have been set for the

stream or data source and they are unmatched, these fields are displayed in red. Any

unmapped fields from the data source will pass through the Filter node unaltered, but