Inferential Statistics: Introduction to Confidence Intervals and

advertisement

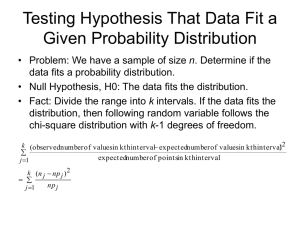

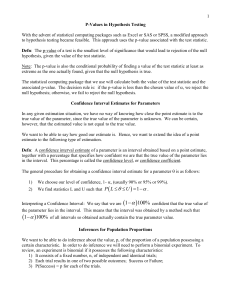

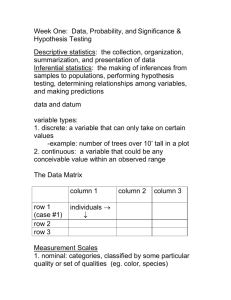

Inferential Statistics: Introduction to Confidence Intervals and Hypothesis Tests We will now look at two basic tools of inferential statistics: A basic example: Suppose a law firm specializes in class action lawsuits with large payouts for their clients. We will take the role of an outsider to the firm (either an interested client, a Revenue Canada agent, or even a criminal investigator). 1) the confidence interval (or parameter estimation) 2) the hypothesis test In this unit we will look at both tools under very simplified conditions. Once we have covered the basic ideas, we will expand our methods to more realistic situations in the course’s final units. Again, our general goal in inferential statistics is to make predictions/decisions on a population based on limited sample data. While we may not have access to the company’s internal files (with all of their payout records), we certainly should be able to collect a sample of recent claims and the size of the payouts. Suppose we have collected a random sample of 50 individual claims. The average payout for this sample is 0=$116,000. This is, of course, just a sample average, and may or may not reflect the true value of the population average :, i.e. the average payout across all clients in this firm. We are interested in the value of : (perhaps we are interested in hiring this firm to represent us, or we are investigating them for potential tax evasion). We will address the question of “What is the average payout for claims by this law firm?” in two ways: Page 1 of 26 Page 2 of 26 1. We may simply want to estimate the average payout to all clients. We have collected data and calculated a sample average 0, now we want to take this statistic and use it to make our best possible estimate of the value of :. To begin with, we will look at both of these processes under very simplified conditions. Specifically, we will assume that: i) The sample we collected was a simple random sample with no difficulties (such as non-response errors) This process is called parameter estimation. ii) The variable under investigation (here, the client payout amount) is normally distributed. 2. We may question or challenge a claim made by the law firm. Perhaps their advertising states that their average payout is at least $120,000 per client. Is the sample statistic that we collected (which, at 0=$116,000 is less than the claimed value of :$$120,000) enough to reject the company’s claim? This process is called a confidence test. iii) We know the population standard deviation, F. For this example, we will assume that F=$18,000. In rare cases these assumptions are reasonable. We certainly can take an SRS from some populations. Many variables are at least approximately normal. And we may know the value of F from previous studies (e.g. a census) and can reasonably assume that the variation has not changed much. In this case, We will deal with more realistic situations (in particular, where (ii) and (iii) do not hold), in later units. Page 3 of 26 Page 4 of 26 Estimation of Parameters using Confidence Intervals A second option in parameter estimation is to assign a range of values to a parameter. This is called an interval estimator. How do we estimate a parameter (e.g. :) from sample data (e.g. 0). The simplest option would be to simply use our found value of 0 as our “best” estimate of :. Here we call 0 a point estimator of :. In other words, we estimate that :=$116,000. e.g. we may say that the value of : is somewhere between $115,000 and $117,000. We can then make a probabilistic statement about the nature of this interval estimate. Advantages: e.g. we may say that the above interval was produced using a method that produces correct results 95% of the time. Disadvantages: We would combine the above interval and probability to say that a 95%confidence interval for the value of : is $115,000 < : < $117,000 Typical unbiased point estimators are Now, these particular two numbers were just “pulled out of thin air”. Let’s look at the process to determine the actual interval range. Parameter Statistic (Point Estimator) Mean µ 0 Standard Deviation F s Proportion p (i.e. binomial) (p hat) Page 5 of 26 Page 6 of 26 Example 1: Example 2: A sample of 50 claim payments by a law firm has mean $116,000. We will assume (as per our simple conditions) that claim levels are normally distributed and that the standard deviation for claims across all clients is F=$18,000. A sample of 40 randomly selected Canadians males has a mean height of 182.3 cm. We will assume that height is normally distributed, and we know that F=9.6 cm. The sampling distribution for the sample averages is normally distributed with Under these assumptions, construct a 95% confidence interval for the height of Canadian males. mean :0 = and standard deviation F0= Recall that, by the empirical rule, 95% of all cases lie within two standard deviations (i.e. $5091) of the mean. That is, the interval $116,000 - $5091 = $110,909 to $116,000 + $5091 = $121,091 is obtained from a process that includes the population mean in 95% of all samples. We say that the 95% confidence interval for : is $110,909 < : < $121,091 Page 7 of 26 Once again, be sure to understand the interpretation of the above statement. Our particular interval estimate may or may not actually “catch” the true value of :. However, 95% of all possible samples of size n=40 would have a sample average within 3.04 cm of :. Page 8 of 26 Example 3: Terminology: Given the same conditions as in Example 2, how would we construct a 90% confidence interval for :? The interval estimate that we construct (e.g $110,909 to $121,091) is called a confidence interval, and the two numbers are the confidence limits. Our probability is called the confidence level (if expressed as a percentage 95%) or the confidence coefficient (if expressed as a proportion 0.95), and denoted 1-". (here 1-"=.95, so "=.05, i.e. " is the area left out in the two “tails” of our distribution). We sometimes use the notation zx to indicate the z-value that has a proportion “x” of the normal curve beyond it, e.g. in a 95% confidence interval, we are looking for z.025, as we want 2.5% of the graph to be at each tail end. Note that z.025 = z"/2 Finally, the confidence interval for our population mean is constructed as 0- z"/2 F0 < : < 0+ z"/2 F0 The value z"/2 F0 is also called the maximum error of estimate (and sometimes denoted E). It measures the largest possible distance the true population mean could have from your sample mean at the given confidence level, i.e. our “margin of error”. Page 9 of 26 Page 10 of 26 Some Notes: Example 1. Don’t think that a higher confidence level is always better. As the confidence level increases, Speed limit on Highway 11 is 110 km/h. A sample of 32 cars on Highway 11 have average speed of 0=104 km/h. You may assume that the population is normal with F=34.1 km/h. With confidence level 99%, a) find the maximum error of estimate 2. You must always decide on the confidence level before your experiment. If you try out different levels and pick the one that you think fits best, you are introducing your own bias into the results. 3. A common misconception: The statement “Poll results are accurate to ±3% 19 out of 20 times” means that a 95% confidence interval has been constructed, and the maximum error of estimate was 3%. This does not mean that there is a 95% chance that the population mean will be in the given interval! b) construct the 99% confidence interval for : You can only say that 95% of all confidence intervals constructed in this manner will contain the population mean. This particular confidence interval may be way off (and you will never know)! This is a subtle, but important point. Note: this interval contains the cut-off value 110 km/h! What does this imply? Page 11 of 26 Page 12 of 26 Determining a reasonable sample size Depending on our confidence level (90%, 95%, 99%) and our desired maximum error of estimate (e.g. up to 2 units away from the mean) we can determine what a good sample size would be. Since E = z"/2 F0 i.e. E = z"/2 (F//n) we can solve this for n=(z"/2 F / E)2 Example: Suppose you wish to pick a sample of lightbulbs to estimate their mean lifetime (in hours). You wish to be 95% confident that the estimate is accurate within 20 hours. How many lightbulbs should you sample? (you can assume that the population is normal with F=74 hours). Page 13 of 26 Page 14 of 26 Hypothesis Testing - The Basics We can’t say for sure, there are two possibilities: Let us now return to our original example (the law firm client payouts). Recall that the law firm claimed that their average payout was at least :=$120,000. We took a sample of 50 claims and found that the sample average was 0=$116,000. 1) The population average is in fact not $120,000 as claimed, and our sample simply reflects this fact. Using an assumed value for F=$18,000, we previously showed that a 95% confidence level for the population average payout is $110,909 < : < $121,091. We will now consider the same situation from the point of view of the law firm’s claim. In this case we would reject the claim that the average payout (for all clients) is $120,000. 2) The population mean payout is in fact $120,000, but we happened (by chance) to pick a sample of 50 cases with lower payouts from that population. In this case we wouldn’t have enough evidence to reject the claim that the average payout is $120,000. A claim is being made on a population parameter, in this case the claim is that population average payout is at least :=$120,000. Our sample shows a lower average payout of 0=$116,000. The basic question: given that our sample average is lower, should we doubt (or reject) the company’s claim? How likely is Scenario #2? Let’s assume the population average payout really is $120,000. If we take a sample of 50 cases from this population, the resulting sampling distribution would be approximately normal with and . We can use these values to calculate how likely is it that we draw a sample of 50 cases with a sample average of $116,000 or less. Page 15 of 26 Page 16 of 26 If we find this probability is very (!) low, then we can conclude that this couldn’t reasonably have happened by chance, hence our actual mean couldn’t reasonably be $120,000 (but is likely lower). Terminology The hypothesis test is a very structured process with several steps that need to be followed precisely. We will use the following definitions: If, on the other hand, this probability is reasonable, then it is certainly possible that - even with a population mean of $120,000, we still picked a random sample with sample mean $116,000. Null Hypothesis H0: The hypothesis that the value of : remains unchanged from some value k. What is our cut-off probability, i.e. how small a probability do we allow? This could be written in one of two ways: This is entirely our choice, and is called the level of significance of our test. If we want to reject the claim if our probability drops below 5%, then our level of significance would be "=0.05 1) :=k. In this case we would reject this hypothesis if we think that : is either larger or smaller than k. This is called a two-tailed test. In other words, if we use "=0.05, we will reject the company’s claim of :=$120,000 if the probability of picking our random sample with 0=$116,000 is less than 5%. In that case, we would say that our sample average is significantly different enough from the claimed population average to reject that claim. Now calculate the probability and make your decision: ... Page 17 of 26 2) :#k or :$k. In this case we’d only reject the hypothesis if we think that : is larger (!) or smaller (!) than k. This is called a one-tailed test. In our example, the company’s claim was that payouts were at least $120,000. Hence the Null Hypothesis is H0: :$$120,000 and we will only reject the claim if we feel that : is in fact less than $120,000. Page 18 of 26 Alternate Hypothesis H1: Errors: The hypothesis that the value of : was changed, i.e. the “opposite” of H0. Note that, in reality, H0 may or may not be true. This is either written as : k, :>k, :<k, depending on the Null Hypothesis. * In our example H1: :<$120,000 (the opposite of H0: :$$120,000) “Defendant was innocent, but jury convicted them.” * Statistical Test: The process of calculating a sample statistic test-value (such as a mean) to decide whether or not there are sufficient grounds to reject a Null Hypothesis. Important: We can only reject H0 or not reject H0. We can never accept H0! (i.e. we can only answer the claim saying “we don’t believe the average payout is over $120,000” or “we have no reason not to believe your claim”. We would never answer “we believe your claimed value”. The reason for this will become apparent later.) Page 19 of 26 If H0 is indeed true (the population average is indeed $120,000) but based on our sample we reject H0 (i.e. we happened to pick 50 cases with lower payouts) then we have made a Type I Error. If H0 is false (the average payout is not $120,000) but based on our sample we do not reject H0 (i.e. we happened to pick 50 cases close to $120,000) then we have made a Type II Error. “Defendant was guilty, but jury let them go.” Note that, using the law-and-order example, a very “trigger-happy” jury would be more likely to make a Type I error, while a very “laid-back” jury would be more likely to make a Type II error. On which side would you rather fall? Page 20 of 26 Critical Values: Example Usually, we wish to avoid making a error, hence we set a low level of probability of making such an error. This cut-off level for our probability is again called our level of significance and denoted ". An insurance company states that the mean payout for automobile accidents is at least $18,500. A researcher wants to test the accuracy of this value by sampling 36 random cases. Their mean is found to be $17,585. Assuming that the population standard deviation for payouts is $2,600, test the insurance company’s claim at a significance level of "=0.05. The associated cut-off test statistic is called the critical value (for example, if we use z-scores, the z-value that would result in a probability of 5% would be our critical value). Any z-value beyond the critical value is said to be in the rejection region. The Five Step Approach: To summarize all of these ideas, we tend to go through a hypothesis test in five fairly rigid steps. Step 1: Step 2: Step 3: Step 4: Step 5: Page 21 of 26 Page 22 of 26 Example Example A researcher stated in a 2004 report that the average child watches 520 minutes of TV per week. To test whether or not this value has changed since then, the TV viewing habits of 40 children are monitored. The sample watches an average of 500 minutes of TV per week. Assuming that F=80 minutes is given, is the 2004 claim still reasonable? Perform a hypothesis test at the 0.1 level of significance. A snack food manufacturer claims that their chips bags contain at most 80g of fat. A random sample of 45 chips bags has mean fat content 80.5g (assume F=1.6g). Perform a hypothesis test at a 0.025 level of significance. Page 23 of 26 Page 24 of 26 Using P-values in Hypothesis Testing Example So far, we have found a critical point (and hence a rejection region) and have based our conclusion on whether or not a test-statistic falls into the rejection region or not. A healthy invididual has an MCV (average volume of a red blood cell) of 90 femtoliters (fl). (Note: a femtoliter is 10-15 litres). A particular chemotherapy drug is believed to cause a change in MCV levels. A sample of 88 patients has a mean volume of 92 fl. Assume a population standard deviation of 8.3 fl. No indication is made how close or how far a statistic is from the rejection region. To indicate this, we can also use a P-value: the probability of getting a sample statistic as far away from the population parameter as we did. Using "=0.01 and calculating the P-Value, test the claim that the drug causes a change in MCV levels. For example: If the population of chip bags had mean fat content of 80 g with F=1.6g, what is the probability that a random sample of size 45 has sample average 80.5g? That probability is called the P-value. In our case we found the test-statistic to be z=2.10. From the z-table we find an area of A=.9821, so .0179 of the curve lies beyond this z-value. This is our P-value, the probability of getting a sample that is as far (or further) from the population mean as we got is 0.0179. We know the rejection region contains 0.025 of the curve’s area (a onetailed test at 95% level of significance). Hence, as 0.0179<0.025 our teststatistic lies beyond the critical value, we (again) reject the nullhypothesis. Since 1.79% is not that far below 2.5%, it is however not a very “strong” rejection. In our Five Step Approach, we would calculate the p-value in Step 3. Page 25 of 26 Page 26 of 26