What is Computation? (How) Does Nature

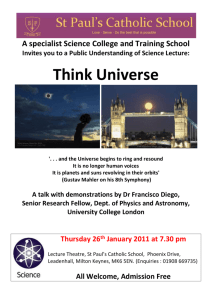
