
Task assignment in heterogeneous multiprocessor platforms∗

Sanjoy K. Baruah

Shelby Funk

The University of North Carolina

Abstract

In the partitioned approach to scheduling periodic tasks upon multiprocessors, each task is

assigned to a specific processor and all jobs generated by a task are required to execute upon the

processor to which the task is assigned. In this paper, the partitioning of periodic task systems

upon uniform multiprocessors – multiprocessor platforms in which different processors have

different computing capacities – is considered. Partitioning of periodic task systems requires

solving the bin-packing problem, which is known to be intractable (NP-hard in the strong

sense). Sufficient utilization-based feasibility conditions that run in polynomial time are derived

for ensuring that a periodic task system can be successfully scheduled upon such a uniform

multiprocessor platform.

1 Introduction

In hard-real-time systems, jobs have deadlines associated with them, and it is imperative for the

correctness of the application that all jobs complete by their deadlines. In multiprocessor real-time systems, there are several processors available upon which these jobs may execute: all the

processors have exactly the same computing capacity in identical multiprocessors, while different

processors may have different speeds or computing capacities in uniform multiprocessors.

A periodic real-time task T is characterized by an execution requirement e and a period p, and generates an infinite sequence of jobs with successive jobs arriving exactly p time units apart, and each job needing to execute for e units by a deadline that is p time units after its arrival time. A sporadic real-time task is similar, except that the period parameter now specifies the minimum, rather than exact, temporal separation between the arrival of successive jobs. A periodic/sporadic task system consists of several periodic/sporadic tasks.

∗ Supported in part by the National Science Foundation (Grant Nos. CCR-9704206, CCR-9972105, CCR-9988327, and ITR-0082866).

We restrict our attention in this paper to preemptive scheduling — i.e., we assume that it

is permitted to preempt a currently executing job, and resume its execution later, with no cost

or penalty. Different preemptive multiprocessor scheduling algorithms for scheduling systems of

periodic/ sporadic tasks may restrict the processors upon which particular jobs may execute: partitioned scheduling algorithms require that all the jobs generated by a particular task execute

upon the same processor, while global scheduling algorithms permit different jobs of the same

task to execute upon different processors (or indeed, allow a job which has been preempted while

executing upon a processor to resume execution upon the same or a different processor).

Global scheduling algorithms are predicated upon the assumption that it is relatively inexpensive to migrate the state associated with a periodic task (or an executing job) from one processor

in the platform to another during run time. If this assumption is not valid, then the partitioned

approach to multiprocessor scheduling is preferred. In this approach, the tasks comprising the

task system are partitioned among the various processors comprising the multiprocessor platform

prior to run-time; at run-time, all the tasks assigned to a single processor are scheduled upon that

processor using some uniprocessor scheduling algorithm.

This research. In previous work [3], we have studied the global scheduling of real-time systems comprised of periodic and sporadic tasks upon uniform multiprocessors. We obtained utilization-based conditions for determining whether a given system τ of sporadic and periodic tasks is successfully scheduled upon any specified uniform multiprocessor system using the Earliest-Deadline-First scheduling algorithm (EDF).

In this paper, we study the partitioned approach to scheduling systems of periodic and sporadic tasks upon uniform multiprocessor platforms. In order to completely specify a partitioned

scheduling scheme, we must specify two separate algorithms – (i) the task assignment algorithm

that determines which processor each task is assigned to, and (ii) the (uniprocessor) scheduling algorithm that executes on each processor and schedules the tasks assigned to the processor.

Once again, EDF is our choice for the scheduling algorithm — given the known optimality of EDF

upon preemptive uniprocessors, this is clearly the “best” scheduling algorithm that could be used

to schedule the individual processors once tasks have been assigned (provided that no additional

constraints are placed upon the system design – see Section 9 for a discussion). The choice of a

task assignment algorithm for partitioning the tasks among the available processors is not quite

as clear — even in the simpler case of identical multiprocessors, optimal task assignment requires

solving the bin-packing problem which is known to be NP-complete in the strong sense (and hence

unlikely to be solved efficiently). Hence, this paper focuses mainly upon the partitioning problem.

Among our contributions here are the following.

• We prove in Section 3 that the global and partitioned approaches to EDF scheduling on uniform multiprocessors are incomparable, in the sense that there are uniform multiprocessor real-time systems that can be scheduled using global EDF but not partitioned EDF, and there are (different) uniform multiprocessor real-time systems that can be scheduled using partitioned EDF but not global EDF. Hence, it behooves us to study both kinds of EDF scheduling algorithms, since different ones are more suitable for different systems.

• However, the problem of determining an optimal partitioning of a given collection of periodic

tasks on a specified uniform multiprocessor platform is intractable – NP-hard in the strong

sense (Theorem 1). This intractability result motivates the search for approximation algorithms. Accordingly in Section 4, we extend approximate bin-packing algorithms to develop

a polynomial-time approximation scheme (PTAS) for task assignment on uniform multiprocessors. This PTAS allows the system designer to trade system computing capacity for speed

of analysis – there is a parameter δ with value between 0 and 1 that the system designer gets

to choose, with the tradeoff that the partitioning algorithm runs faster for smaller δ but can

guarantee to use only a fraction δ of the computing capacity of the platform.

• While this approximation algorithm is shown to run in polynomial time for a fixed value of


δ, its run-time may still be unacceptably high particularly for on-line situations. Hence we

propose an alternate partitioning algorithm (in Section 5) and associated utilization-based

feasibility test (in Section 7) which, while merely sufficient (rather than exact), has more

desirable run-time behavior for on-line situations in the sense that much of the analysis can

be done during system design time (instead of during run-time). We provide in-depth analysis

of this new feasibility test — its run-time complexity, its error bound, etc., — and illustrate

its working by means of a detailed example (Section 8).

In Section 9, we place this research in a larger context of research being conducted at the University

of North Carolina and elsewhere, into the uniform multiprocessor scheduling of systems of periodic

and sporadic real-time tasks.

2 Model; definitions

For the remainder of this paper, we will, unless explicitly stated otherwise, assume that π = (s1, s2, ..., sm) denotes an arbitrary uniform multiprocessor platform with m processors of speeds s1, s2, ..., sm respectively, with s1 ≥ s2 ≥ s3 ≥ ··· ≥ sm. We use the notation S(π) to denote the cumulative computing capacity of all of π’s processors:

\[ S(\pi) \stackrel{\mathrm{def}}{=} \sum_{i=1}^{m} s_i . \]

The quantity λ(π) is defined as follows:

\[ \lambda(\pi) = \max_{i=1}^{m} \left( \frac{\sum_{j=i+1}^{m} s_j}{s_i} \right) \qquad (1) \]
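To make these two platform parameters concrete, the following Python sketch computes S(π) and λ(π) from a list of processor speeds. The function names total_capacity and lambda_param are illustrative only (they do not appear in the paper), and the use of exact rational arithmetic is an assumption made to avoid rounding effects.

```python
from fractions import Fraction

def total_capacity(speeds):
    """S(pi): the sum of all processor speeds."""
    return sum(speeds)

def lambda_param(speeds):
    """lambda(pi) as in Equation (1): the maximum, over all processors i,
    of (total speed of the processors slower than i) / s_i."""
    s = sorted(speeds, reverse=True)  # enforce s1 >= s2 >= ... >= sm
    return max(sum(s[i + 1:]) / s[i] for i in range(len(s)))

identical = [Fraction(1)] * 4                   # four unit-speed processors
skewed = [Fraction(8), Fraction(1), Fraction(1)]
print(total_capacity(identical), lambda_param(identical))  # 4 3
print(total_capacity(skewed), lambda_param(skewed))        # 10 1
```

For m identical processors the sketch returns λ(π) = m − 1, while a platform dominated by one fast processor yields a smaller value.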

Periodic task systems. A periodic/sporadic real-time task T = (e, p) is characterized by the two parameters execution requirement e and period p, with the interpretation that the task generates an infinite sequence of jobs of execution requirement at most e units each, with successive jobs being generated exactly/at least p time units apart, and each job has a deadline p time units after its arrival time. A system τ = {T1, ..., Tn} of periodic or sporadic tasks is comprised of several such tasks, with each task Ti having execution requirement ei and period pi. We refer to the quantity ei/pi as the utilization of task Ti. For system τ,

\[ U_{\mathrm{sum}}(\tau) \stackrel{\mathrm{def}}{=} \sum_{T_i \in \tau} (e_i / p_i) \]

is referred to as the cumulative utilization (or simply utilization) of τ, and

\[ U_{\max}(\tau) \stackrel{\mathrm{def}}{=} \max_{T_i \in \tau} (e_i / p_i) \]

is referred to as the largest utilization in τ.

We will refer to a (uniform multiprocessor platform, periodic/ sporadic task system) ordered

pair as a uniform multiprocessor system; when clear from context, we may simply refer to it

as a system.

The condition, derived in [3], for guaranteeing that periodic/ sporadic task system τ is successfully scheduled to meet all deadlines upon uniform multiprocessor platform π using the global EDF

scheduling algorithm is

\[ S(\pi) \ge U_{\mathrm{sum}}(\tau) + U_{\max}(\tau) \cdot \lambda(\pi) . \qquad (2) \]

That is, the cumulative computing capacity of the uniform multiprocessor platform must exceed

the cumulative utilization of the task system by at least the product of the platform’s λ-parameter

and the task system’s largest utilization.
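Condition (2) can be evaluated directly from the processor speeds and the task utilizations. The sketch below is one possible rendering in Python; the function name global_edf_sufficient and the example platform are assumptions introduced for illustration. Note that the test is only sufficient: a False result does not prove that global EDF misses a deadline.

```python
from fractions import Fraction

def global_edf_sufficient(speeds, utilizations):
    """Return True if S(pi) >= Usum(tau) + Umax(tau) * lambda(pi)."""
    s = sorted(speeds, reverse=True)
    capacity = sum(s)                                          # S(pi)
    lam = max(sum(s[i + 1:]) / s[i] for i in range(len(s)))    # lambda(pi)
    return capacity >= sum(utilizations) + max(utilizations) * lam

platform = [Fraction(3), Fraction(2), Fraction(1)]   # S = 6, lambda = 1
light = [Fraction(1), Fraction(1), Fraction(1, 2), Fraction(1, 2)]
heavy = [Fraction(2), Fraction(2), Fraction(3, 2)]
print(global_edf_sufficient(platform, light))  # True:  6 >= 3 + 1
print(global_edf_sufficient(platform, heavy))  # False: 6 <  11/2 + 2
```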

3 Incomparability

By requiring that all jobs of each task execute upon exactly one processor, the partitioned approach to scheduling places further restrictions upon the permissible schedules than does the global

approach. One may at first therefore assume that global EDF upon uniform multiprocessors is

at least as powerful as partitioned EDF, in the sense that any task system schedulable by partitioned EDF upon a given uniform multiprocessor platform will also be successfully scheduled upon

the same platform using global EDF. In fact, this turns out to not be true: as we show below,

there are periodic/ sporadic task systems that can be scheduled using partitioned EDF, but not

global EDF, upon a given uniform multiprocessor platform, and there are (other) periodic/ sporadic task systems that can be scheduled using global EDF, but not partitioned EDF, upon specified

uniform multiprocessor platforms. These results motivate us to further study both the global and

the partitioned approaches, since it is provably the case that neither one is strictly superior to the

other.

Lemma 1 There are uniform multiprocessor systems that can be scheduled using global EDF that

cannot be scheduled using partitioning.

□

Proof: Consider the uniform multiprocessor platform π consisting of 4 processors of computing capacities (8U − 4ε), (4U − 2ε), (2U − ε), and (2U − ε) respectively, where U is an arbitrary positive number and ε is a very small positive number (ε ≪ U). For this platform, it can be verified that

\[ S(\pi) = (8U - 4\epsilon) + (4U - 2\epsilon) + (2U - \epsilon) + (2U - \epsilon) = 16U - 8\epsilon \]

and

\[ \lambda(\pi) = \max\left\{ \frac{2U-\epsilon}{2U-\epsilon},\ \frac{(2U-\epsilon)+(2U-\epsilon)}{4U-2\epsilon},\ \frac{(2U-\epsilon)+(2U-\epsilon)+(4U-2\epsilon)}{8U-4\epsilon} \right\} = 1 . \]

Consider now the periodic task system τ comprised of 13 identical tasks, each of utilization U; it may be verified that Usum(τ) = 13U and Umax(τ) = U.

By the EDF-schedulability test of [3] (Equation 2 above), it follows that τ is successfully scheduled upon π by global EDF, since

\[ S(\pi) - \lambda(\pi) \cdot U_{\max}(\tau) = (16U - 8\epsilon) - 1 \cdot U = 15U - 8\epsilon \ge 13U . \]

However, the 13 tasks in τ cannot be partitioned among the 4 processors of π; the fastest processor can handle only 7 tasks, the next-fastest, 3, and the remaining two, one each, for a total of 12 tasks.
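The arithmetic of this proof can be checked for one concrete choice of parameters. The sketch below assumes U = 1 and ε = 1/100 (values chosen here only for illustration) and recomputes S(π), λ(π), the global EDF condition, and the number of utilization-U tasks that fit on each processor.

```python
from fractions import Fraction

U = Fraction(1)          # assumed task utilization
eps = Fraction(1, 100)   # assumed small positive number, eps << U

speeds = [8 * U - 4 * eps, 4 * U - 2 * eps, 2 * U - eps, 2 * U - eps]
S = sum(speeds)
lam = max(sum(speeds[i + 1:]) / speeds[i] for i in range(len(speeds)))

# Global EDF condition (2) for 13 tasks of utilization U each.
global_ok = S >= 13 * U + lam * U

# Under partitioning, a processor of speed s holds floor(s / U) such tasks.
tasks_per_processor = [s // U for s in speeds]

print(S == 16 * U - 8 * eps, lam)                       # True 1
print(global_ok)                                        # True
print(tasks_per_processor, sum(tasks_per_processor))    # [7, 3, 1, 1] 12
```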

Lemma 2 There are uniform multiprocessor systems that can be scheduled using partitioning that

cannot be scheduled using global EDF.

□

Proof: Let U, D denote positive real numbers. Consider the uniform multiprocessor platform π consisting of 2 processors of computing capacities 2U and U respectively, and the periodic task system τ = {T1 = (4UD, 2D), T2 = T3 = (UD/2, D)}. Task system τ is successfully scheduled upon π using partitioned EDF: simply assign T1 to the faster processor and the other 2 tasks to the slower processor.

To see that global EDF may miss deadlines while scheduling τ, consider the situation when jobs of all three tasks arrive simultaneously (without loss of generality, at time instant zero). The jobs of T2 and T3 have earlier deadlines, and are therefore scheduled first. The job of T1 needs 4UD units of execution by time 2D, which it can receive only by executing upon the faster processor (of capacity 2U) throughout the interval [0, 2D); since that processor is initially occupied by a job of T2 or T3, the job of T1 misses its deadline.

4 An approximation algorithm for task assignment

Given a uniform multiprocessor platform π = (s1 , s2 , . . . , sm ) and a periodic/ sporadic task system

τ = {T1 = (e1, p1), T2 = (e2, p2), ..., Tn = (en, pn)}, we wish to determine whether the tasks in τ can

be partitioned among the m processors in π such that all the tasks assigned to each processor are

schedulable upon that processor using the uniprocessor EDF scheduling algorithm. Unfortunately,

this problem is easily shown to be intractable:

Theorem 1 Determining whether a uniform multiprocessor system can be successfully scheduled

using partitioned EDF is NP-complete in the strong sense.

□

Proof Sketch: Reduce the bin-packing problem to the problem of partitioning on identical multiprocessors. Since identical processors are a special case of the more general uniform multiprocessors,

this implies that the more general problem is NP-hard in the strong sense as well.

The intractability result (Theorem 1 above) makes it highly unlikely that we will be able to

design an algorithm that runs in polynomial time and solves this problem exactly. If we are willing

to settle for an approximate solution, however, we show in this section that it is possible to solve

the problem in polynomial time. More specifically, we will use results proved by Hochbaum and

Shmoys [4, 5] to design a polynomial-time algorithm that, for any uniform multiprocessor system

(π, τ ) makes the following performance guarantee:

For any constant δ of our choosing satisfying 0 < δ < 1, if τ is schedulable using partitioned EDF upon a platform π′ which consists of the same number of processors as π and in which each processor is at least δ times as fast as the corresponding processor in π (i.e., if π′ = (δs1, δs2, ..., δsm)), then our algorithm will successfully schedule τ upon π.

In other words, if we are willing to “waste” a fraction (1 −δ) of each processor’s computing capacity

in π and imagine that we instead have a platform in which each processor is only δ times as fast as

its actual speed, then our algorithm successfully schedules τ on π provided τ is schedulable using

partitioned EDF upon this (imaginary) slower platform.

Although our algorithm’s performance is “approximate” in that it may incorrectly fail to schedule task systems that are not schedulable upon π′ but are schedulable upon π, its deviation from

optimality is bounded in the following sense: Any τ that turns out to not be schedulable upon π

cannot, by very definition, be successfully scheduled upon π by any algorithm. By choosing the

constant δ to be close enough to unity, we can reduce the likelihood that an arbitrary task system

falls in this category, at a cost of increased run-time complexity of the algorithm.

Bin-packing with variable bin sizes.

The problem of bin-packing with variable bin sizes can be

formulated as follows: Given a collection of n items of size p1 , p2 , . . . , pn respectively, can these items

be packed into m bins of capacity b1 , b2 , . . . , bm respectively? I.e., can a given multiset of positive

real numbers p1 , p2 , . . . , pn be partitioned into m multisets of size b1 , b2 , . . . , bm respectively?1

A PTAS for bin-packing with variable bin sizes.

Since bin-packing with variable bin sizes is

a generalization of the bin-packing problem, it, too, is NP-hard in the strong sense. Hochbaum and

Shmoys [4, 5] presented a polynomial-time approximation scheme (PTAS) for solving the problem

of bin-packing with variable bin-sizes. That is, they proposed a family of algorithms {Aε}, ε ∈ R+, such that each algorithm Aε has run-time polynomial in the size of its input (but not in 1/ε), and behaves as follows: When given input items of size p1, p2, ..., pn and bins of capacity b1, b2, ..., bm,

• if the items can indeed be packed in the bins, then Aε produces a partition of p1, p2, ..., pn into m multisets of size (1 + ε)·b1, (1 + ε)·b2, ..., (1 + ε)·bm respectively (i.e., each bin may be “over-filled” by a fraction ε); such a packing of the items into the bins is called an ε-relaxed packing;

• if Aε fails to produce an ε-relaxed packing, then no (actual) packing of p1, p2, ..., pn into the bins of capacity b1, b2, ..., bm is possible.

1 The terminology “variable bin sizes” is somewhat confusing in that one may expect that the sizes of the bins are variables (permitted to vary). However, this terminology seems to be the accepted standard in the literature and we have chosen to retain it rather than add to the confusion by introducing yet another term for this concept.

That is, Aε runs in time polynomial in the size of the input (although not in 1/ε) and provides an ε-relaxed packing whenever the input has a feasible packing. The run-time complexity of the Hochbaum-Shmoys algorithm [4, 5] is

\[ O\left( \left( 2m \, (n/\epsilon^2)^{(2/\epsilon^2 + 3)} \right) \cdot 2/\epsilon^2 \right) . \]

A PTAS for partitioning. Suppose that we are given the specifications of a uniform multiprocessor platform π = (s1, s2, ..., sm), a periodic/sporadic task system τ = {T1 = (e1, p1), T2 = (e2, p2), ..., Tn = (en, pn)}, and a constant δ < 1. Let

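\[ \epsilon \stackrel{\mathrm{def}}{=} \left( \frac{1}{\delta} - 1 \right) \]

\[ p_i \stackrel{\mathrm{def}}{=} e_i / p_i , \quad \text{for } 1 \le i \le n \]

\[ b_j \stackrel{\mathrm{def}}{=} \delta \times s_j , \quad \text{for } 1 \le j \le m \]

(In the second definition, the pi on the left-hand side denotes the size of the i’th item in the bin-packing notation used above, while the pi on the right-hand side is the period of task Ti; the item size is thus the utilization of Ti.)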

If the n items of size p1, ..., pn can be packed into bins of capacity b1, b2, ..., bm, then the algorithm Aε of Hochbaum and Shmoys described above produces a partition of p1, p2, ..., pn into m multisets of size (1 + ε)·b1, (1 + ε)·b2, ..., (1 + ε)·bm respectively. Since

\[ (1 + \epsilon) \cdot b_j = \left( 1 + \left( \frac{1}{\delta} - 1 \right) \right) \cdot (\delta \times s_j) = s_j , \]

a partitioning of the tasks in τ among the m processors can be directly obtained from this partition: if item pi is assigned to the j’th bin by Aε, then simply assign task Ti to the j’th processor. On the other hand, if algorithm Aε fails to produce such an ε-relaxed partition then it must be the case that the tasks in τ cannot be partitioned among m processors of computing capacity (δs1, δs2, ..., δsm). Thus, we can use the PTAS of Hochbaum and Shmoys described above to design an algorithm that determines in time polynomial in n and m a partitioning of the tasks in τ upon the processors of π such that each partition can be scheduled to meet all deadlines upon its processor using the preemptive uniprocessor EDF scheduling algorithm, provided that such a partitioning of the tasks of τ among m processors of speed (δs1, δs2, ..., δsm) is possible.
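The reduction from task partitioning to bin-packing with variable bin sizes amounts to a simple change of parameters. The following sketch builds the bin-packing instance for a given δ and checks that over-filling each bin by the factor (1 + ε) recovers exactly the original processor speeds. It does not implement the Hochbaum-Shmoys algorithm itself; the function name relaxed_packing_instance and the example values are assumptions made for illustration.

```python
from fractions import Fraction

def relaxed_packing_instance(tasks, speeds, delta):
    """Map tasks (e, p) and processor speeds to a bin-packing instance.

    Returns (epsilon, item sizes, bin capacities), where epsilon = 1/delta - 1,
    each item size is a task utilization e/p, and each bin capacity is delta * s_j.
    """
    epsilon = 1 / delta - 1
    items = [Fraction(e) / Fraction(p) for e, p in tasks]
    bins = [delta * s for s in speeds]
    return epsilon, items, bins

speeds = [Fraction(2), Fraction(1)]
delta = Fraction(9, 10)
eps, items, bins = relaxed_packing_instance([(1, 2), (3, 4)], speeds, delta)

print(eps)                                   # 1/9
print([(1 + eps) * b for b in bins])         # the original speeds 2 and 1
```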

5 The FFD-EDF scheduling algorithm

In Section 4 above, we described a PTAS for partitioning the n tasks of a periodic/ sporadic task

system τ among the m processors of a uniform multiprocessor platform π, which for any constant

δ < 1 is guaranteed to produce a successful partitioning provided that the tasks of τ can be

partitioned (by an optimal partitioning algorithm) among processors of speed δ times as fast as the

processors in π.

This PTAS resolves the important theoretical question of whether an approximate solution to the problem of determining partitioned EDF schedulability can be determined in polynomial time. However, it can be verified that the run-time expression for this algorithm contains

\[ 3 + 10 \times \left[ \frac{\delta}{1 - \delta} \right]^2 \]

in the exponent. Hence for reasonable values of δ, this run-time may be unacceptably high. (For example, choosing δ = 0.9, corresponding to an effective utilization of 90% of each processor’s capacity, would yield an exponent of 3 + 10 × 9², i.e., 813. Clearly, this is unreasonable for even small values of n.) Due to these considerations, it behooves us to consider alternative approaches to partitioning periodic/sporadic task systems upon uniform multiprocessor platforms, despite the polynomial-time behavior of the approach of Hochbaum and Shmoys. The approach we have adopted, and describe in the remainder of this paper, uses the first-fit-decreasing (FFD) bin-packing heuristic to partition the tasks among the processors, and then uses uniprocessor EDF to schedule the individual processors.
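How quickly the exponent quoted above grows with δ can be seen by evaluating the expression 3 + 10 × (δ/(1 − δ))² for a few values. The helper name ptas_exponent is illustrative only.

```python
from fractions import Fraction

def ptas_exponent(delta):
    """Exponent 3 + 10 * (delta / (1 - delta))**2 quoted in the text."""
    return 3 + 10 * (delta / (1 - delta)) ** 2

for delta in (Fraction(1, 2), Fraction(3, 4), Fraction(9, 10)):
    print(delta, ptas_exponent(delta))
# 1/2 13
# 3/4 93
# 9/10 813
```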

First-fit decreasing task assignment.

We have chosen to consider the First Fit Decreasing (FFD) bin-packing algorithm for assigning tasks onto processors. The FFD task assignment algorithm (Figure 1) considers tasks in non-increasing order of their utilizations, and processors in non-increasing order of their computing capacities. When a task is considered, it is assigned to the first processor considered upon which it will fit. Let gap(j) denote the amount of remaining capacity available on processor sj. Therefore, each task Ti is assigned to processor sj, where j is the smallest-indexed processor with gap(j) ≥ ei/pi. If no processor has a large enough gap to fit Ti, then τ is said to be FFD-infeasible on π. Otherwise, the FFD partitioning algorithm can assign each task of τ to some processor of π without overloading any processors and τ is said to be FFD-feasible on π.

FFD task assignment (τ, π)
Let τ = {T1, T2, ..., Tn} denote the tasks, with e_i/p_i ≥ e_{i+1}/p_{i+1} for all i
Let π = {s1, s2, ..., sm} denote the processors, with s_j ≥ s_{j+1} for all j
for j ← 1 to m do gap(j) := sj
for i ← 1 to n do
    Let jo denote the smallest index such that gap(jo) ≥ ei/pi
    If no such jo exists declare τ FFD-infeasible on π; return
    Assign Ti to processor jo
    gap(jo) := gap(jo) − ei/pi
end do

Figure 1: The FFD task-assignment algorithm.

The run-time computational complexity of FFD task assignment is O(n log n) (for sorting the tasks

in non-increasing order of utilizations) + O(m log m) (for sorting the processors in non-increasing

order of capacities) + O(n × m) (for doing the actual assignment of tasks to processors), for an

overall computational complexity of O(n · (log n + m)) for most reasonable combinations of values

of n and m.
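The FFD task-assignment procedure translates almost line for line into executable form. The sketch below is one possible Python rendering, not the authors' implementation: the function name ffd_assign, the dictionary-based return value, and the example task set are assumptions. Tasks are given by their utilizations ei/pi, and exact fractions are used so that the gap comparisons are not affected by rounding.

```python
from fractions import Fraction

def ffd_assign(utilizations, speeds):
    """First-fit-decreasing assignment of tasks to uniform processors.

    Returns a dict {task index: processor index}, or None if the task
    system is FFD-infeasible on the platform.
    """
    # Tasks in non-increasing order of utilization (the sort is stable).
    tasks = sorted(range(len(utilizations)),
                   key=lambda i: utilizations[i], reverse=True)
    # Processors in non-increasing order of speed.
    procs = sorted(range(len(speeds)), key=lambda j: speeds[j], reverse=True)
    gap = {j: speeds[j] for j in procs}      # remaining capacity per processor
    assignment = {}
    for i in tasks:
        for j in procs:                      # first processor on which Ti fits
            if gap[j] >= utilizations[i]:
                assignment[i] = j
                gap[j] -= utilizations[i]
                break
        else:
            return None                      # Ti fits nowhere: FFD-infeasible
    return assignment

speeds = [Fraction(5, 2), Fraction(2), Fraction(3, 2), Fraction(1)]
utils = [Fraction(1), Fraction(1), Fraction(1),
         Fraction(3, 4), Fraction(3, 4), Fraction(1, 2)]
print(ffd_assign(utils, speeds))             # {0: 0, 1: 0, 2: 1, 3: 1, 4: 2, 5: 0}
print(ffd_assign([Fraction(2)] * 4, speeds)) # None
```

The nested loop corresponds to the O(n × m) term in the complexity expression above, and the two sorts to the O(n log n) and O(m log m) terms.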

Our use of FFD for task partitioning upon uniform multiprocessor platforms represents a

scheduling application of the FFD bin packing algorithm. While the FFD algorithm has been

studied in detail for identical bins, less attention has been paid to this algorithm for the variable

bin packing problem. The FFD algorithm is known to be a superior bin-packing algorithm for

identical bins (e.g., it has the best known competitive ratio when identical bin sizes are considered [6]); therefore, we opted to explore partition scheduling on uniform multiprocessors using FFD

to determine the partitioning scheme.

6 The utilization bound function

The utilization bound function of a multiprocessor platform generalizes the utilization bound concept as defined for uniprocessors. The utilization bound of a scheduling algorithm is defined to be

the largest utilization such that all periodic (sporadic) task systems with cumulative utilization no

larger than this bound are guaranteed schedulable by that algorithm, and there are task systems

with cumulative utilization larger than this bound by an arbitrarily small amount that the algorithm fails to schedule. For example, it is known [7] that any periodic task system τ is schedulable upon a single preemptive processor of unit computing capacity using the rate monotonic scheduling algorithm provided Usum(τ) is at most ln 2 ≈ 0.69, and by EDF provided Usum(τ) is at most one; it is also known that there are task systems with utilization (ln 2 + ε) that the rate-monotonic algorithm fails to schedule, and task systems with utilization (1 + ε) that EDF fails to schedule, for arbitrarily small positive ε. Therefore, the utilization bound of rate-monotonic scheduling upon a preemptive uniprocessor is ln 2, and the utilization bound of EDF upon a preemptive uniprocessor is 1.

Upon multiprocessor platforms, it has been shown that tighter utilization bounds can be obtained if the largest possible utilization of any individual task is known a priori. For instance, it can be shown that the utilization bound of any partition-based algorithm cannot exceed ((m + 1)/2) upon m unit-capacity processors; however, if it is known that no individual task’s utilization exceeds U, then a somewhat better bound of

\[ \frac{1 + m \cdot \lfloor 1/U \rfloor}{1 + \lfloor 1/U \rfloor} \]

was proven by Lopez et al. [8]. In the case of global EDF scheduling upon m unit-capacity multiprocessors, the corresponding bound, proven in [9], is

\[ m - (m - 1) \cdot U ; \]

in both cases, the utilization bound increases with decreasing U, and asymptotically approaches m (i.e., the platform capacity) as U → 0. We refer to these bounds as utilization bound functions.
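The behavior of these two bound functions as U decreases can be tabulated with a few lines of Python. The function names partitioned_bound and global_edf_bound are labels chosen here for the two expressions above; m = 4 is an arbitrary example.

```python
from fractions import Fraction
from math import floor

def partitioned_bound(m, u):
    """(1 + m * floor(1/u)) / (1 + floor(1/u)), the bound attributed to [8]."""
    beta = floor(1 / u)
    return Fraction(1 + m * beta, 1 + beta)

def global_edf_bound(m, u):
    """m - (m - 1) * u, the global EDF bound attributed to [9]."""
    return m - (m - 1) * u

m = 4
for u in (Fraction(1), Fraction(1, 2), Fraction(1, 10)):
    print(u, partitioned_bound(m, u), global_edf_bound(m, u))
# 1 5/2 1
# 1/2 3 5/2
# 1/10 41/11 37/10
```

With U = 1 the first expression reduces to (m + 1)/2, and both values move toward m = 4 as U shrinks.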

Definition 1 (Utilization bound function Uπ(a)) With respect to any scheduling algorithm A, the utilization bound function Uπ for uniform multiprocessor platform π is a function from the real numbers to the real numbers [0, S(π)] with the interpretation that for all real numbers a,

• any periodic/sporadic task system τ satisfying
  Umax(τ) ≤ a and Usum(τ) ≤ Uπ(a)
  is successfully scheduled upon π by algorithm A, and

• there is some task system τ′ with
  Umax(τ′) ≤ a and Usum(τ′) = (Uπ(a) + ε)
  that is not successfully scheduled upon π by algorithm A, where ε is an arbitrarily small positive number.
□

(That is, a sufficient condition for a periodic/sporadic task system τ to be successfully

scheduled upon π by algorithm A is that Usum (τ ) ≤ Uπ (Umax (τ )) ; furthermore, for all a there is

some task system with largest utilization equal to a and cumulative utilization larger than Uπ (a)

by an arbitrarily small amount, which is not successfully scheduled by algorithm A on platform π.)

If for a particular scheduling algorithm A the associated utilization bound function Uπ (U ) is

efficiently determined for any π and any U , then we can easily obtain an efficient utilization-based

schedulability test for scheduling algorithm A: to determine whether task system τ is successfully

scheduled upon a given platform π by that particular algorithm, simply compute Usum (τ ) and

Umax (τ ) — this can be done in time linear in the number of tasks in τ — and ensure that

Usum (τ ) ≤ Uπ (Umax (τ )) .

If Uπ is difficult to compute, an alternative would be to compute a lower bound function for Uπ, that is, a function f such that f(a) ≤ Uπ(a) for all a ∈ R+, since
Usum(τ) ≤ f(Umax(τ))
would then imply Usum(τ) ≤ Uπ(Umax(τ)), and hence also be a sufficient test for determining whether τ is successfully scheduled upon π

by algorithm A. (Of course, the “closer” function f is to function Uπ , the better this sufficient


schedulability test is, in the sense that it is less likely to reject as unschedulable task systems that

are in fact schedulable.)
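A utilization-based schedulability test of this kind needs only Usum(τ), Umax(τ), and a bound function for which the test is known to be sufficient. The sketch below shows the shape of such a test; passes_utilization_test is a hypothetical name, and the bound function used in the example is the global EDF expression m − (m − 1)·U for m = 4 unit-capacity processors quoted in this section, chosen only because it is available in closed form.

```python
from fractions import Fraction

def passes_utilization_test(utilizations, bound_fn):
    """Sufficient test: Usum(tau) <= bound_fn(Umax(tau)).

    Both Usum and Umax are computed in time linear in the number of tasks.
    """
    return sum(utilizations) <= bound_fn(max(utilizations))

def example_bound(a):
    """Example bound function: m - (m - 1) * a with m = 4 (an assumption)."""
    return 4 - 3 * a

print(passes_utilization_test([Fraction(1, 2)] * 4, example_bound))   # True
print(passes_utilization_test([Fraction(9, 10)] * 2, example_bound))  # False
```

A False result means only that the test is inconclusive for that task system, since the condition is sufficient rather than exact.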

7 The utilization bound function of FFD-EDF

With respect to FFD-EDF upon uniform multiprocessors, we do not yet have an efficient algorithm

for computing the function Uπ from the specifications of uniform multiprocessor platform π. Rather,

in the remainder of this paper we describe an algorithm that, for any given π, precomputes an upper

bound on Uπ (a) for all a in the range (0, S(π)]. While this precomputation is not particularly

efficient — our algorithm takes time exponential in the number of processors in π — notice that

the precomputation algorithm needs to be run only once, when the uniform multiprocessor platform

is being synthesized. Our vision is that such an upper bound on the utilization bound function will

be precomputed and made available with any uniform multiprocessor platform that may be used

for partitioned scheduling, in much the same manner that other information about the platform

– the individual processor speeds, interprocessor migration costs, voltage information, etc., — is

made available. If this is done, then performing a sufficient schedulability analysis test for task

system τ reduces to the linear-time computation of Usum (τ ) and Umax (τ ), followed by table lookup to determine whether the condition Usum (τ ) ≤ f (Umax (τ )) is satisfied. Figure 4 illustrates

a utilization bound for the uniform multiprocessor π = (2.5, 2, 1.5, 1). Given any task system

τ, if (Umax(τ), Usum(τ)) is below the line shown in the graph (after a minor adjustment), then τ is

FFD-EDF-feasible on π. This example is discussed in more detail in Section 8.

Our approach in the remainder of this section is as follows. We begin by exploring properties

of task systems that are successfully scheduled by the FFD-EDF scheduling algorithm. Based on

insights gained from this exploration, we modify the problem slightly so as to greatly reduce the

size of the search space. Finally, we present an approximation algorithm for the utilization bound

of the modified problem.

Consider the FFD task-assignment of any task system τ that is FFD-EDF-infeasible on π.

Since we are trying to determine a lower bound on the utilization of infeasible tasks, we are most

concerned with the first task that cannot be scheduled. We observe the following about the FFD


task-assignment of τ prior to attempting to assign the first unschedulable task to a processor.

Observation 1 Let τ = {T1, T2, ..., Tn} be any task system that is FFD-EDF-infeasible on π. Assume the tasks of τ are indexed so that u_i ≥ u_{i+1} for 1 ≤ i < n, where u_i = ei/pi denotes the utilization of Ti, and let Tk be the first task of τ that cannot fit on any processor. Thus, τ′ = {T1, T2, ..., T_{k−1}} is FFD-EDF-feasible on π. Let gap(j) denote the gap on the j’th processor after the FFD task-assignment of τ′. Then

\[ \text{for all } i,\ 1 \le i \le k: \quad u_i > \max\{ \mathrm{gap}(j) \mid 1 \le j \le m \} . \qquad (3) \]
□

Proof Sketch: Since Tk cannot fit on any of the processors, uk must be strictly greater than the largest gap, max{gap(j) | 1 ≤ j ≤ m}. The result follows since FFD sorts tasks according to utilization.

This important property is the basis of our approximation algorithm. Based on this observation,

we convert any FFD-EDF-infeasible task system τ to another task set τ′ with a very specific structure

— this structure can be exploited to find a bound for Uπ . We call such a task system modular on

π.

Definition 2 (modular on π) Let π = (s1, ..., sm) denote an m-processor uniform multiprocessor and let τ = {T1, T2, ..., Tn} denote a task system. Then τ is said to be modular on π if the tasks of τ have at most m + 1 distinct utilizations u′_1, u′_2, ..., u′_{m+1} and there exists a partitioning of the tasks in τ among the m processors of π that satisfies the following properties.

1. For all Ti, Tj ∈ τ \ {Tn}, if Ti and Tj are both partitioned onto the k’th processor then ui = uj and we denote this utilization u′_k,

2. for all k ≤ m, there are ⌊s_k/u′_k⌋ tasks of utilization u′_k partitioned onto the k’th processor, and

3. Tn fits on the processor with the largest gap. (I.e., un = u′_{m+1} = max{s_j mod u′_j | 1 ≤ j ≤ m}. This implies that for all j ≤ m, u′_j > u′_{m+1}.)

Task systems that are modular on π can be represented by an m-tuple; the m-tuple (u′_1, u′_2, ..., u′_m) is a valid representation of a modular task system on π if u′_i > max{s_j mod u′_j | 1 ≤ j ≤ m} for all i ≤ m.
□

By the 3rd property in Definition 2 above, the utilization of $T_n$ (the task with the smallest utilization) is $\max\{s_i \bmod u_i \mid 1 \le i \le m\}$. Therefore, ModUtil$(u_1, u_2, \ldots, u_m)$, the utilization of the modular task system $(u_1, u_2, \ldots, u_m)$, is given by the following equation:

$$\mathrm{ModUtil}(u_1, u_2, \ldots, u_m) = S(\pi) - \sum_{i=1}^{m} (s_i \bmod u_i) + \max_{1 \le i \le m} \{s_i \bmod u_i\}. \tag{4}$$
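Equation 4 and the validity condition of Definition 2 can be checked numerically. The following is a minimal sketch, not taken from the paper: the function names `gaps`, `is_valid_tuple` and `mod_util` are hypothetical, and exact rationals are assumed so that the real-valued mod is computed without rounding error.

```python
from fractions import Fraction

def gaps(speeds, utils):
    """Gap left on each processor: s_i mod u_i, computed exactly."""
    return [s % u for s, u in zip(speeds, utils)]

def is_valid_tuple(speeds, utils):
    """Validity condition: every u_i strictly exceeds the largest gap."""
    return min(utils) > max(gaps(speeds, utils))

def mod_util(speeds, utils):
    """Equation 4: S(pi) minus the sum of the gaps plus the largest gap."""
    g = gaps(speeds, utils)
    return sum(speeds) - sum(g) + max(g)

# Illustrative platform (the one used in the Example section) and a tuple of all ones.
speeds = [Fraction(5, 2), Fraction(2), Fraction(3, 2), Fraction(1)]
ones = [Fraction(1)] * 4
print(is_valid_tuple(speeds, ones), mod_util(speeds, ones))  # True 13/2
```

Here the gaps are 1/2, 0, 1/2 and 0, so the modular system has total utilization 7 - 1 + 1/2 = 13/2.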

The partition described in the definition of modular task systems (Definition 2 above) might not be produced by the FFD task-partitioning algorithm, since it is not required that $u_j > u_{j+1}$ for all $j$. Nevertheless, modular task systems are important to us because they are so nicely structured. For small $m$, the modular task systems can be exhaustively searched to approximate a utilization bound. Below we show that we can use modular task systems to find a bound for $U_\pi(a)$.

Notice that Property 3 of the modular task system definition bears a close resemblance to Equation 3 (our observation about infeasible task systems). Taking note of this similarity, we can find a modular task system $\tau'$ that corresponds to any FFD-EDF-infeasible task system $\tau$ by first reducing the utilization of $\tau$ appropriately until the task system is "just about feasible", and then "averaging" the feasible task system to make it modular on $\pi$. Both these steps are described in more detail below.

Definition 3 (feasible reduction) Let $\tau = \{T_1, T_2, \ldots, T_n\}$ denote a task system that is FFD-EDF-infeasible on $\pi$. Assume the tasks of $\tau$ are indexed so that $u_i \ge u_{i+1}$ for all $1 \le i < n$ and let $T_k$ be the task of $\tau$ upon which the FFD task-assignment algorithm reports failure. Let gap($j$) denote the gap on the $j$'th processor after performing the FFD task-partitioning algorithm on the first $(k - 1)$ tasks of $\tau$ and let $g \stackrel{\text{def}}{=} \max\{\mathrm{gap}(j) \mid 1 \le j \le m\}$. Then $\tau'$ is an FFD-EDF-feasible reduction of $\tau$ if the following holds.

• If $g = 0$, $\tau' = \{T_1, T_2, \ldots, T_{k-1}\}$,

• otherwise $\tau' = \{T_1, T_2, \ldots, T_{k-1}, T_g\}$, where $T_g$ is any task with utilization equal to $g$.

□
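The feasible reduction of Definition 3 can be sketched in a few lines. This is an illustrative sketch under stated assumptions rather than the authors' implementation: the names `ffd_assign` and `feasible_reduction` are hypothetical, processors are tried in the order in which they are listed, and a task is taken to fit on a processor when the total utilization assigned to it does not exceed its speed.

```python
from fractions import Fraction

def ffd_assign(speeds, utils):
    """First-fit assignment of tasks taken in non-increasing utilization order.

    Returns (ordered utilizations, remaining gap per processor, index of the
    first task that fits nowhere, or None if every task was placed).
    """
    ordered = sorted(utils, reverse=True)
    gaps = list(speeds)
    for k, u in enumerate(ordered):
        for j, gap in enumerate(gaps):
            if u <= gap:          # assumed fit test: utilization within remaining capacity
                gaps[j] = gap - u
                break
        else:                     # no processor had room: FFD reports failure on this task
            return ordered, gaps, k
    return ordered, gaps, None

def feasible_reduction(speeds, utils):
    """Keep the tasks placed before the failure and add a task that fills the largest gap."""
    ordered, gaps, k = ffd_assign(speeds, utils)
    if k is None:
        raise ValueError("task system is FFD-feasible; no reduction is defined")
    g = max(gaps)
    reduced = ordered[:k]
    if g > 0:
        reduced.append(g)         # T_g, any task with utilization equal to g
    return reduced

speeds = [Fraction(2), Fraction(3, 2)]
tasks = [Fraction(4, 5)] * 5
print(feasible_reduction(speeds, tasks))
```

With five tasks of utilization 4/5 on speeds (2, 3/2), the fourth task fails, the gaps are 2/5 and 7/10, and the reduction keeps three tasks of utilization 4/5 plus one of utilization 7/10.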

Thus, the FFD-EDF-feasible reduction of τ relates Equation 3, our observation about infeasible

task systems, to Property 3 of modular task systems. However, the FFD-EDF-feasible reduction of

τ may not be a modular task system since tasks on the same processor do not necessarily have the

same utilization. The following lemma demonstrates how any FFD-EDF-feasible reduction τ can

be “averaged” into a modular task system on π. We restrict our attention to those task systems

whose total utilization is strictly less than S(π) because we are exploring these systems to find Uπ .

Since we know that no partitioning algorithm is optimal on multiprocessors, it must be the case

that Uπ < S(π). Therefore, we can ignore any FFD-EDF-feasible reduction whose utilization equals

S(π) without reducing the accuracy of our analysis.

Lemma 3 Let $\pi = (s_1, s_2, \ldots, s_m)$ denote a uniform multiprocessor and let $\tau = \{T_1, T_2, \ldots, T_n\}$ be a feasible reduction of some FFD-EDF-infeasible task system on $\pi$ with $U(\tau) < S(\pi)$. Assume that $T_n$ has the minimum utilization of the tasks in $\tau$. Consider the FFD task-assignment of $\tau \setminus \{T_n\}$. Let $U_i$ denote the total utilization of the tasks assigned to the $i$'th processor and let $n_i$ denote the number of these tasks. Thus, $u'_i \stackrel{\text{def}}{=} U_i / n_i$ is the average utilization of the tasks of $\tau \setminus \{T_n\}$ assigned to the $i$'th processor of $\pi$. Then the $m$-tuple $(u'_1, u'_2, \ldots, u'_m)$ is a valid representation of a modular task system. Moreover, any modular task system $\tau'$ represented by $(u'_1, u'_2, \ldots, u'_m)$ satisfies the following properties.

1. $U(\tau) = U(\tau')$, and

2. $U_{\max}(\tau) \ge \max_{1 \le i \le m} u'_i$.

□

Proof: We first show that $(u'_1, u'_2, \ldots, u'_m)$ is a valid representation of a modular task system on $\pi$, i.e., $u'_i > \max\{s_j \bmod u'_j \mid 1 \le j \le m\}$ for all $1 \le i \le m$. Since $U(\tau) < S(\pi)$ and $\tau$ is an FFD-EDF-feasible reduction on $\pi$, the utilization of $T_n$ must be strictly less than the utilization of any other task in $\tau$. Therefore, for any $i$, $u_n$ must be smaller than $u'_i = U_i / n_i$ (since the mean of a set is at least as large as the smallest value in the set). Furthermore, $T_n$ fits exactly into the largest gap after performing the FFD task-partitioning algorithm on $\{T_1, T_2, \ldots, T_{n-1}\}$. Therefore, $u_n = \max\{s_i - U_i \mid 1 \le i \le m\}$. This shows that for any $u'_i$,

$$u'_i > s_i - U_i = s_i - n_i \cdot u'_i \ge 0, \tag{5}$$

i.e., the gap on the $i$'th processor is $(s_i \bmod u'_i)$. Thus, $u'_i > u_n = \max\{s_j \bmod u'_j \mid 1 \le j \le m\}$ for all $1 \le i \le m$, so $(u'_1, u'_2, \ldots, u'_m)$ is a valid representation of a modular task system on $\pi$.

We now show the two conditions hold. Consider any $i$, $1 \le i \le m$. The modular partitioning of $\tau'$ assigns $\lfloor s_i / u'_i \rfloor$ tasks to the $i$'th processor. By Equation 5, $\lfloor s_i / u'_i \rfloor = n_i$. Therefore, the total utilization of these tasks on the $i$'th processor is $n_i \cdot u'_i = U_i$. Thus,

$$U(\tau') = \mathrm{ModUtil}(u'_1, u'_2, \ldots, u'_m) = \sum_{i=1}^{m} (n_i \cdot u'_i) + \max_{1 \le i \le m} \{s_i \bmod u'_i\} = \sum_{i=1}^{m} U_i + u_n = U(\tau).$$

Thus, Condition 1 holds. Also, each $u'_i$ must be between $u_1$ and $u_n$ (the maximum and minimum utilizations of tasks in $\tau$) because it is the mean of values in the range $[u_n, u_1]$. Therefore, Condition 2 must hold.
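A small numeric instance may make the averaging step of Lemma 3 concrete. The sketch below is illustrative only: the platform, the assignment and the helper name `modularize` are assumptions chosen for the example, and every processor is assumed to hold at least one task so that the average is defined.

```python
from fractions import Fraction as F

def modularize(assignment):
    """Average utilization per processor: u'_i = U_i / n_i."""
    return [sum(tasks) / len(tasks) for tasks in assignment]

speeds = [F(2), F(3, 2)]
# Assumed FFD placement of tau \ {T_n}: two tasks on processor 1, one on processor 2.
assignment = [[F(9, 10), F(4, 5)], [F(4, 5)]]
# T_n fills the largest gap left by that placement.
u_n = max(s - sum(tasks) for s, tasks in zip(speeds, assignment))

avg = modularize(assignment)                      # [17/20, 4/5]
gaps = [s % u for s, u in zip(speeds, avg)]       # [3/10, 7/10]
mod_util = sum(speeds) - sum(gaps) + max(gaps)    # Equation 4
total = sum(sum(tasks) for tasks in assignment) + u_n

print(avg, gaps, mod_util, total)
```

In this instance the averaged tuple is valid (4/5 exceeds the largest gap 7/10), the total utilization is unchanged at 16/5, and the largest average (17/20) does not exceed the largest original utilization (9/10), matching the two properties of the lemma.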

Lemma 3 demonstrates that any FFD-EDF-feasible reduction can be “modularized” without

changing the total utilization or increasing the maximum utilization. As Theorem 2 states, these

modular task systems can be used to find a bound for Uπ (a).

Theorem 2 Let $\pi = (s_1, s_2, \ldots, s_m)$ be any uniform multiprocessor and let

$$M_\pi(a) \stackrel{\text{def}}{=} \min\{U(\tau) \mid \tau \text{ is modular on } \pi \text{ and } U_{\max}(\tau) \le a\}. \tag{6}$$

Then $U_\pi(a) \ge M_\pi(a)$ for all $a \in (0, s_1]$.

□

Proof: By Lemma 3,

$$M_\pi(a) \le \min\{U(\tau) \mid \tau \text{ is an FFD-EDF-feasible reduction on } \pi \text{ and } U_{\max}(\tau) \le a\}.$$

Since every task system $\tau$ that is FFD-EDF-infeasible on $\pi$ has an FFD-EDF-feasible reduction $\tau'$ with $U(\tau') < U(\tau)$ and $U_{\max}(\tau') = U_{\max}(\tau)$,

$$M_\pi(a) \le \min\{U(\tau) \mid \tau \text{ is FFD-EDF-infeasible on } \pi \text{ and } U_{\max}(\tau) \le a\} = U_\pi(a).$$

Therefore, any task system $\tau$ with $U(\tau) \le M_\pi(a)$ and $U_{\max}(\tau) \le a$ is FFD-EDF-feasible on $\pi$.

Because modular task systems have a limited number of distinct utilizations and such a rigid structure, for small values of $m$ we can approximate $M_\pi(a)$ by doing a thorough search of valid $m$-tuples $(u_1, u_2, \ldots, u_m)$ with $u_i \in (0, s_i]$. Since this is a continuous region, we cannot search every possibility within this range. Instead, we allow the utilizations to be chosen from a finite set $V$. Figure 2 illustrates the approximation algorithm, which considers all the valid utilization combinations in the set $V^m$ (the set of $m$-tuples of elements in $V$). The function MinUtilGraph($\pi$, $V$) finds the graph of $M_\pi(a)$ for $a \in (0, s_1]$. It calls the function MinUtilLeqA($\pi$, $V$, $a$), which returns the approximate value of $M_\pi(a)$ for a specific value of $a$. Of course, the values in $V$ must be chosen carefully to ensure the approximation is within a reasonable margin of the actual bound. In particular, given any $\epsilon > 0$ we want to find $V$ so that for all $a \in (0, s_1]$, $|\mathrm{MinUtilLeqA}(\pi, V, a) - M_\pi(a)| \le \epsilon$.

Assume that opt $= (u_1, u_2, \ldots, u_m)$ is a modular system with maximum utilization $a$ and $U(\text{opt}) = M_\pi(a)$. We wish to choose $V$ so as to ensure MinUtilLeqA($\pi$, $V$, $a$) will consider some $m$-tuple $(u'_1, u'_2, \ldots, u'_m)$ with maximum utilization no more than $a$ and $\mathrm{ModUtil}(u'_1, u'_2, \ldots, u'_m) - \mathrm{ModUtil}(\text{opt}) \le \epsilon$. To do this analysis, we need to consider the function $(s_i \bmod x)$ more carefully. Figure 3 illustrates the graph of $y = (6 \bmod x)$. Notice that $y$ decreases as $x$ increases until $x$ divides 6, at which point $(6 \bmod x) = 0$. Once $x$ increases beyond this point, $y$ jumps up to the line $y = x$ and the process repeats. Thus, this graph is a series of straight lines with slope $(-n)$ between the points $(6/(n + 1), 6/(n + 1))$ and $(6/n, 0)$. The graph of $(s_i \bmod x)$ follows this general pattern for every value $s_i$.
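The sawtooth shape described above can be written out explicitly. The following worked equation is a sketch that follows directly from the definition of the real-valued mod, $s \bmod x = s - \lfloor s/x \rfloor \cdot x$; the sample points are chosen only for illustration.

```latex
% For an integer n >= 1 and 6/(n+1) < x <= 6/n we have floor(6/x) = n, hence
\[
  6 \bmod x \;=\; 6 - n\,x ,
  \qquad \frac{6}{n+1} < x \le \frac{6}{n},
\]
% a straight line of slope -n that reaches 0 at x = 6/n.
% Sample values:
\[
  6 \bmod 4 = 6 - 1\cdot 4 = 2, \qquad
  6 \bmod 2.5 = 6 - 2\cdot 2.5 = 1, \qquad
  6 \bmod 3 = 6 - 2\cdot 3 = 0 .
\]
% Just to the right of x = 3 the segment with n = 1 begins, where 6 - x is close to 3 = x,
% which is the jump back up to the line y = x.
```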

We wish to choose $V$ to ensure that for any two consecutive points $x_1$ and $x_2$ and for any $x$ between these two points,

$$|(s_i \bmod x) - (s_i \bmod x_2)| < \epsilon/(m + 1). \tag{7}$$

Clearly, $V$ must contain the following set of "jump" points

$$J_\pi(\epsilon) \stackrel{\text{def}}{=} \bigcup_{1 \le i \le m} \left\{ \frac{s_i}{n} \;\middle|\; 1 \le n \le \frac{s_i (m + 1)}{\epsilon},\ n \in \mathbb{N} \right\}. \tag{8}$$

These are all the points where, for some $i \le m$, the graph of $(s_i \bmod x)$ suffers a discontinuity.

function MinUtilGraph(π, V)
    % π = (s1, s2, ..., sm) is the uniform multiprocessor
    % V = {v1, v2, ..., vk} is the set of allowable utilizations in decreasing order
    Umax = s1; U = S(π)    % the initial value of Umax is not shown in the source; s1 is assumed
    while Umax > vk
        % min util is the minimum total utilization
        % min a is the minimum of the set {Umax(τ) | U(τ) = U and Umax(τ) ≤ Umax}
        (min util, min a) = MinUtilLeqA(π, V, Umax)
        if min util > U
            draw a line from (Umax, U) to (min a, U)
        Umax = min a; U = min util

function MinUtilLeqA(π, V, Umax)
    % uses a recursive function to find the minimum feasible utilization
    min util = S(π); min a = s1    % global variables for the recursion
    for (u1 in V) and (u1 ≤ Umax)
        % call ConsiderNextProc recursively
        ConsiderNextProc(2, (s1 mod u1), (s1 mod u1), u1)
    return (min util, min a)

function ConsiderNextProc(proc, max gap, gap sum, min u)
    % min u is the smallest task utilization chosen so far
    % (the source also calls this parameter "min util"; it is renamed here to avoid
    % a clash with the global min util)
    % there are two exit conditions: proc = m + 1 or none of the current tasks fit on proc
    if ((proc = m + 1) or ((s_proc < min u) and ((s_proc < max gap) or (max gap = 0))))
        util = (s_1 + s_2 + ... + s_{proc−1}) − gap sum + max gap
        a = max{u_i | 1 ≤ i < proc}
        if ((util < min util) or ((util = min util) and (a < min a)))
            min util = util; min a = a
        return
    % the exit conditions are not yet met, consider next processor (proc + 1)
    for (u_proc in V) and (u_proc ≤ min{Umax, s_proc})
        ConsiderNextProc(proc + 1, max{max gap, (s_proc mod u_proc)},
                         (gap sum + (s_proc mod u_proc)), min{min u, u_proc})

Figure 2: Approximating the minimum utilization bound of modular task systems.
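The search of Figure 2 can also be sketched as a plain exhaustive enumeration. The sketch below is a simplification and not the recursive procedure of the figure: it assumes every processor receives tasks, it omits the early-exit case for processors too slow to hold any task, and the name `min_util_leq_a` and the small set `V` are hypothetical. Its cost is proportional to $|V|^m$.

```python
from fractions import Fraction
from itertools import product

def min_util_leq_a(speeds, V, a):
    """Smallest ModUtil over valid tuples in V^m whose utilizations are at most a."""
    best = sum(speeds)  # S(pi): returned when no valid tuple improves on it
    # On processor i a utilization must fit (u <= s_i) and respect the cap (u <= a).
    choices = [[v for v in V if v <= min(a, s)] for s in speeds]
    for utils in product(*choices):
        gaps = [s % u for s, u in zip(speeds, utils)]
        if min(utils) > max(gaps):              # valid representation (Definition 2)
            best = min(best, sum(speeds) - sum(gaps) + max(gaps))  # Equation 4
    return best

speeds = [Fraction(2), Fraction(1)]
V = [Fraction(1, 2), Fraction(3, 4), Fraction(1)]
print(min_util_leq_a(speeds, V, Fraction(1)))     # 11/4
print(min_util_leq_a(speeds, V, Fraction(1, 2)))  # 3
```

For this toy input the minimum with $a = 1$ is attained by the tuple (3/4, 3/4), whose gaps are 1/2 and 1/4; with $a = 1/2$ only the tuple (1/2, 1/2) is available and it leaves no gaps.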

[Plot omitted: sawtooth graph with x axis ticks 1 to 6 and y axis ticks 1 to 3.]

Figure 3: The graph of y = 6 mod x

In addition, $V$ must contain points that are close enough to one another to ensure Inequality 7 is satisfied. Recall that the slope of $(s_i \bmod x)$ between $(s_i/(n + 1), s_i/(n + 1))$ and $(s_i/n, 0)$ is $(-n) = -\lceil s_i/x \rceil$. Clearly, this slope is steepest when $s_i$ is maximized and $x$ is minimized, i.e., the steepest slope is $(-\lceil s_1/(\epsilon/(m + 1)) \rceil)$. Thus, the distance between consecutive points of $V$ should be no greater than $((\epsilon/(m + 1))/\lceil s_1 (m + 1)/\epsilon \rceil) \le \epsilon^2/(s_1 (m + 1)^2)$, so $V$ should also contain the following points

$$K_\pi(\epsilon) \stackrel{\text{def}}{=} \left\{ \frac{\epsilon}{m + 1} + n \cdot \frac{\epsilon^2}{s_1 (m + 1)^2} \;\middle|\; 0 \le n \le \left( \frac{s_1 (m + 1)}{\epsilon} \right)^2,\ n \in \mathbb{N} \right\} \tag{9}$$

With these points included in V , the following theorem holds.

Theorem 3 Let $\pi = (s_1, s_2, \ldots, s_m)$ be any uniform multiprocessor. Then for any $\epsilon > 0$, there exists a set $V$ such that $\mathrm{MinUtilLeqA}(\pi, V, a) \le M_\pi(a) + \epsilon$ for all $a > 0$. Moreover, $|V| = O((m \cdot s_1/\epsilon)^2)$.

□

Proof: Let $V = J_\pi(\epsilon) \cup K_\pi(\epsilon)$. The first set in $V$ contains points of the form $s_i/n$ in between $\epsilon/(m + 1)$ and $s_1$. The second set is all points that are $\epsilon^2/(s_1 (m + 1)^2)$ distance apart in the same range. Notice that $|V| \le S(\pi) \cdot (m + 1)/\epsilon + ((m + 1) \cdot s_1/\epsilon)^2 = O((m \cdot s_1/\epsilon)^2)$.
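The construction of $V$ from the jump points of Equation 8 and the evenly spaced points of Equation 9 can be sketched as follows. This is a literal reading of the two equations under the assumption of exact rational arithmetic; the name `build_v` is hypothetical, and the resulting set need not have the size reported in the Example section, which refers to [1] for the exact formulation used there.

```python
from fractions import Fraction
from math import floor

def build_v(speeds, eps):
    """Return the sorted union of the jump points (Eq. 8) and the grid points (Eq. 9)."""
    m = len(speeds)
    s1 = max(speeds)
    lo = eps / (m + 1)                      # smallest utilization considered
    step = eps ** 2 / (s1 * (m + 1) ** 2)   # spacing of the grid points
    jump = {s / n
            for s in speeds
            for n in range(1, floor(s * (m + 1) / eps) + 1)}
    grid = {lo + n * step
            for n in range(0, floor((s1 * (m + 1) / eps) ** 2) + 1)}
    return sorted(jump | grid)

speeds = [Fraction(2), Fraction(1)]
eps = Fraction(1, 2)
V = build_v(speeds, eps)
print(len(V), V[0], V[-1])
```

For speeds (2, 1) and $\epsilon = 1/2$ the spacing is 1/72 and the smallest point is 1/6; consecutive points of the union are never further apart than the grid spacing, since the jump points only subdivide the grid.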

Let $(u_1, u_2, \ldots, u_m)$ be a modular task system with $\mathrm{ModUtil}(u_1, u_2, \ldots, u_m) = \text{opt}$. For each $i$, $1 \le i \le m$, let $u'_i = \min\{v \in V \mid v \ge u_i\}$. We will prove the theorem by showing that $\mathrm{MinUtilLeqA}(\pi, V, a) \le \mathrm{ModUtil}(u'_1, u'_2, \ldots, u'_m) \le \text{opt} + \epsilon$. We consider two cases depending on the value of $u_{\min} \stackrel{\text{def}}{=} \min\{u_i \mid 1 \le i \le m\}$.

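Case 1: $u_{\min} < \epsilon/(m + 1)$.

Recall all processor gaps are smaller than $u_{\min}$. Therefore,

$$\mathrm{ModUtil}(u_1, u_2, \ldots, u_m) \ge S(\pi) - (m - 1) \cdot u_{\min} \ge S(\pi) - (m - 1) \cdot \epsilon/(m + 1) > S(\pi) - \epsilon.$$

Since the value returned by MinUtilLeqA never exceeds $S(\pi)$, $\mathrm{MinUtilLeqA}(\pi, V, a)$ cannot be greater than $M_\pi(a) + \epsilon$.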

Case 2: $u_{\min} \ge \epsilon/(m + 1)$.

Let $M = \max\{s_i \bmod u_i \mid 1 \le i \le m\}$ and let $M' = \max\{s_i \bmod u'_i \mid 1 \le i \le m\}$. We begin by showing that $(u'_1, u'_2, \ldots, u'_m)$ is a valid modular task system, i.e., that $u'_i > M'$ for all $i$. If this condition holds, then MinUtilLeqA evaluates the utilization of $(u'_1, u'_2, \ldots, u'_m)$. Hence, the function cannot return a value greater than $\mathrm{ModUtil}(u'_1, u'_2, \ldots, u'_m)$. Since $u'_j \ge u_j$ and $u_j > M$ for all $j \le m$, it suffices to show that $M \ge M'$. We do this by showing that $(s_i \bmod u_i) \ge (s_i \bmod u'_i)$ for all $i \le m$.

Consider any $i \le m$. If $u_i = s_i/n$ for some $n \in \mathbb{N}$ then $u_i \in V$ (because $u_i \ge \epsilon/(m + 1)$ gives $n \le s_i (m + 1)/\epsilon$). Therefore, $u'_i = u_i$ and $(s_i \bmod u'_i) = (s_i \bmod u_i)$. Now assume $s_i/(n + 1) < u_i < s_i/n$ for some $n \in \mathbb{N}$. Since $J_\pi(\epsilon) \subseteq V$, it must be the case that $s_i/(n + 1) < u_i \le u'_i \le s_i/n$. Therefore, $(s_i \bmod x)$ is decreasing between $u_i$ and $u'_i$, so $(s_i \bmod u_i) \ge (s_i \bmod u'_i)$. Since this holds for all $i \le m$, we have shown $M \ge M'$. Thus, $(u'_1, u'_2, \ldots, u'_m)$ is a valid modular task system so $\mathrm{MinUtilLeqA}(\pi, V, a) \le \mathrm{ModUtil}(u'_1, u'_2, \ldots, u'_m)$.

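It remains to show that $\mathrm{ModUtil}(u'_1, u'_2, \ldots, u'_m) \le \text{opt} + \epsilon$. By our choice of $V$, we know that $0 \le (s_i \bmod u_i) - (s_i \bmod u'_i) \le \epsilon/(m + 1)$ for all $i \le m$. We will now show that the maximum gaps of each system differ by no more than $\epsilon/(m + 1)$. Assume that $(s_\ell \bmod u'_\ell) = M'$. Then

$$\begin{aligned}
M' - M &= \max_{1 \le i \le m} \{s_i \bmod u'_i\} - \max_{1 \le i \le m} \{s_i \bmod u_i\} \\
&= (s_\ell \bmod u'_\ell) - \max_{1 \le i \le m} \{s_i \bmod u_i\} \\
&\le (s_\ell \bmod u'_\ell) - (s_\ell \bmod u_\ell) \\
&\le \epsilon/(m + 1).
\end{aligned}$$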

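Pulling this all together, we have

$$\begin{aligned}
\mathrm{MinUtilLeqA}(\pi, V, a) &\le \mathrm{ModUtil}(u'_1, u'_2, \ldots, u'_m) \\
&= S(\pi) - \sum_{i=1}^{m} (s_i \bmod u'_i) + M' \\
&\le S(\pi) - \sum_{i=1}^{m} \bigl((s_i \bmod u_i) - \epsilon/(m + 1)\bigr) + M' \\
&\le S(\pi) - \sum_{i=1}^{m} (s_i \bmod u_i) + (m \cdot \epsilon)/(m + 1) + \bigl(M + \epsilon/(m + 1)\bigr) \\
&= S(\pi) - \sum_{i=1}^{m} (s_i \bmod u_i) + M + \epsilon \\
&= \text{opt} + \epsilon.
\end{aligned}$$

Corollary 1 Let $\pi$ be any uniform multiprocessor. For any $\epsilon > 0$, let $V$ be the set specified in Theorem 3. Let $\tau$ be any task system with $U(\tau) \le \mathrm{MinUtilLeqA}(\pi, V, U_{\max}(\tau)) - \epsilon$. Then $\tau$ is FFD-EDF-schedulable on $\pi$.

□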

The subroutine MinUtilLeqA examines all valid modular task systems whose task utilizations are in $V$. Thus the complexity of this subroutine is $O(|V|^m)$. The algorithm MinUtilGraph calls MinUtilLeqA at most $|V|$ times. Therefore, the complexity of MinUtilGraph is $O(|V|^{m+1}) = O((m \cdot s_1/\epsilon)^{2(m+1)})$.

[Plot omitted: x axis a (ticks 1 to 3), y axis $M_\pi(a)$ (ticks 4 to 7).]

Figure 4: The graph of $M_\pi(a)$ for $\pi = (2.5, 2, 1.5, 1)$ with error bound $\epsilon = 0.1$.

8

Example

Figure 4 illustrates the graph that results from executing MinUtilGraph on the uniform multiprocessor $\pi = (2.5, 2, 1.5, 1)$ with a maximum error of $\epsilon = 0.1$. For $a \in (1, 2.5]$, MinUtilGraph($\pi$, $V$) returns the value 4.5. By Theorem 3, $4.4 \le M_\pi(a) \le 4.5$ for all $a \in (1, 2.5]$. Thus, if $\tau$ is a task system with $U_{\max}(\tau) \in (1, 2.5]$ and $U(\tau) \le 4.4$, $\tau$ is FFD-EDF-feasible on $\pi$. As $a$ approaches 0, the value returned by MinUtilGraph($\pi$, $V$) approaches $S(\pi)$ in a near-linear fashion. This is because when $a$ is very small, the gaps on each processor must also be very small (recall that the gaps are smaller than the task utilizations).

In this example $|V| = 611$. Notice this is much smaller than the estimate $(m \cdot s_1/\epsilon)^2 = 10{,}000$, which is a worst case estimate. The size of $V$ depends primarily on $m$, $s_1$ and $\epsilon$. The other processor speeds $s_i$, $i > 1$, also influence $|V|$ slightly. See [1] for an exact formulation of this set.
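Once the graph has been computed, the test of Corollary 1 reduces to a lookup. The sketch below is illustrative: the function name `sufficient_test` and the representation of the graph as a list of (lower, upper, value) segments are assumptions, and only the single segment stated in this example is used as data.

```python
from fractions import Fraction

def sufficient_test(utils, segments, eps):
    """Return True when Corollary 1 guarantees FFD-EDF schedulability.

    segments holds triples (lo, hi, value): the value computed for a in (lo, hi].
    False means only that this test gives no guarantee.
    """
    u_max, total = max(utils), sum(utils)
    for lo, hi, value in segments:
        if lo < u_max <= hi:
            return total <= value - eps
    return False  # u_max is outside the part of the graph that was supplied

# Single segment from the example: value 4.5 on (1, 2.5] with eps = 0.1.
segments = [(Fraction(1), Fraction(5, 2), Fraction(9, 2))]
eps = Fraction(1, 10)

print(sufficient_test([Fraction(2), Fraction(6, 5), Fraction(1)], segments, eps))  # True
print(sufficient_test([Fraction(2), Fraction(3, 2), Fraction(1)], segments, eps))  # False
```

The first task system has total utilization 4.2, which is at most 4.4, so it passes; the second has total utilization 4.5, so the test is inconclusive for it.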

9

Context and Conclusions

Because scheduling algorithms typically execute upon the same processor(s) as the real-time task

system being scheduled, it is important that the scheduling algorithms themselves not have prohibitively complex runtime behavior. The overhead of both computing the priorities of jobs and of

24

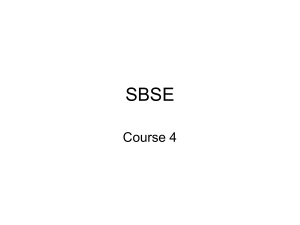

global scheduling

restricted migration

partitioned

this research

static

fixed

dynamic

Table 1: A classification of algorithms for scheduling periodic task systems upon identical multiprocessor

platforms. Priority-assignment constraints are on the x axis, and migration constraints are on the y axis. In

general, increasing distance from the origin may imply greater generality.

migrating jobs to different processors has a performance cost at run time. To alleviate this cost,

system designers often choose to place constraints upon the manner in which job priorities are

defined, and also on the amount of job migration that is permitted.

Under the assumption that job preemption is permitted (regardless of whether it incurs a cost

or not), we have chosen to distinguish among three different categories of uniform multiprocessor

scheduling algorithms based upon the freedom with which priorities may be assigned: static priority schemes, fixed priority schemes, and dynamic priority schemes. In static priority schemes,

all jobs of a given task have the same priority. In fixed priority schemes, different jobs of the same

task may have different priorities, but the priority of a job remains fixed. In dynamic priority

schemes, jobs may change priority over time. We also distinguish among three different categories of algorithms based on the amount of interprocessor migration permitted: partitioned scheduling algorithms, restricted migration algorithms, and global scheduling algorithms. These two axes

of classification are orthogonal to one another in the sense that restricting an algorithm along one

axis does not restrict freedom along the other. Thus, there are 3 × 3 = 9 different categories of

scheduling algorithms according to these classifications; these nine categories are shown in Table 1.

While the corners of Table 1 represent extremes (e.g., forbidding migrations entirely versus permitting arbitrary migrations, or fixing priorities of entire tasks all at once versus permitting arbitrary variation in each job's priority) the middle choices (i.e., fixed priority, and restricted migrations) represent reasonable compromises. The focus of this paper has therefore been the bottom cell of the central column of Table 1. For the class of partitioned, fixed-priority scheduling algorithms that populate this cell, we have proven that feasibility-analysis is intractable (NP-hard in the strong sense) in general, and have also shown that partitioned fixed-priority scheduling is incomparable with restricted migration fixed-priority scheduling. We have presented Algorithm FFD-EDF, a partitioned fixed-priority scheduling algorithm. We have developed an algorithm that maps any $a$ to its associated bound $M_\pi(a)$. Once this graph has been determined for a given uniform multiprocessor, a feasibility test can be performed in linear time by checking whether a task system $\tau$ satisfies $M_\pi(U_{\max}(\tau)) \ge U(\tau)$. In prior work [2], we found a similar feasibility test for the fixed-priority global scheduling algorithms. In the future, we plan to examine the third cell of the fixed-priority column: fixed priority, restricted migration algorithms.

References

[1] Baruah, S. K., and Funk, S. Task assignment in heterogenous multiprocessor platforms. Tech. rep.,

Department of Computer Science, University of North Carolina at Chapel Hill, 2003.

[2] Funk, S., and Baruah, S. Characteristics of EDF schedulability on uniform multiprocessors. In

Proceedings of the Euromicro Conference on Real-time Systems (Porto, Portugal, 2003), IEEE Computer

Society Press.

[3] Funk, S., Goossens, J., and Baruah, S. On-line scheduling on uniform multiprocessors. In Proceedings of the IEEE Real-Time Systems Symposium (December 2001), IEEE Computer Society Press,

pp. 183–192.

[4] Hochbaum, D. S., and Shmoys, D. B. Using dual approximation algorithms for scheduling problems:

Theoretical and practical results. Journal of the ACM 34, 1 (Jan. 1987), 144–162.

[5] Hochbaum, D. S., and Shmoys, D. B. A polynomial time approximation scheme for scheduling on

uniform processors using the dual approximation approach. SIAM Journal on Computing 17, 3 (June

1988), 539–551.

[6] Johnson, D. S. Near-optimal Bin Packing Algorithms. PhD thesis, Department of Mathematics,

Massachusetts Institute of Technology, 1973.

[7] Liu, C., and Layland, J. Scheduling algorithms for multiprogramming in a hard real-time environment.

Journal of the ACM 20, 1 (1973), 46–61.


[8] Lopez, J. M., Garcia, M., Diaz, J. L., and Garcia, D. F. Worst-case utilization bound for

EDF scheduling in real-time multiprocessor systems. In Proceedings of the EuroMicro Conference on

Real-Time Systems (Stockholm, Sweden, June 2000), IEEE Computer Society Press, pp. 25–34.

[9] Srinivasan, A., and Baruah, S. Deadline-based scheduling of periodic task systems on multiprocessors. Information Processing Letters 84, 2 (2002), 93–98.
