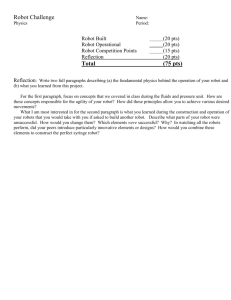

Massachusetts Institute of Technology Department of Electrical
