7. Extra Sums of Squares

advertisement

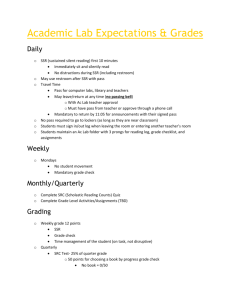

7. Extra Sums of Squares Football Example: Yi = #points scored by UF football team in game i Xi1 = #games won by opponent in their last 10 games Xi2 = #healthy starters for UF (out of 22) in game i Suppose we fit the SLR Yi = β0 + β1Xi1 + ²i and plot the residuals ei against Xi2: 1 6 4 residuals −2 0 2 Q: What do we conclude from this ? −6 −4 A: The residuals appear to be linearly related to Xi2, thus, Xi2 should be put into the model. 10 12 14 16 18 #healthy starters 20 22 2 Another Example: Yi = height of a person Xi1 = length of left foot Xi2 = length of right foot Suppose we fit the SLR Yi = β0 + β1Xi1 + ²i and plot the residuals ei against Xi2: 3 Q: Why no pattern? residuals A: Xi2 is providing the same information about Y that Xi1 does. Thus, even though Xi2 is a good predictor of height, it is unnecessary if Xi1 is already in the model. length of right foot 4 Extra sums of squares provide a means of formally testing whether one set of predictors is necessary given that another set is already in the model. Recall that SSTO = SSR + SSE n n n X X X (Yi − Ȳ )2 = (Ŷi − Ȳ )2 + (Yi − Ŷi)2 i=1 i=1 R 2 = i=1 SSR SSTO Important Fact: R2 will never decrease when a predictor is added to a regression model. 5 Consider the two different models: E(Yi) = β0 + β1Xi1 E(Yi) = β0∗ + β1∗Xi1 + β2∗Xi2 Q: Is SSTO the same for both models? A: Yes! Thus, SSR will never decrease when a predictor is added to a model. 6 Since SSE and SSR are different depending upon which predictors are in the model, we use the following notation: SSR(X1): SSR for a model with only X1 SSR(X1, X2): SSR for a model with X1 and X2 SSE(X1) and SSE(X1, X2) have analogous def’s Note SSTO = SSR(X1) + SSE(X1) SSTO = SSR(X1, X2) + SSE(X1, X2) We also know SSR(X1, X2) ≥ SSR(X1). Thus SSE(X1, X2) ≤ SSE(X1). Conclusion: SSE never increases when a predictor is added to a model. 7 Reconsider the Example: Yi = height of a person Xi1 = length of left foot; Xi2 = length of right foot Q: What do you think about the quantity SSR(X1, X2) − SSR(X1) A: Probably small because if we know the length of the left foot, knowing the length of the right won’t help. Notation: Extra Sum of Squares SSR(X2|X1) = SSR(X1, X2) − SSR(X1) SSR(X2|X1) tells us how much we gain by adding X2 to the model given that X1 is already in the model. 8 We define SSR(X1|X2) = SSR(X1, X2) − SSR(X2) We can do this with as many predictors as we like, e.g. SSR(X3, X5|X1, X2, X4) = SSR(X1, X2, X3, X4, X5) − SSR(X1, X2, X4) = SSR(all predictors) − SSR(given predictors) 9 Suppose our model is: E(Yi) = β0 + β1Xi1 + β2Xi2 + β3Xi3 Consider tests involving β1, β2, and β3. One Beta: H0 : βk = 0, HA : not H0 k = 1, 2, or 3 In words, this test says “Do we need Xk given that the other two predictors are in the model?” Can do this with a t-test: q t∗ = bk / MSE · [(X0X)−1]k+1,k+1 10 Two Betas: (some of the Betas) H0 : β1 = β2 = 0 HA : not H0 H0 : β1 = β3 = 0 HA : not H0 H0 : β2 = β3 = 0 HA : not H0 For example, the first of these asks “Do we need X1 and X2 given that X3 is in the model?” All Betas: H0 : β1 = β2 = β3 = 0 HA : not H0 This is just the overall F-Test We can do all of these tests using extra sum of squares. 11 Here is the ANOVA table corresponding to the model E(Yi) = β0 + β1Xi1 + β2Xi2 + β3Xi3 ANOVA Table: Source of variation SS Regression SSR(X1, X2, X3) p−1=3 Error SSE(X1, X2, X3) n−p=n−4 Total SSTO n−1 df 12 Partition SSR(X1, X2, X3) into 3 one df extra sums of squares. One way to do it is: SSR(X1, X2, X3) = SSR(X1) + SSR(X2|X1) + SSR(X3|X1, X2) Modified ANOVA Table: Source of variation SS df Regression SSR(X1, X2, X3) 3 SSR(X1) SSR(X2|X1) SSR(X3|X1, X2) 1 1 1 Error SSE(X1, X2, X3) n−4 Total SSTO n−1 Note: there are 6 equivalent ways of partitioning SSR(X1, X2, X3). 13 Three Tests: (p = 4 in this example) • One Beta: H0 : β2 = 0 vs. HA : not H0 SSR(X2|X1, X3)/1 SSE(X1, X2, X3)/(n − p) Rejection rule: Reject H0 if F ∗ > F (1 − α; 1, n − p) Test statistic: F ∗ = • Some Betas: H0 : β2 = β3 = 0 vs. HA : not H0 SSR(X2, X3|X1)/2 SSE(X1, X2, X3)/(n − p) Rejection rule: Reject H0 if F ∗ > F (1 − α; 2, n − p) Test statistic: F ∗ = • All Betas: H0 : β1 = β2 = β3 = 0 vs. HA : not H0 SSR(X1, X2, X3)/3 SSE(X1, X2, X3)/(n − p) Rejection rule: Reject H0 if F ∗ > F (1 − α; p − 1, n − p) Test statistic: F ∗ = 14 Let’s return to the model E(Yi) = β0 + β1Xi1 + β2Xi2 + β3Xi3 and think about testing H0 : β2 = β3 = 0 Test statistic: F ∗ = vs. HA : not H0 SSR(X2, X3|X1)/2 MSE(X1, X2, X3) How do we get SSR(X2, X3|X1) if we have SSR(X1), SSR(X2|X1), and SSR(X3|X1, X2)? SSR(X2, X3|X1) = SSR(X2|X1) + SSR(X3|X1, X2) What if we would have SSR(X2), SSR(X1|X2), and SSR(X3|X1, X2)? Stuck! 15 lm(Y ∼ X1+X2+X3) SSR(X1) SSR(X2|X1) SSR(X3|X1, X2) lm(Y ∼ X2+X1+X3) SSR(X2) SSR(X1|X2) SSR(X3|X1, X2) 16 Example: Patient Satisfaction Yi Xi1 Xi2 Xi3 = = = = patient satisfaction (n = 23) patient’s age in years severity of illness (index) anxiety level (index) Model 1: Consider the model with all 3 pairwise interactions included (p = 7) E(Yi) = β0 + β1Xi1 + β2Xi2 + β3Xi3 + β4Xi1Xi2 + β5Xi1Xi3 + β6Xi2Xi3 and think about testing the 3 interaction terms: H0 : β4 = β5 = β6 = 0 17 vs. HA : not H0 Denote the interaction Xj Xk by Ijk . Then Test statistic: F ∗ = SSR(I12, I13, I23|X1, X2, X3)/3 MSE(X1, X2, X3, I12, I13, I23) Rejection rule: Reject H0 if F ∗ > F (1 − α; 3, n − p) How do we get this extra sum of squares? 18 Q: How many partitions of SSR(X1, X2, X3, I12, I13, I23) into 6 one df extra sums of squares are there? A: 6 × 5 × 4 × 3 × 2 = 6! = 720 Q: Which ones will allow us to compute F ∗? A: The ones with I12, I13, and I23 last. SSR(·) = SSR(X1) + SSR(X2|X1) + SSR(X3|X1, X2) +SSR(I12|X1, X2, X3) +SSR(I13|X1, X2, X3, I12) +SSR(I23|X1, X2, X3, I12, I13) Add the last 3 (the interaction terms) to get SSR(I12, I13, I23|X1, X2, X3) 19 > summary(mod1 <- lm(sat ~ age + sev + anx + age:sev + age:anx + sev:anx)) Coefficients: Estimate Std. Error t value Pr(>|t|) (Intercept) 241.57104 169.91520 1.422 0.174 age 0.28112 4.65467 0.060 0.953 sev -6.32700 5.40579 -1.170 0.259 anx 24.02586 101.65309 0.236 0.816 age:sev 0.06969 0.10910 0.639 0.532 age:anx -2.20711 1.74936 -1.262 0.225 sev:anx 1.16347 1.98054 0.587 0.565 20 > anova(mod1) Analysis of Variance Table Response: sat Df Sum Sq Mean Sq F value Pr(>F) age 1 3678.44 3678.44 32.20 3.45e-05 *** sev 1 402.78 402.78 3.53 0.079 . anx 1 52.41 52.41 0.46 0.508 sev:age 1 0.02 0.02 0.00 0.989 sev:anx 1 1.81 1.81 0.02 0.901 age:anx 1 181.85 181.85 1.59 0.225 Residuals 16 1827.90 114.24 F∗ = (0.02+1.81+181.85)/3 114.24 = 0.54 is compared to F (0.95; 3, 16) > qf(0.95, 3, 16) [1] 3.238872 Because F ∗ < F (0.95; 3, 16) = 3.24 we fail to reject H0 (Interactions are not needed). 21 Model 2: Let’s get rid of the interactions and consider E(Yi) = β0 + β1Xi1 + β2Xi2 + β3Xi3 Do we need X2 (severity of illness) and X3 (anxiety level) if X1 (age) is already in the model? H0 : β2 = β3 = 0 vs. HA : not H0 Test statistic: F ∗ = SSR(X2, X3|X1)/2 MSE(X1, X2, X3) Rejection rule: Reject H0 if F ∗ > F (1 − α; 2, n − p) How do we get this extra sum of squares? SSR(X2, X3|X1) = SSR(X2|X1) + SSR(X3|X1, X2) 22 > summary(mod2 <- lm(sat ~ age + sev + anx)) Coefficients: Estimate Std. Error t value Pr(>|t|) (Intercept) 162.8759 25.7757 6.319 4.59e-06 *** age -1.2103 0.3015 -4.015 0.00074 *** sev -0.6659 0.8210 -0.811 0.42736 anx -8.6130 12.2413 -0.704 0.49021 > anova(mod2) Analysis of Variance Table Response: sat Df Sum Sq Mean Sq F value Pr(>F) age 1 3678.4 3678.4 34.74 1.e-05 *** sev 1 402.8 402.8 3.80 0.0660 . anx 1 52.4 52.4 0.49 0.4902 Residuals 19 2011.6 105.9 23 F∗ = (402.8+52.4)/2 105.9 = 2.15 is compared to > qf(0.95, 2, 19) [1] 3.521893 Because F ∗ < F (0.95; 2, 19) = 3.52 we again fail to reject H0 (X2 and X3 are not needed). 24 Model 3: Let’s get rid of X2 (severity of illness) and X3 (anxiety level) and consider the SLR with X1 (age) E(Yi) = β0 + β1Xi1 > summary(mod3 <- lm(sat ~ age)) Coefficients: Estimate Std. Error t value Pr(>|t|) (Intercept) 121.8318 11.0422 11.033 3.37e-10 *** age -1.5270 0.2729 -5.596 1.49e-05 *** > anova(mod3) Analysis of Variance Table Response: sat Df Sum Sq Mean Sq F value Pr(>F) age 1 3678.4 3678.4 31.315 1.49e-05 *** Residuals 21 2466.8 117.5 25 Let’s construct 95% CI’s for β1 and for E(Yh) = X0hβ, where X0h = (1 40 50 2), based on these 3 models. > new <- data.frame(age=40, sev=50, anx=2) 26 Model 3: (p = 2) p b1 ± t(0.975; 21) MSE/SXX = (−2.09, −0.96) > predict(mod3,new,interval="confidence",level=0.95) fit lwr upr [1,] 60.75029 56.0453 65.45528 Model 2: (p = 4) p b1 ± t(0.975; 19) MSE[(X0X)−1]22 = (−1.84, −0.58) > predict(mod2,new,interval="confidence",level=0.95) fit lwr upr [1,] 63.94183 55.85138 72.03228 Model 1: (p = 7) p b1 ± t(0.975; 16) MSE[(X0X)−1]22 = (−9.59, 10.15) > predict(mod1,new,interval="confidence",level=0.95) fit lwr upr [1,] 63.67873 54.9398 72.41767 27 Correlation of Predictors Multicollinearity Recall the SLR situation: data (Xi, Yi), i = 1, . . . , n r2 = SSR/SSTO describes the amount of total variability in the Yi’s explained by the linear relationship between X and Y . Because of SSR = b21SXX , where b1 = SXY /SXX , and with SY Y = SSTO, the sample coefficient of correlation between X and Y is √ SXY 2 r = sign(b1) r = √ SXX SY Y and gives us information about the strength of the linear relationship between X and Y , as well as the sign of the slope (−1 ≤ r ≤ 1). 28 2.8 2.6 level of anxiety 2.2 2.4 Patient Satisfaction: 1.8 2.0 Correlation between Xi2 = severity of illness Xi3 = anxiety level r23 = 0.7945 (see below) 45 50 55 severity of illness 60 29 For a multiple regression data set (Xi1, . . . , Xi,p−1, Yi) rjY is the sample correlation coefficient between Xj and Y , rjk is the sample correlation coefficient between Xj and Xk . • If rjk = 0 then Xj and Xk are uncorrelated. When most of the rjk ’s are close to 1 or −1, we say we have multicollinearity among the predictors. > cor(patsat) sat age sev anx sat 1.0000 -0.7737 -0.5874 -0.6023 age -0.7737 1.0000 0.4666 0.4977 sev -0.5874 0.4666 1.0000 0.7945 anx -0.6023 0.4977 0.7945 1.0000 30 Uncorrelated vs. correlated predictors Consider the 3 models: (1) E(Yi) = β0 + β1Xi1 (2) E(Yi) = β0 + β2Xi2 (3) E(Yi) = β0 + β1Xi1 + β2Xi2 and the 2 cases: • X1 and X2 are uncorrelated (r12 ≈ 0), then b1 will be the same for models (1) and (3) b2 will be the same for models (2) and (3) SSR(X1|X2) = SSR(X1) SSR(X2|X1) = SSR(X2) 31 • X1 and X2 are correlated (|r12| ≈ 1), then b1 will be different for models (1) and (3) b2 will be different for models (2) and (3) SSR(X1|X2) < SSR(X1) SSR(X2|X1) < SSR(X2) When r12 ≈ 0, X1 and X2 contain no redundant information about Y . Thus, X1 explains the same amount of the SSTO when X2 is in the model as it does when X2 is not. 32 Overview of the Effect of Multicollinearity The standard errors of the parameter estimates are inflated. Thus, CI’s for the regression parameters may be to large to be useful. Inferences about E(Yh) = X0hβ, the mean of a response at X0h, and Yh(new), a new random variable observed at Xh, are unaffected for the most part. The idea of increasing X1, when X2 is fixed, may not be reasonable. E(Yi) = β0 + β1Xi1 + β2Xi2 Interpretation: β1 represents “the change in the mean of Y corresponding to a unit increase in X1 holding X2 fixed”. 33 Polynomial Regression Suppose we have SLR type data (Xi, Yi), i = 1, . . . , n. If Yi = f (Xi) + ²i, where f (·) is unknown, it may be reasonable to approximate f (·) using a polynomial E(Yi) = β0 + β1Xi + β2Xi2 + β3Xi3 + · · · Usually, you wouldn’t go beyond the 3rd power. Standard Procedure: • Start with a higher order model and try to simplify. • If X k is retained, so are the lower order terms X k−1, X k−2, . . . , X. Warning: • The model E(Yi) = β0 + β1Xi + · · · + βn−1Xin−1 always fits perfectly (p = n). • Polynomials in X are highly correlated. 34 Polynomial Regression Example: Fish Data Yi = log(species richness + 1) observed at lake i, i = 1, . . . , 80, in NY’s Adirondack State Park. We consider the 3rd order model: E(Yi) = β0 + β1pHi + β2pHi2 + β3pHi3 > lnsr <- log(rch+1) > ph2 <- ph*ph; ph3 <- ph2*ph > summary(m3 <- lm(lnsr ~ ph + ph2 + ph3)) Coefficients: Estimate Std. Error t value Pr(>|t|) (Intercept) -16.82986 7.44163 -2.262 0.0266 * ph 7.07937 3.60045 1.966 0.0529 . ph2 -0.87458 0.56759 -1.541 0.1275 ph3 0.03505 0.02930 1.196 0.2354 --- 35 Residual standard error: 0.4577 on 76 df Multiple R-Squared: 0.447, Adjusted R-squared: 0.425 F-statistic: 20.45 on 3 and 76 df, p-value: 8.24e-10 > anova(m3) Analysis of Variance Table Response: lnsr Df Sum Sq Mean Sq F value Pr(>F) ph 1 7.9340 7.9340 37.8708 3.280e-08 *** ph2 1 4.6180 4.6180 22.0428 1.158e-05 *** ph3 1 0.2998 0.2998 1.4308 0.2354 Residuals 76 15.9221 0.2095 Looks like pH 3 is not needed. 36 Let’s see if we can get away with a SLR: H0 : β2 = β3 = 0 vs. HA : not H0 Test statistic: F∗ = = SSR(pH 2, pH 3|pH)/2 MSE(pH, pH 2, pH 3) (4.6180 + 0.2998)/2 = 11.74 0.2095 Rejection rule: Reject H0 if F ∗ > F (0.95; 2, 76) = 3.1 Thus, a higher order term is necessary. 37 Let’s test H0 : β3 = 0 vs. HA : β3 6= 0 SSR(pH 3|pH, pH 2)/1 Test statistic: F = 2 3 = 1.43 MSE(pH, pH , pH ) ∗ Rejection rule: Reject H0 if F ∗ > F (0.95; 1, 76) = 4.0 Conclusion: Can’t throw away pH and pH 2 so the model we use is E(Yi) = β0 + β1pHi + β2pHi2 > summary(m2 <- lm(lnsr ~ ph + ph2)) Coefficients: Estimate Std. Error t value Pr(>|t|) (Intercept) -8.1535 1.6675 -4.890 5.40e-06 *** ph 2.8201 0.5345 5.276 1.18e-06 *** ph2 -0.1975 0.0422 -4.682 1.20e-05 *** --- 38 Residual standard error: 0.459 on 77 df Multiple R-Squared: 0.436, Adjusted R-squared: 0.422 F-statistic: 29.79 on 2 and 77 df, p-value: 2.6e-10 > anova(m2) Analysis of Variance Table Response: lnsr Df Sum Sq Mean Sq F value Pr(>F) ph 1 7.9340 7.9340 37.66 3.396e-08 *** ph2 1 4.6180 4.6180 21.92 1.198e-05 *** Residuals 77 16.2218 0.2107 --- 39 0.0 0.5 log(species richness + 1) 1.0 1.5 2.0 2.5 1 3 2 4 5 6 7 40 ph 8 9 Q: What’s the big deal? All we did was get rid of the third order term, pHi3. A: Suppose we are interested in a 95% CI for β1: Model 3rd order 2nd order b1 7.08 2.82 s.e. 3.60 0.53 41 CI(β1) (−0.12, 14.28) (+1.75, 3.89) We can do all of this stuff with more than 1 predictor. Suppose we have (Xi1, Xi2, Yi), i = 1, . . . , n. 2nd order model: 2 2 E(Yi) = β0 + β1Xi1 + β2Xi2 + β3Xi1 + β4Xi2 + β5Xi1Xi2 We could test H0 : β3 = β4 = β5 = 0. That is: “Is a 1st order model sufficient?” Test statistic: F∗ = SSR(X12, X22, X1X2|X1, X2)/3 MSE(X1, X2, X12, X22, X1X2) Rejection rule: Reject H0 if F ∗ > F (0.95; 3, n − 6). 42