Defense Agile Acquisition Guide

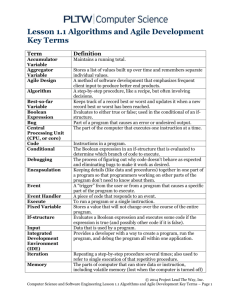
