Automating Hinting in an Intelligent Tutorial Dialog System


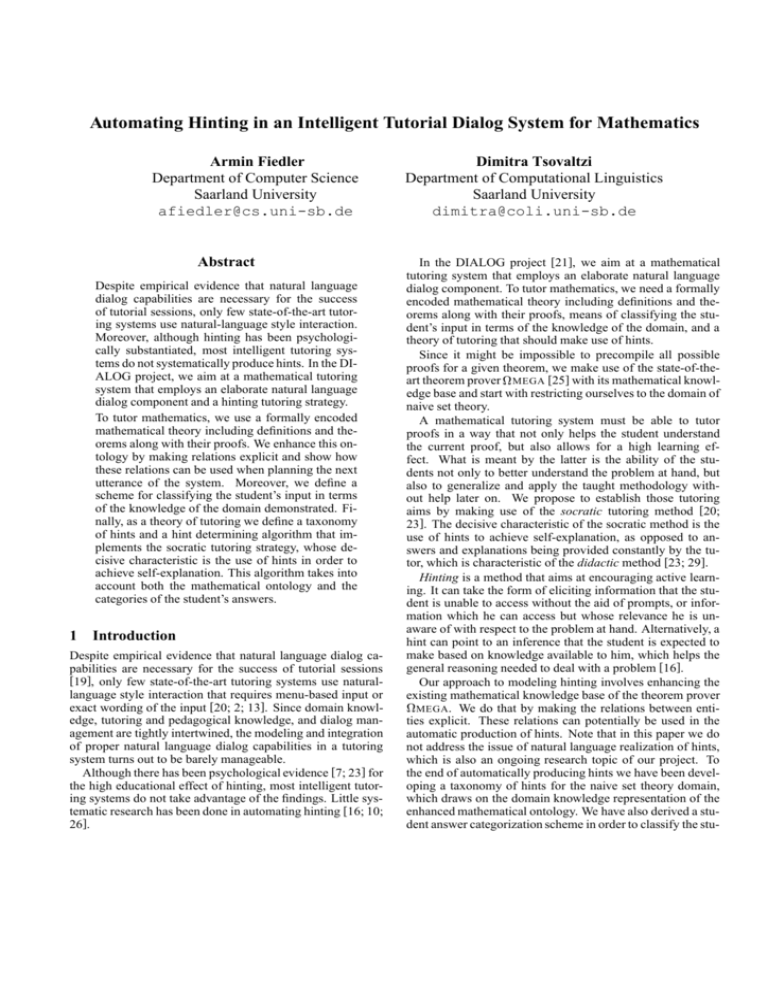

Automating Hinting in an Intelligent Tutorial Dialog System for Mathematics

Armin Fiedler
Department of Computer Science
Saarland University
afiedler@cs.uni-sb.de

Dimitra Tsovaltzi
Department of Computational Linguistics
Saarland University
dimitra@coli.uni-sb.de

Abstract

Despite empirical evidence that natural language dialog capabilities are necessary for the success of tutorial sessions, only few state-of-the-art tutoring systems use natural-language style interaction. Moreover, although hinting has been psychologically substantiated, most intelligent tutoring systems do not systematically produce hints. In the DIALOG project, we aim at a mathematical tutoring system that employs an elaborate natural language dialog component and a hinting tutoring strategy. To tutor mathematics, we use a formally encoded mathematical theory including definitions and theorems along with their proofs. We enhance this ontology by making relations explicit and show how these relations can be used when planning the next utterance of the system. Moreover, we define a scheme for classifying the student's input in terms of the knowledge of the domain demonstrated. Finally, as a theory of tutoring we define a taxonomy of hints and a hint determining algorithm that implements the socratic tutoring strategy, whose decisive characteristic is the use of hints in order to achieve self-explanation. This algorithm takes into account both the mathematical ontology and the categories of the student's answers.

1 Introduction

Despite empirical evidence that natural language dialog capabilities are necessary for the success of tutorial sessions [19], only few state-of-the-art tutoring systems use natural-language style interaction that requires menu-based input or exact wording of the input [20; 2; 13]. Since domain knowledge, tutoring and pedagogical knowledge, and dialog management are tightly intertwined, the modeling and integration of proper natural language dialog capabilities in a tutoring system turn out to be barely manageable.

Although there has been psychological evidence [7; 23] for the high educational effect of hinting, most intelligent tutoring systems do not take advantage of the findings. Little systematic research has been done in automating hinting [16; 10; 26].

In the DIALOG project [21], we aim at a mathematical tutoring system that employs an elaborate natural language dialog component. To tutor mathematics, we need a formally encoded mathematical theory including definitions and theorems along with their proofs, means of classifying the student's input in terms of the knowledge of the domain, and a theory of tutoring that should make use of hints. Since it might be impossible to precompile all possible proofs for a given theorem, we make use of the state-of-the-art theorem prover ΩMEGA [25] with its mathematical knowledge base and start with restricting ourselves to the domain of naive set theory.

A mathematical tutoring system must be able to tutor proofs in a way that not only helps the student understand the current proof, but also allows for a high learning effect. What is meant by the latter is the ability of the students not only to better understand the problem at hand, but also to generalize and apply the taught methodology without help later on. We propose to establish those tutoring aims by making use of the socratic tutoring method [20; 23]. The decisive characteristic of the socratic method is the use of hints to achieve self-explanation, as opposed to answers and explanations being provided constantly by the tutor, which is characteristic of the didactic method [23; 29].

Hinting is a method that aims at encouraging active learning. It can take the form of eliciting information that the student is unable to access without the aid of prompts, or information which he can access but whose relevance he is unaware of with respect to the problem at hand.
Alternatively, a hint can point to an inference that the student is expected to make based on knowledge available to him, which helps the general reasoning needed to deal with a problem [16].

Our approach to modeling hinting involves enhancing the existing mathematical knowledge base of the theorem prover ΩMEGA. We do that by making the relations between entities explicit. These relations can potentially be used in the automatic production of hints. Note that in this paper we do not address the issue of natural language realization of hints, which is also an ongoing research topic of our project.

To the end of automatically producing hints we have been developing a taxonomy of hints for the naive set theory domain, which draws on the domain knowledge representation of the enhanced mathematical ontology. We have also derived a student answer categorization scheme in order to classify the student's answers. The taxonomy and the student answer categories are used by our hinting algorithm, which models the socratic tutoring method by employing an implicit student model.

The implicit student model of the hinting algorithm makes use of the student answer categorization and of information on the domain knowledge demonstrated, or already provided by previous hints produced. Its aim is to use information from the running tutoring and the current progress of the student on the particular problem under consideration in order to produce hints. Those hints, in turn, intend to address the cognitive state of the student and cause him to reflect upon his reasoning. We are only concerned here with the process of making the student actively reflect on his answers. However, DIALOG uses ActiveMath [18] as an external facility for user modeling.

In this paper, we first provide a comprehensive description of our domain ontology (Section 2) and its use (Section 3). In Section 4 we give an overview of the taxonomy of hints. In Section 5 we look at our student answer categorization scheme and in Section 6 the hinting algorithm is presented. Next, in Section 7 an example dialog demonstrates the use of the research presented here as envisaged for our system. Finally, we briefly discuss some related work in Section 8 and conclude the paper.

2 A Mathematical Ontology for Hinting

The proof planner ΩMEGA [25] makes use of a mathematical database that is organized as a hierarchy of nested mathematical theories. Each theory includes definitions of mathematical concepts, lemmata and theorems about them, and inference rules, which can be seen as lemmata that the proof planner can directly apply. Moreover, each theory inherits all definitions, lemmata and theorems, which we will collectively call assertions henceforth, as well as all inference rules from nested theories. Since assertions and inference rules draw on mathematical concepts defined in the mathematical theories, the mathematical database implicitly represents many relations that can potentially be made use of in tutorial dialog. Further useful relations can be found when comparing the definitions of concepts with respect to common patterns.

In this section, we shall first show in Section 2.1 a part of ΩMEGA's mathematical database, which we shall use as an example domain henceforth. Then, we shall define in Section 2.2 the relations to be used in the hinting process.

2.1 A Mathematical Database

In ΩMEGA's database, assertions are encoded in a simply-typed $\lambda$-calculus, where every concept has a type and well-formedness of formulae is defined by the type restrictions. In this paper, we will concentrate on the mathematical concepts from naive set theory given in Table 1. These concepts draw on the types sets and inhabitants of sets. We give the definitions of the mathematical concepts in intuitive terms as well as in more formal terms paraphrasing but avoiding the $\lambda$-calculus encoding of ΩMEGA's database.

| Symbol | Concept |
|---|---|
| $\in$ | element |
| $\cap$ | intersection |
| $\cup$ | union |
| $\subseteq$ | subset |
| $\subset$ | strict subset |
| $\supseteq$ | superset |
| $\supset$ | strict superset |
| $\mathcal{P}$ | powerset |
| $\notin$ | not element |
| $\not\subseteq$ | not subset |
| $\not\subset$ | not strict subset |
| $\not\supseteq$ | not superset |
| $\not\supset$ | not strict superset |

Table 1: Mathematical concepts.

Let $U$, $V$ be sets and let $x$ be an inhabitant.

Element: The elements of a set are its inhabitants: $x \in U$ if and only if $x$ is an inhabitant of $U$.

Intersection: The intersection of two sets is the set of their common elements: $x \in U \cap V$ if and only if $x \in U$ and $x \in V$.

Union: The union of two sets is the set of the elements of both sets: $x \in U \cup V$ if and only if $x \in U$ or $x \in V$.

Subset: A set is a subset of another set if all elements of the former are also elements of the latter: $U \subseteq V$ if and only if for all $x \in U$ follows that $x \in V$.

Strict Subset: A set is a strict subset of another set if the latter has at least one element more: $U \subset V$ if and only if $U \subseteq V$ and there is an $x \in V$ such that $x \notin U$.

Superset: A set is a superset of another set if all elements of the latter are also elements of the former: $U \supseteq V$ if and only if for all $x \in V$ follows that $x \in U$.

Equality: Two sets are equal if they share the same elements: $U = V$ if and only if for all $x \in U$ follows that $x \in V$ and for all $x \in V$ follows that $x \in U$.

Powerset: The powerset of a set is the set of all its subsets: $\mathcal{P}(U) = \{ V \mid V \subseteq U \}$.

The definition of the negated concepts from Table 1 is straightforward.

Furthermore, we give examples of lemmata and theorems that use some of these concepts. Let $U$, $V$ and $W$ be sets:

Commutativity of Union: $U \cup V = V \cup U$.

Equality of Sets: If $U \subseteq V$ and $V \subseteq U$ then $U = V$.

Union in Powerset: If $U \in \mathcal{P}(W)$ and $V \in \mathcal{P}(W)$ then $U \cup V \in \mathcal{P}(W)$.

Finally, we give some examples for inference rules. Inference rules have the following general form:

$$\frac{P_1 \quad \cdots \quad P_n}{Q}\; R$$

where $P_1, \ldots, P_n$, $n \geq 0$, are the premises, from which the conclusion $Q$ is derived by rule $R$. Let $U$, $V$ and $W$ be sets. Example inference rules are:

$$\frac{U \subseteq V \quad V \subseteq U}{U = V}\;\mathrm{Set}{=} \qquad\qquad \frac{U \in \mathcal{P}(W) \quad V \in \mathcal{P}(W)}{U \cup V \in \mathcal{P}(W)}\;\cup\mathcal{P}$$

Note that the left inference rule encodes the lemma about the equality of sets, and the right inference rule encodes the lemma about the union in powersets. Since every lemma and theorem can be rewritten as an inference rule and every inference rule can be rewritten as a lemma or theorem, we identify lemmata and theorems with inference rules henceforth.

2.2 Enhancing the Ontology

The mathematical database implicitly represents many relations that can be made use of in tutorial dialog. Further useful relations can be found when comparing the definitions of concepts with respect to common patterns. We consider relations between mathematical concepts, between mathematical concepts and inference rules, and among mathematical concepts, formulae and inference rules. By making these relations explicit we convert the mathematical database into an enhanced ontology that can be used in hinting.

Mathematical Concepts, Formulae and Inference Rules

Let $\sigma$ be a mathematical concept, $R$ be an inference rule and $P_1, \ldots, P_n, Q$ formulae, where $P_1, \ldots, P_n$ are the premises and $Q$ the conclusion of $R$.

Introduction: Rule $R$ introduces $\sigma$ if and only if $\sigma$ occurs in the conclusion $Q$, but not in any of the premises $P_1, \ldots, P_n$.
Examples: introduces(Set=, $=$), introduces($\cup\mathcal{P}$, $\cup$)

Elimination: Rule $R$ eliminates $\sigma$ if and only if $\sigma$ occurs in at least one of the premises $P_1, \ldots, P_n$, but not in the conclusion $Q$.
Examples: eliminates(Set=, $\subseteq$), eliminates(Set=, …)

Automation

The automatic enhancement of the mathematical database by explicitly adding the relations defined previously is straightforward. The automation of the enhancement allows us to plug in any mathematical database and to convert it according to the same principles into a database that includes the relations we want to make use of in hinting.

Mathematical Concepts

Let $\sigma$, $\sigma'$ be mathematical concepts. We define the following relations between mathematical concepts:

Antithesis: $\sigma$ is in antithesis to $\sigma'$ if and only if it is its opposite concept (i.e., its logical negation).
Examples: antithesis($\in$, $\notin$), antithesis($\subseteq$, $\not\subseteq$)
Duality: $\sigma$ is dual to $\sigma'$ if and only if $\sigma$ is defined in terms of $P \Rightarrow Q$ and $\sigma'$ is defined in terms of $Q \Rightarrow P$ for some formulae $P$, $Q$.
Examples: dual($\subseteq$, $\supseteq$), dual($\subset$, $\supset$)

Junction: $\sigma$ is in a junction to $\sigma'$ if and only if $\sigma$ is defined in terms of $P_1 \wedge \cdots \wedge P_n$ and $\sigma'$ is defined in terms of $P_1 \vee \cdots \vee P_n$, or vice versa, for some formulae $P_1, \ldots, P_n$, where $n \geq 2$.
Examples: junction($\cap$, $\cup$)

Hypotaxis: $\sigma$ is in hypotaxis to $\sigma'$ if and only if $\sigma'$ is defined using $\sigma$. We say, $\sigma$ is a hypotaxon of $\sigma'$, and $\sigma'$ is a hypertaxon of $\sigma$.
Examples: hypotaxon($\in$, $\cap$), hypotaxon($\in$, $\cup$)

Primitive: $\sigma$ is a primitive if and only if it has no hypotaxon.
Examples: primitive($\in$)
Note that $\in$ is a primitive in ΩMEGA's database, since it is defined using inhabitant, which is a type, but not a defined concept.

Mathematical Concepts and Inference Rules

Let $\sigma$, $\sigma'$ be mathematical concepts and $R$ be an inference rule. We define the following relations:

Relevance: $\sigma$ is relevant to $R$ if and only if $R$ can only be applied when $\sigma$ is part of the formula at hand (either in the conclusion or in the premises).
Examples: relevant(Set=, $\subseteq$), relevant(Set=, $=$), relevant(Set=, …)

Dominance: $\sigma$ is dominant over $\sigma'$ for rule $R$ if and only if $\sigma$ appears in both the premises and the conclusion, but $\sigma'$ does not. $\sigma'$ has to appear in one of the premises or the conclusion.
Examples: dominant(…)

3 Making Use of the Ontology

In this section we further explain the use of the mathematical ontology by pointing out its exact relevance with regard to the general working framework. We also give a few examples of the application of the ontology in automating hint production.

The mathematical ontology is evoked primarily in the realization of hint categories chosen by the socratic algorithm described in Section 6. Due to the adaptive nature of the algorithm, and our goal to dynamically produce hints that fit the needs of the student with regard to the particular proof, we cannot restrict ourselves to the use of a gamut of static hints.

The algorithm takes as input the number and kind of hints produced so far, the number of wrong answers by the student and the categories of the current and previous student answer. It computes the appropriate hint category to be produced next from the taxonomy of hints. The hint category is chosen with respect to the implicit student model that the algorithm's input constitutes. This means that for every student and for his current performance on the proof being attempted, the hint category chosen must be realized in a different way. Each hint category in the hint taxonomy is defined based on generic descriptions of domain objects or relations. The role of the ontology is to map the generic descriptions on the actual objects or relations that are used in the particular context, that is, in the particular proof and the proof step at hand.

Another equally significant use of the domain ontology is in categorizing the student's answers. This use is a side-effect of the involvement of the ontology in automatically choosing hints. That is, the algorithm takes as input the analyzed student answer. In analyzing the latter, we compare it to the expected answer (see Section 5) and then look for the employment of necessary entities. The necessary entities are defined in terms of the ontology. The algorithm checks for the student's level of understanding by trying to track the use of these entities in the student's answer to be addressed next. The hint to be produced is then picked according to the knowledge demonstrated by the student. Note that this knowledge might have already been provided by the system itself, in a previous turn when dealing with the same performable step. Since the algorithm only checks for generic descriptions of those concepts, we suggest the use of the present ontology to map the descriptions onto the actual concepts relevant to the particular context.

Let us now give examples of the use of our domain ontology. All the relations mentioned here, which are defined in the ontology, have been explained in Section 2. For the hint give-away-relevant-concept, which points out the right mathematical concept that the student has to bring into play in order to carry the proof out, we use the domain ontology in the following way:

1. If the inference rule to be applied involves the elimination or the introduction of a concept, then we identify the relevant concept with the concept eliminated or introduced, respectively.
2. Otherwise, we look for a relevance relation and identify the relevant concept with that in the relation.

To produce the hint elaborate-domain-object we have to find in the domain the exact way an inference rule needs to be applied, for instance, whether it involves an elimination or an introduction. The student will be informed accordingly. For more on the construction and use of the mathematical ontology see [28].

4 A Taxonomy of Hints

In this section we explain the philosophy and the structure of our hint taxonomy. We also look into some hints that are used in the algorithm. The names of the categories are intended to be as descriptive of the content as possible, and should in some cases be self-explanatory. The taxonomy includes more than the hint categories mentioned in this section. The full taxonomy is given in Table 2. Some categories are not real hints (e.g., point-to-lesson), but have been included in the taxonomy since they are part of the general hinting process.

4.1 Philosophy and Structure

Our hint taxonomy was derived with regard to the underlying function that can be common for different surface realizations of hints. The underlying function is mainly responsible for the educational effect of hints. Although the surface structure, which undoubtedly plays its own significant role in teaching, is also being examined in our project, we do not address this issue in this paper.

We defined the hint categories based on the needs in the domain. To estimate those needs we made use of the objects and the relations between them as defined in the mathematical ontology. An additional guide for deriving hint categories that are useful for tutoring in our domain was a previous hint taxonomy, which was derived from the BE&E corpus [26].

The structure of the hint taxonomy reflects the function of the hints with respect to the information that the hint addresses or is meant to trigger. To capture different functions of a hint we define hint categories across two dimensions.

Before we introduce the dimensions, let us clarify some terminology. In the following, we distinguish performable steps from meta-reasoning. Performable steps are the steps that can be found in the formal proof. These include premises, conclusion and inference methods such as lemmata, theorems, definitions of concepts, or calculus-level rules. Meta-reasoning steps consist of everything that leads to the performable step, but cannot be found in the formal proof. To be more specific, meta-reasoning consists of everything that could potentially be applied to any particular proof. It involves general proving techniques. As soon as a general technique is instantiated for the particular proof, it belongs to the performable step level.

The two hint dimensions consist of the following classes:

1. active vs. passive
2. domain-relation vs. domain-object vs. inference-rule vs. substitution vs. meta-reasoning vs. performable-step

In the second dimension, we ordered the classes with respect to their subordination relation. We say that a class is subordinate to another one if it reveals more information. Each of these classes consists of single hint categories that elaborate on one of the attributes of the proof step under consideration. The hint categories are grouped in classes according to the kind of information they address in relation to the domain and the proof. By and large, the hints of the passive function of a class in the second dimension constitute the hints of the active function of its immediately subordinate class, in the same dimension. In addition, the class of pragmatic hints belongs to the second dimension as well, but we define it such that it is not subordinate to any other class and no other class is subordinate to it.

In Section 4.3 we look at the structure of the second dimension just described through some examples of classes and the hints defined in them.

| Class | Active | Passive |
|---|---|---|
| domain-relation | elicit-antithesis, elicit-duality, elicit-junction, elicit-hypotaxis, elicit-specialization, elicit-generalization | give-away-antithesis, give-away-duality, give-away-junction, give-away-hypotaxis, give-away-specialization, give-away-generalization |
| domain-object | give-away-antithesis, give-away-duality, give-away-junction, give-away-hypotaxis, give-away-specialization, give-away-generalization | give-away-relevant-concept, give-away-hypotactical-concept, give-away-primitive-concept |
| inference-rule | give-away-relevant-concept, give-away-hypotactical-concept, give-away-primitive-concept, elaborate-domain-object | give-away-inference-rule |
| substitution | give-away-inference-rule, elicit-substitution | spell-out-substitution |
| meta-reasoning | spell-out-substitution | explain-meta-reasoning |
| performable-step | explain-meta-reasoning, confer-to-lesson | give-away-performable-step |
| pragmatic | ordered-list, unordered-list, elicit-discrepancy | take-for-granted, point-to-lesson |

Table 2: The taxonomy of hints.

4.2 First Dimension

The first dimension distinguishes between the active and passive function of hints. The difference lies in the way the information to which the tutor wants to refer is approached. The idea behind this distinction resembles that of backward- vs. forward-looking function of dialog acts in DAMSL [8].

The active function of hints looks forward and seeks to help the student in accessing a further bit of information, by means of eliciting, that will bring him closer to the solution. The student has to think of and produce the answer that is hinted at.

The passive function of hints refers to the small piece of information that is provided each time in order to bring the student closer to some answer. The tutor gives away some information, which he has normally unsuccessfully tried to elicit previously. Due to that relation between the active and passive function of hints, the passive function of one hint class in the second dimension consists of hint categories that are included in the active function in its subordinate class.

4.3 Second Dimension

In this section we will give a few examples of classes and hint categories that capture the structure of the second dimension.

Domain-relation hints address the relations between mathematical concepts in the domain, as described in Section 2. The passive function of domain-relation hints is the active function of domain-object hints, that is, they are used to elicit domain objects.

Domain-object hints address an object in the domain. The hint give-away-relevant-concept names the most prominent concept in the proposition or formula under consideration. This might be, for instance, the concept whose definition the student needs to use in order to proceed with the proof, or the concept that will in general lead the student to understand which inference rule he has to apply. Other examples in the class are give-away-hypotactical-concept and give-away-primitive-concept. The terms hypotactical and primitive concept refer to the relation, based on the domain hierarchy, between the addressed concept and the original relevant concept, which the tutor is trying to elicit. Since this class is subordinate to domain-relation, the hints in it are more revealing than domain-relation hints. The passive function of domain-object hints is used to elicit the applicable inference rule, and, therefore, is part of the active function of the respective class. The same structure holds for inference-rule, substitution, meta-reasoning and performable-step hints.

Finally, the class of pragmatic hints is somewhat different from other classes in that it makes use of minimal domain knowledge. It rather refers to pragmatic attributes of the expected answer. The active function hints are ordered-list, which specifically refers to the order in which the parts of the expected answer appear, unordered-list, which only refers to the number of the parts, and elicit-discrepancy, which points out that there is a discrepancy between the student's answer and the expected answer. The latter can be used in place of all other active hint categories. Take-for-granted asks the student to just accept something as a fact either when the student cannot understand the explanation or when the explanation would require making use of formal logic. Point-to-lesson points the student to the lesson in general and asks him to read it again when it appears that he cannot be helped by tutoring because he does not remember the study material. There is no one-to-one correspondence between the active and passive pragmatic hints. Some pragmatic hints can be used in combination with hints from other classes. For more on the taxonomy of hints see [11].

5 Student Answer Categorization Scheme

In this section we present a scheme for categorizing student answers. We are only concerned here with the parts of the answer that address domain knowledge. We define student categories based on their completeness and accuracy with respect to the expected answer. The latter is always approximated for the student's own line of reasoning.
The output of the classification constitutes part of the input to the hinting algorithm, which models the hinting process. 5.1 Proof Step Matching The student’s answer is evaluated by use of an expected answer. The expected answer is the proof step which is expected 5 Accuracy Accuracy, contrary to completeness, is dependent on the domain ontology. It refers to the appropriateness of the object in the student answer with respect to the expected object. An object is accurate, if and only if it is the exact expected one. Currently we are using accuracy as a binary predicate in the same way that we do with completeness. However, we intend to extend our categorization to include different degrees of accuracy. next according to the formal proof which the system has chosen for the problem at hand. We want to make use of the student’s own reasoning in helping him with the task and avoid super-imposing a particular solution. We model that by trying to match the student’s answer to an proof step in one of a set of proofs. To this end we use the state-of-the-art theorem prover MEGA [25]. 5.2 Parts of Answers and Over-Answering We now define the relevant units for the categorization of the student answer. A part is a premise, the conclusion or the inference rule of a proof step. The two former are mathematical formulae and must be explicitly mentioned for the proof to be complete. The inference rule can either be referred to nominally, or it can be represented as a formula itself. In the latter case, we just consider that formula as one of the premises. It is up to the student to commit to using the rule one way or the other. A formula is a higher-order predicate logic formula. Every symbol defined in the logic is a function. Formulae can constitute of subformulae to an arbitrary degree of embedding. Constants are 0-ary functions that constitute the lowest level of entities considered. We also consider (accurate or inaccurate) over-answering as several distinct answers. 
That is, if the student’s answer has more proof steps than one, we consider the steps as multiple answers. The categorization is normally applied to them separately. Nevertheless, there are cases where the order of the presentation of the multiple answers is crucial. For example, we cannot count a correct answer that is inferred from a previous wrong answer, since the correct answer would have to follow from a wrong premise. 5.3 5.4 The Categories In this section we enumerate the categories of students answers based on our definitions of completeness and accuracy and with regard to the expected answer. We define the following student answer categories: Correct: An answer which is both complete and accurate. Complete-Partially-Accurate: An answer which is complete, but some parts in it are inaccurate. Complete-Inaccurate: An answer which is complete, but all parts in it are inaccurate. Incomplete-Accurate: An answer which is incomplete, but all parts that are present in it are accurate. Incomplete-Partially-Accurate: An answer which is incomplete and some of the parts in it are inaccurate. Wrong: An answer which is both incomplete and inaccurate. For the purposes of this paper, we collapse the categories complete-partially-accurate, complete-inaccurate and incomplete-partially-accurate to one category, namely, inaccurate. More on our student answer categorization scheme can be found in [27]. Completeness vs. Accuracy We define the predicates complete and accurate as follows: 5.5 Complete: An answer is complete if and only if all parts of the expected answer are mentioned. Subdialogs Subdialogs are crucial to the correct evaluation of the student answer and supplementary to the student answer categorization scheme just presented. The tutor can initiate subdialogs, for example, in case of ambiguity in order to resolve it. 
Moreover, students are given the opportunity to correct themselves, provide additional information on their reasoning and give essential information about the way they proceed with the task. Student’s can as well initiate subdialogs, usually with clarification questions or requests for particular information. An example of a subdialog initiation by the tutor is the case of potential “typos”. We want to treat them differently from conceptual domain mistakes, such as, wrong instantiations. We can prevent that by asking the student what he really meant to say. Our assumption is that if the student really just used the wrong symbol and did not make a conceptual domain mistake, he will realize it and correct it. If he does not correct it, we categorize the answer taking into account the domain mistake. This kind of subdialog we identify with alignment [6], and an instance of it is the example in Figure 11 . The tutor could not make sense of the student’s Accurate: A part of an answer is accurate if and only if the propositional content of the part is the true and expected one. Completeness From our definition of completeness it follows that completeness is dependent on domain objects, but not on our domain ontology. That is, the expected answer, which is the basis of the evaluation of the completeness of a student answer, necessarily makes use of objects in the domain. However, the relations of the objects in the domain are irrelevant to evaluating completeness. Completeness is a binary predicate. The only thing relevant to completeness is the presence or absence of objects in the student’s answer. In addition, we distinguish between getting the expected domain object right and instantiating it correctly. The latter does not follow from the former. Completeness is relevant to the presence of the object but not to its correct instantiation. 
In other words, a place holder for an expected object in the answer is enough for attributing completeness, no matter if the object itself is the expected one. That issue is dealt with by accuracy. 1 The examples presented here are from our recently collected corpus on mathematics tutorial dialogs in German [4]. Translations are included, where necessary. 6 utterance he substituted and for ! unless for ! . The student could not correct himself, although he realized from the tutor’s question that he had used the wrong symbol. O S , dann K K O F K K, weil O K, because S ] oder etwas anderes? Tutor (6): meinen Sie wirklich K [Do you really mean or something else?] F Student (7): Student (5): F wenn O S , then [if the student. The algorithm computes the appropriate hint category to be produced next from the taxonomy of hints. If the hinting does not effect correct student answers after several hints the algorithm switches to a didactic strategy, and, without hinting, explains the steps that the student cannot find himself. Nevertheless, the algorithm continues to ask the student for the subsequent step. If the student gives correct answers again and, thus, the tutor need not explain anymore, the algorithm switches back to the socratic strategy. In effect, hints are provided again to elicit the step under consideration. S The Main Algorithm The essentials of the algorithm are as follows: "!#!$%'&)(!+*,!-./0,"21 "3(4"!-.56!768(39#:&;"3 2/0&<&;"! =5?>(@>$/0A#B3C<&DEF153(G:>$(F&DHI3 J8&DE@152(G2K &+>$(@&HI360&:*,!""2*L "3(M>7*3*,256<>(@&HN26>(@1PO!Q!R&D3S7 2/0&<*3>//T"E(@*,0!( socratic !(P80(@>7*3*,E@">$ >$(@1P-.0&"&(O.@>DU&6!$%<&DE@13(G2K &+>$(F&DHI3 Figure 1: Example of alignment, Subject 13 Other cases of subdialog initiation include instances when the student’s answer cannot be classified, or when a student gives an answer which is in principle correct but not part of the current proof. 
6 A Hinting Algorithm
A tutoring system ideally aims at having the student find the solution to a problem by himself. Only if the student gets stuck should the system intervene. There is pedagogical evidence [7; 23] that students learn better if the tutor does not give away the answer but instead gives hints that prompt the student for self-explanations. Accordingly, based on [26], we have derived an algorithm that implements an eliciting strategy that is user-adaptive in that it chooses hints tailored to the student. Only if hints appear not to help does the algorithm switch to an explaining strategy, where it gives away the answer and explains it. We follow Person and colleagues [20] and Rosé and colleagues [23] in calling the eliciting strategy socratic and the explaining strategy didactic.

6.1 The Function socratic
The bulk of the work is done by the function socratic, which we only outline here. The function takes as an argument the category of the student’s current answer. If the origin of the student’s mistake is not clear, a clarification dialog is initiated, which we do not describe here. Note, however, that the function stops if the student gives the correct answer during that clarification dialog, as that means that the student corrected himself. Otherwise, the function produces a hint in a user-adaptive manner. The function socratic calls several other functions, which we look at subsequently. Let $H$ denote the number of hints produced so far, $C$ the category of the student’s current answer, and $C_{-1}$ the category of the student’s previous answer. The hint is then produced as follows:
  Case H = 0
    if C is wrong or inaccurate
    then call elicit
    else if C is incomplete-accurate
    then produce an active pragmatic hint (that is, ordered-list or unordered-list)

  Case H = 1
    if C is wrong
    then if C_{-1} is wrong or incomplete-accurate
         then call up-to-inference-rule
         else if C_{-1} is inaccurate
         then call elicit-give-away
         else if C_{-1} is correct
         then call elicit
    else call elicit

  Case H = 2
    if C is wrong
    then if this is the third wrong answer
         then produce explain-meta-reasoning
         else if the previous hint was an active substitution hint
         then produce spell-out-substitution
         else if the previous hint was spell-out-substitution
         then produce give-away-performable-step
         else call up-to-inference-rule
    else call elicit-give-away

  Case H = 3
    if C is wrong and it is at least the third wrong answer
    then produce point-to-lesson and stop
         (The student is asked to read the lesson again. Afterwards, the algorithm starts anew.)
    else produce explain-meta-reasoning

  Case H ≥ 4
    give away the answer and switch to the didactic strategy;
    switch back after consecutive correct answers, with all counters reset
    (After four hints, the algorithm starts to guide the student more than before to avoid frustration. It switches to the didactic strategy in the fashion described at the beginning of the section.)

  Function elicit-relevant-concept
    if the student knows a concept that is related to the relevant concept
    then produce an active domain-object hint
    else produce give-away-relevant-concept

Description of the Hinting Algorithm
We shall now present an algorithm that implements the socratic strategy. In intuitive terms, the algorithm aims at having the student find the proof by himself. If the student does not know how to proceed or makes a mistake, the algorithm prefers hinting at the right solution in order to elicit the problem solving instead of giving away the answer. An implicit student model makes the algorithm sensitive to students of different levels by providing increasingly informative hints.

The algorithm takes as input the number and kind of hints produced so far, the number of wrong answers by the student, and the current and previous student answer categories. The particular input to the algorithm is the category that the student answer has been assigned, based on our student answer categorization scheme, and the domain knowledge employed in the answer. The category of the student answer, in combination with the kinds of hints already produced and the use of required entities of the mathematical ontology, informs the algorithm of the level of the student’s knowledge. We described the use of the mathematical ontology in that context in Section 3. Moreover, the algorithm computes whether to produce a hint and which category of hint to produce, based on the number of wrong answers, the number and kind of hints already produced, as well as the domain knowledge demonstrated by the student.

The algorithm we just presented can easily be adapted to other domains, because the hint taxonomy is independent of the algorithm and only called by it. That means that the only thing that has to be adapted for applying the algorithm to different domains are the hint categories in the taxonomy, while the structure of the taxonomy has to remain the same. Defining adapted hint categories can be done through the domain ontology, which is by definition domain specific.

After having produced a hint, the function socratic analyzes the student’s answer to that hint. If the student’s answer is still not right, the function socratic is called recursively. However, if the student answers correctly and at least two hints have been produced, the algorithm re-visits the produced hints in reverse order to recapitulate the proof step and to make sure the student understands the reasoning so far.
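The case analysis on the number of hints, together with the recapitulation rule, can be sketched in Python as below. The sketch assumes the reading of the cases that the example dialog in Section 7 follows (elicit at the first wrong answer, up-to-inference-rule after a second one, explain-meta-reasoning at the third, point-to-lesson at the fourth, and the didactic strategy after four hints). The function names `select_move` and `recapitulation`, the parameter names, and the string labels are introduced here for illustration only and are not part of the DIALOG system.

```python
from typing import List, Optional

WRONG = "wrong"
INACCURATE = "inaccurate"
INCOMPLETE_ACCURATE = "incomplete-accurate"
CORRECT = "correct"


def select_move(h: int, c: str, c_prev: Optional[str] = None,
                wrong_answers: int = 0,
                prev_hint: Optional[str] = None) -> str:
    """Return the hint to produce or the function to call, as a label.

    h: hints produced so far; c / c_prev: current / previous answer
    category; wrong_answers: wrong answers so far, including this one.
    """
    if h == 0:
        if c in (WRONG, INACCURATE):
            return "call elicit"
        # Assumed to cover the incomplete-accurate case only.
        return "produce active pragmatic hint"
    if h == 1:
        if c != WRONG:
            return "call elicit"
        if c_prev in (WRONG, INCOMPLETE_ACCURATE):
            return "call up-to-inference-rule"
        if c_prev == INACCURATE:
            return "call elicit-give-away"
        return "call elicit"
    if h == 2:
        if c != WRONG:
            return "call elicit-give-away"
        if wrong_answers == 3:
            return "produce explain-meta-reasoning"
        if prev_hint == "active-substitution":
            return "produce spell-out-substitution"
        if prev_hint == "spell-out-substitution":
            return "produce give-away-performable-step"
        return "call up-to-inference-rule"
    if h == 3:
        if c == WRONG and wrong_answers >= 3:
            return "produce point-to-lesson"
        return "produce explain-meta-reasoning"
    # Four or more hints: stop hinting and explain.
    return "switch to didactic"


def recapitulation(hints: List[str]) -> List[str]:
    """Hints to re-visit after a correct answer, most recent first.

    The recapitulation only takes place if at least two hints
    were produced for the current proof step.
    """
    if len(hints) < 2:
        return []
    return list(reversed(hints))
```

Keeping the selection a pure function of the counters and categories makes the implicit student model explicit: the same answer category leads to a more informative hint as `h` and `wrong_answers` grow.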
The recapitulation is done by producing a sequence of active meta-reasoning hints, one for each hint that has addressed the current step of the proof, in reverse order. If the active meta-reasoning hints get the student to say anything but the right answer, the algorithm produces an explain-meta-reasoning hint. This is done to avoid frustrating the student, as his performance is poor.

The function socratic calls several functions, which we present now. The functions are self-explanatory.

  Function elicit
    if the student knows the relevant concept
    then if the student knows the inference rule
         then call up-to-substitution
         else call up-to-inference-rule
    else call elicit-relevant-concept

  Function up-to-inference-rule
    if the previous hint was give-away-hypotactical-concept
    then produce give-away-inference-rule
    else if the previous hint was give-away-relevant-concept
    then produce elaborate-domain-object
    else if the previous hint was elaborate-domain-object
    then produce give-away-hypotactical-concept
    else produce give-away-relevant-concept

  Function elicit-give-away
    if the previous hint was an active domain-object hint
    then call up-to-inference-rule
    else if the previous hint was a passive domain-object hint
    then produce elaborate-domain-object
    else if the previous hint was elaborate-domain-object
    then call up-to-substitution
    else produce give-away-performable-step and spell-out-substitution
         (this is to explain the substitution)

  Function up-to-substitution
    if the previous hint was elicit-substitution
    then produce spell-out-substitution
    else if the inference rule is known
    then produce elicit-substitution
    else produce give-away-inference-rule

7 An Example Dialog
Let us consider the following dialog between the tutoring system and a student on a simple proof. This will help elucidate how the algorithm for the socratic tutoring strategy proceeds and how it makes use of the hinting categories, the enhanced domain ontology, and the implicit student model. The excerpt comes from our recently collected corpus of tutorial dialogs in mathematics in German [4]. The subject is number 23.

The tutoring system, denoted as tutor in the following, is teaching the proof of the proposition “If $A \subseteq K(B)$, then $B \subseteq K(A)$”, where $K$ stands for the complement of a set, which is defined as follows: If $U$ is the universal set, and $A$ is its subset (i.e., $A \subseteq U$), we call the difference $U \setminus A$ simply the complement of $A$ and denote it briefly by $K(A)$; thus $K(A) = U \setminus A$.

Note that the corpus was collected via a Wizard of Oz experiment [5]. That means that the system is only partially implemented. Subjects interacted through an interface with a human “wizard” simulating the behavior of a system.² We give the example dialog in chunks, each consisting of one tutor turn and one student turn. The tutor’s turn includes a flag naming the hint. The student’s turn includes a flag for the answer category. The explanation of the tutor’s behavior in each chunk always precedes the chunk. The original German transcriptions are provided together with English translations where necessary.

² For details on the experiment see [4].

At the beginning of the session, the master proof, which will be taught, has not yet been determined. This will be done gradually, as the student commits himself to a particular proof by the choice of steps he makes (see Section 5.1). However, we first provide here the proof actually taught in this particular example, in order to help the reader follow it. In reality, the proof was given only as a summary at the very end of the tutoring session and was presented as follows:

Tutor (8): Ich zeige Ihnen nun den gesamten Beweis: Zunächst setzen wir die Gültigkeit von $A \subseteq K(B)$ voraus, denn dies ist die Voraussetzung. Dann nehmen wir ein beliebiges Element $x \in B$ und zeigen, daß dieses auch in $K(A)$ sein muß. Sei also $x$ in $B$. Dann ist $x$ nicht in $K(B)$ und deshalb nach Voraussetzung auch nicht in $A$. Wenn aber $x$ nicht in $A$ ist, so ist es in $K(A)$, was den Beweis abschließt, da $x$ beliebig war.
[I will show you the complete proof now: First we assume the validity of $A \subseteq K(B)$, as this is the assumption. Then we take an arbitrary element $x \in B$ and show that it has to be in $K(A)$ as well. Let $x$ be in $B$. Then $x$ is not in $K(B)$ and therefore, according to the assumption, also not in $A$. But if $x$ is not in $A$, it is in $K(A)$, which concludes the proof, as $x$ was arbitrary.]

When the algorithm starts, the counter $H$ for the produced hints is initialized to 0. Since the proof is not completed, the tutor prompts for the first step by introducing the task.

Tutor (1): Bitte zeigen Sie: Wenn $A \subseteq K(B)$, dann $B \subseteq K(A)$!
[Please show the following: If $A \subseteq K(B)$, then $B \subseteq K(A)$!]
Student (1): (wrong) …

This answer is categorized as wrong, since it does not contain any accurate parts. Thus the algorithm calls the function socratic. Since $H = 0$, the function elicit is called and, through that, elicit-relevant-concept. The latter checks the student’s knowledge by matching something in the answer to the relevant concept needed. That is done with the help of the domain ontology. The student has not used any of the concepts that can be matched to the relevant concept of a possible proof. Thus, the system has to choose the first step to teach. Eventually a hint of type give-away-relevant-concept is produced. The relevant concept is the implication. The tutor chooses a clearer referring expression for it, namely “if-then-relation”. The active function of the hint is to elicit the inference rule, as will become evident later on in the example. Here is the realization of the hint:

Tutor (2): (give-away-relevant-concept) Das ist nicht richtig! Sie müssen als erstes die wenn-dann-Beziehung betrachten.
[That is not correct! First you have to consider the if-then-relation.]
Student (2): (wrong) …

With the production of this hint, the counter is incremented by 1. However, this hint is not sufficient to help the student. He gives an answer which is correct in principle, but does not lead anywhere. Therefore, it is categorized as wrong. The function socratic is called recursively. Since $H = 1$, the function up-to-inference-rule is called, which produces a hint of type elaborate-domain-object. This is a second attempt to elicit the inference rule. Again, the student’s knowledge of the necessary concept is checked first in the same function. The tutor addresses the answer and informs the student of its status.

Tutor (3): (elaborate-domain-object) Das ist zwar richtig, aber im Augenblick uninteressant. Wissen Sie, wie Sie die wenn-dann-Beziehung behandeln müssen?
[That is correct, but at the moment not interesting. Do you know how to deal with the if-then-relation?]
Student (3): (wrong) Nein
[No]

The counter is incremented by 1. The new student answer is also considered wrong.³ Since this is the third wrong answer and $H = 2$, the algorithm now produces an explain-meta-reasoning hint and explains the reasoning behind this step. The number of hints and wrong answers implies a low student level.

³ We are currently investigating the need to differentiate between “I don’t know” answers, such as this one, and wrong attempts. However, these were treated uniformly in this dialog.

Tutor (4): (explain-meta-reasoning) Sie müssen die Beziehung auflösen, indem Sie die Gültigkeit der Voraussetzung annehmen und daraus die Gültigkeit der Behauptung folgern.
[You have to break down the relation by assuming the validity of the assumption and deriving the validity of the proposition from that.]
Student (4): (unknown) …

The counter is incremented again and it is now 3. The student answer cannot be categorized at all. Therefore the system initiates a subdialog to clarify the problematic point in the student’s answer.

Tutor (5): Was wollen Sie mit dem Zeichen … ausdrücken?
[What do you want to say with the character …?]
Student (5): (wrong) … daraus folgt …
[… from that follows …]

The counter is not incremented this time, since no hint has been produced. The student’s response to the clarification subdialog is categorized as wrong. This is now the fourth wrong answer and $H = 3$. The system produces a give-away-performable-step hint, which really gives the current answer away.⁴

⁴ Note that normally the student would have been asked to read the lesson material again through a point-to-lesson hint, and in effect the tutoring session would start anew after that. However, for the purposes of the experiment and the collection of our corpus, we altered the algorithm in the way described here.

Tutor (6): (give-away-performable-step) Das ist genau das, was Sie beweisen sollen. Wir setzen nun voraus, daß $A \subseteq K(B)$ gilt. Daraus wollen wir dann schließen, daß die Behauptung $B \subseteq K(A)$ gilt. Verstehen Sie, warum wir annehmen müssen, daß $A \subseteq K(B)$ gilt?
[That is exactly what you are supposed to prove. We assume now that $A \subseteq K(B)$ holds. From that we want to conclude that the proposition $B \subseteq K(A)$ holds. Do you understand why we need to assume that $A \subseteq K(B)$ holds?]
Student (6): (wrong) Nein
[No]

The counter is again incremented by 1. Since $H = 4$, the algorithm switches to the didactic method in order to avoid frustrating the student. The system explains the meta-reasoning for the whole performable step, which has already been given in the previous tutor turn.

Tutor (7): Wir müssen dies annehmen, weil wir die wenn-dann-Beziehung auflösen wollen. Bei einer solchen Beziehung muß man stets zeigen, daß aus einem bestimmten Ausdruck ein anderer Ausdruck folgt. Deshalb nehmen wir zuerst die Gültigkeit des ersten Ausdrucks an, weil wir sonst keine Basis hätten, von der wir ausgehen können. Verstehen Sie, warum wir zuerst die wenn-dann-Beziehung auflösen wollen?
[We have to assume this, because we want to break down the if-then-relation. With such a relation one always has to show that from a certain term another term follows. Therefore we first assume the validity of the first term, as otherwise we would have no base to start from. Do you understand why we first want to break down the if-then-relation?]
Student (7): (wrong) Nein
[No]

After having completed the explanation of this proof step, the system re-visits all hints produced by the socratic strategy while explaining the step, and makes the student aware of why they were produced. However, we will not go into such detail here, since it is not in the focus of this paper. This constitutes the proof summary that we gave at the beginning of this example.

So far we have looked at the behavior of the algorithm when the student constantly gives wrong answers. To give the reader a clearer view, let us now examine what happens if the student gives a partial answer. Let us assume that, in the first turn, the student gave an incomplete-accurate answer instead of a wrong answer. This example is also from our corpus, albeit from a different teaching session with another subject (Subject 22) on the same proof.

Student (1’): …

The student here gives a correct answer. However, the content of the answer cannot easily be inferred from the premise. That means that it has to be proved before it can be used in the proof. Since the student has not proved it, the answer is considered incomplete. That is, there are missing parts, namely the proof of what is stated. Nonetheless, all parts that are there are accurate. That makes the student’s answer incomplete-accurate. Subject 22 took part in the experiment in a different condition, which did not involve hinting. However, we chose this example for its clarity.

In the example that we have been investigating, the algorithm would call an active pragmatic hint for this student answer category, and more specifically an elicit-discrepancy hint. A possible realization of such a hint would be:

Tutor (2’): That is correct, but why?

Now, if the student follows the hint, he could give a correct answer, which would look like this:

Student (2’): If there was an element … and …, then it would be …, which is a contradiction to the assumption.

This is the correct answer, which completes the first proof step as it is shown in the formal proof. Had more than one hint been produced so far, then, since the student gave a correct answer, the system would do a recapitulation by revisiting the hints produced and asking the student to explain why the particular course of proceeding is followed. If the student could not answer any of the questions, then the system would give the answer away itself before asking the next question, addressing the next hint. Since only one hint was produced in our imaginary dialog, the recapitulation is omitted. Thus the algorithm proceeds with merely prompting for the next step.

8 Related Work and Discussion
Several other tutoring systems tackle, in one form or the other, hinting strategies, domain ontologies and student answer categorization. We will only mention here the ones that we judge to be most related to our work.

Hinting
Ms. Lindquist [14], a tutoring system for high-school algebra, has some domain specific types of questions which are used for tutoring. Although there is some mention of hints, and the notion of gradually revealing information by rephrasing the question is prominent, there is no taxonomy of hints or any suggestions for dynamically producing them.

An analysis of hints can also be found in CIRCSIM-Tutor [15; 16], an intelligent tutoring system for blood circulation. Our work has been largely inspired by the CIRCSIM project both for the general planning of the hinting process and for the taxonomy of hints. CIRCSIM-Tutor uses domain specific hint tactics that are applied locally, but does not include a global hinting strategy that models the cognitive reasoning behind the choice of hints. We, instead, make use of the hinting history in a more structured manner. Our algorithm takes into account the kind of hints produced previously as well as the necessary pedagogical knowledge, and follows a smooth transition from less to more informative hints. Furthermore, we have defined a structured hint taxonomy with refined definitions of classes and categories based on the passive vs. active distinction, which is similar to the active-passive continuum in CIRCSIM. We have distinguished these from functions, which resemble CIRCSIM tactics, but are again more detailed and more clearly defined. All this facilitates the automation of the hint production.

AutoTutor [20] uses curriculum scripts on which the tutoring of computer literacy is based. There is mention of hints that are used by every script. Although it is not clear exactly what those hints are, they seem to be static. More emphasis seems to be put on the pedagogically oriented choice of dialog moves, prosodic features and facial expression features, but not on hints. In contrast, we have presented in this paper a hint taxonomy (cf. Section 4) and a tutoring algorithm (cf. Section 6) that models the dynamic generation of hints according to the needs of the student. AutoTutor also uses a cycle of prompting-hinting-elaborating. This structure relies on the different roles of the dialog moves involved to capture the fact that the tutor provides more and more information if the student cannot follow the tutoring well. However, it does not provide hints that themselves reveal more information as the tutoring process progresses, which we have modeled in our algorithm for the socratic teaching method (cf. Section 6). Thus, the student is not merely made to articulate the expected answers, as is the case in AutoTutor, but he is also encouraged to actively produce the content of the answer itself. Furthermore, the separation of the study material and the tutoring session facilitates the production of the answers by the student, since the tutor does not have to present the material and re-elicit it in one and the same session. The student is guided through making use of the study material that he has already read in order to solve the problem.

Ontologies
CIRCSIM-Tutor [17; 9] uses an ontology at three levels: the knowledge of domain concepts, the computer context of tutoring, and the meta-language on attacking the problem. The ontology we present in this paper is not concerned with the second level. The first level corresponds to our existing knowledge base. The third level can be viewed as a simplified attempt to model tutoring, which we do via hinting. They do, however, use their domain ontology in categorizing the student answer and fixing mistakes.

Within the framework of STEVE [22], Diligent [3] is a tool for learning domain procedural knowledge. Knowledge is acquired first by observing an expert’s performance of a task, subsequently by conducting self-experimentation, and finally by human corrections on what Diligent has taught itself. The most relevant part of the knowledge representation is the representation of procedures in terms of steps in a task, ordering constraints, causal links and end goals. Although this is an elaborate learning tool, it is not equally elaborate in its use for interacting with students. It is currently limited to making use of the procedure representation to learning text in order to provide explanations.

Student Answer Categorization
AutoTutor [20] uses a sophisticated latent semantic analysis (LSA) approach. It computes the truth of an input based on the maximum match between it and the training material results. The system computes relevance by comparing it to expected answers. The latter are derived from the curriculum scripts. AutoTutor further uses the notions of completeness and compatibility. Both these notions are defined as percentages of the relevant aspect in the expected answer. Apart from the problem of insensitivity of statistical methods to linguistic phenomena crucial to the evaluation (e.g., negation) and the fact that LSA has proven a poor method for evaluating the kind of short answers representative of our domain, we also want a definition of completeness and accuracy that gives us insight into the part of the student answer that is problematic, and hence needs to be addressed by appropriate hinting. Therefore, defining them in terms of percentages is not enough. We need to represent the relevant domain entities that can serve as the basis for the hint production.

CIRCSIM-Tutor and PACT-Geometry tutor both use reasoning based on domain knowledge which is similar to the approach presented in this paper. They compare and evaluate the conceptual content of the student’s response by use of domain ontologies. CIRCSIM-Tutor [30; 12] uses a fine-grained classification. Unfortunately, the means of classifying the student’s response, that is, the rationale of the classification, is not documented. That makes comparison difficult. However, a list of ways of responding after a particular classification is available. Still, no flexibility is allowed in terms of a student model.

PACT-Geometry tutor [1] uses a semantic representation of the student’s input that makes use of a domain ontology in order to resolve concepts used and their relations. The correctness of the reasoning used is based on a hierarchy of explanation categories that includes legitimate reasoning steps in the domain. Although we do not use production rules, our work is similar to this approach in the use of organized domain knowledge to evaluate the student’s answer. It differs from it to the extent that the Geometry tutor appears not to have a classification, which is essential to us as input to the hinting algorithm.

9 Conclusion and Future Work
We have presented a domain ontology for naive set theory in mathematics. We propose to use this ontology in the general framework of investigating tutorial dialogs for the domain. We have defined relations among mathematical concepts, formulae and inference rules and applied this approach to the domain of naive set theory.

We have further built a hint taxonomy, which was derived with the aim of automating the hint categories defined in it. It is based on the needs of the domain, as those were revealed through the ontology, and on previous work done on hints. Moreover, a student answer categorization which makes use of the notions of expected answer, completeness and accuracy was derived. The taxonomy of hints in combination with the student answer categorization is used in a socratic algorithm which models hint production in tutorial dialogs. Our effort was to create an algorithm which is easily adaptable to other tutoring domains as well.

We propose to make use of our ontology in mapping descriptions of objects used in the hint categories onto actual objects in the domain, which are different each time according to the proof under consideration. This facilitates the automatic production of hints, which the socratic algorithm models, tailored to the needs of a particular student for a particular attempt at a proof.

The algorithm presented in this paper does not deal with the realization of the hints. We are currently investigating the surface structure of hints and their generation. Moreover, the produced hints do not necessarily complete the tutor’s dialog turn. Further examination is needed to suggest a model of dialog moves and dialog specifications in our domain.

In the future we plan to develop our domain ontology further to improve the evaluation of the student’s answer, for instance, by capturing different degrees of accuracy of the parts in the answer (cf. Section 5). We will also refine our student answer categories and model more subdialogs to better treat the refined categories. We are also researching ways of making full use of our hint taxonomy. All these improvements will, in turn, help us augment the hinting algorithm.

To meet these aims, we will use the empirical data from our recently collected corpus of mathematics tutorial dialogs [4]. The corpus was collected through Wizard-of-Oz experiments with an expert mathematics tutor. Moreover, the same data is valuable for our research on the actual sentence-level realization of hints. We are currently working towards incorporating the study of these empirical data into the research on automatically generating the hints that we can produce.

References
[1] Vincent Aleven, Octav Popescu, and Kenneth R. Koedinger. A tutorial dialogue system with knowledge-based understanding and classification of student explanations. In Working Notes of 2nd IJCAI Workshop on Knowledge and Reasoning in Practical Dialogue Systems, Seattle, USA, 2001.
[2] Vincent Aleven and Kenneth Koedinger. The need for tutorial dialog to support self-explanation. In Rosé and Freedman [24], pages 65–73.
[3] Richard Angros, Jr., W. Lewis Johnson, Jeff Rickel, and Andrew Scholer. Learning domain knowledge for teaching procedural skills. In Proceedings of AAMAS’02, Bologna, Italy, 2002.
[4] Chris Benzmüller, Armin Fiedler, Malte Gabsdil, Helmut Horacek, Ivana Kruijff-Korbayová, Manfred Pinkal, Jörg Siekmann, Dimitra Tsovaltzi, Bao Quoc Vo, and Magdalena Wolska. A Wizard-of-Oz experiment for tutorial dialogues in mathematics. In Proceedings of the AIED Workshop on Advanced Technologies for Mathematics Education, Sydney, Australia, 2003. Submitted.
[5] N.O. Bernsen, H. Dybkjær, and L. Dybkjær. Designing Interactive Speech Systems: From First Ideas to User Testing. Springer, 1998.
[6] Jean Carletta, Amy Isard, Stephen Isard, Jacqueline C. Kowtko, Gwyneth Doherty-Sneddon, and Anne H. Anderson. The reliability of a dialogue structure coding scheme. Computational Linguistics, 23(1):13–32, 1997.
[7] Michelene T. H. Chi, Nicholas de Leeuw, Mei-Hung Chiu, and Christian LaVancher. Eliciting self-explanation improves understanding. Cognitive Science, 18:439–477, 1994.
[8] Mark G. Core and James F. Allen. Coding dialogues with the DAMSL annotation scheme. In AAAI Fall Symposium on Communicative Action in Humans and Machines, pages 28–35, Boston, MA, 1993.
[9] Martha W. Evens, Stefan Brandle, Ru-Charn Chang, Reva Freedman, Michael Glass, Yoon Hee Lee, Leem Seop Shim, Chong Woo Woo, Yuemei Zhang, Yujian Zhou, Joel A. Michael, and Allen A. Rovick. CIRCSIM-Tutor: An intelligent tutoring system using natural language dialogue. In Proceedings of 12th Midwest AI and Cognitive Science Conference, MAICS-2001, pages 16–23, Oxford, OH, 2001.
[10] Armin Fiedler and Helmut Horacek. Towards understanding the role of hints in tutorial dialogues. In BI-DIALOG: 5th Workshop on Formal Semantics and Pragmatics in Dialogue, pages 40–44, Bielefeld, Germany, 2001.
[11] Armin Fiedler and Dimitra Tsovaltzi. Automating hinting in mathematical tutorial dialogue. In Proceedings of the EACL-03 Workshop on Dialogue Systems: interaction, adaptation and styles of management, pages 45–52, Budapest, 2003.
[12] Michael Glass. Processing language input in the CIRCSIM-Tutor intelligent tutoring system. In Johanna Moore, Carol Luckhardt Redfield, and W. Lewis Johnson, editors, Artificial Intelligence in Education, pages 210–212. IOS Press, 2001.
[13] Neil Heffernan and Kenneth Koedinger. Intelligent tutoring systems are missing the tutor: Building a more strategic dialog-based tutor. In Rosé and Freedman [24], pages 14–19.
[14] Neil T. Heffernan and Kenneth R. Koedinger. Building a 3rd generation ITS for symbolization: Adding a tutorial model with multiple tutorial strategies. In Proceedings of the ITS 2000 Workshop on Algebra Learning, Montréal, Canada, 2000.
[15] Gregory Hume, Joel Michael, Allen Rovick, and Martha Evens. Student responses and follow up tutorial tactics in an ITS. In Proceedings of the 9th Florida Artificial Intelligence Research Symposium, pages 168–172, Key West, FL, 1996.
[16] Gregory D. Hume, Joel A. Michael, Allen A. Rovick, and Martha W. Evens. Hinting as a tactic in one-on-one tutoring. Journal of the Learning Sciences, 5(1):23–47, 1996.
[17] Chung Hee Lee, Jai Hyun Seu, and Martha W. Evens. Building an ontology for CIRCSIM-Tutor. In Proceedings of the 13th Midwest AI and Cognitive Science Conference, MAICS-2002, pages 161–168, Chicago, 2002.
[18] E. Melis, E. Andres, A. Franke, G. Goguadze, M. Kohlhase, P. Libbrecht, M. Pollet, and C. Ullrich. A generic and adaptive web-based learning environment. In Artificial Intelligence and Education, pages 385–407, 2001.
[19] Johanna Moore. What makes human explanations effective? In Proceedings of the Fifteenth Annual Conference of the Cognitive Science Society, pages 131–136. Hillsdale, NJ. Erlbaum, 2000.
[20] Natalie K. Person, Arthur C. Graesser, Derek Harter, Eric Mathews, and the Tutoring Research Group. Dialog move generation and conversation management in AutoTutor. In Rosé and Freedman [24], pages 45–51.
[21] Manfred Pinkal, Jörg Siekmann, and Christoph Benzmüller. Projektantrag Teilprojekt MI3 - DIALOG: Tutorieller Dialog mit einem mathematischen Assistenzsystem. In Fortsetzungsantrag SFB 378 - Ressourcenadaptive kognitive Prozesse, Universität des Saarlandes, Saarbrücken, Germany, 2001.
[22] Jeff Rickel, Rajaram Ganeshan, Charles Rich, Candace L. Sidner, and Neal Lesh. Task-oriented tutorial dialogue: Issues and agents. In Rosé and Freedman [24], pages 52–57.
[23] Carolyn P. Rosé, Johanna D. Moore, Kurt VanLehn, and David Allbritton. A comparative evaluation of socratic versus didactic tutoring. In Johanna Moore and Keith Stenning, editors, Proceedings 23rd Annual Conference of the Cognitive Science Society, University of Edinburgh, Scotland, UK, 2001.
[24] Carolyn Penstein Rosé and Reva Freedman, editors. Building Dialog Systems for Tutorial Applications: Papers from the AAAI Fall Symposium, North Falmouth, MA, 2000. AAAI Press.
[25] Jörg Siekmann, Christoph Benzmüller, Vladimir Brezhnev, Lassaad Cheikhrouhou, Armin Fiedler, Andreas Franke, Helmut Horacek, Michael Kohlhase, Andreas Meier, Erica Melis, Markus Moschner, Immanuel Normann, Martin Pollet, Volker Sorge, Carsten Ullrich, Claus-Peter Wirth, and Jürgen Zimmer. Proof development with ΩMEGA. In Andrei Voronkov, editor, Automated Deduction - CADE-18, number 2392 in LNAI, pages 144–149. Springer Verlag, 2002.
[26] Dimitra Tsovaltzi. Formalising hinting in tutorial dialogues. Master’s thesis, The University of Edinburgh, Scotland, UK, 2001.
[27] Dimitra Tsovaltzi and Armin Fiedler. An approach to facilitating reflection in a mathematics tutoring system. In Proceedings of AIED Workshop on Learner Modelling for Reflection, Sydney, Australia, 2003. Submitted.
[28] Dimitra Tsovaltzi and Armin Fiedler. Enhancement and use of a mathematical ontology in a tutorial dialogue system. In IJCAI Workshop on Knowledge and Reasoning in Practical Dialogue Systems, Acapulco, Mexico, 2003. In press.
[29] Dimitra Tsovaltzi and Colin Matheson. Formalising hinting in tutorial dialogues. In EDILOG: 6th workshop on the semantics and pragmatics of dialogue, pages 185–192, Edinburgh, Scotland, UK, 2002.
[30] Zhou Yujian, Reva Freedman, Michael Glass, Joel A. Michael, Allen A. Rovick, and Martha W. Evens. What should the tutor do when the student cannot answer a question? In AAAI Press, editor, Proceedings of the Twelfth International Florida AI Research Society Conference (FLAIRS-99), pages 187–191, Orlando, FL, 1999.