InfoSphere DataStage v8.0

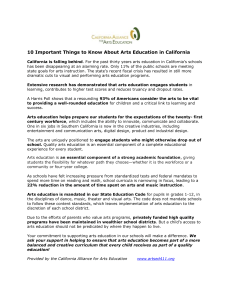
