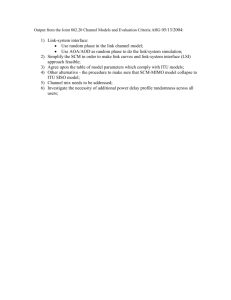

Analysis of Alternatives (AoA) Handbook

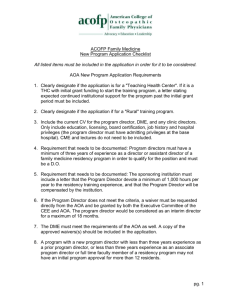
